Please refer to our new implementation of SC-Depth (V1, V2, and V3) at https://github.com/JiawangBian/sc_depth_pl

This codebase implements SC-DepthV1, described in the paper:

Unsupervised Scale-consistent Depth Learning from Video

Jia-Wang Bian, Huangying Zhan, Naiyan Wang, Zhichao Li, Le Zhang, Chunhua Shen, Ming-Ming Cheng, Ian Reid

IJCV 2021 [PDF]

This is an extended version of the NeurIPS 2019 paper [PDF] [Project webpage]

- A geometry consistency loss, which makes the predicted depths globally scale-consistent.

- A self-discovered mask, which detects moving objects and occlusions for boosting accuracy.

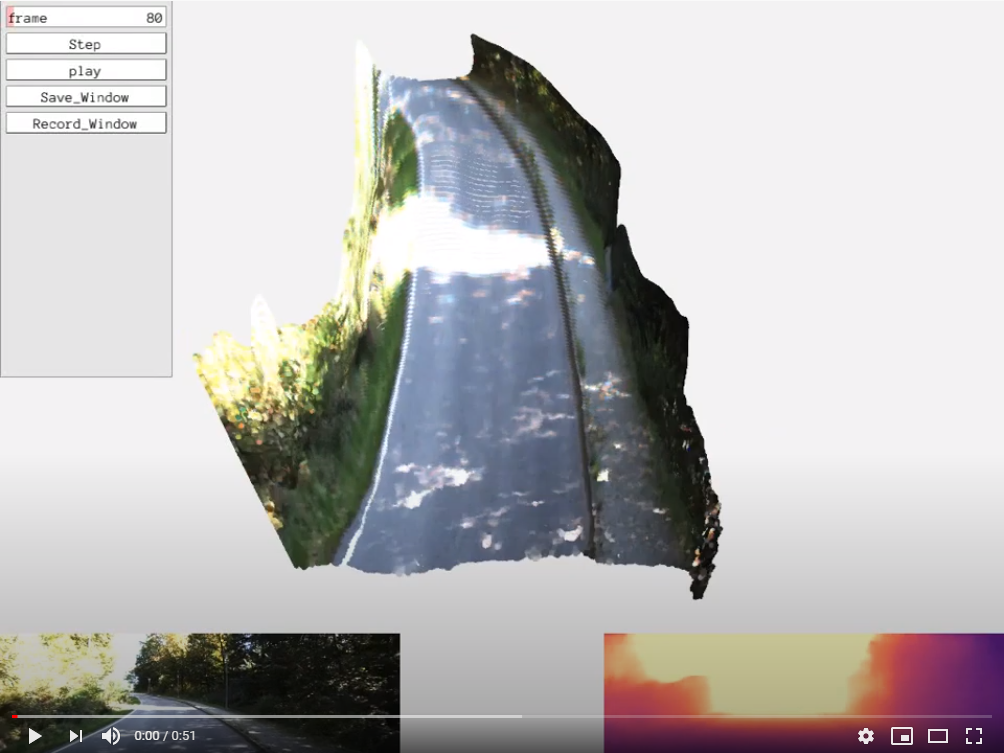

- Scale-consistent predictions, which can be used in a monocular visual SLAM system.
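The first two items above can be illustrated with a small sketch. Following the formulation in the paper, the depth inconsistency at a pixel is the normalized absolute difference between the depth warped from one view and the depth predicted for the other view; the loss averages this value, and the mask is one minus it. The function names, the flat-list representation, and the sample values below are illustrative assumptions, not the repository's API (the actual code operates on PyTorch tensors and also handles warping and out-of-view pixels).

```python
def depth_inconsistency(computed_depth, projected_depth):
    """Normalized per-pixel depth difference; lies in [0, 1) for positive depths."""
    return [
        abs(c - p) / (c + p)
        for c, p in zip(computed_depth, projected_depth)
    ]


def geometry_consistency_loss(computed_depth, projected_depth):
    """Mean inconsistency over all pixels (all assumed valid in this sketch)."""
    diff = depth_inconsistency(computed_depth, projected_depth)
    return sum(diff) / len(diff)


def self_discovered_mask(computed_depth, projected_depth):
    """Per-pixel weight: 1.0 where depths agree, lower where they disagree."""
    diff = depth_inconsistency(computed_depth, projected_depth)
    return [1.0 - d for d in diff]


# Hypothetical flattened depth maps, already aligned pixel by pixel.
computed = [2.0, 4.0, 3.0]
projected = [2.0, 12.0, 1.0]

loss = geometry_consistency_loss(computed, projected)
mask = self_discovered_mask(computed, projected)
print(loss, mask)
```

Pixels on moving objects or in occluded regions tend to produce inconsistent depths across views, so they receive a low weight in the mask and contribute less to the photometric loss.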

@article{bian2021ijcv,

title={Unsupervised Scale-consistent Depth Learning from Video},

author={Bian, Jia-Wang and Zhan, Huangying and Wang, Naiyan and Li, Zhichao and Zhang, Le and Shen, Chunhua and Cheng, Ming-Ming and Reid, Ian},

journal= {International Journal of Computer Vision (IJCV)},

year={2021}

}

Note that this is an improved version; the NeurIPS version is available under 'Release / NeurIPS Version' for reproducing the results reported in the paper. Compared with the NeurIPS version, we (1) changed the networks to use ResNet18 and ResNet50 models pretrained on ImageNet for the depth and pose encoders; (2) added the 'auto_mask' from Monodepth2 to remove stationary points; (3) integrated the depth and pose predictions into the ORB-SLAM system; and (4) added training and testing on the NYUv2 indoor dataset. See Unsupervised-Indoor-Depth for details.
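As a rough illustration of item (2), Monodepth2-style auto-masking keeps a pixel only when warping the reference frame into the target view gives a lower photometric error than comparing the unwarped reference frame directly. Pixels that fail this check are typically stationary relative to the camera. The sketch below assumes per-pixel errors have already been computed; the function names and sample values are hypothetical and do not mirror the repository's code.

```python
def auto_mask(warped_error, identity_error):
    """Return 1.0 for pixels where warping reduces the photometric error, else 0.0."""
    return [
        1.0 if w < i else 0.0
        for w, i in zip(warped_error, identity_error)
    ]


def masked_mean(values, mask):
    """Average the values over the pixels kept by the mask (0.0 if none are kept)."""
    kept = sum(mask)
    if kept == 0:
        return 0.0
    return sum(v * m for v, m in zip(values, mask)) / kept


# Hypothetical per-pixel photometric errors.
warped_error = [0.10, 0.30, 0.05]    # target vs. reference warped into the target view
identity_error = [0.40, 0.30, 0.02]  # target vs. unwarped reference

mask = auto_mask(warped_error, identity_error)
photometric_loss = masked_mean(warped_error, mask)
print(mask, photometric_loss)
```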

This codebase was developed and tested with Python 3.6, PyTorch 1.0.1, and CUDA 10.0 on Ubuntu 16.04. It is based on Clement Pinard's SfMLearner implementation.

pip3 install -r requirements.txt

See "scripts/run_prepare_data.sh".

For the KITTI Raw dataset, download the data using the script (http://www.cvlibs.net/download.php?file=raw_data_downloader.zip) provided on the official website.

For the KITTI Odometry dataset, download the version with color images.

Alternatively, you can download our pre-processed datasets from the following links:

kitti_256 (for kitti raw) | kitti_vo_256 (for kitti odom) | kitti_depth_test (eigen split) | kitti_vo_test (seqs 09-10)

The "scripts" folder provides several examples for training and testing.

You can train the depth model on KITTI Raw by running

sh scripts/train_resnet18_depth_256.sh

or train the pose model on KITTI Odometry by running

sh scripts/train_resnet50_pose_256.sh

Then you can start a TensorBoard session in this folder by running

tensorboard --logdir=checkpoints/

and visualize the training progress by opening http://localhost:6006 in your browser.

You can evaluate depth on Eigen's split by running

sh scripts/test_kitti_depth.sh

evaluate visual odometry by running

sh scripts/test_kitti_vo.sh

and visualize depth by running

sh scripts/run_inference.sh

To evaluate the NeurIPS models, please download the code from 'Release/NeurIPS version'.

| Models | Abs Rel | Sq Rel | RMSE | RMSE(log) | Acc.1 | Acc.2 | Acc.3 |
|---|---|---|---|---|---|---|---|
| resnet18 | 0.119 | 0.857 | 4.950 | 0.197 | 0.863 | 0.957 | 0.981 |
| resnet50 | 0.114 | 0.813 | 4.706 | 0.191 | 0.873 | 0.960 | 0.982 |
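For readers unfamiliar with the column headers, the sketch below computes these quantities using the definitions commonly used in monocular depth evaluation (Acc.1 to Acc.3 being the fraction of pixels whose ratio to ground truth is below 1.25, 1.25^2, and 1.25^3). It is an assumption that the repository's evaluation script follows exactly these definitions; the function name and sample values are hypothetical, and any masking, depth capping, or scale alignment applied before evaluation is omitted here.

```python
import math


def depth_metrics(gt, pred):
    """Standard depth-error metrics over paired, strictly positive depth values."""
    n = len(gt)
    pairs = list(zip(gt, pred))
    abs_rel = sum(abs(g - p) / g for g, p in pairs) / n
    sq_rel = sum((g - p) ** 2 / g for g, p in pairs) / n
    rmse = math.sqrt(sum((g - p) ** 2 for g, p in pairs) / n)
    rmse_log = math.sqrt(
        sum((math.log(g) - math.log(p)) ** 2 for g, p in pairs) / n
    )
    # Threshold accuracy: fraction of pixels with max(gt/pred, pred/gt) < 1.25^k.
    ratios = [max(g / p, p / g) for g, p in pairs]
    acc = [sum(r < 1.25 ** k for r in ratios) / n for k in (1, 2, 3)]
    return {
        "abs_rel": abs_rel,
        "sq_rel": sq_rel,
        "rmse": rmse,
        "rmse_log": rmse_log,
        "acc1": acc[0],
        "acc2": acc[1],
        "acc3": acc[2],
    }


# Hypothetical ground-truth and predicted depths in meters.
metrics = depth_metrics([2.0, 4.0], [2.0, 5.0])
print(metrics)
```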

| Models | Abs Rel | Log10 | RMSE | Acc.1 | Acc.2 | Acc.3 |
|---|---|---|---|---|---|---|
| resnet18 | 0.159 | 0.068 | 0.608 | 0.772 | 0.939 | 0.982 |
| resnet50 | 0.157 | 0.067 | 0.593 | 0.780 | 0.940 | 0.984 |

NYUv2 dataset (Rectified Images by Unsupervised-Indoor-Depth)

| Models | Abs Rel | Log10 | RMSE | Acc.1 | Acc.2 | Acc.3 |
|---|---|---|---|---|---|---|
| resnet18 | 0.143 | 0.060 | 0.538 | 0.812 | 0.951 | 0.986 |
| resnet50 | 0.142 | 0.060 | 0.529 | 0.813 | 0.952 | 0.987 |

| Metric | Seq. 09 | Seq. 10 |
|---|---|---|
| t_err (%) | 7.31 | 7.79 |
| r_err (degree/100m) | 3.05 | 4.90 |

| Metric | Seq. 09 | Seq. 10 |
|---|---|---|
| t_err (%) | 5.08 | 4.32 |
| r_err (degree/100m) | 1.05 | 2.34 |

- SfMLearner-Pytorch (CVPR 2017, our baseline framework)

- Depth-VO-Feat (CVPR 2018, trained on stereo videos for depth and visual odometry)

- DF-VO (ICRA 2020, uses scale-consistent depth with optical flow for more accurate visual odometry)

- Kitti-Odom-Eval-Python (Python code for KITTI odometry evaluation)

- Unsupervised-Indoor-Depth (uses SC-SfMLearner on the NYUv2 dataset)