Hierarchical perception library in Python.

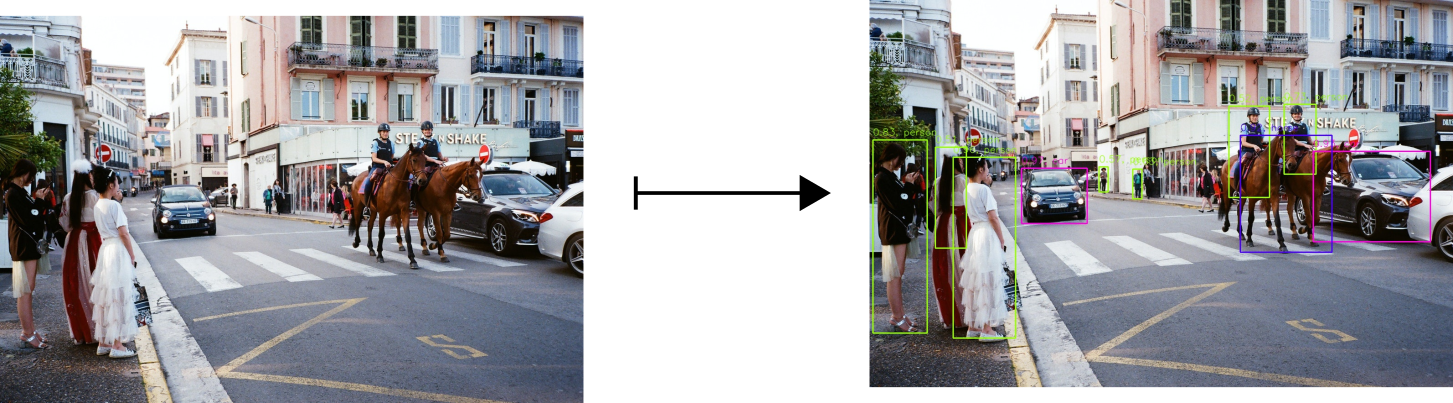

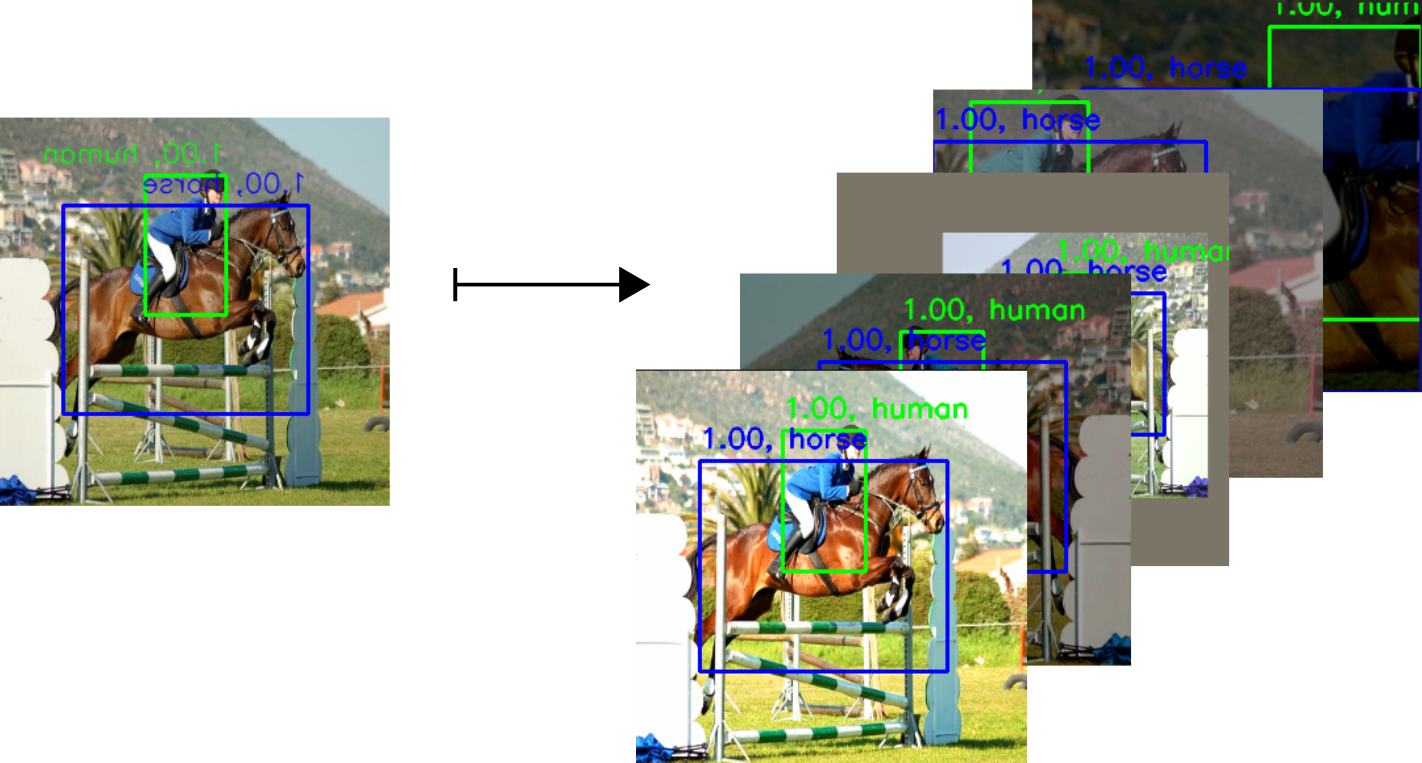

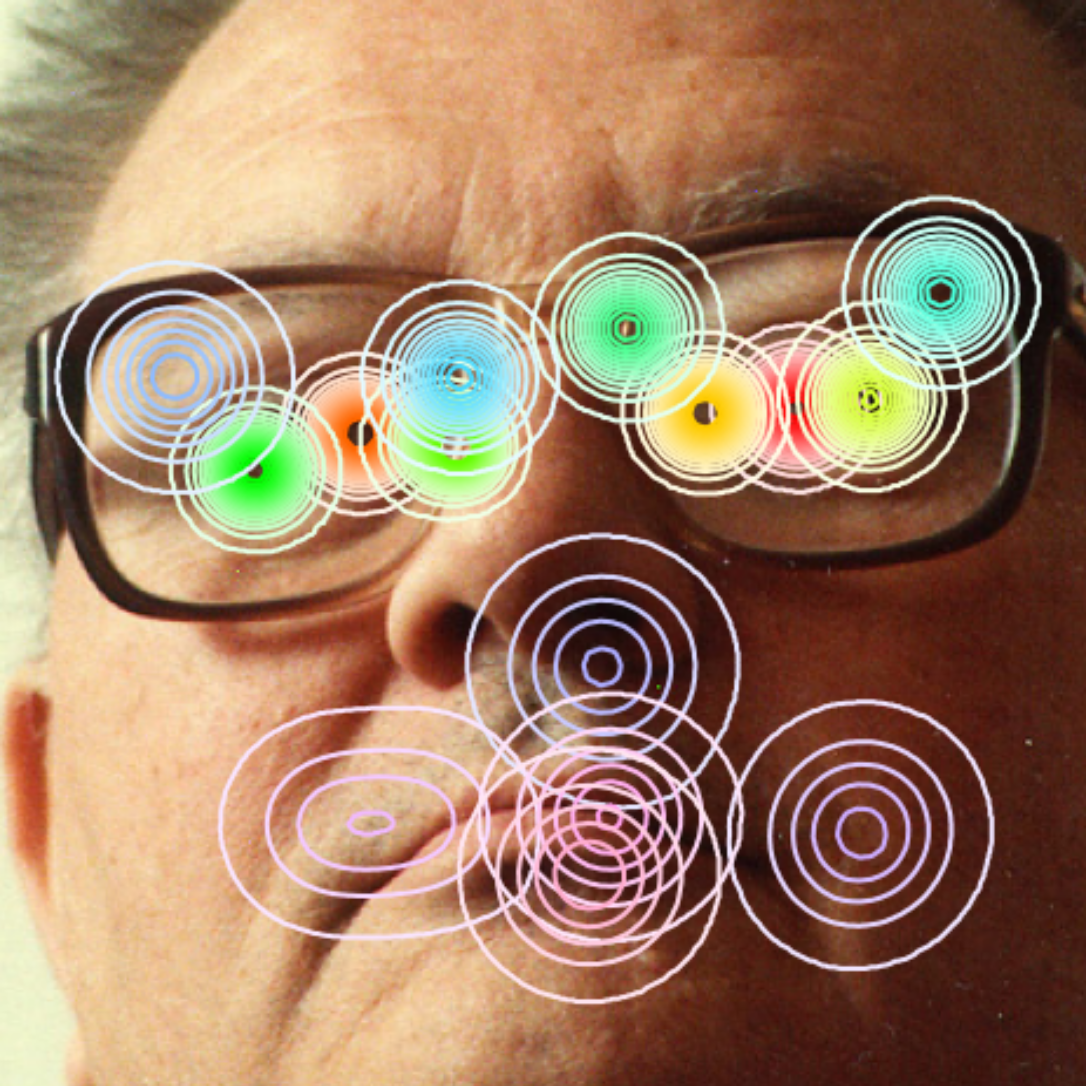

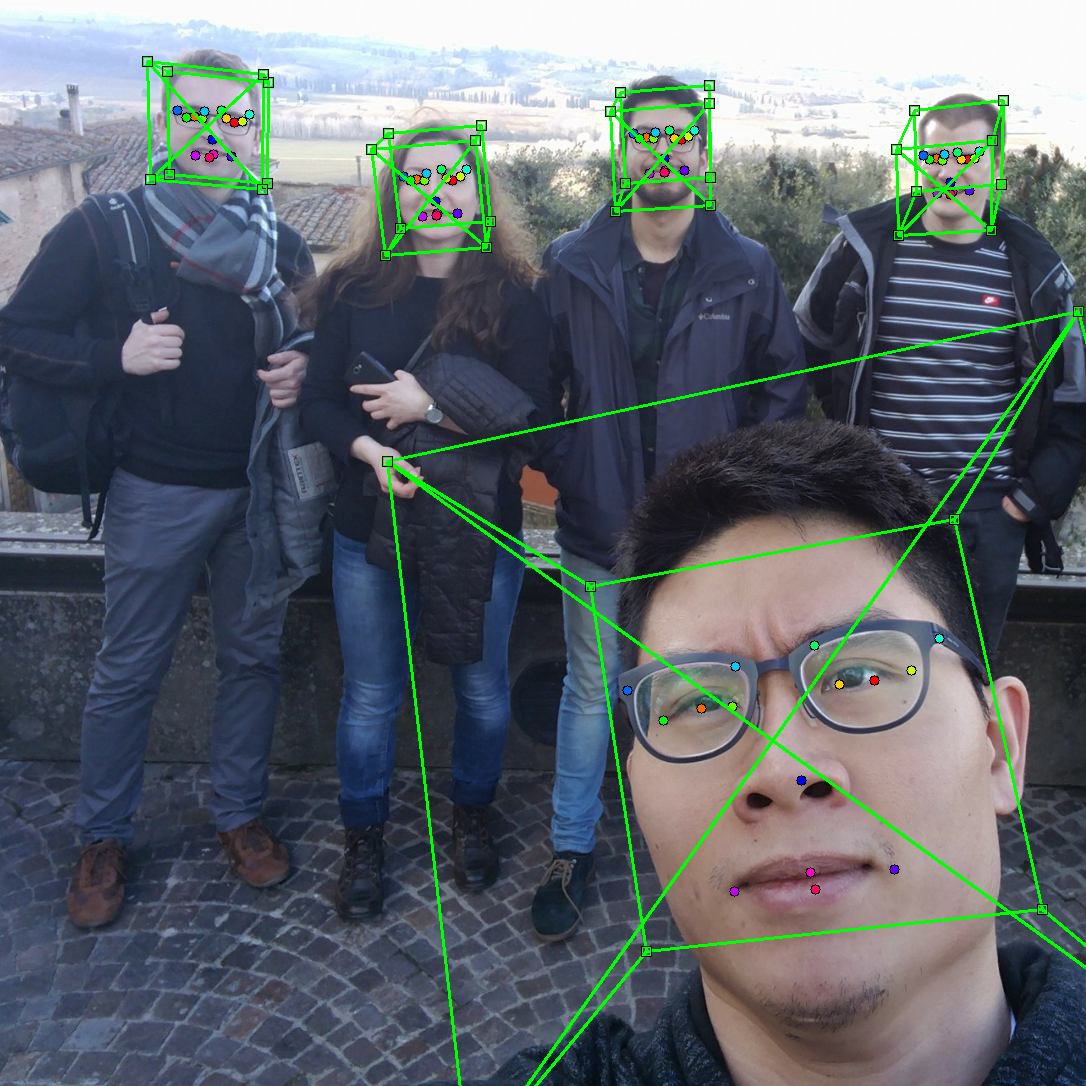

PAZ is used in the following examples (links to real-time demos and training scripts):

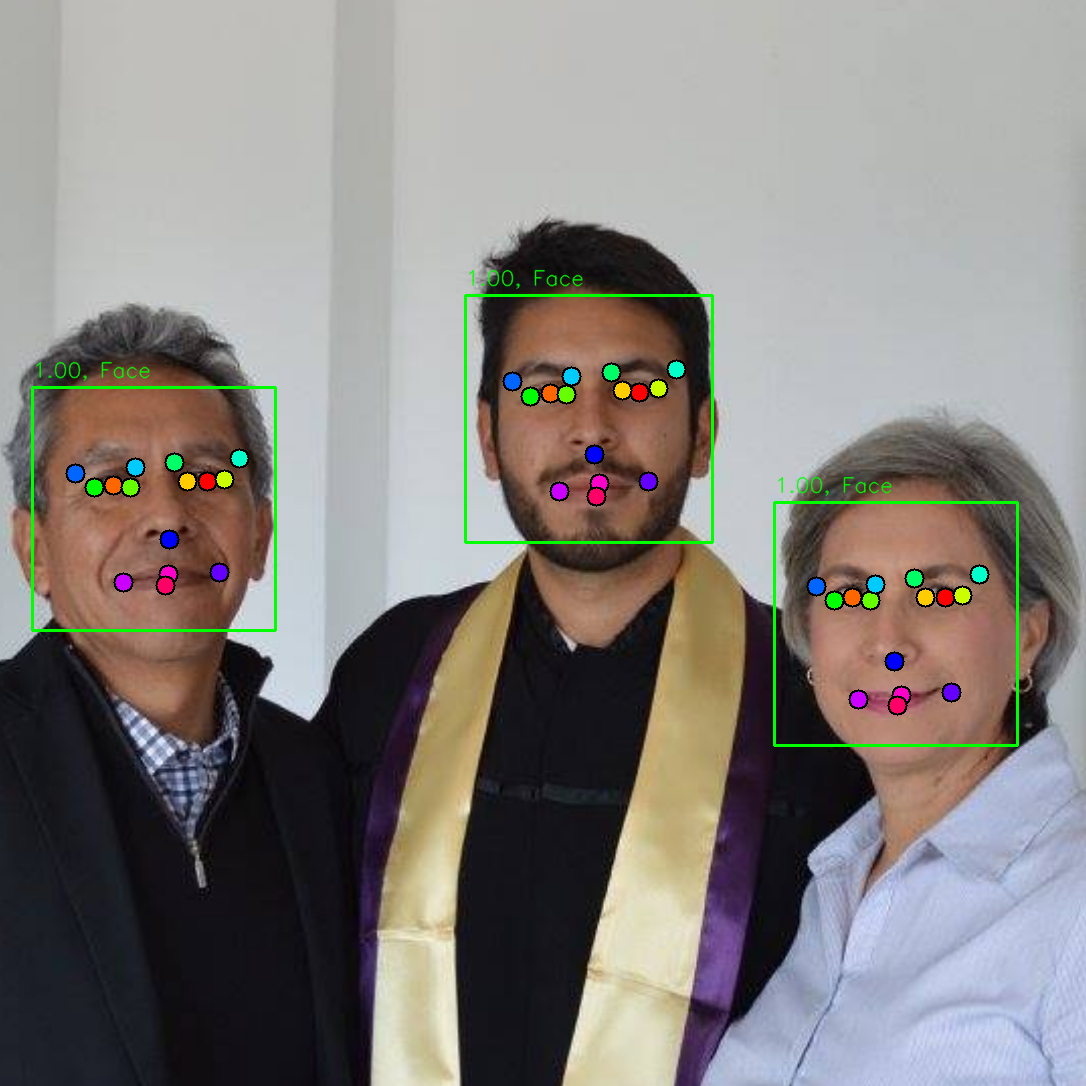

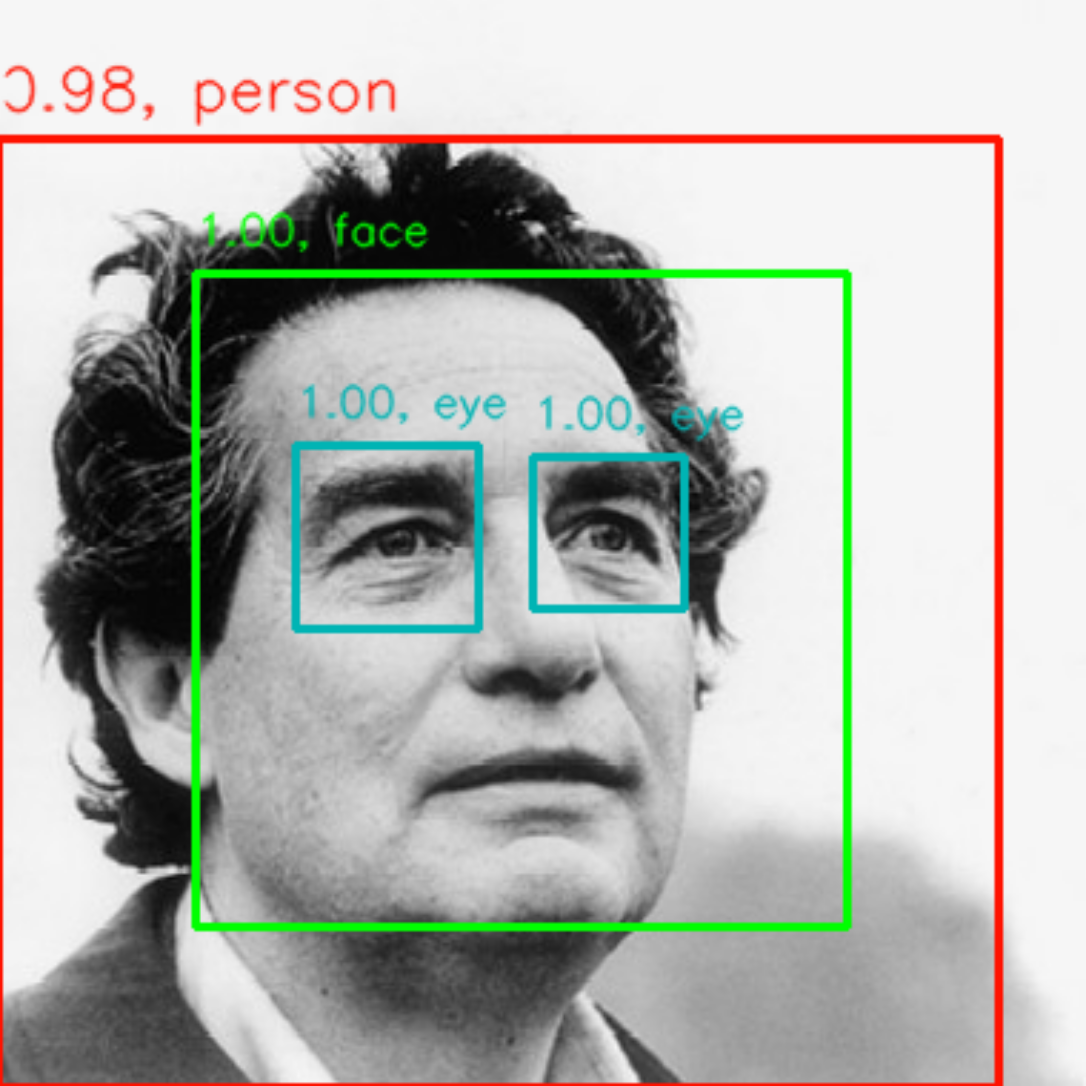

| Probabilistic 2D keypoints | 6D head-pose estimation | Object detection |
|---|---|---|

| Emotion classifier | 2D keypoint estimation | Mask-RCNN (in-progress) |
|---|---|---|

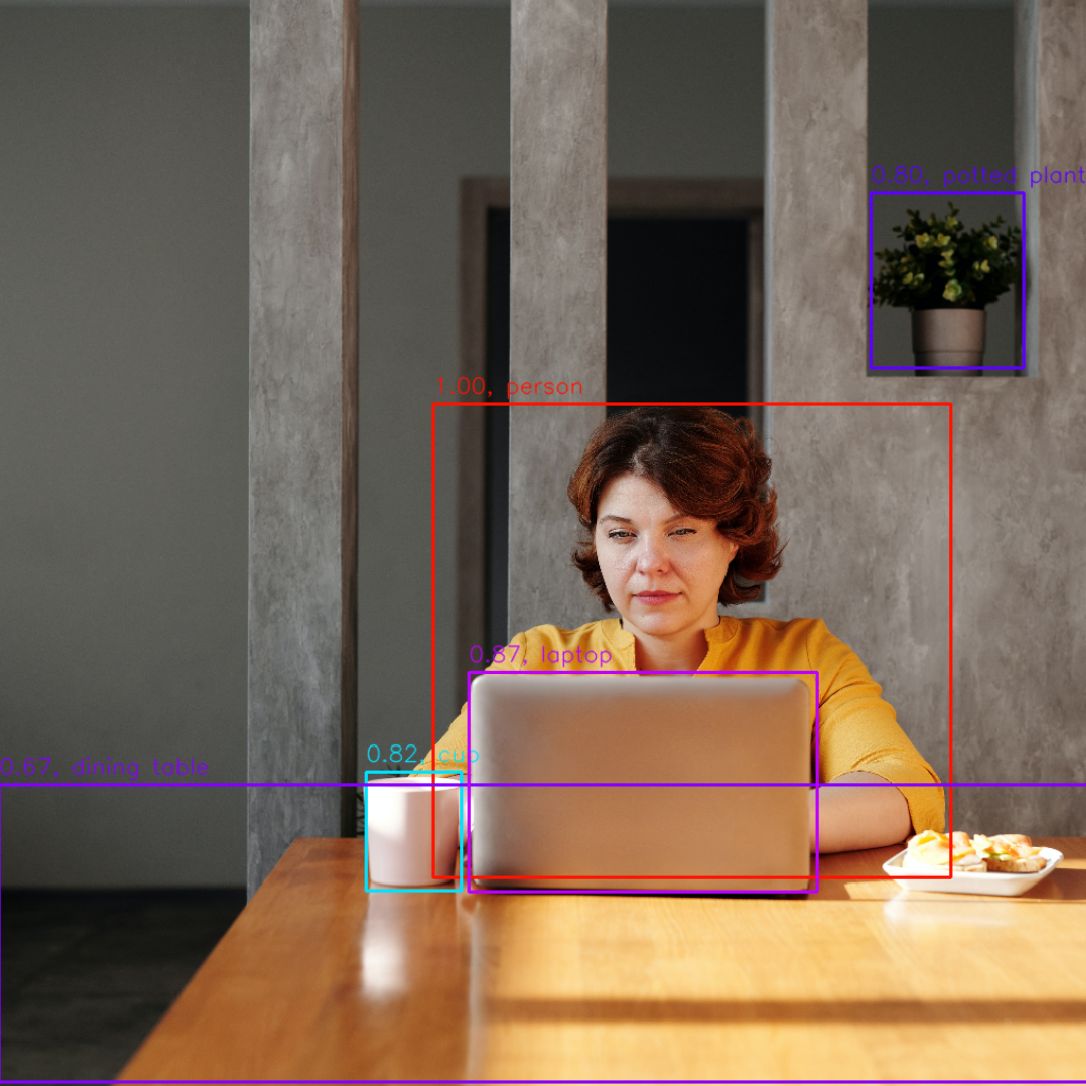

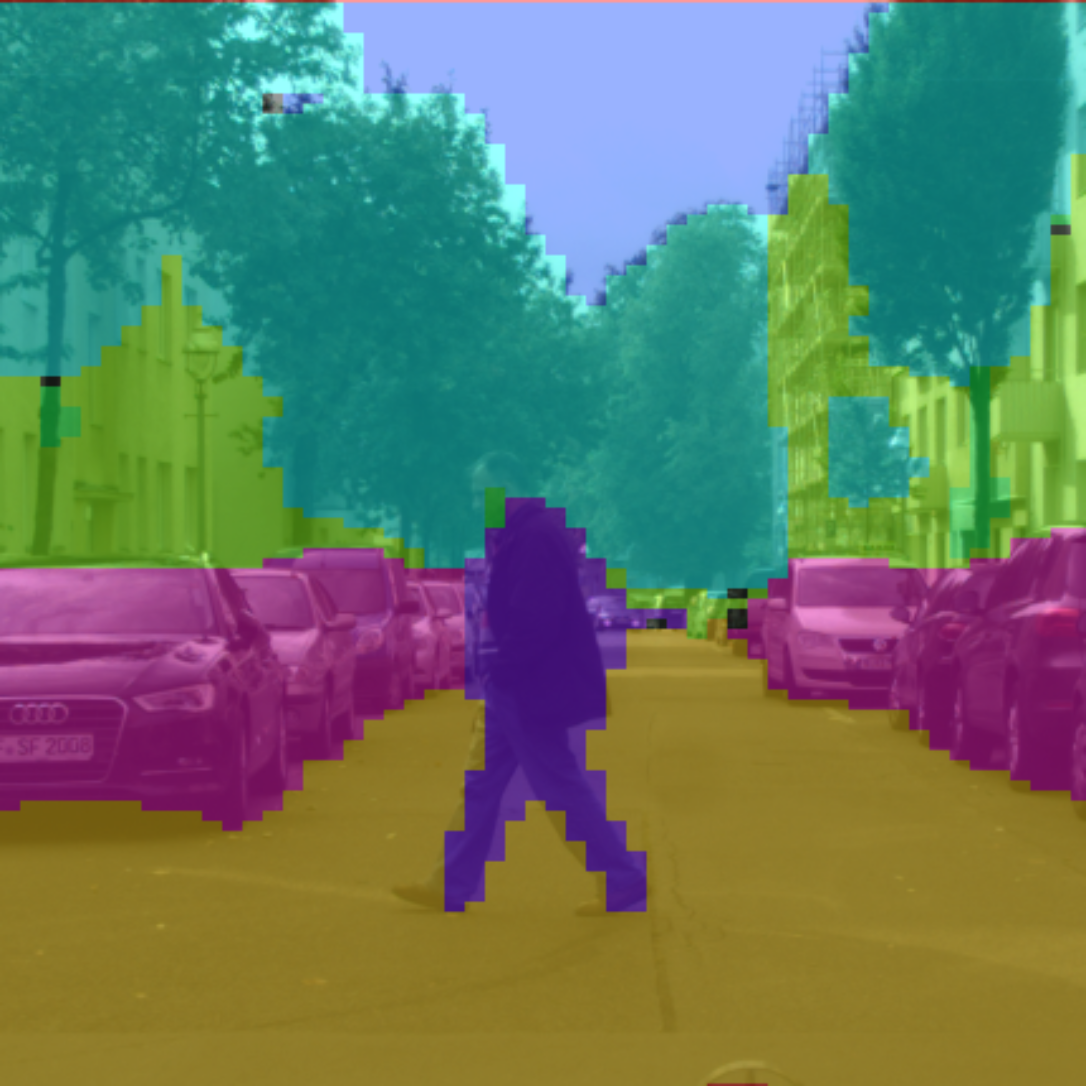

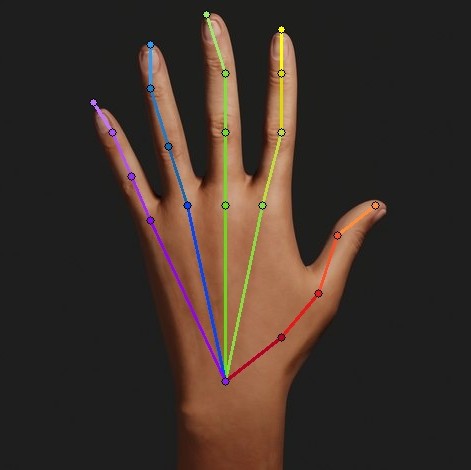

| Semantic segmentation | Hand pose estimation | 2D Human pose estimation |
|---|---|---|

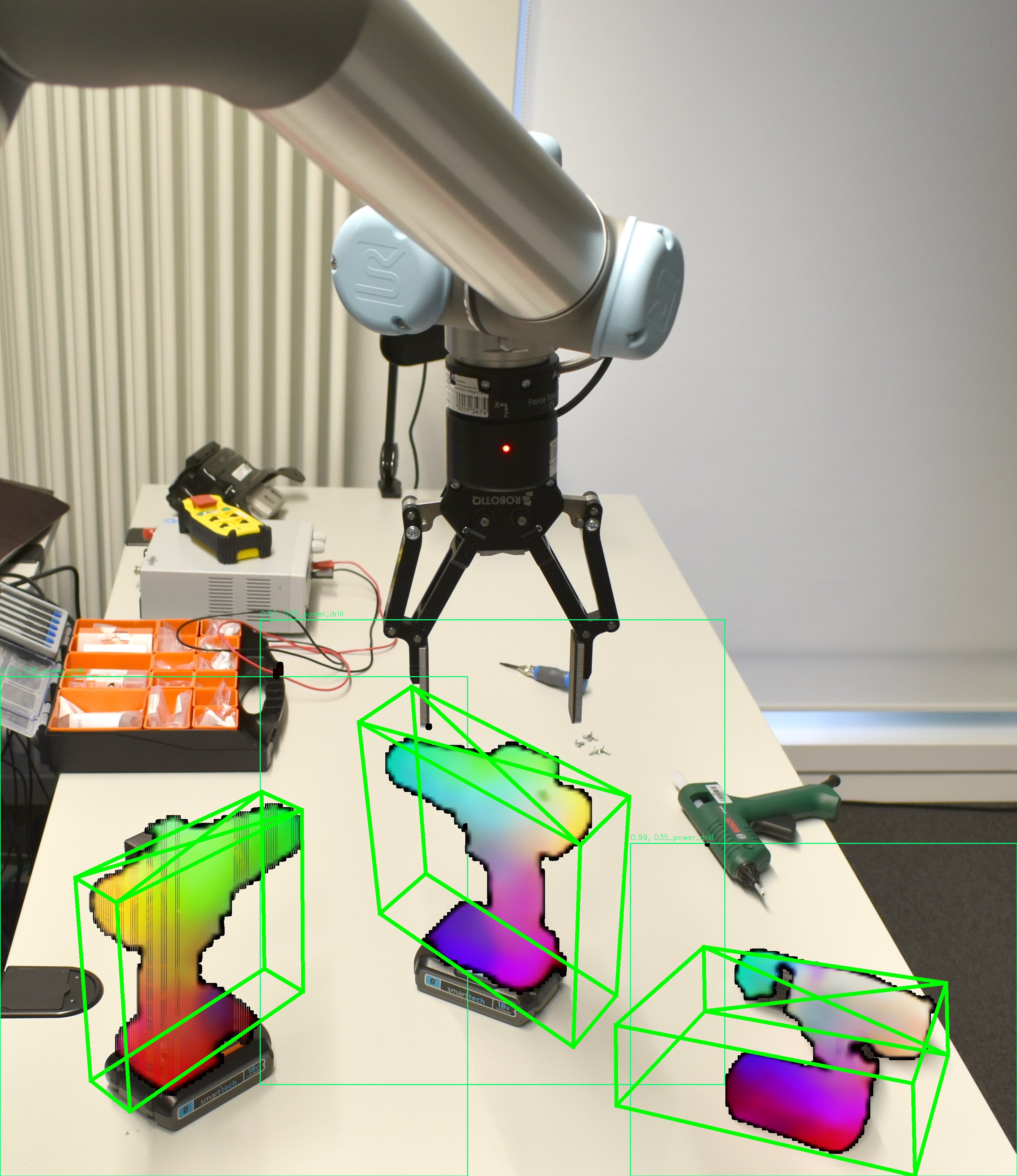

| 3D keypoint discovery | Hand closure detection | 6D pose estimation |
|---|---|---|

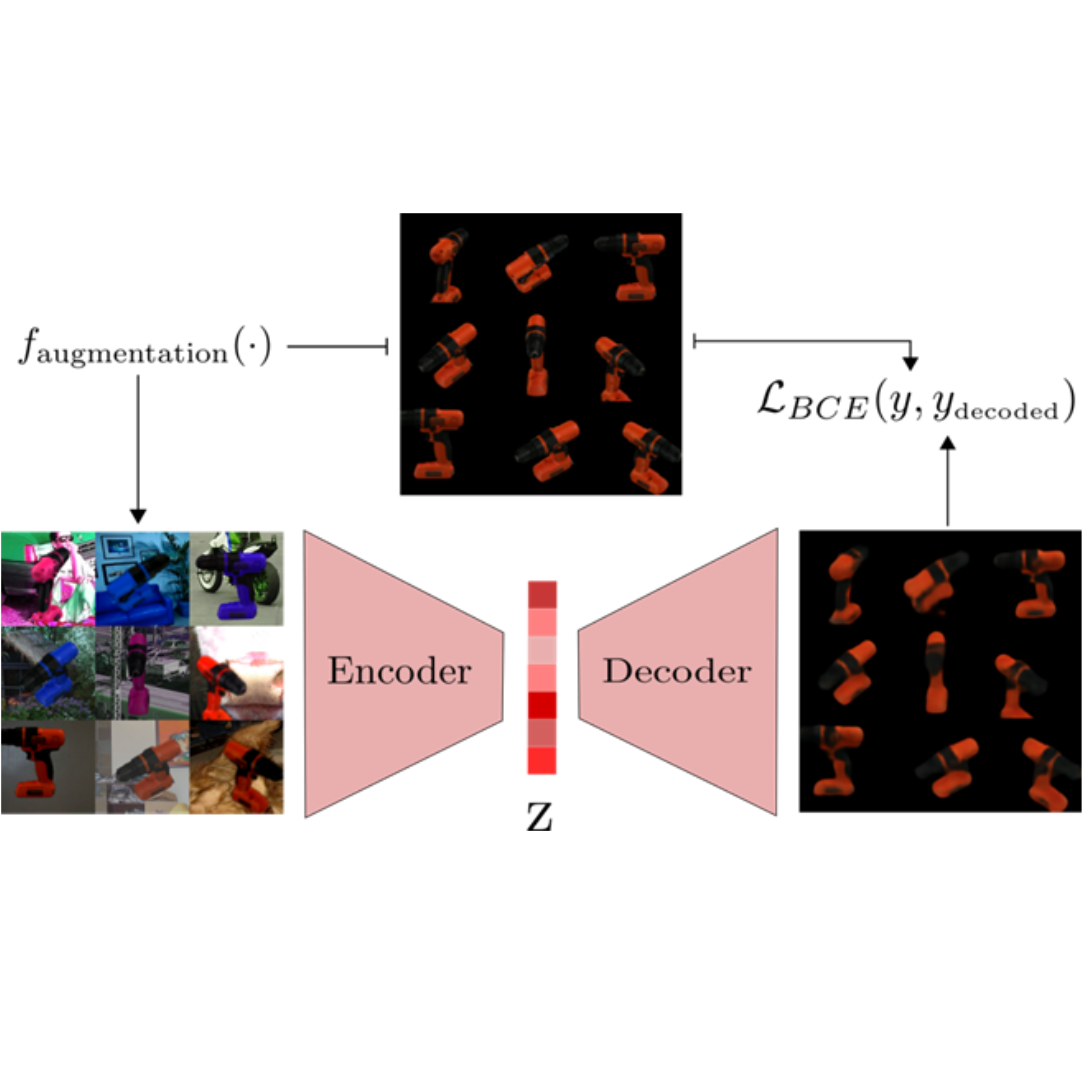

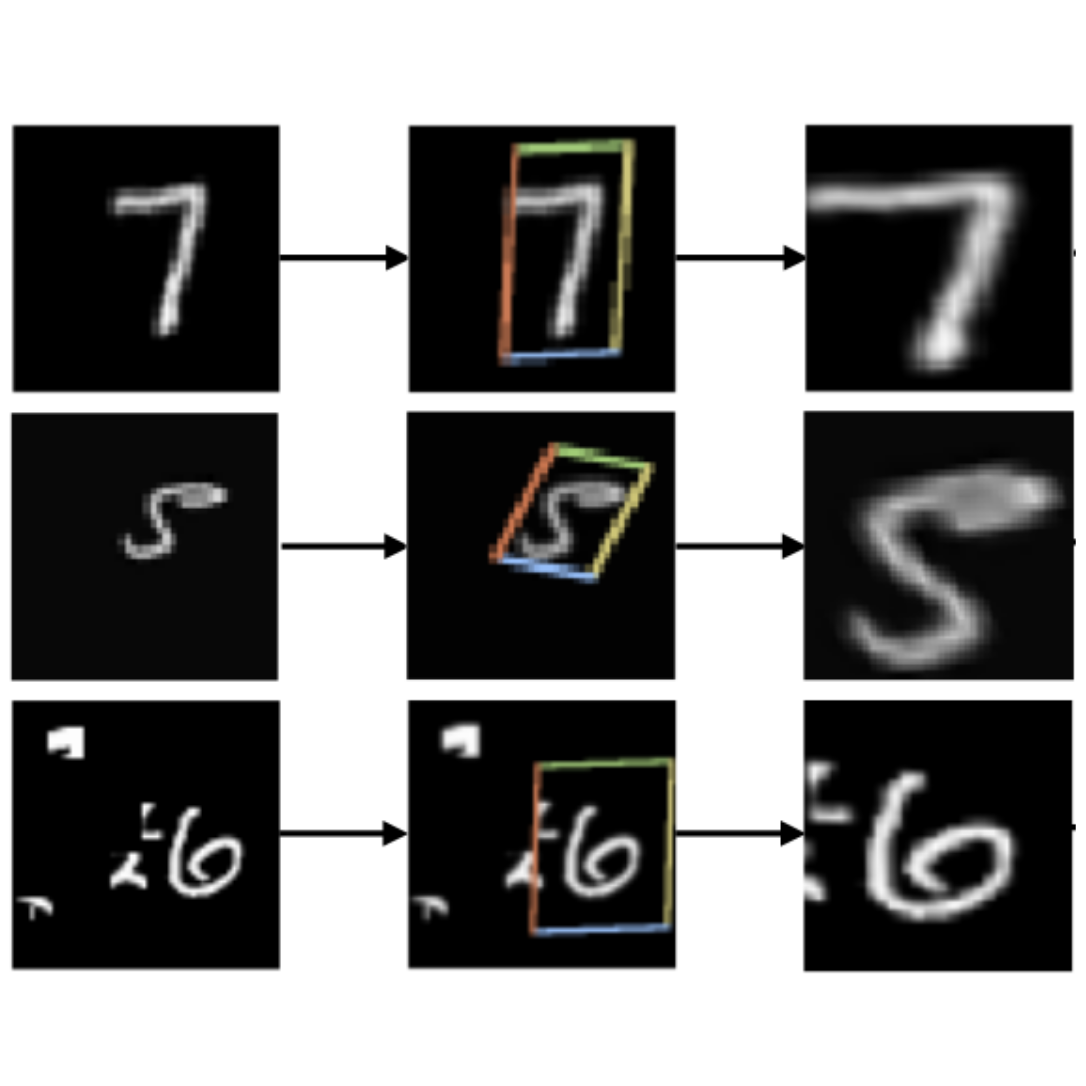

| Implicit orientation | Attention (STNs) | Haar Cascade detector |
|---|---|---|

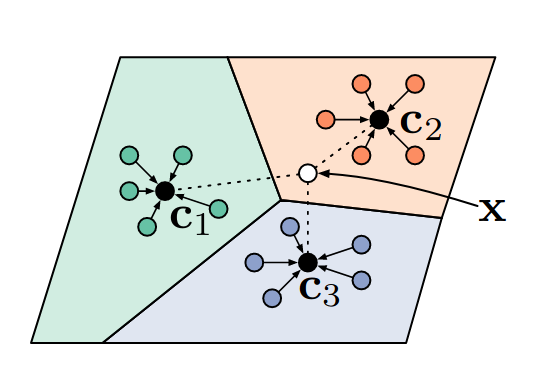

| Eigenfaces | Prototypical Networks | 3D Human pose estimation |
|---|---|---|

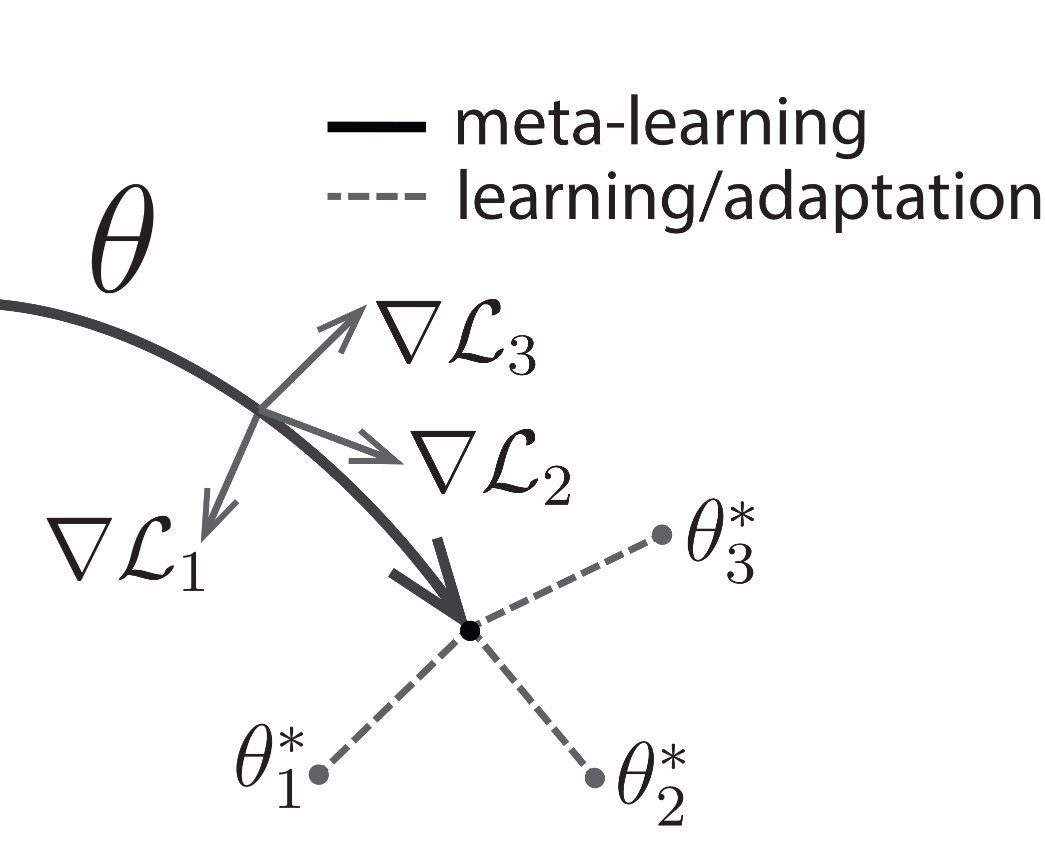

| MAML | | |
|---|---|---|

All models can be re-trained with your own data (except for Mask-RCNN, which we are still working on).

- Examples

- Installation

- Documentation

- Hierarchical APIs

- Additional functionality

- Motivation

- Citation

- Funding

PAZ has only three dependencies: TensorFlow 2.0, OpenCV and NumPy.

To install PAZ from PyPI, run:

pip install pypaz --user

Full documentation can be found at https://oarriaga.github.io/paz/.

PAZ can be used at three different API levels (high, mid and low), so you can choose the one that best fits your specific application.

The high-level API offers easy out-of-the-box prediction. For example, to detect objects we can call the following pipeline:

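from paz.applications import SSD512COCO
from paz.backend.image import load_image

detect = SSD512COCO()

# load an image as a numpy array ('my_image.png' is a placeholder path)
image = load_image('my_image.png')

# apply the pipeline directly to the image (numpy array)
inferences = detect(image)

There are multiple high-level functions, a.k.a. pipelines, already implemented in PAZ. These functions are built using our mid-level API, described below.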

While the high-level API is useful for quick applications, it might not be flexible enough for your specific purpose. Therefore, in PAZ we can build high-level functions using our mid-level API.

If your function is sequential, you can construct it with SequentialProcessor. In the example below we create a data-augmentation pipeline:

from paz.abstract import SequentialProcessor
from paz import processors as pr
from paz.backend.image import load_image

augment = SequentialProcessor()
augment.add(pr.RandomContrast())
augment.add(pr.RandomBrightness())
augment.add(pr.RandomSaturation())
augment.add(pr.RandomHue())

# you can now use this as a normal function
image = load_image('my_image.png')
image = augment(image)

You can also add any function, not only those found in processors. For example, we can pass a NumPy function to our original data-augmentation pipeline:

import numpy as np

augment.add(np.mean)

There are multiple functions, a.k.a. processors, already implemented in PAZ.
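Conceptually, a sequential pipeline stores callables and applies them in the order they were added, feeding each output into the next step. The minimal sketch below illustrates that idea in plain Python; the class `MiniSequential` is hypothetical and is not PAZ's `SequentialProcessor` implementation, and a toy list of pixel values stands in for an image.

```python
from typing import Any, Callable, List


class MiniSequential:
    """Hypothetical stand-in for a sequential pipeline (not PAZ's class).

    Stores callables and applies them in the order they were added.
    """

    def __init__(self) -> None:
        self.functions: List[Callable[[Any], Any]] = []

    def add(self, function: Callable[[Any], Any]) -> None:
        # any callable works: processor-like objects, lambdas, library functions
        self.functions.append(function)

    def __call__(self, x: Any) -> Any:
        # the output of each step becomes the input of the next one
        for function in self.functions:
            x = function(x)
        return x


# usage: a toy "image" represented as a flat list of pixel values
pipeline = MiniSequential()
pipeline.add(lambda pixels: [p * 2 for p in pixels])        # brighten
pipeline.add(lambda pixels: [min(p, 255) for p in pixels])  # clip to 8-bit range
pipeline.add(lambda pixels: sum(pixels) / len(pixels))      # mean, like np.mean
print(pipeline([10, 100, 200]))
```

Because `add` accepts any callable, mixing library functions with custom steps needs no special wrapping, which is the same property the `np.mean` example relies on.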

Using these processors we can build more complex pipelines, e.g. data augmentation for object detection: pr.AugmentDetection.
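Detection augmentation differs from plain image augmentation because geometric transforms must be applied to the box annotations as well as to the pixels. As a minimal sketch (not the implementation of pr.AugmentDetection), the hypothetical helpers below perform a random horizontal flip, assuming an image stored as a list of pixel rows and boxes given as (x_min, y_min, x_max, y_max) in pixel coordinates.

```python
import random
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def flip_left_right(image: List[list], boxes: List[Box]) -> Tuple[List[list], List[Box]]:
    """Mirror an image horizontally and update its boxes to match."""
    width = len(image[0])
    flipped_image = [row[::-1] for row in image]
    # a coordinate x maps to width - x, so x_min and x_max swap roles
    flipped_boxes = [(width - x_max, y_min, width - x_min, y_max)
                     for x_min, y_min, x_max, y_max in boxes]
    return flipped_image, flipped_boxes


def random_flip_left_right(image, boxes, probability=0.5, rng=random):
    """Apply flip_left_right with the given probability."""
    if rng.random() < probability:
        return flip_left_right(image, boxes)
    return image, boxes


# usage: a 2x4 toy image with one box covering the first column
image = [[1, 2, 3, 4], [5, 6, 7, 8]]
boxes = [(0, 0, 1, 2)]
print(flip_left_right(image, boxes))
```

Flipping the pixels and the boxes inside the same function is what keeps the annotations aligned with the image content.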

Non-sequential pipelines can also be built by subclassing Processor. In the example below we build an emotion classifier from scratch using our high-level and mid-level functions.

from paz.applications import HaarCascadeFrontalFace, MiniXceptionFER
from paz.backend.image import load_image
import paz.processors as pr


class EmotionDetector(pr.Processor):
    def __init__(self):
        super(EmotionDetector, self).__init__()
        # face detector used without drawing on the image (draw=False)
        self.detect = HaarCascadeFrontalFace(draw=False)
        self.crop = pr.CropBoxes2D()
        self.classify = MiniXceptionFER()
        self.draw = pr.DrawBoxes2D(self.classify.class_names)

    def call(self, image):
        # detect faces, crop them and classify the emotion of each crop
        boxes2D = self.detect(image)['boxes2D']
        cropped_images = self.crop(image, boxes2D)
        for cropped_image, box2D in zip(cropped_images, boxes2D):
            box2D.class_name = self.classify(cropped_image)['class_name']
        # draw the labelled boxes on the original image
        return self.draw(image, boxes2D)


detect = EmotionDetector()
# you can now apply it to an image (numpy array)
image = load_image('my_image.png')
predictions = detect(image)

Processors allow us to easily compose, compress and abstract away parameters of functions. However, most processors are built using our low-level API (backend).
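The EmotionDetector example relies on a base class whose instances can be called like functions, with the actual work done in `call`. The sketch below reproduces that pattern under the assumption that `__call__` simply forwards to `call`; `MiniProcessor`, `Scale` and `ScaleAndSummarize` are hypothetical names, not PAZ classes. Because `call` is ordinary Python, a non-sequential processor can branch, loop and merge results freely.

```python
class MiniProcessor:
    """Hypothetical base class: calling an instance dispatches to call()."""

    def __call__(self, *args, **kwargs):
        return self.call(*args, **kwargs)

    def call(self, *args, **kwargs):
        raise NotImplementedError('subclasses must implement call()')


class Scale(MiniProcessor):
    """Multiplies every value by a fixed factor."""

    def __init__(self, factor):
        self.factor = factor

    def call(self, values):
        return [value * self.factor for value in values]


class ScaleAndSummarize(MiniProcessor):
    """Non-sequential: one input feeds two branches whose results are merged."""

    def __init__(self):
        self.double = Scale(2)
        self.halve = Scale(0.5)

    def call(self, values):
        doubled = self.double(values)
        halved = self.halve(values)
        # return a dictionary, like PAZ pipelines that return e.g. {'boxes2D': ...}
        return {'doubled': doubled,
                'halved': halved,
                'total': sum(doubled) + sum(halved)}


process = ScaleAndSummarize()
result = process([1, 2, 4])
print(result)
```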

Mid-level processors are mostly built from small backend functions found in: boxes, cameras, images, keypoints and quaternions.

These functions can be found in paz.backend:

from paz.backend import boxes, camera, image, keypoints, quaternion

For example, you can use them in your scripts to load or show images:

from paz.backend.image import load_image, show_image

image = load_image('my_image.png')
show_image(image)
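Backend functions are small, stateless helpers that operate on plain numeric data. To illustrate that style (this is not PAZ's code), here is a hypothetical intersection-over-union helper for two boxes in (x_min, y_min, x_max, y_max) format, the kind of computation commonly used when matching detections to ground truth or during non-maximum suppression.

```python
from typing import Sequence


def intersection_over_union(box_a: Sequence[float], box_b: Sequence[float]) -> float:
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    # corners of the overlapping region (may be empty)
    x_min = max(box_a[0], box_b[0])
    y_min = max(box_a[1], box_b[1])
    x_max = min(box_a[2], box_b[2])
    y_max = min(box_a[3], box_b[3])
    # clamp at zero so disjoint boxes have no intersection
    intersection = max(0.0, x_max - x_min) * max(0.0, y_max - y_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


print(intersection_over_union((0, 0, 2, 2), (1, 1, 3, 3)))  # → 1/7 ≈ 0.143
```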

- PAZ has built-in messages, e.g. Pose6D, for easier data exchange with other frameworks such as ROS.

- There are custom callbacks, e.g. mAP evaluation for object detectors during training.

- PAZ comes with data loaders for multiple datasets: OpenImages, VOC, YCB-Video, FAT, FERPlus, FER2013, CityScapes.

- We have automatic batch creation and dispatching wrappers for an easy connection between your pipelines and TensorFlow generators. Please look at the tutorials for more information.
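The batch creation wrappers mentioned in the last item connect per-sample pipelines with batched training. A minimal plain-Python sketch of the idea, assuming a pipeline that maps one sample to a dictionary of outputs; `batch_generator` and `toy_pipeline` are hypothetical and not PAZ's wrapper API.

```python
from typing import Any, Callable, Dict, Iterable, Iterator, List


def batch_generator(samples: Iterable[Any],
                    pipeline: Callable[[Any], Dict[str, Any]],
                    batch_size: int,
                    drop_remainder: bool = False) -> Iterator[Dict[str, List[Any]]]:
    """Run each sample through `pipeline` and yield batches grouped by key."""
    if batch_size < 1:
        raise ValueError('batch_size must be positive')
    batch: Dict[str, List[Any]] = {}
    count = 0
    for sample in samples:
        outputs = pipeline(sample)
        # collect every output under its key, e.g. 'inputs' or 'labels'
        for key, value in outputs.items():
            batch.setdefault(key, []).append(value)
        count += 1
        if count == batch_size:
            yield batch
            batch, count = {}, 0
    # a final, smaller batch is emitted unless drop_remainder is set
    if count > 0 and not drop_remainder:
        yield batch


def toy_pipeline(x):
    # hypothetical per-sample pipeline returning named outputs
    return {'inputs': x * 10, 'labels': x % 2}


batches = list(batch_generator(range(5), toy_pipeline, batch_size=2))
print(batches)
```

A generator of this shape could, for instance, be consumed by `tf.data.Dataset.from_generator`, although the batching wrappers in PAZ may be organized differently.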

The following models are implemented in PAZ and they can be trained with your own data:

Even though there are multiple high-level computer vision libraries in different deep learning frameworks, I felt there was no consolidated deep learning library for robot perception in my framework of choice (Keras).

As a final remark, I would like to mention that we might tend to forget the great effort and emotional investment behind every (open-source) project. It is easy to blur a company name with the individuals behind its work, and to forget that there is someone feeling our criticism and our praise. Therefore, whatever good code you can find here is dedicated to the software engineers and contributors of open-source projects like PyTorch, TensorFlow and Keras. You put your craft out there for all of us to use and appreciate, and we ought first to give you our thankful consideration.

- The name PAZ satisfies its theoretical definition by being an acronym for Perception for Autonomous Systems, where the letter S is replaced by Z to indicate that by "System" we mean almost anything, i.e. Z is a classical algebraic variable used to denote an unknown element.

Continuous integration is managed through GitHub Actions using pytest. You can run the tests locally with:

pytest tests

Test coverage can be checked using coverage.

You can install coverage by calling: pip install coverage --user

You can then check the test coverage by running:

coverage run -m pytest tests/

coverage report -m

If you use PAZ, please consider citing it. You can also find our paper here: https://arxiv.org/abs/2010.14541.

@misc{arriaga2020perception,

title={Perception for Autonomous Systems (PAZ)},

author={Octavio Arriaga and Matias Valdenegro-Toro and Mohandass Muthuraja and Sushma Devaramani and Frank Kirchner},

year={2020},

eprint={2010.14541},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

PAZ is currently developed in the Robotics Group of the University of Bremen, together with the Robotics Innovation Center of the German Research Center for Artificial Intelligence (DFKI) in Bremen. PAZ has been funded by the German Federal Ministry for Economic Affairs and Energy and the German Aerospace Center (DLR). PAZ has been used and/or developed in the projects TransFIT and KiMMI-SF.