Andrew Hundt, Benjamin Killeen, Heeyeon Kwon, Chris Paxton, and Gregory D. Hager

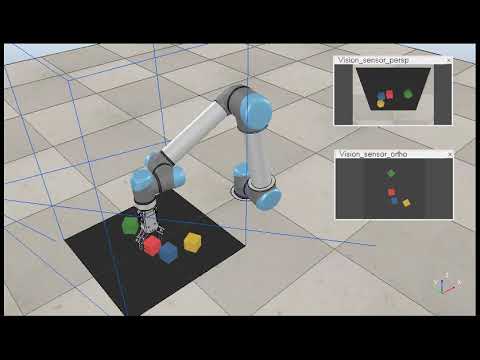

Click the image to watch the video:

@misc{hundt2019good,

title={"Good Robot!": Efficient Reinforcement Learning for Multi-Step Visual Tasks via Reward Shaping},

author={Andrew Hundt and Benjamin Killeen and Heeyeon Kwon and Chris Paxton and Gregory D. Hager},

year={2019},

eprint={1909.11730},

archivePrefix={arXiv},

primaryClass={cs.RO},

url={https://arxiv.org/abs/1909.11730}

}

Abstract: In order to learn effectively, robots must be able to extract the intangible context by which task progress and mistakes are defined. In the domain of reinforcement learning, much of this information is provided by the reward function. Hence, reward shaping is a necessary part of how we can achieve state-of-the-art results on complex, multi-step tasks. However, comparatively little work has examined how reward shaping should be done so that it captures task context, particularly in scenarios where the task is long-horizon and failure is highly consequential. Our Schedule for Positive Task (SPOT) reward trains our Efficient Visual Task (EVT) model to solve problems that require an understanding of both task context and workspace constraints of multi-step block arrangement tasks. In simulation, EVT can completely clear adversarial arrangements of objects by pushing and grasping in 99% of cases vs. an 82% baseline in prior work. For random arrangements, EVT clears 100% of test cases at 86% action efficiency vs. 61% efficiency in prior work. EVT + SPOT is also able to demonstrate context understanding and complete stacks in 74% of trials compared to a baseline of 5% with EVT alone. To our knowledge, this is the first instance of a Reinforcement Learning based algorithm successfully completing such a challenge. Code is available at https://github.com/jhu-lcsr/costar_visual_stacking.

Details of our specific training and test runs, command line commands, pre-trained models, and logged data with images are on the costar_visual_stacking GitHub releases page.

Download V-REP and run it to start the simulation. You may need to adjust the paths below to match your V-REP folder. Run the commands from the costar_visual_stacking repository directory:

~/src/V-REP_PRO_EDU_V3_6_2_Ubuntu16_04/vrep.sh -gREMOTEAPISERVERSERVICE_19997_FALSE_TRUE -s simulation/simulation.ttt

export CUDA_VISIBLE_DEVICES="0" && python3 main.py --is_sim --obj_mesh_dir 'objects/blocks' --num_obj 4 --push_rewards --experience_replay --explore_rate_decay --place

To use trial SPOT, also add --trial_reward to this command.

Remember to first train the model or download the snapshot file from the release page (e.g. the v0.12 release), then update the --snapshot_file FILEPATH argument:

export CUDA_VISIBLE_DEVICES="0" && python3 main.py --is_sim --obj_mesh_dir 'objects/blocks' --num_obj 4 --push_rewards --experience_replay --explore_rate_decay --place --load_snapshot --snapshot_file ~/Downloads/snapshot.reinforcement-best-stack-rate.pth --random_seed 1238 --is_testing --save_visualizations --disable_situation_removal

Row Testing Video:

Row v0.12 release page and pretrained models.

export CUDA_VISIBLE_DEVICES="1" && python3 main.py --is_sim --obj_mesh_dir 'objects/blocks' --num_obj 4 --push_rewards --experience_replay --explore_rate_decay --place --check_row

export CUDA_VISIBLE_DEVICES="0" && python3 main.py --is_sim --obj_mesh_dir 'objects/blocks' --num_obj 4 --push_rewards --experience_replay --explore_rate_decay --trial_reward --future_reward_discount 0.65 --place --check_row --is_testing --tcp_port 19996 --load_snapshot --snapshot_file '/home/costar/Downloads/snapshot-backup.reinforcement-best-stack-rate.pth' --random_seed 1238 --disable_situation_removal --save_visualizations

We provide backwards compatibility with the Visual Pushing Grasping (VPG) GitHub Repository, and evaluate on their pushing and grasping task as a baseline against which to compare our algorithms.

export CUDA_VISIBLE_DEVICES="0" && python3 main.py --is_sim --obj_mesh_dir 'objects/toys' --num_obj 10 --push_rewards --experience_replay --explore_rate_decay

You can also run without --trial_reward and with the default --future_reward_discount 0.5.
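To give a feel for what moving --future_reward_discount from 0.5 to 0.65 does, here is a minimal sketch of a plain discounted return computed backwards over one trial. This is a generic textbook computation and not the repository's SPOT trial reward. The function name `discounted_returns` and the reward values are made up for illustration.

```python
def discounted_returns(rewards, discount):
    """Return G_t = r_t + discount * G_{t+1} for every step of one trial."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk the trial from the last action back to the first.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + discount * running
        returns[t] = running
    return returns


if __name__ == "__main__":
    trial_rewards = [1.0, 1.0, 1.0]  # hypothetical per-action rewards
    for discount in (0.5, 0.65):
        print(discount, discounted_returns(trial_rewards, discount))
```

A larger discount lets later rewards contribute more to the value assigned to early actions, which matters more as the task horizon grows.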

export CUDA_VISIBLE_DEVICES="0" && python3 main.py --is_sim --obj_mesh_dir 'objects/toys' --num_obj 10 --push_rewards --experience_replay --explore_rate_decay --load_snapshot --snapshot_file '/home/costar/src/costar_visual_stacking/logs/2019-08-17.20:54:32-train-grasp-place-split-efficientnet-21k-acc-0.80/models/snapshot.reinforcement.pth' --random_seed 1238 --is_testing --save_visualizations

Adversarial pushing and grasping release v0.3.2 video:

export CUDA_VISIBLE_DEVICES="0" && python3 main.py --is_sim --obj_mesh_dir 'objects/toys' --num_obj 10 --push_rewards --experience_replay --explore_rate_decay --trial_reward --future_reward_discount 0.65 --tcp_port 19996 --is_testing --random_seed 1238 --load_snapshot --snapshot_file '/home/ahundt/src/costar_visual_stacking/logs/2019-09-12.18:21:37-push-grasp-16k-trial-reward/models/snapshot.reinforcement.pth' --max_test_trials 10 --test_preset_cases

After running the test, you need to summarize the results:

python3 evaluate.py --session_directory /home/ahundt/src/costar_visual_stacking/logs/2019-09-16.02:11:25 --method reinforcement --num_obj_complete 6 --preset

It is possible to do multiple runs on different GPUs on the same machine. First, start an instance of V-REP as below, being careful to use V-REP 3.6.2 wherever it is installed locally:

~/src/V-REP_PRO_EDU_V3_6_2_Ubuntu16_04/vrep.sh -gREMOTEAPISERVERSERVICE_19997_FALSE_TRUE -s simulation/simulation.ttt

The port number (here 19997, the usual default) is the important point, as we will change it in subsequent runs.

Start the simulation as usual, but now specify --tcp_port 19997.

Start another V-REP session:

~/src/V-REP_PRO_EDU_V3_6_2_Ubuntu16_04/vrep.sh -gREMOTEAPISERVERSERVICE_19996_FALSE_TRUE -s simulation/simulation.ttt

For some reason, the port number is important here: it should be lower than the ports of any sessions already running.

When you start training, be sure to specify a different GPU. For example, if previously you set

export CUDA_VISIBLE_DEVICES="0"

then you should likely set

export CUDA_VISIBLE_DEVICES="1"

and specify the corresponding TCP port with --tcp_port 19996.

Additional runs in parallel should use ports 19995, 19994, etc.
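The GPU and port pairing described above can be sketched as a small helper. This is only an illustration: the function names `parallel_run_config` and `commands_for_run` are hypothetical and not part of the repository, and the sketch assumes one GPU per run with ports counting down from 19997.

```python
def parallel_run_config(run_index, base_port=19997):
    """Return the (gpu_id, tcp_port) pair for the run_index-th parallel run.

    Assumes one GPU per run and ports counting down from base_port,
    following the pattern 19997, 19996, 19995, ...
    """
    if run_index < 0:
        raise ValueError("run_index must be non-negative")
    return run_index, base_port - run_index


def commands_for_run(run_index,
                     vrep_sh="~/src/V-REP_PRO_EDU_V3_6_2_Ubuntu16_04/vrep.sh"):
    """Build the simulator command and the start of the training command."""
    gpu_id, port = parallel_run_config(run_index)
    simulator = (f"{vrep_sh} -gREMOTEAPISERVERSERVICE_{port}_FALSE_TRUE "
                 "-s simulation/simulation.ttt")
    # Append the remaining training flags for your experiment to this prefix.
    trainer_prefix = (f'export CUDA_VISIBLE_DEVICES="{gpu_id}" && '
                      f"python3 main.py --is_sim --tcp_port {port}")
    return simulator, trainer_prefix


if __name__ == "__main__":
    for index in range(3):
        for command in commands_for_run(index):
            print(command)
```

Start the simulators in order of increasing run index, so that each new session uses a lower port than those already running.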

To run jupyter lab or a jupyter notebook in the costar_visual_stacking/plot_success_rate folder use the following command:

jupyter lab ~/src/costar_visual_stacking/plot_success_rate

Open Jupyter in your favorite browser; there you will also find instructions for generating the plots.

Original Visual Pushing Grasping (VPG) Repository. Edits have been made to the text below to reflect some configuration and code updates needed to reproduce the previous VPG paper's original behavior:

Visual Pushing and Grasping (VPG) is a method for training robotic agents to learn how to plan complementary pushing and grasping actions for manipulation (e.g. for unstructured pick-and-place applications). VPG operates directly on visual observations (RGB-D images), learns from trial and error, trains quickly, and generalizes to new objects and scenarios.

This repository provides PyTorch code for training and testing VPG policies with deep reinforcement learning in both simulation and real-world settings on a UR5 robot arm. This is the reference implementation for the paper:

Andy Zeng, Shuran Song, Stefan Welker, Johnny Lee, Alberto Rodriguez, Thomas Funkhouser

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 2018

Skilled robotic manipulation benefits from complex synergies between non-prehensile (e.g. pushing) and prehensile (e.g. grasping) actions: pushing can help rearrange cluttered objects to make space for arms and fingers; likewise, grasping can help displace objects to make pushing movements more precise and collision-free. In this work, we demonstrate that it is possible to discover and learn these synergies from scratch through model-free deep reinforcement learning. Our method involves training two fully convolutional networks that map from visual observations to actions: one infers the utility of pushes for a dense pixel-wise sampling of end effector orientations and locations, while the other does the same for grasping. Both networks are trained jointly in a Q-learning framework and are entirely self-supervised by trial and error, where rewards are provided from successful grasps. In this way, our policy learns pushing motions that enable future grasps, while learning grasps that can leverage past pushes. During picking experiments in both simulation and real-world scenarios, we find that our system quickly learns complex behaviors amid challenging cases of clutter, and achieves better grasping success rates and picking efficiencies than baseline alternatives after only a few hours of training. We further demonstrate that our method is capable of generalizing to novel objects.

If you find this code useful in your work, please consider citing:

@inproceedings{zeng2018learning,

title={Learning Synergies between Pushing and Grasping with Self-supervised Deep Reinforcement Learning},

author={Zeng, Andy and Song, Shuran and Welker, Stefan and Lee, Johnny and Rodriguez, Alberto and Funkhouser, Thomas},

booktitle={IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

year={2018}

}

Demo videos of a real robot in action can be found here.

The contact for CoSTAR Visual Stacking is Andrew Hundt. The contact for the original Visual Pushing Grasping repository is Andy Zeng andyz[at]princeton[dot]edu

This implementation requires the following dependencies (tested on Ubuntu 16.04.4 LTS):

-

Python 2.7 or Python 3

-

NumPy, SciPy, OpenCV-Python, Matplotlib. You can quickly install/update these dependencies by running the following (replace pip3 with pip for Python 2.7):

pip3 install numpy scipy opencv-python matplotlib

-

PyTorch version 1.2:

pip3 install torch==1.2 torchvision==0.4.0

-

V-REP (simulation environment)

Accelerating training/inference with an NVIDIA GPU requires installing CUDA and cuDNN. You may need to register with NVIDIA for the CUDA Developer Program (it's free) before downloading. This code has been tested with CUDA 8.0 and cuDNN 6.0 on a single NVIDIA Titan X (12GB). Running out-of-the-box with our pre-trained models using GPU acceleration requires 8GB of GPU memory. Running with GPU acceleration is highly recommended, otherwise each training iteration will take several minutes to run (as opposed to several seconds). This code automatically detects the GPU(s) on your system and tries to use it. If you have a GPU, but would instead like to run in CPU mode, add the tag --cpu when running main.py below.
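The GPU-or-CPU choice described above can be sketched as follows. This is a minimal illustration, not the logic in main.py: `select_device` and `cuda_is_available` are hypothetical helper names, and the only library call used is PyTorch's `torch.cuda.is_available()`.

```python
def cuda_is_available():
    """Return True if PyTorch is installed and reports a usable CUDA GPU."""
    try:
        import torch
    except ImportError:
        return False
    return torch.cuda.is_available()


def select_device(force_cpu=False):
    """Pick 'cuda' when a GPU is usable, unless CPU mode is forced."""
    if force_cpu or not cuda_is_available():
        return "cpu"
    return "cuda"


if __name__ == "__main__":
    print("default:", select_device())
    print("with CPU forced:", select_device(force_cpu=True))
```

Passing `force_cpu=True` plays the role that the --cpu flag plays on the command line.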

This demo runs our pre-trained model with a UR5 robot arm in simulation on challenging picking scenarios with adversarial clutter, where grasping an object is generally not feasible without first pushing to break up tight clusters of objects.

-

Check out this repository and download our pre-trained models.

git clone https://github.com/jhu-lcsr/costar_visual_stacking.git visual-pushing-grasping
cd visual-pushing-grasping/downloads
./download-weights.sh
cd ..

-

Run V-REP (navigate to your V-REP directory and run ./vrep.sh). From the main menu, select File > Open scene..., and open the file visual-pushing-grasping/simulation/simulation.ttt from this repository.

-

In another terminal window, run the following (simulation will start in the V-REP window):

python main.py --is_sim --obj_mesh_dir 'objects/blocks' --num_obj 10 \
    --push_rewards --experience_replay --explore_rate_decay \
    --is_testing --test_preset_cases --test_preset_file 'simulation/test-cases/test-10-obj-07.txt' \
    --load_snapshot --snapshot_file 'downloads/vpg-original-sim-pretrained-10-obj.pth' \
    --save_visualizations --nn densenet

Note: you may get a popup window titled "Dynamics content" in your V-REP window. Select the checkbox and press OK. You will have to do this a total of 3 times before it stops annoying you.

To train a regular VPG policy from scratch in simulation, first start the simulation environment by running V-REP (navigate to your V-REP directory and run ./vrep.sh). From the main menu, select File > Open scene..., and open the file visual-pushing-grasping/simulation/simulation.ttt. Then navigate to this repository in another terminal window and run the following:

python main.py --is_sim --push_rewards --experience_replay --explore_rate_decay --save_visualizations

Data collected from each training session (including RGB-D images, camera parameters, heightmaps, actions, rewards, model snapshots, visualizations, etc.) is saved into a directory in the logs folder. A training session can be resumed by adding the flags --load_snapshot and --continue_logging, which load the model snapshot specified by --snapshot_file and the transition history from the session directory specified by --logging_directory:

python main.py --is_sim --push_rewards --experience_replay --explore_rate_decay --save_visualizations \
    --load_snapshot --snapshot_file 'logs/YOUR-SESSION-DIRECTORY-NAME-HERE/models/snapshot-backup.reinforcement.pth' \
    --continue_logging --logging_directory 'logs/YOUR-SESSION-DIRECTORY-NAME-HERE'

Various training options can be modified or toggled on/off with different flags (run python main.py -h to see all options):

usage: main.py [-h] [--is_sim] [--obj_mesh_dir OBJ_MESH_DIR]

[--num_obj NUM_OBJ] [--num_extra_obj NUM_EXTRA_OBJ]

[--tcp_host_ip TCP_HOST_IP] [--tcp_port TCP_PORT]

[--rtc_host_ip RTC_HOST_IP] [--rtc_port RTC_PORT]

[--heightmap_resolution HEIGHTMAP_RESOLUTION]

[--random_seed RANDOM_SEED] [--cpu] [--flops] [--method METHOD]

[--push_rewards]

[--future_reward_discount FUTURE_REWARD_DISCOUNT]

[--experience_replay] [--heuristic_bootstrap]

[--explore_rate_decay] [--grasp_only] [--check_row]

[--random_weights] [--max_iter MAX_ITER] [--place]

[--no_height_reward] [--grasp_color_task]

[--grasp_count GRASP_COUT] [--transfer_grasp_to_place]

[--check_z_height] [--trial_reward]

[--check_z_height_goal CHECK_Z_HEIGHT_GOAL]

[--disable_situation_removal] [--is_testing]

[--evaluate_random_objects] [--max_test_trials MAX_TEST_TRIALS]

[--test_preset_cases] [--test_preset_file TEST_PRESET_FILE]

[--test_preset_dir TEST_PRESET_DIR]

[--show_preset_cases_then_exit] [--load_snapshot]

[--snapshot_file SNAPSHOT_FILE] [--nn NN] [--continue_logging]

[--logging_directory LOGGING_DIRECTORY] [--save_visualizations]

Train robotic agents to learn how to plan complementary pushing, grasping, and placing as well as multi-step tasks

for manipulation with deep reinforcement learning in PyTorch.

optional arguments:

-h, --help show this help message and exit

--is_sim run in simulation?

--obj_mesh_dir OBJ_MESH_DIR

directory containing 3D mesh files (.obj) of objects

to be added to simulation

--num_obj NUM_OBJ number of objects to add to simulation

--num_extra_obj NUM_EXTRA_OBJ

number of secondary objects, like distractors, to add

to simulation

--tcp_host_ip TCP_HOST_IP

IP address to robot arm as TCP client (UR5)

--tcp_port TCP_PORT port to robot arm as TCP client (UR5)

--rtc_host_ip RTC_HOST_IP

IP address to robot arm as real-time client (UR5)

--rtc_port RTC_PORT port to robot arm as real-time client (UR5)

--heightmap_resolution HEIGHTMAP_RESOLUTION

meters per pixel of heightmap

--random_seed RANDOM_SEED

random seed for simulation and neural net

initialization

--cpu force code to run in CPU mode

--flops calculate floating point operations of a forward pass

then exit

--method METHOD set to 'reactive' (supervised learning) or

'reinforcement' (reinforcement learning ie Q-learning)

--push_rewards use immediate rewards (from change detection) for

pushing?

--future_reward_discount FUTURE_REWARD_DISCOUNT

--experience_replay use prioritized experience replay?

--heuristic_bootstrap

use handcrafted grasping algorithm when grasping fails

too many times in a row during training?

--explore_rate_decay

--grasp_only

--check_row check for placed rows instead of stacks

--random_weights use random weights rather than weights pretrained on

ImageNet

--max_iter MAX_ITER max iter for training. -1 (default) trains

indefinitely.

--place enable placing of objects

--no_height_reward disable stack height reward multiplier

--grasp_color_task enable grasping specific colored objects

--grasp_count GRASP_COUT

number of successful task based grasps

--transfer_grasp_to_place

Load the grasping weights as placing weights.

--check_z_height use check_z_height instead of check_stacks for any

stacks

--trial_reward Experience replay delivers rewards for the whole

trial, not just next step.

--check_z_height_goal CHECK_Z_HEIGHT_GOAL

check_z_height goal height, a value of 2.0 is 0.1

meters, and a value of 4.0 is 0.2 meters

--disable_situation_removal

Disables situation removal, where rewards are set to 0

and a reset is triggerd upon reveral of task progress.

--is_testing

--evaluate_random_objects

Evaluate trials with random block positions, for

example testing frequency of random rows.

--max_test_trials MAX_TEST_TRIALS

maximum number of test runs per case/scenario

--test_preset_cases

--test_preset_file TEST_PRESET_FILE

--test_preset_dir TEST_PRESET_DIR

--show_preset_cases_then_exit

just show all the preset cases so you can have a look,

then exit

--load_snapshot load pre-trained snapshot of model?

--snapshot_file SNAPSHOT_FILE

--nn NN Neural network architecture choice, options are

efficientnet, densenet

--continue_logging continue logging from previous session?

--logging_directory LOGGING_DIRECTORY

--save_visualizations

save visualizations of FCN predictions?

Results from our baseline comparisons and ablation studies in our paper can be reproduced using these flags. For example:

-

Train reactive policies with pushing and grasping (P+G Reactive); specify --method to be 'reactive', remove --push_rewards, remove --explore_rate_decay:

python main.py --is_sim --method 'reactive' --experience_replay --save_visualizations

-

Train reactive policies with grasping-only (Grasping-only); similar arguments as P+G Reactive above, but add --grasp_only:

python main.py --is_sim --method 'reactive' --experience_replay --grasp_only --save_visualizations

-

Train VPG policies without any rewards for pushing (VPG-noreward); similar arguments as regular VPG, but remove --push_rewards:

python main.py --is_sim --experience_replay --explore_rate_decay --save_visualizations

-

Train shortsighted VPG policies with lower discount factors on future rewards (VPG-myopic); similar arguments as regular VPG, but set --future_reward_discount to 0.2:

python main.py --is_sim --push_rewards --future_reward_discount 0.2 --experience_replay --explore_rate_decay --save_visualizations

To plot the performance of a session over training time, run the following:

python plot.py 'logs/YOUR-SESSION-DIRECTORY-NAME-HERE'

Solid lines indicate % grasp success rates (primary metric of performance) and dotted lines indicate % push-then-grasp success rates (secondary metric to measure quality of pushes) over training steps. By default, each point in the plot measures the average performance over the last 200 training steps. The range of the x-axis is from 0 to 2500 training steps. You can easily change these parameters at the top of plot.py.
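The "average over the last 200 training steps" idea can be illustrated with a trailing-window success rate. This is a sketch under assumptions, not the code in plot.py: the function name `trailing_success_rate` is hypothetical, and how plot.py treats the first steps, before a full window exists, may differ.

```python
def trailing_success_rate(outcomes, window=200):
    """Percent success over the last `window` steps, computed at every step.

    `outcomes` is a sequence of truthy/falsy values, one per training step.
    Before `window` steps have elapsed, the average covers the steps so far.
    """
    rates = []
    running = 0
    for i, outcome in enumerate(outcomes):
        running += 1 if outcome else 0
        if i >= window:
            # Drop the step that just fell out of the window.
            running -= 1 if outcomes[i - window] else 0
        count = min(i + 1, window)
        rates.append(100.0 * running / count)
    return rates


if __name__ == "__main__":
    print(trailing_success_rate([1, 0, 1, 1], window=2))
```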

To compare performance between different sessions, you can draw multiple plots at a time:

python plot.py 'logs/YOUR-SESSION-DIRECTORY-NAME-HERE' 'logs/ANOTHER-SESSION-DIRECTORY-NAME-HERE'

We provide a collection of 11 test cases in simulation with adversarial clutter. Each test case consists of a configuration of 3 to 6 objects placed in the workspace in front of the robot. These configurations are manually engineered to reflect challenging picking scenarios, and are excluded from the training procedure. Across many of these test cases, objects are laid closely side by side, in positions and orientations from which even an optimal grasping policy would have trouble picking up any of the objects without de-cluttering first. As a sanity check, a single isolated object is additionally placed in the workspace separate from the configuration. This is just to ensure that all policies have been sufficiently trained prior to the benchmark (i.e. a policy is not ready if it fails to grasp the isolated object).

The demo above runs our pre-trained model multiple times (x30) on a single test case. To test your own pre-trained model, simply change the location of --snapshot_file:

export CUDA_VISIBLE_DEVICES="0" && python3 main.py --is_sim --obj_mesh_dir 'objects/toys' --num_obj 10 --push_rewards --experience_replay --explore_rate_decay --load_snapshot --snapshot_file '/home/$USER/Downloads/snapshot.reinforcement.pth' --random_seed 1238 --is_testing --save_visualizations --test_preset_cases --test_preset_dir 'simulation/test-cases/' --max_test_trials 10

Data from each test case will be saved into a session directory in the logs folder. To report the average testing performance over a session, run the following:

python evaluate.py --session_directory 'logs/YOUR-SESSION-DIRECTORY-NAME-HERE' --method SPECIFY-METHOD --num_obj_complete N

where SPECIFY-METHOD can be reactive or reinforcement, depending on the architecture of your model.

--num_obj_complete N defines the number of objects that need to be picked in order to consider the task completed. For example, when evaluating our pre-trained model in the demo test case, N should be set to 6:

python evaluate.py --session_directory 'logs/YOUR-SESSION-DIRECTORY-NAME-HERE' --method 'reinforcement' --num_obj_complete 6

Average performance is measured with three metrics (for all metrics, higher is better):

- Average % completion rate over all test runs: measures the ability of the policy to finish the task by picking up at least N objects without failing consecutively for more than 10 attempts.

- Average % grasp success rate per completion.

- Average % action efficiency: describes how succinctly the policy is capable of finishing the task. See our paper for more details on how this is computed.
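The completion criterion in the first metric can be sketched as below. This is an illustration of the rule as worded above, not the code in evaluate.py: the names `trial_completed` and `completion_rate` are hypothetical, and the sketch assumes each entry in a trial is one attempt marked as success or failure.

```python
def trial_completed(attempt_outcomes, num_obj_complete,
                    max_consecutive_failures=10):
    """Return True if N objects are picked before too many failures in a row."""
    picked = 0
    consecutive_failures = 0
    for success in attempt_outcomes:
        if success:
            picked += 1
            consecutive_failures = 0
            if picked >= num_obj_complete:
                return True
        else:
            consecutive_failures += 1
            # "More than 10" consecutive failures ends the run as incomplete.
            if consecutive_failures > max_consecutive_failures:
                return False
    return False


def completion_rate(trials, num_obj_complete):
    """Percent of trials that satisfy trial_completed."""
    if not trials:
        return 0.0
    done = sum(trial_completed(t, num_obj_complete) for t in trials)
    return 100.0 * done / len(trials)


if __name__ == "__main__":
    example_trials = [[True] * 6, [False] * 11 + [True] * 6]
    print(completion_rate(example_trials, num_obj_complete=6))
```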

To design your own challenging test case:

-

Open the simulation environment in V-REP (navigate to your V-REP directory and run ./vrep.sh). From the main menu, select File > Open scene..., and open the file visual-pushing-grasping/simulation/simulation.ttt.

-

In another terminal window, navigate to this repository and run the following:

python create.py

-

In the V-REP window, use the V-REP toolbar (object shift/rotate) to move around objects to desired positions and orientations.

-

In the terminal window, type the name of the text file in which to save the test case, then press enter.

-

Try it out: run a trained model on the test case by running main.py just as in the demo, but with the flag --test_preset_file pointing to the location of your test case text file.

The same code in this repository can be used to train on a real UR5 robot arm (tested with UR Software version 1.8). To communicate with later versions of UR software, several minor changes may be necessary in robot.py (e.g. functions like parse_tcp_state_data). Tested with Python 2.7 (not fully tested with Python 3).

The latest version of our system uses RGB-D data captured from an Intel® RealSense™ D415 Camera. We provide a lightweight C++ executable that streams data in real-time using librealsense SDK 2.0 via TCP. This enables you to connect the camera to an external computer and fetch RGB-D data remotely over the network while training. This can come in handy for many real robot setups. Of course, doing so is not required -- the entire system can also be run on the same computer.

-

Download and install librealsense SDK 2.0

-

Navigate to visual-pushing-grasping/realsense and compile realsense.cpp:

cd visual-pushing-grasping/realsense
cmake .
make

-

Connect your RealSense camera with a USB 3.0 compliant cable (important: the RealSense D400 series uses a USB-C cable, but the cable must still be USB 3.0 compliant to stream RGB-D data).

-

To start the TCP server and RGB-D streaming, run the following:

./realsense

Keep the executable running while calibrating or training with the real robot (instructions below). To test a python TCP client that fetches RGB-D data from the active TCP server, run the following:

cd visual-pushing-grasping/real

python capture.py

We provide a simple calibration script to estimate camera extrinsics with respect to robot base coordinates. To do so, the script moves the robot gripper over a set of predefined 3D locations as the camera detects the center of a moving 4x4 checkerboard pattern taped onto the gripper. The checkerboard can be of any size (the larger, the better).

-

Predefined 3D locations are sampled from a 3D grid of points in the robot's workspace. To modify these locations, change the variables workspace_limits and calib_grid_step at the top of calibrate.py.

-

Measure the offset from the midpoint of the checkerboard pattern to the tool center point in robot coordinates (variable checkerboard_offset_from_tool). This offset can change depending on the orientation of the tool (variable tool_orientation) as it moves across the predefined locations. Change both of these variables accordingly at the top of calibrate.py.

-

The code directly communicates with the robot via TCP. At the top of calibrate.py, change the variable tcp_host_ip to point to the network IP address of your UR5 robot controller.

-

With caution, run the following to move the robot and calibrate:

python calibrate.py

The script also optimizes for a z-scale factor and saves it into real/camera_depth_scale.txt. This scale factor should be multiplied with each depth pixel captured from the camera. This step is more relevant for the RealSense SR300 cameras, which commonly suffer from a severe scaling problem where the 3D data is often 15-20% smaller than real world coordinates. The D400 series are less likely to have such a severe scaling problem.
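Applying the saved z-scale factor can be sketched as follows. This is a minimal illustration under assumptions: the helper names `load_depth_scale` and `apply_depth_scale` are hypothetical, the sketch assumes the file holds a single number as its first whitespace-separated token, and the depth image is shown as nested lists for simplicity even though a NumPy array would be the usual choice.

```python
def load_depth_scale(path="real/camera_depth_scale.txt"):
    """Read the scale factor, assumed to be the first number in the file."""
    with open(path) as scale_file:
        return float(scale_file.read().split()[0])


def apply_depth_scale(depth_rows, scale):
    """Multiply every depth pixel by the calibration scale factor."""
    return [[value * scale for value in row] for row in depth_rows]


if __name__ == "__main__":
    # Hypothetical 2x2 depth image in meters and a made-up scale factor.
    raw_depth = [[0.50, 0.52], [0.48, 0.00]]
    print(apply_depth_scale(raw_depth, 1.1))
```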

To train on the real robot, simply run:

python main.py --tcp_host_ip 'XXX.XXX.X.XXX' --tcp_port 30002 --push_rewards --experience_replay --explore_rate_decay --save_visualizations

where XXX.XXX.X.XXX is the network IP address of your UR5 robot controller.

- Use touch.py to test calibrated camera extrinsics -- it provides a UI where the user can click a point on the RGB-D image, and the robot moves its end-effector to the 3D location of that point

- Use debug.py to test robot communication and primitive actions
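Turning a clicked pixel into a 3D target, as touch.py is described as doing, can be sketched with the standard pinhole camera model followed by a rigid transform. This is an assumption about the general approach, not the code in touch.py: the function names and every numeric value below are hypothetical.

```python
def pixel_to_camera_point(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth in meters into camera coordinates."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def camera_to_robot(point, rotation, translation):
    """Apply camera extrinsics: a 3x3 rotation (nested lists) and a translation."""
    return tuple(
        sum(rotation[r][c] * point[c] for c in range(3)) + translation[r]
        for r in range(3)
    )


if __name__ == "__main__":
    # Made-up intrinsics and extrinsics, for illustration only.
    camera_point = pixel_to_camera_point(400, 300, 0.6,
                                         fx=600.0, fy=600.0, cx=320.0, cy=240.0)
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    print(camera_to_robot(camera_point, identity, (0.5, 0.0, 0.2)))
```

If the calibration is accurate, the transformed point should coincide with the physical location the user clicked.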