Edge-guided Multi-domain RGB-to-TIR image Translation for Training Vision Tasks with Challenging Labels

Accepted Proceedings to ICRA 2023

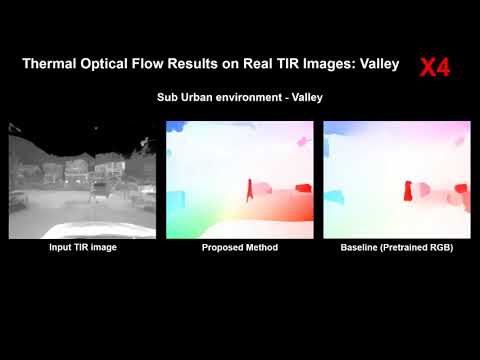

- Our target tasks are deep optical flow estimation and object detection in thermal images.

Disclaimer

-The same model was used for both synthetic and real RGB to TIR image translation

-The model was trained on identical datasets (sRGB=GTA, TIR=STheReO)

- model trained on synthetic RGB image was adapted to translate real RGB image to TIR image.

- Upload inference code

- Upload style selection code

- Upload training code for custom data training

-

Download Repo

$ git clone https://github.com/rpmsnu/sRGB-TIR.git

-

Docker support

To make things alot easier for environmental setup, I have uploaded my docker image on Dockerhub,

please use the following command to get the docker

$docker pull donkeymouse/donkeymouse:icra*If there persists any problems, please file an issue!

-

Inference

$ python3 inference_batch.py --input_folder {input dir to your RGB images} --output_folder {output dir to store your translated images} --checkpoint {weight_file address} --a2b 0 --seed {your choice} --num_style {number of tir styles to sample} --synchronized --output_onlyFor example, to translate RGB images stored under a folder called "input", and say you want to sample 5 styles, run the following command:

$python3 inference_batch.py --input_folder ./input --output_folder ./output --checkpoint ./translation_weights.pt --a2b 0 --seed 1234 --num_style 5 --synchronized --output_only --config configs/tir2rgb_folder.yaml -

Network weights

Please download them from here: {link to google drive}

*If the link doesn't work, please file an issue!

Edge-guided multi-domain RGB2TIR translation architecture

-

Network Architecture

- Content Encoder: single 7x7 conv block + four 4x4 conv block + four residual blocks + Instance Normalization

- Style Encoder: single 7x7 conv block + four 4x4 conv block + four residual blocks + GAP + FC layers

- Decoder (Generator): 4x4 conv + residual blocks in encoder-decoder architecture. 2 downsampling layers and reflection padding were used.

- Discriminator: four 4x4 convolutions. Leaky relu activations; LSGAN for loss function, reflection padding was used.

-

Model codes will be released after the review process has been cleared.

-

Training details

- Iterations: 60,000

- batch size = 1

- weight decay = 0.001

- Optimizer: Adam with B1 = 0.5, B2= 0.999

- initial learning rate = 0.0001

- step learning rate policy

- Learning rate decay rate(gamma) = 0.5

- Input image size= 640 x 400 for both synthetic RGB and thermal images

-

Config files will be released after the review process has been cleared

Please consider citing the paper as:

@ARTICLE{lee-2023-edgemultiRGB2TIR,

author={Lee, Dong-Guw and Kim, Ayoung},

conference={IEEE International Conference on Robotics and Automation},

title={Edge-guided Multi-domain RGB-to-TIR image Translation for Training Vision Tasks with Challenging Labels},

year={2023},

status={underreview}

Also, a lot of the code has been built on top of MUNIT (ECCV2018), so please go cite their paper as well.

If you have any questions, contact here please