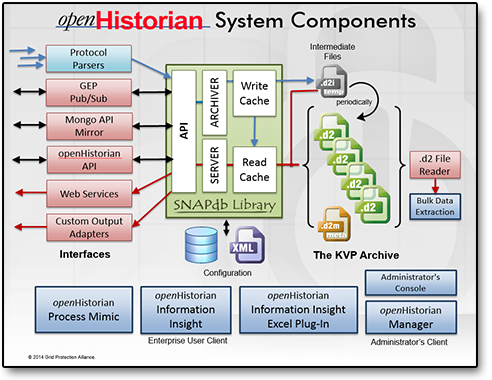

The openHistorian is a back-office system designed to efficiently integrate and archive process control data, e.g., SCADA, synchrophasor, digital fault recorder, or any other time-series data used to support process operations.

The openHistorian is optimized to store and retrieve large volumes of time-series data quickly and efficiently, including high-resolution sub-second information that is measured very rapidly, e.g., many thousands of times per second.

The openHistorian 2 is built using the GSF SNAPdb Engine - a key/value pair archiving technology developed to significantly improve the ability to archive extremely large volumes of real-time streaming data and directly serve the data to consuming applications and systems.

Through use of the SNAPdb Engine, the openHistorian inherits very fast performance with very low lag time for data insertion. The openHistorian 2 is a time-series implementation of the SNAPdb Engine where the "key" is a tuple of time and measurement ID, and the "value" is the stored data, which can be almost any data type, along with associated flags.
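The key/value layout described above can be pictured with a small Python sketch. This is an illustration only, not the SNAPdb API: the names `HistorianKey`, `HistorianValue`, `point_id`, and `flags` are hypothetical, and the sample readings are made up. It shows how a key built from time and measurement ID sorts chronologically first and by measurement second.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True, order=True)
class HistorianKey:
    """Hypothetical key: (time, measurement ID). Field order defines sort order."""
    timestamp: datetime
    point_id: int


@dataclass(frozen=True)
class HistorianValue:
    """Hypothetical value: the stored data plus associated flags."""
    data: float
    flags: int = 0


# A plain dict stands in for the archive; keys are hashable because they are frozen.
archive = {}
t0 = datetime(2024, 1, 1, 0, 0, 0, tzinfo=timezone.utc)
t1 = datetime(2024, 1, 1, 0, 0, 1, tzinfo=timezone.utc)

archive[HistorianKey(t1, 7)] = HistorianValue(60.01)
archive[HistorianKey(t0, 9)] = HistorianValue(59.98, flags=1)
archive[HistorianKey(t0, 7)] = HistorianValue(60.00)

# Sorting the keys orders them by time, then by measurement ID.
ordered_keys = sorted(archive)
for key in ordered_keys:
    print(key.timestamp.isoformat(), key.point_id, archive[key])
```

Putting time first in the key means a query for a time window touches one contiguous run of keys, which is the property a time-series store typically wants.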

The system comes with a high-speed API that interacts with an in-memory cache for very fast extraction of near real-time data. The archive files produced by the openHistorian are ACID compliant, which makes for a durable and consistent file structure that is resistant to data corruption. Internally, the data structure is based on a B+ tree that allows out-of-order data insertion.
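To illustrate what out-of-order insertion buys you, here is a minimal Python sketch. It is not a B+ tree and not the openHistorian API: the hypothetical `SortedArchive` class uses a flat sorted list with the standard `bisect` module as a toy stand-in, and times are plain integers for brevity. The point is only that records can arrive in any order and still be read back in key order over a time range.

```python
import bisect


class SortedArchive:
    """Toy stand-in for a sorted index: keeps (time, point_id) keys in order."""

    def __init__(self):
        self._keys = []    # sorted list of (time, point_id) tuples
        self._values = {}  # key -> stored value

    def insert(self, time, point_id, value):
        """Insert or overwrite a record; arrival order does not matter."""
        key = (time, point_id)
        if key not in self._values:
            bisect.insort(self._keys, key)  # place the key at its sorted position
        self._values[key] = value

    def read_range(self, start, stop):
        """Return (key, value) pairs with start <= time < stop, in key order."""
        # A 1-tuple (t,) sorts before every (t, point_id), so it marks the
        # first key at or after time t.
        lo = bisect.bisect_left(self._keys, (start,))
        hi = bisect.bisect_left(self._keys, (stop,))
        return [(k, self._values[k]) for k in self._keys[lo:hi]]


archive = SortedArchive()
archive.insert(30, 1, 60.02)  # newest record arrives first
archive.insert(10, 1, 60.00)
archive.insert(20, 2, 59.97)
archive.insert(20, 1, 60.01)  # late arrival for an earlier time

rows = archive.read_range(10, 30)
print(rows)
```

A flat list makes each insert linear in the worst case. A B+ tree keeps the same sorted-key view while splitting the keys across pages, so inserts and range reads stay efficient on large archives.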

The openHistorian service also hosts the GSF Time-Series Library (TSL), creating an ideal platform for integrating streaming time-series data processing in real-time.

Three utilities are currently available to assist in using the openHistorian 2. They are automatically installed alongside openHistorian.

- Data Migration Utility - Converts openHistorian 1.0 / DatAWAre Archives to openHistorian 2.0 Format

- Data Trending Tool - Queries Selected Historical Data for Visual Trending Using a Provided Date/Time Range

- Data Extraction Utility - Queries Selected Historian Data for Export to a CSV File Using a Provided Date/Time Range

- Documentation for openHistorian can be found in the openHistorian wiki.

- Get in contact with our development team on our new discussion boards.

- View old discussion board topics here.

For detailed instructions on deploying the openHistorian, see the installation guide.

- Make sure your system meets all the requirements below.

- Choose a download below.

- Unzip, if necessary.

- Run openHistorianSetup.msi.

- Follow the wizard.

- Enjoy.

- .NET 4.6 or higher.

- 64-bit Windows 7 or newer.

- HTML 5 capable browser.

- Database management system such as:

  - SQL Server (Express version is fine)

  - MySQL

  - Oracle

  - PostgreSQL

  - SQLite\* (included, no extra install required)

\* Not recommended for large deployments.

If you would like to contribute, please:

- Read our style guide.

- Fork the repository.

- Work your magic.

- Create a pull request.

openHistorian is licensed under the MIT License.