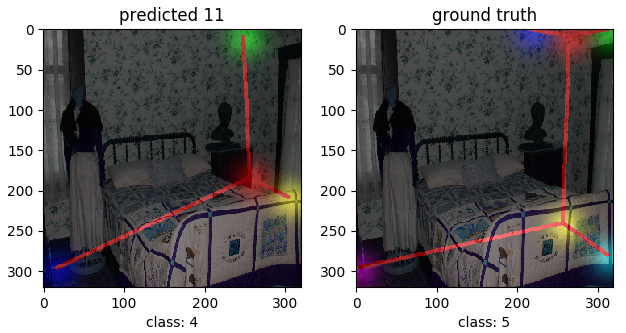

This is a TensorFlow implementation of the room layout paper RoomNet: End-to-End Room Layout Estimation.

New: You can find a pre-trained model here and a sample.npz for get_res.py here.

Note: This is simply an out-of-interest experiment, and I cannot guarantee that it reproduces the results of the original paper.

Here I implement two nets: a vanilla encoder-decoder version and a 3-iteration RCNN refined version. As the authors noted, the latter achieves better results.

I use the LSUN dataset. Please download and prepare the RGB images, and explore the .mat files it includes, because they contain the layout type, key points, and other information. Here I simply resize each image to (320, 320) with cubic interpolation and apply a horizontal flip. (Note: When you flip an image, the order of the layout key points must also be flipped.) You can see the data preparation in prepare_data.py.
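The key-point bookkeeping for the resize and flip can be sketched as below. This is a minimal NumPy illustration, not the code in prepare_data.py: the function names, the (x, y) pixel-coordinate convention, and the `flip_order` permutation are all assumptions made for the example. The correct reordering depends on the layout type, so the permutation here is only a placeholder. The image resize itself is assumed to be done separately, for example with OpenCV's `cv2.resize(image, (320, 320), interpolation=cv2.INTER_CUBIC)`.

```python
import numpy as np


def scale_keypoints(keypoints, old_size, new_size=(320, 320)):
    """Rescale (x, y) key points when an image is resized.

    old_size and new_size are (width, height) pairs.
    """
    points = np.asarray(keypoints, dtype=np.float32)
    factors = np.array(
        [new_size[0] / old_size[0], new_size[1] / old_size[1]],
        dtype=np.float32,
    )
    return points * factors


def flip_sample(image, keypoints, flip_order):
    """Mirror an H x W x C image left-right and update its key points.

    flip_order is a hypothetical permutation: output slot i takes the
    mirrored key point at index flip_order[i]. The real permutation
    depends on the layout type and is not given here.
    """
    width = image.shape[1]
    flipped_image = image[:, ::-1].copy()
    mirrored = np.asarray(keypoints, dtype=np.float32).copy()
    mirrored[:, 0] = (width - 1) - mirrored[:, 0]  # mirror the x coordinate
    return flipped_image, mirrored[list(flip_order)]


# Example with made-up values: two key points whose roles swap after the flip.
image = np.zeros((320, 320, 3), dtype=np.uint8)
points = scale_keypoints([[20, 40], [600, 80]], old_size=(640, 640))
flipped_image, flipped_points = flip_sample(image, points, flip_order=[1, 0])
```

Mirroring only the x coordinate is not enough on its own: a corner that was on the left wall ends up on the right, so the slot it occupies in the key-point list has to change as well, which is what the permutation stands in for.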

You need to install tensorflow>=1.2, opencv, numpy, scipy and other basic dependencies.

Training:

python main.py --train 0 --net vanilla (or rcnn) --out_path path-to-output

Testing:

python main.py --test 0 --net vanilla (or rcnn) --out_path path-to-output

- Classification loss: The authors only use the loss on the ground-truth label, while I consider all classes and use the common cross-entropy loss.

- Layout loss: I split the layout map into 3 levels according to its numerical value, which distinguishes foreground from background. The larger the value, the larger the weight of its corresponding loss.

- Upsampling layer: Since I did not find an upsampling operation that remembers the pooling positions and reprojects values back to them, I simply use the conv_transpose operation in tf.
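
The two loss choices above can be illustrated with a small NumPy sketch. It is not the TensorFlow code used in this repo: the function names, the squared-error form of the layout loss, and the threshold and weight values are assumptions chosen only to show the idea of a cross entropy over all classes and a per-pixel weight that grows with the target value.

```python
import numpy as np


def softmax_cross_entropy(logits, labels):
    """Mean cross entropy over all classes.

    logits: (batch, num_classes) scores; labels: (batch,) integer class ids.
    """
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(labels)), labels].mean())


def weighted_layout_loss(pred, target,
                         thresholds=(0.1, 0.5),
                         weights=(1.0, 5.0, 10.0)):
    """Squared error with three weight levels picked by the target value.

    thresholds and weights are illustrative assumptions, not the values
    used in this repo. Pixels with larger target values (foreground)
    receive larger weights than background pixels.
    """
    level = np.digitize(target, thresholds)      # 0, 1 or 2 for each pixel
    pixel_weights = np.asarray(weights)[level]
    return float((pixel_weights * (pred - target) ** 2).mean())


# Example with made-up shapes: 2 samples, 5 classes, one 4 x 4 layout map.
class_loss = softmax_cross_entropy(np.zeros((2, 5)), np.array([1, 3]))
layout_loss = weighted_layout_loss(np.zeros((4, 4)), np.full((4, 4), 0.3))
```

Weighting by level is one way to keep the many near-zero background pixels from dominating the loss, since the key-point regions cover only a small part of each map.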