A Scala Jupyter kernel that uses metakernel in combination with py4j.

Prerequisites:

- Apache Spark 2.1.1 compiled for Scala 2.11

- Jupyter Notebook

- Python 3.5+
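To confirm the Python and Jupyter prerequisites before installing, you can run a small preflight script. This is a sketch, not part of spylon-kernel: the function names are illustrative, the script assumes that a `jupyter` executable on `PATH` means Jupyter is installed, and it does not try to detect the Spark or Scala version.

```python
import shutil
import sys

# Minimum Python version from the prerequisites list.
MIN_PYTHON = (3, 5)


def meets_min_python(version_info=sys.version_info, minimum=MIN_PYTHON):
    """Return True if the (major, minor, ...) version is at least `minimum`."""
    return tuple(version_info[:2]) >= tuple(minimum)


def check_prerequisites():
    """Illustrative preflight check; Spark and Scala versions are NOT checked."""
    return {
        "python>=3.5": meets_min_python(),
        # Assumption: a `jupyter` executable on PATH means Jupyter is installed.
        "jupyter on PATH": shutil.which("jupyter") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        # str.format instead of f-strings so this still parses on Python 3.5.
        print("{}: {}".format(name, "ok" if ok else "MISSING"))
```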

You can install the spylon-kernel package using pip or conda.

```bash
pip install spylon-kernel
# or
conda install -c conda-forge spylon-kernel
```

You can use spylon-kernel as a Scala kernel for Jupyter Notebook. Do this when you want to work with Spark in Scala with a bit of Python code mixed in.
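Before creating the kernel spec, you can check that the package is visible to the current Python interpreter. The distribution name on PyPI and conda-forge uses a hyphen (`spylon-kernel`), but the importable module uses an underscore (`spylon_kernel`). The minimal sketch below uses only the standard library. `is_importable` is an illustrative helper, not part of spylon-kernel.

```python
import importlib.util


def is_importable(module_name):
    """Return True if a top-level module can be found on the current sys.path."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    # The module name is spylon_kernel (underscore), as in
    # `python -m spylon_kernel install` and `from spylon_kernel import ...`.
    print("spylon_kernel importable:", is_importable("spylon_kernel"))
```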

Create a kernel spec for Jupyter Notebook by running the following command:

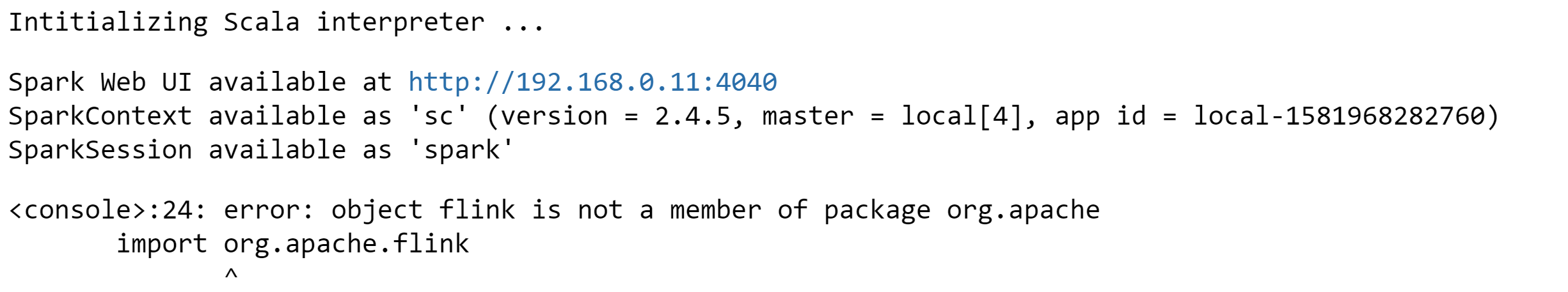

```bash
python -m spylon_kernel install
```

Launch `jupyter notebook` and you should see spylon-kernel as an option in the New dropdown menu.
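If the kernel does not appear in the menu, you can inspect the installed kernel specs with `jupyter kernelspec list`, or from Python through `jupyter_client`. The sketch below uses `KernelSpecManager().find_kernel_specs()`, which returns a mapping from kernel name to resource directory. Matching on the substring `"spylon"` is an assumption about the spec name, and both function names are illustrative.

```python
def find_spylon_kernels(kernel_specs):
    """Return the kernel spec names that look like spylon-kernel.

    `kernel_specs` maps kernel name -> resource directory, the shape returned
    by jupyter_client's KernelSpecManager().find_kernel_specs().
    Assumption: the installed spec name contains "spylon".
    """
    return sorted(name for name in kernel_specs if "spylon" in name.lower())


def installed_kernel_specs():
    """Ask Jupyter for the installed kernel specs (requires jupyter_client)."""
    from jupyter_client.kernelspec import KernelSpecManager

    return KernelSpecManager().find_kernel_specs()


if __name__ == "__main__":
    try:
        specs = installed_kernel_specs()
    except ImportError:
        print("jupyter_client is not installed")
    else:
        print("spylon kernel specs:", find_spylon_kernels(specs) or "none found")
```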

See the basic example notebook for information about how to initialize a Spark session and use it in both Scala and Python.

You can also use spylon-kernel as a magic in an IPython notebook. Do this when you want to mix a little bit of Scala into your primarily Python notebook.

```python
from spylon_kernel import register_ipython_magics

register_ipython_magics()
```

Then run Scala in any cell that starts with the `%%scala` cell magic:

```
%%scala
val x = 8
x
```

Finally, you can use spylon-kernel as a Python library. Do this when you want to evaluate a string of Scala code in a Python script or shell.
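In a script that evaluates several snippets, you can wrap the two calls from the example below in a small helper. This is a hypothetical sketch, not part of the spylon-kernel API. `eval_scala` is an illustrative name, and the helper assumes only the `interpret` and `last_result` interpreter methods that the example uses. Because it takes the interpreter as an argument, it can be exercised with a stand-in object in tests without starting Spark.

```python
def eval_scala(interp, code):
    """Run a Scala snippet and return the interpreter's last result.

    Hypothetical helper: `interp` is any object exposing interpret(code) and
    last_result(), such as the one returned by spylon_kernel's
    get_scala_interpreter().
    """
    interp.interpret(code)
    return interp.last_result()
```

With spylon-kernel and Spark available, `eval_scala(get_scala_interpreter(), "val x = 8\nx")` performs the same two steps as the example.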

```python
from spylon_kernel import get_scala_interpreter

interp = get_scala_interpreter()

# Evaluate a block of Scala code.
interp.interpret("""
val x = 8
x
""")

# Fetch the result of the last evaluation.
interp.last_result()
```

To release a new version, push a tag and submit a source distribution to PyPI.

```bash
git commit -m 'REL: 0.2.1' --allow-empty
git tag -a 0.2.1 # and enter the same message as the commit
git push origin master # or send a PR

# if everything builds / tests cleanly, release to pypi
make release
```
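To avoid retyping the version number at each step, you could generate the release commands for any version with a helper. The sketch below is hypothetical: `release_commands` is not part of the repository, and it only builds the command lists without running them. It mirrors the manual steps, except that it passes the tag message with `git tag -a ... -m` instead of opening an editor.

```python
import re
import shlex

# Assumption: release versions look like MAJOR.MINOR.PATCH (e.g. 0.2.1).
VERSION_RE = re.compile(r"\d+\.\d+\.\d+")


def release_commands(version, branch="master"):
    """Return the release steps for `version` as argv lists (not executed)."""
    if not VERSION_RE.fullmatch(version):
        raise ValueError("expected MAJOR.MINOR.PATCH, got %r" % version)
    message = "REL: " + version
    return [
        ["git", "commit", "-m", message, "--allow-empty"],
        # -m supplies the same message as the commit, non-interactively.
        ["git", "tag", "-a", version, "-m", message],
        ["git", "push", "origin", branch],
        # Run only if everything builds and tests cleanly.
        ["make", "release"],
    ]


if __name__ == "__main__":
    for cmd in release_commands("0.2.1"):
        print(" ".join(shlex.quote(part) for part in cmd))
```

Note that `git push origin master` does not push tags by itself. If the tag also needs to reach the remote, `git push origin 0.2.1` or `git push --follow-tags` does that.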

Then update https://github.com/conda-forge/spylon-kernel-feedstock.