Pipelined Relational Query Language, pronounced "Prequel".

PRQL is a modern language for transforming data — a simple, powerful, pipelined SQL replacement. Like SQL, it's readable, explicit and declarative. Unlike SQL, it forms a logical pipeline of transformations, and supports abstractions such as variables and functions. It can be used with any database that uses SQL, since it compiles to SQL.
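The "logical pipeline of transformations" idea can be sketched in plain Python. This is only an illustration of the concept, not how PRQL is implemented: the `tracks` rows and the helper names (`filter_rows`, `aggregate`, `pipeline`) are all hypothetical. Each step takes the previous step's rows and returns new rows.

```python
from functools import reduce

# Hypothetical in-memory "table"; rows and column names are invented.
tracks = [
    {"artist": "Bob Marley", "plays": 120, "length": 215},
    {"artist": "Bob Marley", "plays": 80, "length": 187},
    {"artist": "Nina Simone", "plays": 95, "length": 240},
]


def filter_rows(predicate):
    """Return a step that keeps only the rows matching `predicate`."""
    return lambda rows: [row for row in rows if predicate(row)]


def aggregate(**columns):
    """Return a step that reduces all rows to a single summary row."""
    return lambda rows: [{name: fn(rows) for name, fn in columns.items()}]


def pipeline(rows, *steps):
    """Feed `rows` through each step in order, one transformation per step."""
    return reduce(lambda current, step: step(current), steps, rows)


result = pipeline(
    tracks,
    filter_rows(lambda r: r["artist"] == "Bob Marley"),
    aggregate(
        plays=lambda rows: sum(r["plays"] for r in rows),
        longest=lambda rows: max(r["length"] for r in rows),
        shortest=lambda rows: min(r["length"] for r in rows),
    ),
)
print(result)
```

Because every step has the same shape (rows in, rows out), steps can be added, removed, or reordered without rewriting the rest of the query, which is the property the pipelined syntax is aiming for.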

PRQL can be as simple as:

```prql
from tracks
filter artist == "Bob Marley"  # Each line transforms the previous result
aggregate {                    # `aggregate` reduces each column to a value
  plays    = sum plays,
  longest  = max length,
  shortest = min length,       # Trailing commas are allowed
}
```

Here's a fuller example of the language:

```prql
from employees
filter start_date > @2021-01-01               # Clear date syntax
derive {                                      # `derive` adds columns / variables
  gross_salary = salary + (tax ?? 0),         # Terse coalesce
  gross_cost = gross_salary + benefits_cost,  # Variables can use other variables
}
filter gross_cost > 0
group {title, country} (                      # `group` runs a pipeline over each group
  aggregate {                                 # `aggregate` reduces each group to a value
    average gross_salary,
    sum_gross_cost = sum gross_cost,          # `=` sets a column name
  }
)
filter sum_gross_cost > 100_000               # `filter` replaces both of SQL's `WHERE` & `HAVING`
derive id = f"{title}_{country}"              # F-strings like Python
derive country_code = s"LEFT(country, 2)"     # S-strings allow using SQL as an escape hatch
sort {sum_gross_cost, -country}               # `-country` means descending order
take 1..20                                    # Range expressions (also valid here as `take 20`)
```

For more on the language, more examples, and comparisons with SQL, visit prql-lang.org. To experiment with PRQL in the browser, check out PRQL Playground.
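The fuller example notes that `filter` replaces both of SQL's `WHERE` and `HAVING`. As a rough illustration, the Python sketch below runs hand-written SQL against an in-memory SQLite table. The SQL is an approximation written for this example, not actual compiler output, and the table contents are invented. It is also simplified: the date filter and the `id` / `country_code` columns are left out. The filter before `group` becomes `WHERE`, and the filter after `aggregate` becomes `HAVING`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE employees ("
    "title TEXT, country TEXT, salary REAL, tax REAL, benefits_cost REAL)"
)
# Hypothetical rows, chosen so that each filter removes something.
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?, ?, ?)",
    [
        ("Engineer", "US", 90_000, 10_000, 20_000),
        ("Engineer", "US", 80_000, None, 15_000),  # NULL tax is coalesced to 0
        ("Analyst", "DE", 40_000, 5_000, 5_000),
        ("Analyst", "DE", 0, None, 0),  # gross_cost is 0, removed by WHERE
    ],
)

# Hand-written SQL in the spirit of the PRQL query (an assumption, not prqlc output).
sql = """
WITH derived AS (
    SELECT
        title,
        country,
        salary + COALESCE(tax, 0) AS gross_salary,
        salary + COALESCE(tax, 0) + benefits_cost AS gross_cost
    FROM employees
)
SELECT
    title,
    country,
    AVG(gross_salary) AS avg_gross_salary,
    SUM(gross_cost) AS sum_gross_cost
FROM derived
WHERE gross_cost > 0                 -- `filter` before `group`
GROUP BY title, country
HAVING SUM(gross_cost) > 100000      -- `filter` after `aggregate`
ORDER BY sum_gross_cost, country DESC
LIMIT 20
"""

rows = conn.execute(sql).fetchall()
for row in rows:
    print(row)
```

In the SQL, the author has to know which clause a condition belongs in. In the PRQL version, the position of `filter` in the pipeline carries that information.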

PRQL is being actively developed by a growing community. It's ready to use by the intrepid, either with our supported integrations, or within your own tools, using one of our supported language bindings.

PRQL still has some bugs and some missing features, and is probably only ready to be rolled out to non-technical teams for fairly simple queries.

In particular, we're working on a new resolver, which will let us squash many bugs and simplify our code a lot. It'll also let us scale the language without scaling the complexity of the compiler.

While we work on that, we're also focusing on:

- Ensuring our supported features feel extremely robust; resolving any priority bugs. As more folks have started using PRQL, we've had more bug reports, which is good news but also gives us more to work on.

- Filling remaining feature gaps, so that PRQL is possible to use for almost all standard SQL queries.

- Expanding our set of supported features — we've recently added experimental support for modules / multi-file projects, and for auto-formatting.

We're also spending time thinking about:

- Making it really easy to start using PRQL. We're doing that by building integrations with tools that folks already use; for example our VS Code extension & Jupyter integration. If there are tools you're familiar with that you think would be open to integrating with PRQL, please let us know in an issue.

- Whether all our initial decisions were correct, for example how we handle window functions outside of a `window` transform.

- Making it easier to contribute to the compiler. We have a wide group of contributors to the project, but contributions to the compiler itself are quite concentrated. We're keen to expand this; see #1840 for feedback. Some suggestions on starter issues are below.

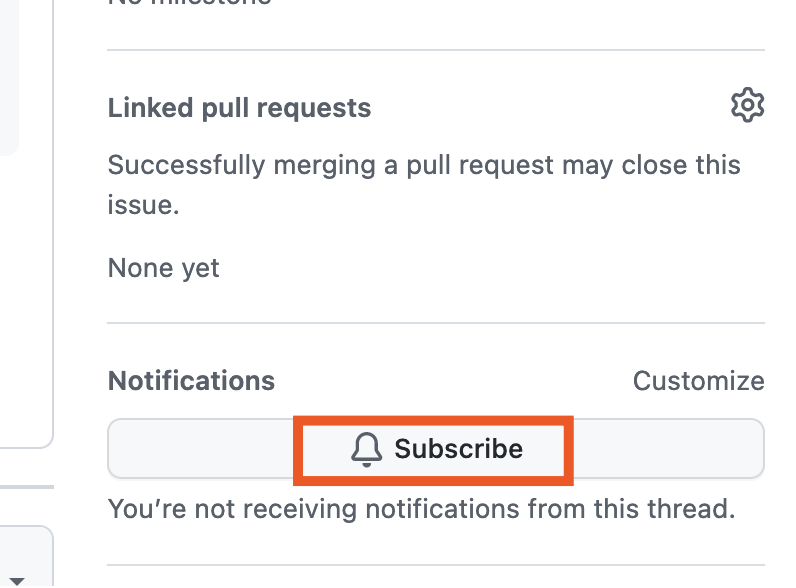

To stay in touch with PRQL:

- Follow us on Twitter

- Join us on Discord

- Star this repo

- Contribute — join us in building PRQL, through writing code (send us your use-cases!), or inspiring others to use it.

  - See the development documentation for PRQL. It's easy to get started: the project can be built in a couple of commands, and we're a really friendly community!

  - For those who might be interested in contributing to the code now, check out issues with the good first issue label. Always feel free to ask questions or open a draft PR.

- PRQL Playground — experiment with PRQL in the browser.

- PRQL Book — the language documentation.

- Jupyter magic — run PRQL in Jupyter, either against a DB, or a Pandas DataFrame / CSV / Parquet file through DuckDB.

- pyprql Docs — the pyprql documentation, the Python bindings to PRQL, including Jupyter magic.

- PRQL VS Code extension

- prql-js — JavaScript bindings for PRQL.

This repo is composed of:

- prqlc: the compiler, written in Rust, whose main role is to compile PRQL into SQL. It also contains the CLI and bindings for various languages.

- web — our web content: the Book, Website, and Playground.

It also contains our testing / CI infrastructure and development tools. Check out our development docs for more details.

Many thanks to those who've made our progress possible: