This is the official implementation of the MCUNet series.

If you are interested in getting updates, please sign up here to get notified!

- (2024/03) We release a new demo video of On-Device Training Under 256KB Memory.

- (2023/10) Tiny Machine Learning: Progress and Futures [Feature] appears at IEEE CAS Magazine.

- (2022/12) We simplified the `net_id` of models (new version: `mcunet-in0`, `mcunet-vww1`, etc.) for an upcoming review paper (stay tuned!).

- (2022/10) Our new work On-Device Training Under 256KB Memory is highlighted on the MIT homepage!

- (2022/09) Our new work On-Device Training Under 256KB Memory is accepted to NeurIPS 2022! It enables tiny on-device training for IoT devices.

- (2022/08) We release the source code of TinyEngine in this repo. Please take a look!

- (2022/08) Our new course on TinyML and Efficient Deep Learning will be released soon in September 2022: efficientml.ai.

- (2022/07) We also include the person detection model used in the video demo above. We will also include the deployment code in TinyEngine release.

- (2022/06) We refactor the MCUNet repo as a standalone repo (previous repo: https://github.com/mit-han-lab/tinyml)

- (2021/10) MCUNetV2 is accepted to NeurIPS 2021: https://arxiv.org/abs/2110.15352 !

- (2020/10) MCUNet is accepted to NeurIPS 2020 as spotlight: https://arxiv.org/abs/2007.10319 !

- Our projects are covered by: MIT News, MIT News (v2), WIRED, Morning Brew, Stacey on IoT, Analytics Insight, Techable, etc.

Microcontrollers are low-cost, low-power hardware that is widely deployed across a broad range of applications.

But the tight memory budget (50,000x smaller than GPUs) makes deep learning deployment difficult.

MCUNet is a system-algorithm co-design framework for tiny deep learning on microcontrollers. It consists of TinyNAS and TinyEngine. They are co-designed to fit the tight memory budgets.

With system-algorithm co-design, we can significantly improve the deep learning performance on the same tiny memory budget.

Our TinyEngine inference engine can serve as infrastructure for MCU-based AI applications. Compared to existing libraries like TF-Lite Micro, CMSIS-NN, and MicroTVM, it improves inference speed by 1.5-3x and reduces peak memory by 2.7-4.8x.

You can build the pre-trained PyTorch fp32 model or the int8 quantized model in TF-Lite format.

from mcunet.model_zoo import net_id_list, build_model, download_tflite

print(net_id_list) # the list of models in the model zoo

# pytorch fp32 model

model, image_size, description = build_model(net_id="mcunet-in3", pretrained=True) # you can replace net_id with any other option from net_id_list

# download tflite file to tflite_path

tflite_path = download_tflite(net_id="mcunet-in3")

To evaluate the accuracy of PyTorch fp32 models, run:

python eval_torch.py --net_id mcunet-in2 --dataset {imagenet/vww} --data-dir PATH/TO/DATA/val

To evaluate the accuracy of TF-Lite int8 models, run:

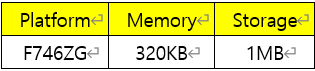

python eval_tflite.py --net_id mcunet-in2 --dataset {imagenet/vww} --data-dir PATH/TO/DATA/val

- Note that all the latency, SRAM, and Flash usage are profiled with TinyEngine on STM32F746.

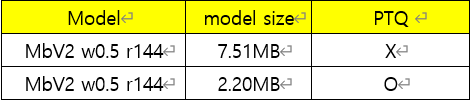

- Here we only provide the `int8` quantized models. `int4` quantized models (as shown in the paper) can further push the accuracy-memory trade-off, but lack general format support.

- For accuracy (top-1, top-5), we report the accuracy of `fp32`/`int8` models respectively.
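As a reminder of what the top-1 and top-5 columns measure, here is a minimal, dependency-free sketch of top-k accuracy. The function name `topk_accuracy` and the toy scores are illustrative assumptions; this is not the code used by `eval_torch.py` or `eval_tflite.py`.

```python
from typing import Sequence


def topk_accuracy(scores: Sequence[Sequence[float]],
                  labels: Sequence[int],
                  k: int = 1) -> float:
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    if not labels:
        raise ValueError("need at least one sample")
    hits = 0
    for row, label in zip(scores, labels):
        # Indices of the k largest scores for this sample.
        topk = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        hits += label in topk
    return hits / len(labels)


# Toy example: 3 samples, 4 classes (made-up scores, not model outputs).
scores = [
    [0.1, 0.7, 0.1, 0.1],  # highest score: class 1
    [0.4, 0.3, 0.2, 0.1],  # highest score: class 0, class 1 is second
    [0.1, 0.2, 0.3, 0.4],  # highest score: class 3
]
labels = [1, 1, 0]

top1 = topk_accuracy(scores, labels, k=1)
top2 = topk_accuracy(scores, labels, k=2)
print(f"top-1: {top1:.3f}, top-2: {top2:.3f}")
```

Top-5 is the same computation with `k=5`; it is always at least as high as top-1 because the top-1 prediction is included in the top-5 set.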

The ImageNet model list:

| net_id | MACs | #Params | SRAM | Flash | Res. | Top-1 (fp32/int8) | Top-5 (fp32/int8) |
|---|---|---|---|---|---|---|---|
| # baseline models | | | | | | | |
| mbv2-w0.35 | 23.5M | 0.75M | 308kB | 862kB | 144 | 49.7%/49.0% | 74.6%/73.8% |
| proxyless-w0.3 | 38.3M | 0.75M | 292kB | 892kB | 176 | 57.0%/56.2% | 80.2%/79.7% |
| # mcunet models | | | | | | | |
| mcunet-in0 | 6.4M | 0.75M | 266kB | 889kB | 48 | 41.5%/40.4% | 66.3%/65.2% |
| mcunet-in1 | 12.8M | 0.64M | 307kB | 992kB | 96 | 51.5%/49.9% | 75.5%/74.1% |
| mcunet-in2 | 67.3M | 0.73M | 242kB | 878kB | 160 | 60.9%/60.3% | 83.3%/82.6% |
| mcunet-in3 | 81.8M | 0.74M | 293kB | 897kB | 176 | 62.2%/61.8% | 84.5%/84.2% |
| mcunet-in4 | 125.9M | 1.73M | 456kB | 1876kB | 160 | 68.4%/68.0% | 88.4%/88.1% |
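When choosing a model for a particular board, one way to read the ImageNet table is to filter by the SRAM and Flash budget and take the most accurate entry that fits. The sketch below does this with the numbers copied from the table above. The names `ModelEntry` and `best_fit` and the example budgets are illustrative assumptions; they are not part of the `mcunet` package.

```python
from typing import List, NamedTuple, Optional


class ModelEntry(NamedTuple):
    net_id: str
    sram_kb: int      # peak SRAM in kB, from the table
    flash_kb: int     # Flash usage in kB, from the table
    top1_int8: float  # int8 top-1 accuracy in percent, from the table


# Values copied from the ImageNet model list above.
IMAGENET_MODELS: List[ModelEntry] = [
    ModelEntry("mbv2-w0.35", 308, 862, 49.0),
    ModelEntry("proxyless-w0.3", 292, 892, 56.2),
    ModelEntry("mcunet-in0", 266, 889, 40.4),
    ModelEntry("mcunet-in1", 307, 992, 49.9),
    ModelEntry("mcunet-in2", 242, 878, 60.3),
    ModelEntry("mcunet-in3", 293, 897, 61.8),
    ModelEntry("mcunet-in4", 456, 1876, 68.0),
]


def best_fit(models: List[ModelEntry],
             sram_budget_kb: int,
             flash_budget_kb: int) -> Optional[ModelEntry]:
    """Return the most accurate model that fits both budgets, or None if none fits."""
    fitting = [
        m for m in models
        if m.sram_kb <= sram_budget_kb and m.flash_kb <= flash_budget_kb
    ]
    return max(fitting, key=lambda m: m.top1_int8, default=None)


# Illustrative budgets (assumed values, chosen only for the example).
print(best_fit(IMAGENET_MODELS, sram_budget_kb=320, flash_budget_kb=1024))
print(best_fit(IMAGENET_MODELS, sram_budget_kb=256, flash_budget_kb=1024))
```

The returned `net_id` can then be passed to `build_model` or `download_tflite` as in the model zoo snippet earlier in this README. The comparison uses the kB figures exactly as listed in the table.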

The VWW model list:

Note that the VWW dataset might be hard to prepare. You can download our pre-built minival set from here, around 380MB.

| net_id | MACs | #Params | SRAM | Flash | Resolution | Top-1 (fp32/int8) |
|---|---|---|---|---|---|---|
| mcunet-vww0 | 6.0M | 0.37M | 146kB | 617kB | 64 | 87.4%/87.3% |
| mcunet-vww1 | 11.6M | 0.43M | 162kB | 689kB | 80 | 88.9%/88.9% |
| mcunet-vww2 | 55.8M | 0.64M | 311kB | 897kB | 144 | 91.7%/91.8% |

For TF-Lite int8 models, we do not use quantization-aware training (QAT), so some results are slightly lower than the paper numbers.
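To give some intuition for why quantizing without QAT can cost a little accuracy, here is a generic sketch of post-training affine int8 quantization of a single tensor. It is an assumption for illustration only: the helper names `quantize_int8` and `dequantize` are hypothetical, and the scheme is not necessarily the one used to produce the TF-Lite files in this repo.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float, int]:
    """Affine per-tensor quantization to int8. Returns (q, scale, zero_point)."""
    # The representable range must contain 0 so that zero maps to an integer.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    # Fall back to scale 1.0 when all inputs are zero (avoids division by zero).
    scale = (hi - lo) / 255.0 or 1.0
    zero_point = round(-128 - lo / scale)
    # Round to the nearest step, shift by the zero point, clip to the int8 range.
    q = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Map int8 codes back to approximate float values."""
    return [(x - zero_point) * scale for x in q]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]  # made-up values
q, scale, zero_point = quantize_int8(weights)
restored = dequantize(q, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, zero_point, max_error)
```

Each value is reconstructed with an error on the order of one quantization step (`scale`). A model trained in fp32 never saw this rounding during training, which is the gap QAT is designed to close.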

We also share the person detection model used in the demo. To visualize the model's prediction on a sample image, please run the following command:

python eval_det.py

It will visualize the prediction here: assets/sample_images/person_det_vis.jpg.

The model takes in a small input resolution of 128x160 to reduce memory usage. It does not achieve state-of-the-art performance due to the limited image and model size but should provide decent performance for tinyML applications (please check the demo for a video recording). We will also release the deployment code in the upcoming TinyEngine release.
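The effect of input resolution on memory can be seen with a quick back-of-the-envelope calculation of the input buffer size alone. The sketch below assumes a 3-channel image stored as one byte per value (int8), and uses 224x224 purely as a comparison point; both are assumptions for illustration, not figures stated in this repo.

```python
def input_bytes(height: int, width: int,
                channels: int = 3, bytes_per_value: int = 1) -> int:
    """Size in bytes of a dense image tensor (assumed layout: H x W x C)."""
    return height * width * channels * bytes_per_value


small = input_bytes(128, 160)  # the detection model's input resolution
large = input_bytes(224, 224)  # assumed comparison resolution
print(f"128x160: {small} bytes ({small / 1024:.0f} KiB)")
print(f"224x224: {large} bytes ({large / 1024:.0f} KiB)")
print(f"ratio: {large / small:.2f}x")
```

This counts only the input image. Intermediate activations also scale with resolution, so the saving in peak memory is typically larger than the input buffer alone suggests.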

- Python 3.6+

- PyTorch 1.4.0+

- TensorFlow 1.15 (if you want to test TF-Lite models; CPU support only)

We thank MIT-IBM Watson AI Lab, Intel, Amazon, SONY, Qualcomm, NSF for supporting this research.

If you find the project helpful, please consider citing our paper:

@article{lin2020mcunet,

title={Mcunet: Tiny deep learning on iot devices},

author={Lin, Ji and Chen, Wei-Ming and Lin, Yujun and Gan, Chuang and Han, Song},

journal={Advances in Neural Information Processing Systems},

volume={33},

year={2020}

}

@inproceedings{lin2021mcunetv2,

title={MCUNetV2: Memory-Efficient Patch-based Inference for Tiny Deep Learning},

author={Lin, Ji and Chen, Wei-Ming and Cai, Han and Gan, Chuang and Han, Song},

booktitle={Annual Conference on Neural Information Processing Systems (NeurIPS)},

year={2021}

}

@article{lin2022ondevice,

title = {On-Device Training Under 256KB Memory},

author = {Lin, Ji and Zhu, Ligeng and Chen, Wei-Ming and Wang, Wei-Chen and Gan, Chuang and Han, Song},

journal = {arXiv:2206.15472 [cs]},

url = {https://arxiv.org/abs/2206.15472},

year = {2022}

}

On-Device Training Under 256KB Memory (NeurIPS'22)

TinyTL: Reduce Memory, Not Parameters for Efficient On-Device Learning (NeurIPS'20)

Once for All: Train One Network and Specialize it for Efficient Deployment (ICLR'20)

ProxylessNAS: Direct Neural Architecture Search on Target Task and Hardware (ICLR'19)

AutoML for Architecting Efficient and Specialized Neural Networks (IEEE Micro)

AMC: AutoML for Model Compression and Acceleration on Mobile Devices (ECCV'18)

HAQ: Hardware-Aware Automated Quantization (CVPR'19, oral)