Looks like there is another project we should look into for inspiration/collaboration.

https://fenicsproject.org/

FEniCS is a popular open-source (LGPLv3) computing platform for solving partial differential equations (PDEs). FEniCS enables users to quickly translate scientific models into efficient finite element code. With the high-level Python and C++ interfaces to FEniCS, it is easy to get started, but FEniCS offers also powerful capabilities for more experienced programmers. FEniCS runs on a multitude of platforms ranging from laptops to high-performance clusters.

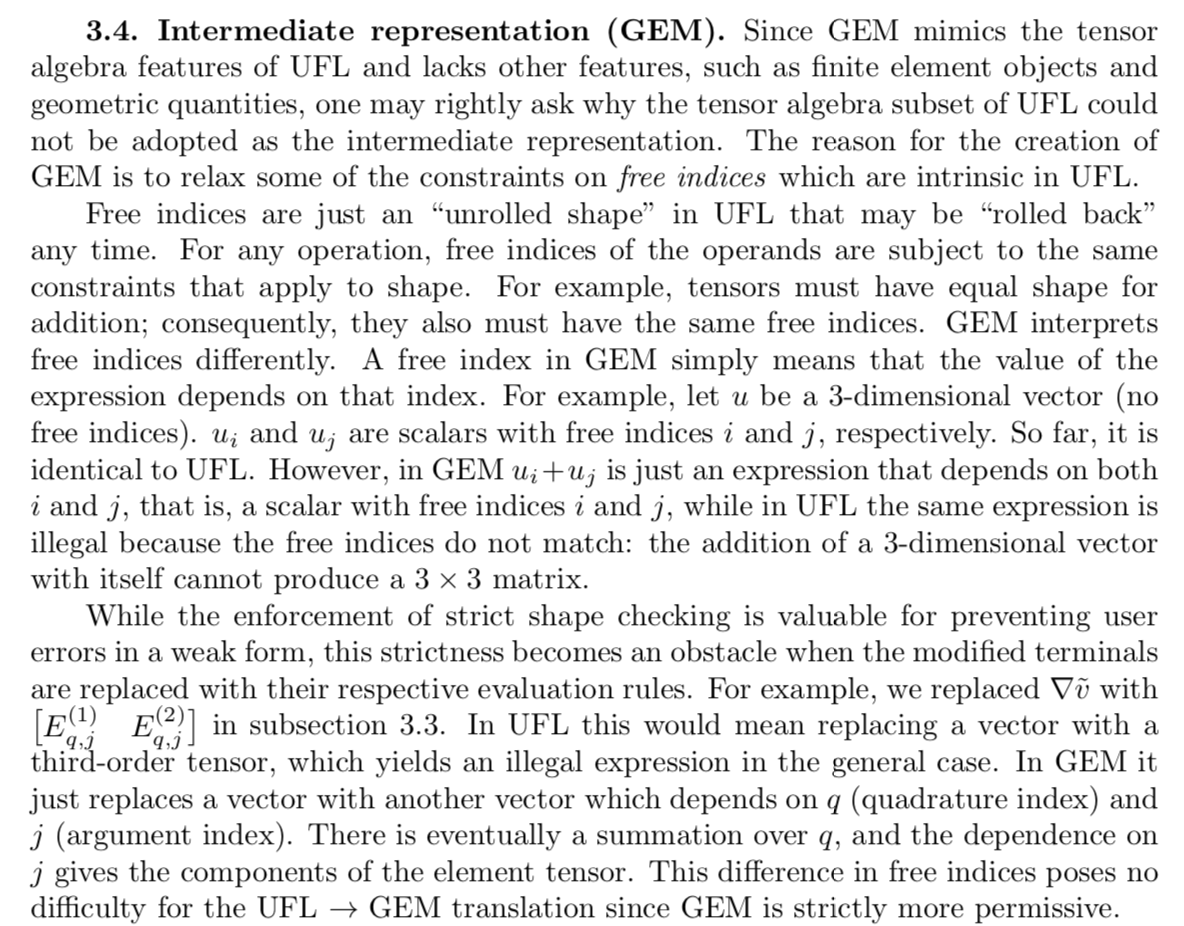

In their recent paper "TSFC: a structure-preserving form compiler", they outline a process similar to ours. In particular, I am interested in how their internal forms differ from ours. Here is an overview of that part from their paper:

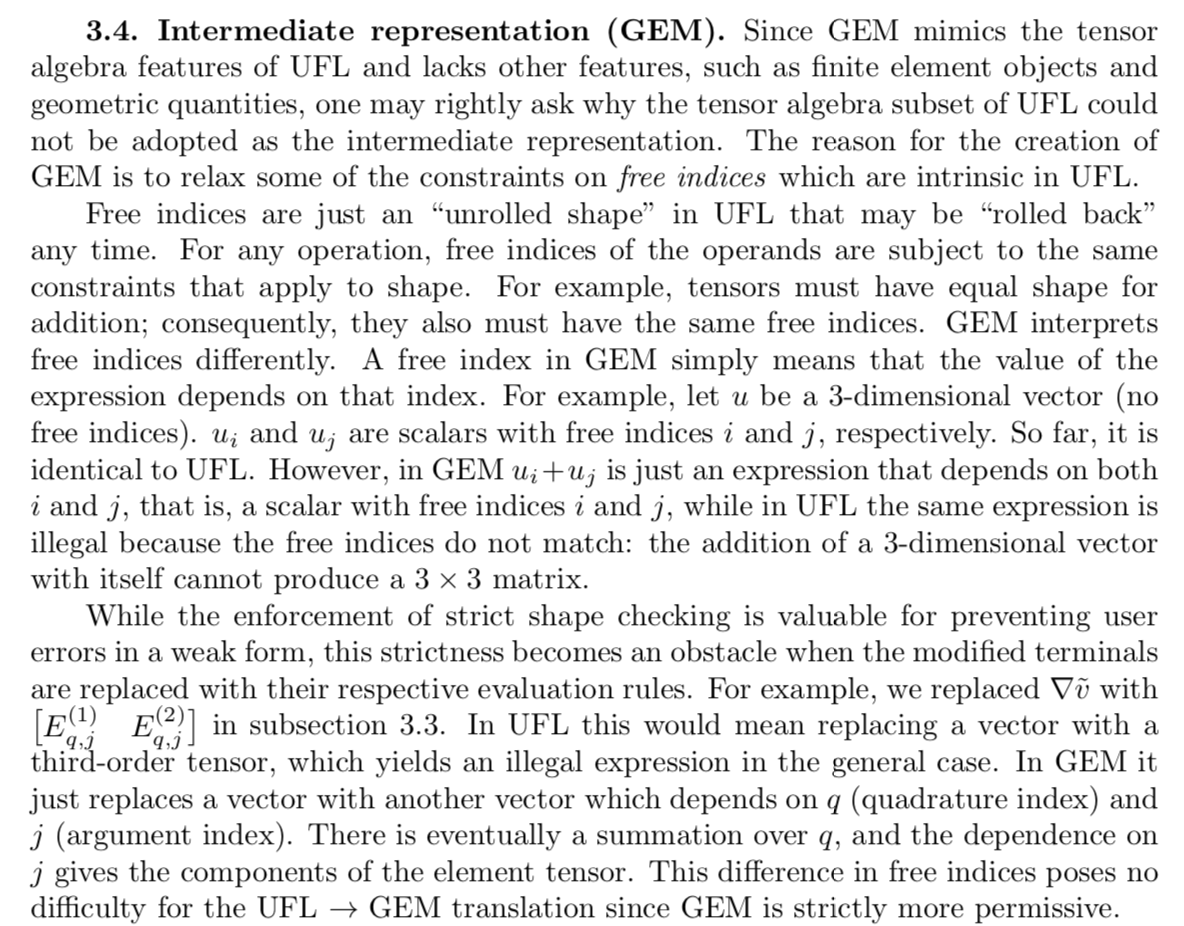

And then later down skipping some details I don't understand about PDEs:

This is actually pretty cool, because the idea of "free indices" is one I have to wrestle with in the compilation currently. The basic concept in our system is that we start with a lambda calculus like form of length and indexing. We call this a Sequence, because it corresponds to the Python idea of a sequence. So we start with a form that is a Sequence that has a function, that should return another Sequence or a Scalar. How do we actually compile this?

Well let's look at the code that turns the a Sequence into some AST statements that creates a NumPy array: https://github.com/Quansight-Labs/uarray/blob/ec8be30c7ec0097989661ec8d8d958d56e9fc45f/uarray/uarray/ast.py#L208-L284

We are basically generating code that looks like this:

# only create array if `alloc` is true

array = np.array(shape)

for i in range(length):

result = getitem(i)

array[i] = resultWe are calling the getitem function that defines the sequence with an AST form that represents the index i. So we now have some "Free indices".

Let me give a more explicit example, that isn't tied to code generation. This notebook shows how we take an expression equivalent to np.outer(np.arange(10), np.arange(5)) and index it with two unbound variables, i and j. Now we effectively have two free indices on this form.

The free indices idea seems very related to implemented einsum generally, because einsum is really about associating names to the dimensions of multiple arrays, and the result array, and the algorithm just falls out of that (along with choosing your addition and multiplication operations).