- This is the implementation for HRNet + OCR.

- The PyTorch 1.1 version is available here.

- The PyTorch 0.4.1 version is available here.

- [2021/05/04] We rephrase the OCR approach as Segmentation Transformer pdf. We will provide the updated implementation soon.

- [2021/02/16] Based on the PaddleClas ImageNet pretrained weights, we achieve 83.22% on Cityscapes val, 59.62% on PASCAL-Context val (new SOTA), 45.20% on COCO-Stuff val (new SOTA), 58.21% on LIP val and 47.98% on ADE20K val. Please check out openseg.pytorch for more details.

- [2020/08/16] MMSegmentation has supported our HRNet + OCR.

- [2020/07/20] The researchers from AInnovation have achieved Rank#1 on ADE20K Leaderboard via training our HRNet + OCR with a semi-supervised learning scheme. More details are in their Technical Report.

- [2020/07/09] Our paper is accepted by ECCV 2020: Object-Contextual Representations for Semantic Segmentation. Notably, the researchers from Nvidia set a new state-of-the-art performance on the Cityscapes leaderboard: 85.4%, by combining our HRNet + OCR with a new hierarchical multi-scale attention scheme.

- [2020/03/13] Our paper is accepted by TPAMI: Deep High-Resolution Representation Learning for Visual Recognition.

- HRNet + OCR + SegFix: Rank #1 (84.5) in Cityscapes leaderboard. OCR: object contextual representations pdf. HRNet + OCR is reproduced here.

- Thanks to Google and UIUC researchers. A modified HRNet combined with semantic and instance multi-scale context achieves a SOTA panoptic segmentation result on the Mapillary Vistas challenge. See the paper.

- Small HRNet models for Cityscapes segmentation. Superior to MobileNetV2Plus ....

- Rank #1 (83.7) in Cityscapes leaderboard. HRNet combined with an extension of object context

- PyTorch v1.1 and the official Sync-BN are supported. We have reproduced the Cityscapes results on the new codebase. Please check the pytorch-v1.1 branch.

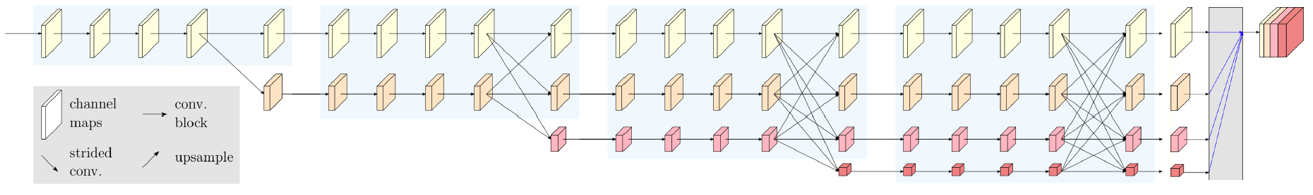

This is the official code of high-resolution representations for Semantic Segmentation. We augment HRNet with a very simple segmentation head, shown in the figure below. We aggregate the output representations at four different resolutions and then use a 1x1 convolution to fuse them. The fused representation is fed into the classifier. We evaluate our methods on three datasets: Cityscapes, PASCAL-Context and LIP.
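The head described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the repository's implementation: feature maps are nested lists indexed `[channel][row][column]`, nearest-neighbour repetition stands in for whatever upsampling the real model uses, the channel widths and weights are toy values, and the function names are hypothetical.

```python
def upsample_nearest(fmap, factor):
    """Repeat every pixel of a [C][H][W] nested list `factor` times along H and W."""
    return [
        [[v for v in row for _ in range(factor)] for row in chan for _ in range(factor)]
        for chan in fmap
    ]


def conv1x1(fmap, weight):
    """Apply a 1x1 convolution, i.e. a per-pixel linear map over channels.

    `weight` has shape [C_out][C_in]; the bias term is omitted for brevity.
    """
    height, width = len(fmap[0]), len(fmap[0][0])
    return [
        [[sum(w * fmap[c][y][x] for c, w in enumerate(w_row)) for x in range(width)]
         for y in range(height)]
        for w_row in weight
    ]


def segmentation_head(branches, fuse_weight, cls_weight):
    """Upsample all branches to the first branch's size, concatenate, fuse, classify."""
    target_h = len(branches[0][0])
    stacked = []
    for fmap in branches:
        stacked.extend(upsample_nearest(fmap, target_h // len(fmap[0])))
    fused = conv1x1(stacked, fuse_weight)   # 1x1 convolution that mixes the branches
    return conv1x1(fused, cls_weight)       # classifier: one score map per class


def constant_map(channels, size, value):
    """Toy feature map filled with a single value."""
    return [[[value] * size for _ in range(size)] for _ in range(channels)]


# Four branches at 8x8, 4x4, 2x2 and 1x1 with toy channel widths (assumed values).
widths = [1, 2, 4, 8]
branches = [constant_map(c, 8 // 2 ** i, float(i + 1)) for i, c in enumerate(widths)]
fuse_weight = [[1.0] * sum(widths) for _ in range(3)]   # 15 input -> 3 fused channels
cls_weight = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]         # 3 fused -> 2 classes
logits = segmentation_head(branches, fuse_weight, cls_weight)
print(len(logits), len(logits[0]), len(logits[0][0]))
```

The output keeps the resolution of the highest-resolution branch, which is the point of aggregating all four branches before classification.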

Besides, we further combine HRNet with Object Contextual Representation and achieve higher performance on the three datasets. The code of HRNet+OCR is contained in this branch. We illustrate the overall framework of OCR in the Figure and the equivalent Transformer pipelines:

The models are initialized with weights pretrained on ImageNet. "Paddle" means the results are based on PaddleClas pretrained HRNet models. You can download the pretrained models from https://github.com/HRNet/HRNet-Image-Classification. One small difference: we use align_corners = True for upsampling in HRNet.
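To make the `align_corners` remark concrete, here is a minimal 1-D sketch of linear resampling under both settings. It is intended to mirror the coordinate conventions documented for `torch.nn.functional.interpolate`, but it is a hand-written illustration with a hypothetical function name, not code from this repository.

```python
def resize_linear_1d(values, out_size, align_corners):
    """Linearly resample a 1-D list to `out_size` samples.

    align_corners=True maps the first and last output samples exactly onto the
    first and last input samples. align_corners=False treats samples as pixel
    centers, so source coordinates near the ends are clamped.
    """
    n = len(values)
    out = []
    for i in range(out_size):
        if align_corners:
            src = i * (n - 1) / (out_size - 1) if out_size > 1 else 0.0
        else:
            src = (i + 0.5) * n / out_size - 0.5
            src = min(max(src, 0.0), float(n - 1))
        lo = int(src)
        hi = min(lo + 1, n - 1)
        frac = src - lo
        out.append(values[lo] * (1 - frac) + values[hi] * frac)
    return out


row = [0.0, 10.0, 20.0]
print(resize_linear_1d(row, 5, align_corners=True))
print(resize_linear_1d(row, 5, align_corners=False))
```

The two settings place interior samples at different positions, so weights trained with one setting may lose accuracy if evaluated with the other.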

- Performance on the Cityscapes dataset. The models are trained and tested with the input size of 512x1024 and 1024x2048 respectively. If multi-scale testing is used, we adopt scales: 0.5,0.75,1.0,1.25,1.5,1.75.

| model | Train Set | Test Set | OHEM | Multi-scale | Flip | mIoU | Link |
|---|---|---|---|---|---|---|---|
| HRNetV2-W48 | Train | Val | No | No | No | 80.9 | Github/BaiduYun(Access Code:pmix) |
| HRNetV2-W48 + OCR | Train | Val | No | No | No | 81.6 | Github/BaiduYun(Access Code:fa6i) |
| HRNetV2-W48 + OCR | Train + Val | Test | No | Yes | Yes | 82.3 | Github/BaiduYun(Access Code:ycrk) |
| HRNetV2-W48 (Paddle) | Train | Val | No | No | No | 81.6 | --- |
| HRNetV2-W48 + OCR (Paddle) | Train | Val | No | No | No | --- | --- |
| HRNetV2-W48 + OCR (Paddle) | Train + Val | Test | No | Yes | Yes | --- | --- |
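The "Multi-scale" and "Flip" columns refer to test-time augmentation. A rough sketch of the idea follows, assuming that per-class scores from each rescaled (and mirrored) input are resized back and averaged. The repository may combine scores differently, nearest-neighbour resizing is used here only to keep the example dependency-free, and every function name is hypothetical.

```python
def resize_nearest(fmap, out_h, out_w):
    """Nearest-neighbour resize of an [H][W] nested list."""
    in_h, in_w = len(fmap), len(fmap[0])
    return [[fmap[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
            for y in range(out_h)]


def hflip(fmap):
    """Mirror an [H][W] nested list left to right."""
    return [row[::-1] for row in fmap]


def multi_scale_flip_inference(model, image, scales, flip=True):
    """Average per-class score maps over rescaled (and optionally mirrored) inputs.

    `model` maps an [H][W] image to a list of K score maps of the same size.
    """
    h, w = len(image), len(image[0])
    total = None
    for s in scales:
        scaled = resize_nearest(image, max(1, round(h * s)), max(1, round(w * s)))
        scores = model(scaled)
        if flip:
            # Predict on the mirrored input, then mirror the scores back.
            mirrored = [hflip(m) for m in model(hflip(scaled))]
            scores = [[[(a + b) / 2 for a, b in zip(ra, rb)] for ra, rb in zip(ma, mb)]
                      for ma, mb in zip(scores, mirrored)]
        scores = [resize_nearest(m, h, w) for m in scores]
        if total is None:
            total = scores
        else:
            total = [[[a + b for a, b in zip(ra, rb)] for ra, rb in zip(ma, mb)]
                     for ma, mb in zip(total, scores)]
    return [[[v / len(scales) for v in row] for row in m] for m in total]


def toy_model(img):
    """Stand-in for a network: class 0 scores the pixel value, class 1 its complement."""
    return [img, [[1.0 - v for v in row] for row in img]]


image = [[0.0] * 4 + [1.0] * 4 for _ in range(4)]
result = multi_scale_flip_inference(toy_model, image, scales=[0.5, 1.0, 1.5])
print(result[0][0])
```

With a real network, the per-pixel label would be taken as the argmax over the averaged score maps.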

- Performance on the LIP dataset. The models are trained and tested with the input size of 473x473.

| model | OHEM | Multi-scale | Flip | mIoU | Link |
|---|---|---|---|---|---|
| HRNetV2-W48 | No | No | Yes | 55.83 | Github/BaiduYun(Access Code:fahi) |
| HRNetV2-W48 + OCR | No | No | Yes | 56.48 | Github/BaiduYun(Access Code:xex2) |
| HRNetV2-W48 (Paddle) | No | No | Yes | --- | --- |
| HRNetV2-W48 + OCR (Paddle) | No | No | Yes | --- | --- |

Note: Currently we can only reproduce the HRNet+OCR results on the LIP dataset with PyTorch 0.4.1.

- Performance on the PASCAL-Context dataset. The models are trained and tested with the input size of 520x520. If multi-scale testing is used, we adopt scales: 0.5,0.75,1.0,1.25,1.5,1.75,2.0 (the same as EncNet, DANet etc.).

| model | num classes | OHEM | Multi-scale | Flip | mIoU | Link |
|---|---|---|---|---|---|---|
| HRNetV2-W48 | 59 classes | No | Yes | Yes | 54.1 | Github/BaiduYun(Access Code:wz6v) |
| HRNetV2-W48 + OCR | 59 classes | No | Yes | Yes | 56.2 | Github/BaiduYun(Access Code:yyxh) |
| HRNetV2-W48 | 60 classes | No | Yes | Yes | 48.3 | OneDrive/BaiduYun(Access Code:9uf8) |
| HRNetV2-W48 + OCR | 60 classes | No | Yes | Yes | 50.1 | Github/BaiduYun(Access Code:gtkb) |
| HRNetV2-W48 (Paddle) | 59 classes | No | Yes | Yes | --- | --- |
| HRNetV2-W48 (Paddle) | 60 classes | No | Yes | Yes | --- | --- |
| HRNetV2-W48 + OCR (Paddle) | 59 classes | No | Yes | Yes | --- | --- |
| HRNetV2-W48 + OCR (Paddle) | 60 classes | No | Yes | Yes | --- | --- |

- Performance on the COCO-Stuff dataset. The models are trained and tested with the input size of 520x520. If multi-scale testing is used, we adopt scales: 0.5,0.75,1.0,1.25,1.5,1.75,2.0 (the same as EncNet, DANet etc.).

| model | OHEM | Multi-scale | Flip | mIoU | Link |
|---|---|---|---|---|---|
| HRNetV2-W48 | Yes | No | No | 36.2 | Github/BaiduYun(Access Code:92gw) |
| HRNetV2-W48 + OCR | Yes | No | No | 39.7 | Github/BaiduYun(Access Code:sjc4) |
| HRNetV2-W48 | Yes | Yes | Yes | 37.9 | Github/BaiduYun(Access Code:92gw) |
| HRNetV2-W48 + OCR | Yes | Yes | Yes | 40.6 | Github/BaiduYun(Access Code:sjc4) |
| HRNetV2-W48 (Paddle) | Yes | No | No | --- | --- |
| HRNetV2-W48 + OCR (Paddle) | Yes | No | No | --- | --- |
| HRNetV2-W48 (Paddle) | Yes | Yes | Yes | --- | --- |
| HRNetV2-W48 + OCR (Paddle) | Yes | Yes | Yes | --- | --- |

- Performance on the ADE20K dataset. The models are trained and tested with the input size of 520x520. If multi-scale testing is used, we adopt scales: 0.5,0.75,1.0,1.25,1.5,1.75,2.0 (the same as EncNet, DANet etc.).

| model | OHEM | Multi-scale | Flip | mIoU | Link |
|---|---|---|---|---|---|
| HRNetV2-W48 | Yes | No | No | 43.1 | Github/BaiduYun(Access Code:f6xf) |
| HRNetV2-W48 + OCR | Yes | No | No | 44.5 | Github/BaiduYun(Access Code:peg4) |
| HRNetV2-W48 | Yes | Yes | Yes | 44.2 | Github/BaiduYun(Access Code:f6xf) |
| HRNetV2-W48 + OCR | Yes | Yes | Yes | 45.5 | Github/BaiduYun(Access Code:peg4) |
| HRNetV2-W48 (Paddle) | Yes | No | No | --- | --- |
| HRNetV2-W48 + OCR (Paddle) | Yes | No | No | --- | --- |
| HRNetV2-W48 (Paddle) | Yes | Yes | Yes | --- | --- |
| HRNetV2-W48 + OCR (Paddle) | Yes | Yes | Yes | --- | --- |
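All tables above report mIoU (mean intersection over union). For readers unfamiliar with the metric, here is a small sketch of the standard definition computed from a confusion matrix. It is not the evaluation code of this repository, and details such as how ignored labels or absent classes are handled are assumptions.

```python
def mean_iou(confusion):
    """Mean intersection-over-union from a square confusion matrix.

    confusion[t][p] counts pixels whose true class is t and predicted class is p.
    Classes that never appear in either the labels or the predictions are skipped.
    """
    n = len(confusion)
    ious = []
    for c in range(n):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp                         # class c pixels that were missed
        fp = sum(confusion[r][c] for r in range(n)) - tp    # pixels wrongly assigned to c
        denom = tp + fp + fn
        if denom > 0:
            ious.append(tp / denom)
    return sum(ious) / len(ious)


# Two classes: IoU is 3 / 5 for class 0 and 5 / 7 for class 1.
print(round(100 * mean_iou([[3, 1], [1, 5]]), 2))
```

The tables express the value as a percentage, hence the factor of 100 in the example.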

- For the LIP dataset, install PyTorch=0.4.1 following the official instructions. For Cityscapes and PASCAL-Context, we use PyTorch=1.1.0.

- `git clone https://github.com/HRNet/HRNet-Semantic-Segmentation $SEG_ROOT`

- Install dependencies: `pip install -r requirements.txt`

If you want to train and evaluate our models on PASCAL-Context, you need to install details.

    pip install git+https://github.com/zhanghang1989/detail-api.git#subdirectory=PythonAPI

You need to download the Cityscapes, LIP and PASCAL-Context datasets.

Your directory tree should look like this:

    $SEG_ROOT/data
    ├── cityscapes
    │   ├── gtFine
    │   │   ├── test
    │   │   ├── train
    │   │   └── val
    │   └── leftImg8bit
    │       ├── test
    │       ├── train
    │       └── val
    ├── lip
    │   ├── TrainVal_images
    │   │   ├── train_images
    │   │   └── val_images
    │   └── TrainVal_parsing_annotations
    │       ├── train_segmentations
    │       ├── train_segmentations_reversed
    │       └── val_segmentations
    ├── pascal_ctx
    │   ├── common
    │   ├── PythonAPI
    │   ├── res
    │   └── VOCdevkit
    │       └── VOC2010
    ├── cocostuff
    │   ├── train
    │   │   ├── image
    │   │   └── label
    │   └── val
    │       ├── image
    │       └── label
    ├── ade20k
    │   ├── train
    │   │   ├── image
    │   │   └── label
    │   └── val
    │       ├── image
    │       └── label
    ├── list
    │   ├── cityscapes
    │   │   ├── test.lst
    │   │   ├── trainval.lst
    │   │   └── val.lst
    │   ├── lip
    │   │   ├── testvalList.txt
    │   │   ├── trainList.txt
    │   │   └── valList.txt

Note that the codebase supports both PyTorch 0.4.1 and 1.1.0, and they use different commands for training. In the following, we use $PY_CMD to denote the startup command for each version.

    # For PyTorch 0.4.1
    PY_CMD="python"
    # For PyTorch 1.1.0
    PY_CMD="python -m torch.distributed.launch --nproc_per_node=4"

For example, when training on Cityscapes we use PyTorch 1.1.0, so the command

    $PY_CMD tools/train.py --cfg experiments/cityscapes/seg_hrnet_ocr_w48_train_512x1024_sgd_lr1e-2_wd5e-4_bs_12_epoch484.yaml

indicates

    python -m torch.distributed.launch --nproc_per_node=4 tools/train.py --cfg experiments/cityscapes/seg_hrnet_ocr_w48_train_512x1024_sgd_lr1e-2_wd5e-4_bs_12_epoch484.yaml

Just specify the configuration file for tools/train.py.

For example, train the HRNet-W48 on Cityscapes with a batch size of 12 on 4 GPUs:

    $PY_CMD tools/train.py --cfg experiments/cityscapes/seg_hrnet_w48_train_512x1024_sgd_lr1e-2_wd5e-4_bs_12_epoch484.yaml

For example, train the HRNet-W48 + OCR on Cityscapes with a batch size of 12 on 4 GPUs:

    $PY_CMD tools/train.py --cfg experiments/cityscapes/seg_hrnet_ocr_w48_train_512x1024_sgd_lr1e-2_wd5e-4_bs_12_epoch484.yaml

Note that we only reproduce HRNet+OCR on the LIP dataset using PyTorch 0.4.1, so we recommend using PyTorch 0.4.1 if you want to train on the LIP dataset.
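If you launch training from a script rather than a shell, the choice between the two startup commands can be wrapped in a small helper. This is a sketch only: `build_train_command` is a hypothetical name, and the only facts taken from this README are the two launch prefixes and the `tools/train.py --cfg` invocation.

```python
def build_train_command(cfg_path, torch_version, gpus=4):
    """Return the training command as an argument list.

    For PyTorch 0.4.1 the plain interpreter is used; otherwise the script is
    started through torch.distributed.launch with one process per GPU.
    """
    if torch_version.startswith("0.4"):
        launcher = ["python"]
    else:
        launcher = ["python", "-m", "torch.distributed.launch",
                    "--nproc_per_node={}".format(gpus)]
    return launcher + ["tools/train.py", "--cfg", cfg_path]


cfg = ("experiments/cityscapes/"
       "seg_hrnet_ocr_w48_train_512x1024_sgd_lr1e-2_wd5e-4_bs_12_epoch484.yaml")
print(" ".join(build_train_command(cfg, "1.1.0")))
```

The returned list can be passed directly to `subprocess.run` from the repository root.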

For example, evaluating HRNet+OCR on the Cityscapes validation set with multi-scale and flip testing:

    python tools/test.py --cfg experiments/cityscapes/seg_hrnet_ocr_w48_train_512x1024_sgd_lr1e-2_wd5e-4_bs_12_epoch484.yaml \
                         TEST.MODEL_FILE hrnet_ocr_cs_8162_torch11.pth \
                         TEST.SCALE_LIST 0.5,0.75,1.0,1.25,1.5,1.75 \
                         TEST.FLIP_TEST True

Evaluating HRNet+OCR on the Cityscapes test set with multi-scale and flip testing:

    python tools/test.py --cfg experiments/cityscapes/seg_hrnet_ocr_w48_train_512x1024_sgd_lr1e-2_wd5e-4_bs_12_epoch484.yaml \
                         DATASET.TEST_SET list/cityscapes/test.lst \
                         TEST.MODEL_FILE hrnet_ocr_trainval_cs_8227_torch11.pth \
                         TEST.SCALE_LIST 0.5,0.75,1.0,1.25,1.5,1.75 \
                         TEST.FLIP_TEST True

Evaluating HRNet+OCR on the PASCAL-Context validation set with multi-scale and flip testing:

    python tools/test.py --cfg experiments/pascal_ctx/seg_hrnet_ocr_w48_cls59_520x520_sgd_lr1e-3_wd1e-4_bs_16_epoch200.yaml \
                         DATASET.TEST_SET testval \
                         TEST.MODEL_FILE hrnet_ocr_pascal_ctx_5618_torch11.pth \
                         TEST.SCALE_LIST 0.5,0.75,1.0,1.25,1.5,1.75,2.0 \
                         TEST.FLIP_TEST True

Evaluating HRNet+OCR on the LIP validation set with flip testing:

    python tools/test.py --cfg experiments/lip/seg_hrnet_w48_473x473_sgd_lr7e-3_wd5e-4_bs_40_epoch150.yaml \
                         DATASET.TEST_SET list/lip/testvalList.txt \
                         TEST.MODEL_FILE hrnet_ocr_lip_5648_torch04.pth \
                         TEST.FLIP_TEST True \
                         TEST.NUM_SAMPLES 0

Evaluating HRNet+OCR on the COCO-Stuff validation set with multi-scale and flip testing:

    python tools/test.py --cfg experiments/cocostuff/seg_hrnet_ocr_w48_520x520_ohem_sgd_lr1e-3_wd1e-4_bs_16_epoch110.yaml \
                         DATASET.TEST_SET list/cocostuff/testval.lst \
                         TEST.MODEL_FILE hrnet_ocr_cocostuff_3965_torch04.pth \
                         TEST.SCALE_LIST 0.5,0.75,1.0,1.25,1.5,1.75,2.0 \
                         TEST.MULTI_SCALE True TEST.FLIP_TEST True

Evaluating HRNet+OCR on the ADE20K validation set with multi-scale and flip testing:

    python tools/test.py --cfg experiments/ade20k/seg_hrnet_ocr_w48_520x520_ohem_sgd_lr2e-2_wd1e-4_bs_16_epoch120.yaml \
                         DATASET.TEST_SET list/ade20k/testval.lst \
                         TEST.MODEL_FILE hrnet_ocr_ade20k_4451_torch04.pth \
                         TEST.SCALE_LIST 0.5,0.75,1.0,1.25,1.5,1.75,2.0 \
                         TEST.MULTI_SCALE True TEST.FLIP_TEST True

If you find this work or code helpful in your research, please cite:

    @inproceedings{SunXLW19,
      title={Deep High-Resolution Representation Learning for Human Pose Estimation},
      author={Ke Sun and Bin Xiao and Dong Liu and Jingdong Wang},
      booktitle={CVPR},
      year={2019}
    }

    @article{WangSCJDZLMTWLX19,
      title={Deep High-Resolution Representation Learning for Visual Recognition},
      author={Jingdong Wang and Ke Sun and Tianheng Cheng and
              Borui Jiang and Chaorui Deng and Yang Zhao and Dong Liu and Yadong Mu and
              Mingkui Tan and Xinggang Wang and Wenyu Liu and Bin Xiao},
      journal={TPAMI},
      year={2019}
    }

    @inproceedings{YuanCW19,
      title={Object-Contextual Representations for Semantic Segmentation},
      author={Yuhui Yuan and Xilin Chen and Jingdong Wang},
      booktitle={ECCV},
      year={2020}
    }

[1] Deep High-Resolution Representation Learning for Visual Recognition. Jingdong Wang, Ke Sun, Tianheng Cheng, Borui Jiang, Chaorui Deng, Yang Zhao, Dong Liu, Yadong Mu, Mingkui Tan, Xinggang Wang, Wenyu Liu, Bin Xiao. Accepted by TPAMI. download

[2] Object-Contextual Representations for Semantic Segmentation. Yuhui Yuan, Xilin Chen, Jingdong Wang. download

We adopt sync-bn implemented by InplaceABN for PyTorch 0.4.1 experiments and the official sync-bn provided by PyTorch for PyTorch 1.1.0 experiments.

We adopt data preprocessing on the PASCAL-Context dataset, implemented by PASCAL API.