aliaksandrsiarohin / motion-cosegmentation

Reference code for "Motion-supervised Co-Part Segmentation" paper

License: Other

Hello @AliaksandrSiarohin!

Thanks for sharing such an awesome work.

I have tried the code using the following command

python ./motion-cosegmentation/part_swap.py --source_image ./final.jpeg --target_video ./crop.mp4 --checkpoint vox-first-order.pth.tar --config ./motion-cosegmentation/config/vox-256-sem-10segments.yaml --supervised --first_order_motion_model --swap_index 1,2,3,4,5,6,7,8,9,10,11,12,13,14,15

However, I am getting deformed outputs. I have attached the input video, crop.mp4, which was obtained with crop-video.py from one of your other repos. I have also attached 4 sample images used with the same video and their 4 deformed results. I made sure that crop.mp4 and test*.jpg are all 256x256.

face_swap_4_examples.zip

Can you please help me with this?

Hello Aliaksandr!

Thank you for your project!

I'm new to Python and was wondering whether your project has a .py file or function for whole-face swap.

And is there any documentation for the swap_index parameter? That would be awesome!

Thank you so much!

Hi, I tried training my own first order model network with a slight modification of the first order model code: basically just replacing one perceptual loss with another.

When I plug that new model into the notebook you provided in this repo, in the final part where supervised segmentation is used along with the first order model, I get the following:

However, when I use your pretrained first order model, I get the following, where the face is stretched across the entire target face.

The source hair is clearly the issue: only part of the face is visible in the source image, and that same part is missing from the mask. This doesn't seem to bother your model, though, which properly covers the entire target face. Do you have any intuition why this might be happening?

Thanks!

Hi,

Thanks for sharing nice work.

I downloaded the pretrained checkpoints from Google Drive, but they do not look like tar archives.

command

$ tar -xf vox-10segments.pth.tar

message (linux)

tar: This does not look like a tar archive

tar: Skipping to next header

tar: Exiting with failure status due to previous errors

message (mac)

tar: Error opening archive: Unrecognized archive format

FYI (linux)

$ tar --version

tar (GNU tar) 1.28

Copyright (C) 2014 Free Software Foundation, Inc.

License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>.

This is free software: you are free to change and redistribute it.

There is NO WARRANTY, to the extent permitted by law.

Written by John Gilmore and Jay Fenlason.

FYI (mac)

tar --version

bsdtar 3.3.2 - libarchive 3.3.2 zlib/1.2.11 liblzma/5.0.5 bz2lib/1.0.6

vox-15segments.pth.tar gave me the same error.

Could you check the files?

Has anyone found the same issue?
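The `.pth.tar` suffix appears to be a naming convention inherited from first-order-model, where checkpoints are written with `torch.save` and read back with `torch.load`, so `tar` is not expected to understand them. A minimal stdlib sketch for checking what a downloaded file actually is, assuming the usual header bytes (a ZIP archive for the `torch.save` format of PyTorch 1.6 and later, a pickle stream starting with byte `0x80` for the older format). It also flags an HTML page saved in place of the checkpoint, e.g. a Google Drive error page. `describe_checkpoint` is a hypothetical helper, not part of the repository:

```python
import tarfile
import zipfile
from pathlib import Path


def describe_checkpoint(path):
    """Guess the container format of a checkpoint file from its first bytes.

    Assumption: torch.save writes a ZIP archive since PyTorch 1.6 and a plain
    pickle stream (first byte 0x80) in older versions.
    """
    path = Path(path)
    with path.open("rb") as f:
        head = f.read(64)
    if zipfile.is_zipfile(path):
        return "zip"      # newer torch.save format: open with torch.load
    if head[:1] == b"\x80":
        return "pickle"   # legacy torch.save format: open with torch.load
    if head.lstrip().startswith(b"<"):
        return "html"     # probably an error page saved instead of the file
    if tarfile.is_tarfile(path):
        return "tar"      # a genuine tar archive: tar -xf would work
    return "unknown"


if __name__ == "__main__":
    import sys

    for name in sys.argv[1:]:
        print(name, describe_checkpoint(name))
```

A result of `zip` or `pickle` means the file is most likely intact and should be passed to `--checkpoint` as-is rather than extracted.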

Hey @AliaksandrSiarohin

I had a few beginner questions, can you please help me?

What is the difference between the vox-256-sem-5, vox-256-sem-10 and vox-256-sem-15 config files in terms of performance? My task is to swap the whole head (hair, eyes, nose, lips, ears, neck) from a source image to a driving video.

A new error when loading checkpoints:

TypeError Traceback (most recent call last)

in

3 reconstruction_module, segmentation_module = load_checkpoints(config='config/vox-256-sem-10segments.yaml',

4 checkpoint='/content/gdrive/My Drive/motion-supervised-co-segmentation/vox-10segments.pth.tar',

----> 5 blend_scale=1)

/content/motion-co-seg/part_swap.py in load_checkpoints(config, checkpoint, blend_scale, first_order_motion_model, cpu)

103 def load_checkpoints(config, checkpoint, blend_scale=0.125, first_order_motion_model=False, cpu=False):

104 with open(config) as f:

--> 105 config = yaml.load(f)

106

107 reconstruction_module = PartSwapGenerator(blend_scale=blend_scale,

TypeError: load() missing 1 required positional argument: 'Loader'
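PyYAML 6.0 made the `Loader` argument of `yaml.load` mandatory, which is what this traceback reports. A minimal sketch of the usual fix, assuming the config files contain only plain mappings, lists and scalars so that `yaml.safe_load` can read them; `read_config` is a hypothetical helper name:

```python
import yaml


def read_config(path):
    """Read a YAML config without relying on yaml.load's removed default Loader."""
    with open(path) as f:
        # safe_load only builds plain Python types (dict, list, str, int, float, ...).
        return yaml.safe_load(f)
```

The closest one-line change to `part_swap.py` line 105 would be `config = yaml.load(f, Loader=yaml.FullLoader)`; pinning `pyyaml<6` is the other common workaround.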

Hello, when I run "vox-first-order.pth.tar" with "face_parsing" and the index [1,2,3,...,15], I get "RuntimeError: CUDA error: device-side assert triggered", which means an index is out of range.

But I don't know why this happens.

Hoping for an answer!

Thanks so much for maintaining this!

I've been trying to use the First Order Motion Model

python part_swap.py --config /home/growbettereyes/motion-cosegmentation-master/config/vox-256-sem-15segments.yaml --target_video /home/growbettereyes/materials_for_testing/somany2.mp4 --source_image /home/growbettereyes/materials_for_testing/26.png --checkpoint /home/growbettereyes/motion-cosegmentation-master/vox-first-order.pth.tar --swap_index 0,1 --supervised --first_order_motion_model

and I keep getting this error:

File "part_swap.py", line 230, in

first_order_motion_model=opt.first_order_motion_model, cpu=opt.cpu)

File "part_swap.py", line 126, in load_checkpoints

load_segmentation_module(segmentation_module, checkpoint)

File "/home/growbettereyes/motion-cosegmentation-master/logger.py", line 35, in load_segmentation_module

module.state_dict()['affine.weight'].copy_(checkpoint['kp_detector']['jacobian.weight'])

RuntimeError: The expanded size of the tensor (60) must match the existing size (40) at non-singleton dimension 0. Target sizes: [60, 35, 7, 7]. Tensor sizes: [40, 35, 7, 7]

I've scaled all the images to the recommended sizes, checked that my PyTorch installation is 1.0.0, and tried several different sources of both kinds. Am I using the right config?

P.S. The README says the argument is "--first-order-motion-model", but it's "--first_order_motion_model".
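The sizes in this error can be read off directly, assuming the keypoint detector follows first-order-model, where the `jacobian` head is a 7x7 convolution with `4 * num_kp` output channels (one 2x2 matrix per keypoint). The checkpoint then holds 40 / 4 = 10 keypoints, while the 15-segment config expects 60 / 4 = 15, which suggests pairing this checkpoint with a 10-segment config. `num_parts_from_jacobian` is a hypothetical helper:

```python
def num_parts_from_jacobian(shape):
    """Infer the number of keypoints/segments from a jacobian weight shape.

    Assumes the weight has shape (4 * num_parts, in_channels, k, k), i.e. one
    2x2 affine matrix (4 numbers) is predicted per part.
    """
    out_channels = shape[0]
    if out_channels % 4 != 0:
        raise ValueError(f"{out_channels} output channels is not a multiple of 4")
    return out_channels // 4


checkpoint_parts = num_parts_from_jacobian((40, 35, 7, 7))  # stored in the checkpoint
config_parts = num_parts_from_jacobian((60, 35, 7, 7))      # expected by the 15-segment config
print(checkpoint_parts, config_parts)  # 10 15
```

With a real file, the shape would come from `torch.load(path, map_location='cpu')['kp_detector']['jacobian.weight'].shape`, following the key names used in `logger.py`.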

Is it possible to swap the whole face, instead of just a part like the hair?

What's the difference in using --checkpoint vox-adv-cpk.pth.tar and --checkpoint vox-first-order.pth.tar checkpoints with --config config/vox-256-sem-10segments.yaml ?

Does vox-adv-cpk.pth.tar include vox-first-order.pth.tar?

I see no difference.

I am getting an error when trying to load the checkpoint I trained with the first-order motion model. A quick Google search suggests that the PyTorch version might cause checkpoints to appear corrupted.

I am still investigating the problem, but I just want to ask whether you have encountered it before.

Great job.

Could you tell me whether a TaiChi pretrained model will be available?

Hello Aliaksandr,

For the checkpoints stored on Drive, especially:

vox-first-order.pth.tar

vox-10segments.pth.tar

For how many epochs/repeats were the models trained?

When I click the link to get the weight file, access is denied or the file cannot be loaded. Could someone share the weight file? Thank you very much!

When training the model, I encountered the following problem:

in logger.py, line 35:

module.state_dict()['affine.weight'].copy_(checkpoint['kp_detector']['jacobian.weight'])

RuntimeError: The expanded size of the tensor (60) must match the existing size (40) at non-singleton dimension 0. Target sizes: [60, 35, 7, 7]. Tensor sizes: [40, 35, 7, 7]

Great work and thanks for sharing code. Is there any information about the license of the code?

I roughly understand that it specifies which parts are swapped, but it would be very nice to know exactly how this works.

Great work.

I've installed it on my local machine and have written a GUI for the face swap and also the first order motion model.

For this I had to modify some of the Python scripts to pass all the extra parameters.

My question:

Would it be possible to rescale the swapped face from the source image before it is "merged" into the target video?

Problem:

Depending on the face in the source image, it sometimes does not fit correctly.

For example, the results then have 4 ears or the mouth is not at the expected position.
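One way to experiment with this, not a repository feature as far as this thread shows, is to rescale the source image content about its centre before passing it to `part_swap.py`, so the face occupies a similar fraction of the frame as in the target video. A minimal NumPy sketch using nearest-neighbour sampling; `zoom_about_center` and the choice of factor are assumptions:

```python
import numpy as np


def zoom_about_center(image, factor):
    """Scale image content about its centre while keeping the output shape.

    factor > 1 zooms in (crops), factor < 1 zooms out (pads with zeros).
    Nearest-neighbour sampling, so this is only meant for quick experiments.
    """
    if factor <= 0:
        raise ValueError("factor must be positive")
    h, w = image.shape[:2]
    # Map every output pixel back to the source pixel it samples from.
    ys = np.rint((np.arange(h) - (h - 1) / 2) / factor + (h - 1) / 2).astype(int)
    xs = np.rint((np.arange(w) - (w - 1) / 2) / factor + (w - 1) / 2).astype(int)
    valid = ((ys >= 0) & (ys < h))[:, None] & ((xs >= 0) & (xs < w))[None, :]
    sampled = image[np.clip(ys, 0, h - 1)][:, np.clip(xs, 0, w - 1)]
    out = np.zeros_like(image)
    out[valid] = sampled[valid]  # pixels that fall outside the source stay black
    return out
```

For example, `zoom_about_center(source_image, 0.8)` shrinks a face that looks too large relative to the target by 20%; for real use, an interpolating resize would look smoother.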

Hi AliaksandrSiarohin,

Such an awesome project. About model training: I find there is no discriminator (including discriminator parameter updates) in train.py, just the generator and the segmentation module.

Is there any problem when I retrain motion-cosegmentation?

When I run the command pip install -r requirements.txt, I get this error in the command prompt

command: 'c:\python38\python.exe' -c 'import sys, setuptools, tokenize; sys.argv[0] = '"'"'C:\\Users\\matth\\AppData\\Local\\Temp\\pip-install-oux8a7yk\\matplotlib\\setup.py'"'"'; __file__='"'"'C:\\Users\\matth\\AppData\\Local\\Temp\\pip-install-oux8a7yk\\matplotlib\\setup.py'"'"';f=getattr(tokenize, '"'"'open'"'"', open)(__file__);code=f.read().replace('"'"'\r\n'"'"', '"'"'\n'"'"');f.close();exec(compile(code, __file__, '"'"'exec'"'"'))' egg_info --egg-base 'C:\Users\matth\AppData\Local\Temp\pip-pip-egg-info-ym7ep1u6'

cwd: C:\Users\matth\AppData\Local\Temp\pip-install-oux8a7yk\matplotlib\

Complete output (61 lines):

============================================================================

Edit setup.cfg to change the build options

BUILDING MATPLOTLIB

matplotlib: yes [2.2.2]

python: yes [3.8.3 (tags/v3.8.3:6f8c832, May 13 2020,

22:20:19) [MSC v.1925 32 bit (Intel)]]

platform: yes [win32]

REQUIRED DEPENDENCIES AND EXTENSIONS

numpy: yes [not found. pip may install it below.]

install_requires: yes [handled by setuptools]

libagg: yes [pkg-config information for 'libagg' could not

be found. Using local copy.]

freetype: no [The C/C++ header for freetype

(freetype2\ft2build.h) could not be found. You may

need to install the development package.]

png: no [The C/C++ header for png (png.h) could not be

found. You may need to install the development

package.]

qhull: yes [pkg-config information for 'libqhull' could not

be found. Using local copy.]

OPTIONAL SUBPACKAGES

sample_data: yes [installing]

toolkits: yes [installing]

tests: no [skipping due to configuration]

toolkits_tests: no [skipping due to configuration]

OPTIONAL BACKEND EXTENSIONS

macosx: no [Mac OS-X only]

qt5agg: no [PySide2 not found; PyQt5 not found]

qt4agg: no [PySide not found; PyQt4 not found]

gtk3agg: no [Requires pygobject to be installed.]

gtk3cairo: no [Requires cairocffi or pycairo to be installed.]

gtkagg: no [Requires pygtk]

tkagg: yes [installing; run-time loading from Python Tcl /

Tk]

wxagg: no [requires wxPython]

gtk: no [Requires pygtk]

agg: yes [installing]

cairo: no [cairocffi or pycairo not found]

windowing: yes [installing]

OPTIONAL LATEX DEPENDENCIES

dvipng: no

ghostscript: no

latex: no

pdftops: no

OPTIONAL PACKAGE DATA

dlls: no [skipping due to configuration]

============================================================================

* The following required packages can not be built:

* freetype, png

* Please check http://gnuwin32.sourceforge.net/packa

* ges/freetype.htm for instructions to install

* freetype

* Please check http://gnuwin32.sourceforge.net/packa

* ges/libpng.htm for instructions to install png

----------------------------------------

ERROR: Command errored out with exit status 1: python setup.py egg_info Check the logs for full command output.
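The log shows a 32-bit Python 3.8.3 trying to build matplotlib 2.2.2 from source. That matplotlib release predates Python 3.8, so pip most likely found no prebuilt wheel and fell back to compiling it, which needs the freetype and png headers listed above. Before retrying, it may help to confirm which interpreter pip is actually using; a small stdlib sketch (`interpreter_summary` is a hypothetical helper):

```python
import platform
import struct
import sys


def interpreter_summary():
    """Report the version and pointer width of the running Python interpreter."""
    return {
        "version": platform.python_version(),
        "bits": struct.calcsize("P") * 8,  # 32 on a 32-bit build, 64 on a 64-bit build
        "executable": sys.executable,
    }


if __name__ == "__main__":
    print(interpreter_summary())
```

For the interpreter in this log (`MSC v.1925 32 bit (Intel)`) it should report 32 bits. Many scientific packages, PyTorch included, do not publish 32-bit Windows wheels, so a 64-bit interpreter of an older Python version is worth trying.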

How can I apply part swapping to a particular portion of this video?

I get a very unwanted result across the whole video. I think the face should first be detected in the full-size video and the mask then applied to a particular part. I roughly know the process but don't know where to start, or whether a different method is needed. Could you please take the time to help with this?

Replace Iron Man with this vin image.
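The general approach described here (find the face in the full-size frame, process only that region, paste it back) can be sketched as below. This is a hypothetical helper, not part of the repository: `box` is assumed to come from a face detector of your choice, and `fn` stands in for whatever resize-to-256, part-swap, resize-back pipeline is applied to the crop.

```python
import numpy as np


def process_region(frame, box, fn):
    """Apply fn to frame[top:bottom, left:right] and paste the result back.

    box = (top, left, bottom, right) in pixels (a hypothetical format).
    fn must return an array with the same shape as the crop it receives.
    """
    top, left, bottom, right = box
    crop = frame[top:bottom, left:right]
    result = fn(crop)
    if result.shape != crop.shape:
        raise ValueError("fn must preserve the crop shape")
    out = frame.copy()  # leave the input frame untouched
    out[top:bottom, left:right] = result
    return out


def process_video(frames, boxes, fn):
    """Run process_region on every frame with its own (e.g. tracked) box."""
    return [process_region(frame, box, fn) for frame, box in zip(frames, boxes)]
```

Keeping a slightly enlarged, temporally smoothed box around the face usually reduces visible seams and jitter at the crop border.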

Hello Aliaksandr, me again.

What's the use of "First Order Motion Model based alignment"?

I can't get it to work. It gives me following errors:

missing 5 required positional arguments: 'block_expansion', 'num_blocks', 'max_features', 'num_kp', and 'num_channels'

This is an example of my command line (it works when I don't use the "--first_order_motion_model" option):

python demo.py --config c:\tutorial\first-order-model/config/vox-256-sem-5segments.yaml --target_video "D:\Temp\ich2.mp4" --source_image "D:\Temp\attila.jpg" --result_video "D:\Temp\00super_Swap.mp4" --checkpoint c:\tutorial\first-order-model/checkpoints/vox-5segments.pth.tar --swap_index 1,2,5 --supervised --first_order_motion_model

Which corresponding checkpoint do you mean?

I hope you can help me...

Hi, I am a great fan of this kind of work, and my appreciation to you. Analyzing the code, I see that you swap a face from an image onto the face in a video feed. I have seen image-to-image face swapping using 68/81 facial keypoints, and the results of your work seem better than the keypoint-based method. Is there any implementation of segmentation-based swapping between images? Any help would be appreciated.

Thanks.

I loaded a checkpoint from my 512x512 training into part_swap using --supervised and --use_source_segmentation so that the source replaces the target's parts. It seems to reproduce the source image as the output. Is there something I may have done wrong?

Hi, it looks like a Google Drive quota has been hit for your files, and the checkpoints cannot be downloaded.

Thank you for your great work!

Is there any way to support high-resolution images, e.g. 512 pixels?

Check the attached image: the output of the face swap is very blurry (image taken from a video).

The question is whether this relates to the network operations (segmentation network or generator) or to the frame pre- or post-processing around the model predictions.

In other words, how can I get higher-resolution results?

@AliaksandrSiarohin Following up on AliaksandrSiarohin/first-order-model#20, getting an error trying to run this with 512x512 source & target. Here are my configs & the full errors.

diff --git a/config/vox-256-sem-10segments.yaml b/config/vox-256-sem-10segments.yaml

index 5871c97..eda5d74 100644

--- a/config/vox-256-sem-10segments.yaml

+++ b/config/vox-256-sem-10segments.yaml

@@ -39,7 +39,7 @@ model_params:

num_blocks: 5

# Segmentations is predicted on smaller images for better performance,

# scale_factor=0.25 means that 256x256 image will be resized to 64x64

- scale_factor: 0.25

+ scale_factor: 0.125

reconstruction_module_params:

# Number of features mutliplier

block_expansion: 64

diff --git a/modules/util.py b/modules/util.py

index 7530acd..cccab51 100644

--- a/modules/util.py

+++ b/modules/util.py

@@ -198,7 +198,7 @@ class Hourglass(nn.Module):

class AntiAliasInterpolation2d(nn.Module):

def __init__(self, channels, scale):

super(AntiAliasInterpolation2d, self).__init__()

- sigma = (1 / scale - 1) / 2

+ sigma = 1.5 # Hard coded as per issues/20#issuecomment-600784060

kernel_size = 2 * round(sigma * 4) + 1

self.ka = kernel_size // 2

self.kb = self.ka - 1 if kernel_size % 2 == 0 else self.ka

diff --git a/part_swap.py b/part_swap.py

index 2d8aad0..4d185d6 100644

--- a/part_swap.py

+++ b/part_swap.py

@@ -222,8 +222,8 @@ if __name__ == "__main__":

source_image = imageio.imread(opt.source_image)

target_video = imageio.mimread(opt.target_video, memtest=False)

- source_image = resize(source_image, (256, 256))[..., :3]

- target_video = [resize(frame, (256, 256))[..., :3] for frame in target_video]

+ source_image = resize(source_image, (512, 512))[..., :3]

+ target_video = [resize(frame, (512, 512))[..., :3] for frame in target_video]

blend_scale = (256 / 4) / 512 if opt.supervised else 1

reconstruction_module, segmentation_module = load_checkpoints(opt.config, opt.checkpoint, blend_scale=blend_scale,

(base) C:\Users\admin\git\motion-cosegmentation>python part_swap.py --config config/vox-256-sem-10segments.yaml --result_video 0123456789_result.mp4 --target_video target_512_long.mp4 --source_image source.jpg --checkpoint "C:\Users\admin\Downloads\vox-10segments.pth.tar" --swap_index 0,1,2,3,4,5,6,7,8,9

C:\Users\admin\Anaconda3\lib\site-packages\skimage\transform\_warps.py:105: UserWarning: The default mode, 'constant', will be changed to 'reflect' in skimage 0.15.

warn("The default mode, 'constant', will be changed to 'reflect' in "

C:\Users\admin\Anaconda3\lib\site-packages\skimage\transform\_warps.py:110: UserWarning: Anti-aliasing will be enabled by default in skimage 0.15 to avoid aliasing artifacts when down-sampling images.

warn("Anti-aliasing will be enabled by default in skimage 0.15 to "

part_swap.py:105: YAMLLoadWarning: calling yaml.load() without Loader=... is deprecated, as the default Loader is unsafe. Please read https://msg.pyyaml.org/load for full details.

config = yaml.load(f)

0%| | 0/449 [00:00<?, ?it/s]C:\Users\admin\Anaconda3\lib\site-packages\torch\nn\functional.py:2423: UserWarning: Default upsampling behavior when mode=bilinear is changed to align_corners=False since 0.4.0. Please specify align_corners=True if the old behavior is desired. See the documentation of nn.Upsample for details.

"See the documentation of nn.Upsample for details.".format(mode))

Traceback (most recent call last):

File "part_swap.py", line 237, in <module>

face_parser, hard=opt.hard, use_source_segmentation=opt.use_source_segmentation, cpu=opt.cpu)

File "part_swap.py", line 194, in make_video

blend_mask=blend_mask, use_source_segmentation=use_source_segmentation)

File "C:\Users\admin\Anaconda3\lib\site-packages\torch\nn\modules\module.py", line 489, in __call__

result = self.forward(*input, **kwargs)

File "C:\Users\admin\Anaconda3\lib\site-packages\torch\nn\parallel\data_parallel.py", line 141, in forward

return self.module(*inputs[0], **kwargs[0])

File "C:\Users\admin\Anaconda3\lib\site-packages\torch\nn\modules\module.py", line 489, in __call__

result = self.forward(*input, **kwargs)

File "part_swap.py", line 88, in forward

out = enc_target * (1 - blend_mask) + enc_source * blend_mask

RuntimeError: The size of tensor a (128) must match the size of tensor b (64) at non-singleton dimension 3
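The two sizes in this error are consistent with the following arithmetic, assuming (as the unchanged `(256 / 4) / 512` expression suggests) that the face-parser mask is produced at 512x512 and the reconstruction encoder downsamples its input by 4 (two down blocks). At 512x512 input the encoder features are 512 / 4 = 128 wide, but a blend scale of 64 / 512 = 0.125 shrinks the mask to 64. Scaling the numerator with the input size, i.e. `(512 / 4) / 512 = 0.25`, would make them match. `supervised_blend_scale` is a hypothetical helper:

```python
def supervised_blend_scale(image_size, num_down_blocks=2, parser_size=512):
    """Blend scale that resizes a parser_size mask to the encoder feature size.

    Assumes the encoder halves the resolution once per down block and the
    face-parser mask is parser_size x parser_size.
    """
    encoder_size = image_size // 2 ** num_down_blocks
    return encoder_size / parser_size


print(supervised_blend_scale(256))  # 0.125, the value part_swap.py computes
print(supervised_blend_scale(512))  # 0.25
```

If the config uses a different `num_down_blocks` for the reconstruction module, the same formula applies with that value.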

Hi AliaksandrSiarohin, I really appreciate your work. I find that the keypoint detector network of FOMM is similar to the segmentation module of motion-cosegmentation, and the other parts (generator and dense motion) are exactly the same. If I train the FOMM model from scratch on a new dataset, can it be used directly for inference in motion-cosegmentation, or do some parameters need to be modified?

Hi Aliaksandr,

Thanks for sharing this awesome project :-)

I dug into the code and tried to understand how the "First Order Motion Model" is used when the --first_order_motion_model CLI argument is added.

I saw that with this argument, an instance of DenseMotionNetwork is created in PartSwapGenerator, and this instance is used in the module's forward pass to calculate the dense motion map between the source and target objects.

My question is: in this case, does this module use segmentation maps (as described in the Motion-Supervised Co-Part Segmentation paper) or discrete keypoints (as described in First Order Motion Model)?

I would guess that keypoints are used instead of segmentation maps so that the keypoints of the source and target faces can be aligned; otherwise they wouldn't be aligned, and blending their features would give an undesired result. Am I correct?

Thanks again!

What kind of dataset would I need for that? Is it even possible?

This is the same model I was trying to train in issue #43. The face swap model doesn't produce very good results. How can I improve them? Face swap examples on images at epoch 5: https://imgur.com/a/lMhvZOf and at epoch 10: https://imgur.com/a/yCNyjx9

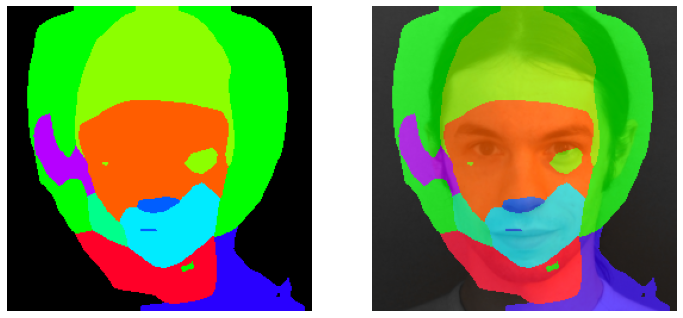

Segmentation module example output on epoch 5:

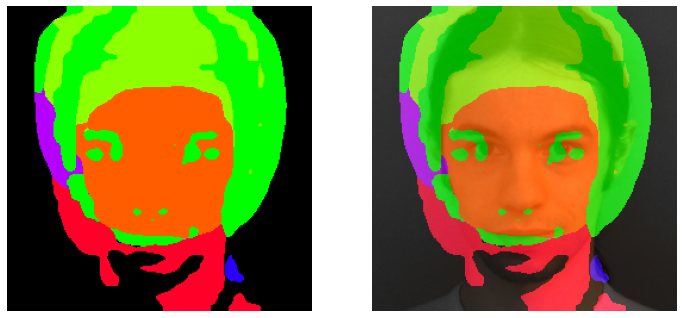

Segmentation module example output on epoch 10:

I replaced this line:

motion-cosegmentation/part_swap.py

Line 88 in 571e26f

bm = F.interpolate(blend_mask, size=128)

out = enc_target * (1 - bm) + enc_source * bm

I did that to avoid errors about the tensors having different sizes.

Log messages from rerunning the code, to capture the warnings printed at the beginning (I didn't keep the logs from training):

train.py:85: YAMLLoadWarning: calling yaml.load() without Loader=... is deprecated, as the default Loader is unsafe. Please read https://msg.pyyaml.org/load for full details.

config = yaml.load(f)

Use predefined train-test split.

Training...

Segmentation part initialized at random.

0%| | 0/20 [00:00<?, ?it/s]/opt/conda/lib/python3.8/site-packages/torch/nn/functional.py:3454: UserWarning: Default upsampling behavior when mode=bilinear is changed to align_corners=False since 0.4.0. Please specify align_corners=True if the old behavior is desired. See the documentation of nn.Upsample for details.

warnings.warn(

/opt/conda/lib/python3.8/site-packages/torch/nn/functional.py:3828: UserWarning: Default grid_sample and affine_grid behavior has changed to align_corners=False since 1.3.0. Please specify align_corners=True if the old behavior is desired. See the documentation of grid_sample for details.

warnings.warn(

/opt/conda/lib/python3.8/site-packages/torch/nn/functional.py:1709: UserWarning: nn.functional.sigmoid is deprecated. Use torch.sigmoid instead.

warnings.warn("nn.functional.sigmoid is deprecated. Use torch.sigmoid instead.")

Code I used to get the face swap output:

```python
#!/usr/bin/env python
# coding: utf-8
import os
import random
from pathlib import Path

import imageio
import matplotlib.pyplot as plt
import numpy as np
from PIL import Image
from skimage.transform import resize

from part_swap import load_checkpoints, load_face_parser, make_video

cpu = True

reconstruction_module, segmentation_module = load_checkpoints(
    config='config/vox-512-sem-10segments.yaml',
    checkpoint='log/vox-512-sem-10segments 26-04-21 19:25:33/00000005-checkpoint.pth.tar',
    blend_scale=0.125, first_order_motion_model=True, cpu=cpu)

face_parser = load_face_parser(cpu=cpu)


def swap(source_image, target_image):
    shape = source_image.shape
    # Resize the image and the one-frame "video" to 512x512 and drop any alpha channel
    source_image = resize(source_image, (512, 512))[..., :3]
    target_video = [resize(target_image, (512, 512))[..., :3]]
    out = make_video(swap_index=[1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15],
                     source_image=source_image, target_video=target_video,
                     use_source_segmentation=True, segmentation_module=segmentation_module,
                     reconstruction_module=reconstruction_module, face_parser=face_parser,
                     cpu=cpu)[0]
    # Resize the swapped frame back to the source resolution
    return resize(out, (shape[0], shape[1]))


def get_concat_h(im1, im2):
    # Paste two PIL images side by side
    dst = Image.new('RGB', (im1.width + im2.width, im1.height))
    dst.paste(im1, (0, 0))
    dst.paste(im2, (im1.width, 0))
    return dst


dir_ = str(Path.home()) + '/gdrive/images1024x1024/57000/'
ims = os.listdir(dir_)
g = 5
s = random.sample(ims, g * 2)
srcs = s[:g]
dess = s[g:]
os.makedirs('dis', exist_ok=True)  # Image.save does not create the folder

for i in range(g):
    target_image = imageio.imread(dir_ + dess[i])
    source_image = imageio.imread(dir_ + srcs[i])
    out = swap(source_image, target_image)
    # Target | source | result, side by side
    dis = get_concat_h(get_concat_h(Image.fromarray(np.uint8(target_image)),
                                    Image.fromarray(np.uint8(source_image))),
                       Image.fromarray(np.uint8(out * 255)))
    dis.save('dis/' + str(i) + '.jpg')
    plt.figure()
    plt.imshow(dis)
```

That's all the information I could think of sending.

Thanks for the great work! I have a question about checkpoint loading as below:

```python
def load_segmentation_module(module, checkpoint):
    if 'kp_detector' in checkpoint:
        partial_state_dict_load(module, checkpoint['kp_detector'])
        module.state_dict()['affine.weight'].copy_(checkpoint['kp_detector']['jacobian.weight'])
        module.state_dict()['affine.bias'].copy_(checkpoint['kp_detector']['jacobian.bias'])
        module.state_dict()['shift.weight'].copy_(checkpoint['kp_detector']['kp.weight'])
        module.state_dict()['shift.bias'].copy_(checkpoint['kp_detector']['kp.bias'])
        if 'semantic_seg.weight' in checkpoint['kp_detector']:
            module.state_dict()['segmentation.weight'].copy_(checkpoint['kp_detector']['semantic_seg.weight'])
            module.state_dict()['segmentation.bias'].copy_(checkpoint['kp_detector']['semantic_seg.bias'])
        else:
            print('Segmentation part initialized at random.')
    else:
        module.load_state_dict(checkpoint['segmentation_module'])
```
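Reading the loader above, a first-order-model checkpoint is mapped onto the segmentation module by renaming a handful of `kp_detector` entries (`jacobian` to `affine`, `kp` to `shift`, `semantic_seg` to `segmentation`), after a partial load of the remaining weights. A minimal sketch of that renaming on a plain dictionary, useful for checking which keys a checkpoint provides before loading it; `KP_TO_SEGMENTATION`, `rename_kp_detector_keys` and `missing_segmentation_head` are hypothetical names:

```python
KP_TO_SEGMENTATION = {
    "jacobian.weight": "affine.weight",
    "jacobian.bias": "affine.bias",
    "kp.weight": "shift.weight",
    "kp.bias": "shift.bias",
    "semantic_seg.weight": "segmentation.weight",
    "semantic_seg.bias": "segmentation.bias",
}


def rename_kp_detector_keys(kp_state):
    """Return a copy of a kp_detector state dict using segmentation-module names.

    Keys without an entry in KP_TO_SEGMENTATION are kept unchanged.
    """
    return {KP_TO_SEGMENTATION.get(key, key): value for key, value in kp_state.items()}


def missing_segmentation_head(kp_state):
    """True when there are no semantic_seg weights, i.e. the loader's random-init case."""
    return "semantic_seg.weight" not in kp_state
```

Applied to `torch.load(path, map_location='cpu')['kp_detector']`, `missing_segmentation_head` tells in advance whether "Segmentation part initialized at random." will be printed.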

Since your provided pre-trained model only has the weights of the kp-detector in first-order-motion-model, the segmentation part will be initialized at random and the estimated dense motion field will be wrong. Are you going to provide the pre-trained model for the segmentation module in the future?

Thanks!

Hey, thanks again for another awesome project. I was curious whether there is an equivalent of the relative and absolute warping modes in First Order Model. There are cases where you may not want to warp every feature to the target video; sometimes it's a bit extreme and looks unnatural. I would use First Order Model, but I feel this project works a lot better for my specific use case. Thanks!
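For reference, First Order Model's relative mode moves the source keypoints by the driving displacement measured from the first driving frame, and composes the local affine jacobians the same way. Whether motion-cosegmentation exposes an equivalent option is not stated in this thread; the sketch below only illustrates the idea with NumPy, assuming keypoint values of shape `(K, 2)` and jacobians of shape `(K, 2, 2)`. `relative_keypoints` and `movement_scale` (standing in for FOMM's adaptive movement scale) are hypothetical names:

```python
import numpy as np


def relative_keypoints(src_value, drv_value, drv_initial_value,
                       src_jac, drv_jac, drv_initial_jac, movement_scale=1.0):
    """Transfer driving motion relative to the first driving frame.

    values: (K, 2) keypoint coordinates; jacobians: (K, 2, 2) local affine maps.
    movement_scale < 1 damps the transferred displacement.
    """
    # Displacement of each driving keypoint since the first frame, added to the source.
    new_value = src_value + movement_scale * (drv_value - drv_initial_value)
    # Change of the local affine map since the first frame, applied to the source map.
    jac_diff = drv_jac @ np.linalg.inv(drv_initial_jac)
    new_jac = jac_diff @ src_jac
    return new_value, new_jac
```

Absolute mode would instead use `drv_value` and `drv_jac` directly, which copies the driving face geometry and is what tends to look extreme when the faces differ.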

Hello @AliaksandrSiarohin!

I have tried the code using the following command

python part_swap.py --source_image ./final.jpeg --target_video ./crop.mp4 --checkpoint vox-first-order.pth.tar --config ./motion-cosegmentation/config/vox-256-sem-15segments.yaml --supervised --first_order_motion_model --swap_index 1,2,3,4,5,6,7,8,9,10,11,12,13,14,15

How can I use the first_order_motion_model checkpoint with a different number of segments?

For an object moving over the face, swapping by index seems to blend it instead of swapping it. Is there an easy way to fix this, or does it require more segments?

In the paper, for evaluating landmarks, it says "we use 5000 images for fitting the regression model, and 300 other images for computing the MAE". What is exactly the 5000/300 split? I would like to do a comparison. Thanks in advance.
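This does not answer which images were in the split, but the protocol described (fit a regression on one set of images, report MAE on another) can be sketched as follows. It assumes a linear least-squares regression from per-image part features (e.g. segment centres) to landmark coordinates, with MAE taken as the mean absolute coordinate error; both choices are assumptions, and `landmark_regression_mae` is a hypothetical helper:

```python
import numpy as np


def landmark_regression_mae(features_fit, landmarks_fit, features_eval, landmarks_eval):
    """Fit a linear map from part features to landmarks and report held-out MAE.

    features_*: (N, F) arrays; landmarks_*: (N, L) arrays of flattened (x, y) coordinates.
    """
    def with_bias(x):
        # Append a constant column so the regression can learn an offset.
        return np.hstack([x, np.ones((x.shape[0], 1))])

    weights, *_ = np.linalg.lstsq(with_bias(features_fit), landmarks_fit, rcond=None)
    predicted = with_bias(features_eval) @ weights
    return float(np.abs(predicted - landmarks_eval).mean())
```

With the split described in the paper, the fitting arrays would have 5000 rows and the evaluation arrays 300.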

trying to download vox-first-order.pth.tar from https://drive.google.com/open?id=1SsBifjoM_qO0iFzb8wLlsz_4qW2j8dZe , but google drive issues this message

Too many users have viewed or downloaded this file recently. Please try accessing the file again later. If the file you are trying to access is particularly large or is shared with many people, it may take up to 24 hours to be able to view or download the file. If you still can't access a file after 24 hours, contact your domain administrator.

Hi @AliaksandrSiarohin !

Thanks for sharing such an awesome work.

I've trained a model on my own dataset with config/vox-256-sem-15segments.yaml,

and it worked with this command:

part_swap.py --config config/vox-256-sem-15segments.yaml --checkpoint log/vox-256-sem-15segments\ 26-08-20\ 07\:56\:19/00000000-checkpoint.pth.tar --source_image 2.jpg --target_video 2.mp4 --swap_index 0,1,2,3,4,5,6,7,8,9,10,11,12,13,14

However, I got errors with this command:

part_swap.py --config config/vox-256-sem-15segments.yaml --checkpoint log/vox-256-sem-15segments\ 26-08-20\ 07\:56\:19/00000000-checkpoint.pth.tar --source_image 2.jpg --target_video 2.mp4 --swap_index 0,1,2,3,4,5,6,7,8,9,10,11,12,13,14 --supervised

Can you please help me with this?

Thanks

Hi @AliaksandrSiarohin ,

This is very interesting work.

I wanted to fine-tune the model with a few more new videos. Although I could do the video-processing part, I am unable to run the training code. I am getting the error below. How should I fine-tune the model?

I have followed the steps in the repository. Please help me out!

!python train.py --config "config/Retrain_15segments.yaml" --checkpoint "models/vox-cpk.pth.tar"

I am training on a 512 x 512 dataset using 4 GPUs. Training stalls while the loss is being calculated. It gives the user warning "was asked to gather along dimension 0, but all input tensors were scalars; will instead unsqueeze and return a vector."

Is there a way to split the loss function across multiple GPUs with DataParallelWithCallback? I noticed that the loss is only calculated on GPU 0.

Is the face parsing segmentation used for 'deforming' the selected segments (parts) in --supervised mode, or is it just a kind of additional mask for the 5/10/15-part checkpoint segmentation model?

module.state_dict()['affine.weight'].copy_(checkpoint['kp_detector']['jacobian.weight'])

RuntimeError: The size of tensor a (60) must match the size of tensor b (40) at non-singleton dimension 0

tar: This does not look like a tar archive

tar: Skipping to next header

tar: Exiting with failure status due to previous errors

Hello Aliaksandr,

Thanks a lot for providing this code; I would like to experiment with it.

Could you explain the use of the swap_index parameter in the part_swap.py script?

How do the index values relate to parts, and how should they be used with the different vox-* checkpoints?
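A minimal sketch of how comma-separated indices can select parts, assuming the segmentation (the model's own segments, or the face parser's in `--supervised` mode) is a per-pixel soft assignment with one channel per part, and that the selected channels are summed into a blend mask used as `target * (1 - mask) + source * mask`, the same form as `out = enc_target * (1 - blend_mask) + enc_source * blend_mask` in `part_swap.py` (where the blend acts on encoder features rather than pixels). `parse_swap_index`, `blend_mask` and `blend` are hypothetical names:

```python
import numpy as np


def parse_swap_index(text):
    """Turn a CLI-style string such as "1,2,5" into [1, 2, 5]."""
    return [int(item) for item in text.split(",") if item.strip()]


def blend_mask(segmentation, swap_index):
    """Sum the selected part channels of a (num_parts, H, W) soft segmentation."""
    num_parts = segmentation.shape[0]
    out_of_range = [i for i in swap_index if not 0 <= i < num_parts]
    if out_of_range:
        # On the GPU an out-of-range index can surface as a device-side assert instead.
        raise IndexError(f"indices {out_of_range} not in 0..{num_parts - 1}")
    return segmentation[swap_index].sum(axis=0)


def blend(target, source, mask):
    """Keep target pixels where mask is 0 and source pixels where mask is 1."""
    if target.ndim == mask.ndim + 1:
        mask = mask[..., None]  # broadcast an (H, W) mask over colour channels
    return target * (1 - mask) + source * mask
```

Under these assumptions, each index names one segmentation channel, so the valid range depends on how many channels the chosen checkpoint or face parser produces, and what each channel depicts has to be checked by visualising the segmentation.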

I'm trying to train a 512x512 faceswap model. I trained a 512x512 first order model for faces. More info about it here.

I got "RuntimeError: The size of tensor a (29) must match the size of tensor b (13) at non-singleton dimension 3"

here, in the segmentation model:

motion-cosegmentation/logger.py

Line 22 in c1a71a7

I modified the code to load "reconstruction_module" as "generator", used the face parser, and had to resize it to 128x128. These are the results of a face swap on an image at epoch 7.

What should I do?

Also, to the creator: could you add a license to the project?
