zawy12 / difficulty-algorithms Goto Github PK

View Code? Open in Web Editor NEWSee the Issues for difficulty algorithms

License: MIT License

See the Issues for difficulty algorithms

License: MIT License

This shows the similarity of all difficulty algorithms.

T = target solvetime

n = number of blocks in averaging

r = a dilution aka tempering aka buffering factor

w = a weighting function based on n. It's 1 for all but LWMA. In LWMA it increases from 1 to n from oldest to more recent blocks, giving them more weight.

target = avg(n targets) / r * [(r-1) + sum(n w*STs)/sum(n w's) / T]

Less accurately:

difficulty = avg(n Ds) * r / [ (r-1) + sum(n w*STs)/sum(n w's) / T ]

For clarity, here it is w=1 (no increased weight given to more recent blocks like LWMA and OSS)

target = avg(n targets) / r * [(r-1) + avg(n STs) / T]

w=1, r=1 Dark Gravity Wave (a Simple Moving Average in deep disguise)

w=1, n=1 for EMA. Larger r means smoother, slower response. Other articles show this is almost precisely ASERT (BCH) & ETH's.

w=1, r=4 for Digishield, r=2 for Grin

r=1, w = function of n. LWMA for linear increase in w for more recent blocks. Pretty close to EMA.

r = 4, and simple w function. OSS (like a combination of LWMA and Digishield)

There is not a real-time clock in cryptocoins that has an accurate time except for the median time of node peers (or of just single peers). This puts an upper limit on what time a miner can assign to a block. This upper limit is crucial. If it were not there, miners could assign a large future time which would drive difficulty down as far as they want in only 1 block.

Allowing miners to assign the timestamp instead of simply using the median of node peers is crucial to a cryptocoin. At the core of why POW is used, miners perform a timestamp service as a for-profit competitive group that prevents the need for a 3rd party centralized timestamp service. It can't be accomplished by just nodes in an asynchronous network, especially if many nodes can be created to perform a Sybil attack.

The best way to handle bad timestamps is to make sure the future time limit allowed by nodes is restricted to 6xT or less. Default is on many coins and it is 7200 seconds.

[ Update: to minimize profit from bad timestamps, I am recommending FTL be set to 300 or TxN/20, whichever is larger. ]

A bad timestamp can lower difficulty by N/(N+7200/T) when the future_time_limit is the usual 7200 seconds (Bitcoin and Monero clones). The next honest timestamp will reverse the effect. To maximize the number of blocks a big miner can get without difficulty rising, he assigns to all his blocks

timestamp = (HR+P)/P*T + previous_timestamp

where P is his hashrate multiple of the network's hashrate HR before he came online. For example, if he has 2x network hashrate, he can assign 1.333xT plus previous timestamp for all his timestamps. This prevents difficulty from rising, keeping it the same value, maximizing the number of blocks he can get. With CRYPTONOTE_BLOCK_FUTURE_TIME_LIMIT=7200 and T=120 seconds, this would allow him to get 7200/120 = 60 blocks without the difficulty rising. He can't continue because he will have reached the future time limit that the nodes enforce. He then leaves and difficulty starts rising (if negative solvetimes are allowed in the difficulty calculation). If negative solvetimes are not allowed, he gets 60 blocks all the same over the course of 90 blocks that he and the rest of the network obtained. The average real solvetime for the 90 would be 1/3 of T (if he has 2x the network hashrate) without the difficulty rising. And when he leaves, difficulty will stay the same. So the algorithm will have issued blocks too quickly without penalizing other miners (except for coin inflation which also penalizes hodlers).

A miner might be able to own the local node peers and have control of the time limit, but I believe this risks splitting the chain or causing other nodes to reject the blocks.

[update: Graft had big problem from a big miner "owning the MTP". This was made easy by Graft not lowering the FTL from 7200 to 500. Here's a full discussion. ]

The lower limit is approximately 6xT into the past (in Bitcoin and maybe vast majority of all others). It's not based on real time, but on the median of the past 11 block timestamps, which is the 6th block in the past if the 11 blocks had sequential timestamps. This is called median time past (MTP).

The lower limit can get "owned" by a >50% miner who would generally get 6 of the 11 past blocks. He could keep assigning the same time initial_timestamp - 6xT to all his timestamps. By his 7th block it could be -12xT compared to real time because he owns the MTP. Six blocks later he can assign -18xT, and so on. This drives difficulty up (if negative solvetimes are allowed in the difficulty calculation), so its a malicious attack that does not help him.

If an algo has a limit on the rate of difficulty falling that is less strict than its limit on rising (asymmetrical limits) and if it is looking at individual timestamps and allowing negative solvetimes, then he can ironically drive difficulty down by constantly assigning reverse timestamps (it's ironic because a reverse timestamp should send difficulty up). The extreme asymmetry is not allowing negative solvetimes by using if (solvetime<1) { solvetime=1;}. A bad actor with >50% hash rate and constantly assigning negative timestamps in this case would cause difficulty to drop to zero.

[this was written before I realized lowering FTL would solve all timestamps problems]

The +12xT and -6xT limits on timestamps in Bitcoin are pertty good if T=600. In a simple moving average (SMA), an incorrect time that is 12xT into the future would make the difficulty drop for only 1 block by about N/(12+N) which is 17% for a typical N=60. The next block after it would immediately correct the error with a timestamp that is about -11xT his timestamp which should still be a full 6xT ahead of the MTP. Conversely, bad -6xT timestamps would typically raise difficulty less by N/(N-6) and be immediately corrected on the next honest timestamp.

Doing nothing could cause a serious problem for the WHM-like algorithms that give a higher weight to more recent timestamps. If the two most recent blocks are assigned the -6xT, there are many scenarios where difficulty would come out negative, causing a catastrophic problem. It becomes a lot harder to do as N gets higher.

The EMA difficulty would shoot up exponentially in a single block with a single negative solvetime that results from an out-of-sequence timestamp.

Even the SMA could have a problem if N is really low like N=10. For example, if 6 of the past 11 solvetimes were 1xT to allow a -6xT and then if 3 of the 10 in the window were 0.1xT then the denominator will be

5xT+4x0.1xT-1x6xT = -0.6xT

which gives a negative difficulty.

But the worst that can happen in an SMA is if a dishonest 12xT forward stamp is at the end of the window when a -6xT comes in the front, then the difficulty is incorrectly lowered by N/(N+18) and the vice versa case is a rise in difficulty of N/(N-18).

An attacker could also cause a divide by zero failure, or give a really small value for the denominator like 0.001, sending the difficulty up 1000x, effectively forcing a fork.

In DAs that loop over each block in the N window to get all solvetimes rather than subtracting oldest from the most recent timestamp, simply blocking a negative solvetime as in the following code has an ironic catastrophic failure:

if ST < 0 then ST=0

# or this would be about the same

# if ST < 1 then ST=1The failure occurs if a largish ~20% miner keeps assigning the -6xT limit. The irony is that you expect -6xT to drive D up, but since it is converted to 0 second, the next honest timestamp that is solved in a normal 1xT will cause a very large "calculated" solvetime of 7xT for the next block after the biggish miner assigns -6xT. Consider the following timestamps and calculated solvetimes from the loop in an SMA that uses ST= timestamp[i] - timestamp[i-1] when a 20% miner (20% of blocks) assigns -6xT:

apparent solvetimes = 1, 1, 1, -6, 7, 1, 1, 1, -6, 7, 1, 1, 1, -6, 7 (average is 1, so it's OK)

after blocking negatives = 1, 1, 1, 0, 7, 1, 1, 1, 0, 7, .....

When blocking negatives the average ST is 2, so a 20% miner can cut difficulty in half in just 1 window length. When the difficulty drops, more mining will come on and the 20% miner's effect will be reduced. But if he were able to maintain 20%,, difficulty would drop to zero in a few N windows (replace the 1's in the last 2 sequences above with 0 to represent everyone solving in 0 seconds, and the difficulty will still continue to drop).

The EMAs and WHM algorithm would be affected about the same.

For an SMA algo or Digishield v3 who simply subtract the oldest from the newest timestamp to get sum(STs), and is not using MTP as the newest block it is not too bad and a little better than doing nothing. The difficulty could be incorrectly high or low from bad timestamps by N/(N +/- 13) in the case where -6xT is the oldest block in the window and -6xT is also the 2nd to most recent block, causing the most recent block to be +7xT.

This method is if you are not going to allow a signed integer for solvetime (otherwise use method 6). It's good for all algorithms. Method 6 is more accurate and retains symmetry and is preferred.

Superficially it seems like method 2 because the bad timestamp will be reported with a solvetime of zero, but it does not have the catastrophic exploit.

In code it can be done like this:

# Idea from Neil (kyuupichan)

# in case several negatives were in a row before our N window:

# numbering is "blocks into past", -1 = most recently solved block

# The next line could have been just -N-1, but checking more reduces potential small error.

prev_max = max(timestamp[-N-1],timestamp[-N-2],timestamp[-N-3],timestamp[-N-4])

for i=-N to -1

max_timestamp = max(timestamp[i], prev_max)

solvetime[i] = max_timestamp - prev_max

prev_max = max_timestamp

.....

For N in a low range like N=60 combined with a coin that allows timestamps up to 24xT ahead of median of peer node time, the above allows an exploit for getting 15 blocks at ~35% below average difficulty (see further below). The following is a fix for WHM (and WT-144) that retains the above idea, but greatly blocks the exploit without slowing the ability of the difficulty to drop with normal speed if hashrate goes to, say, 1/3.

for i=-N to -1

prev_max_timestamp = max(T[i-1],T[i-2],T[i-3],T[i-4],T[i-5],T[i-6],T[i-7] )

solvetime[i] = T[i] - prev_max_timestamp

if solvetime[i] > 7*T then solvetime[i] = 7*T

...

In EMA's we do it similarly:

L = 7 # limits forwarded stamps

maxT=timestamp[height-7-1]

for ( i = height - 7 to i < height ) { maxT = max(maxT, timestamp[i]) }

ST = timestamp[height] - maxT

ST = max(T/200,min(T*7, ST))

The 7 is chosen based on being a fairly rare event, but also fairly tight choice so that N for the EMA-JE can be N=40 without causing a big problem.

Describing the problem if limit=7 is not used: imagine solvetimes are all magically 1xT in order to simplify the math. Now say a 20% miner comes on and always assigns the max allowed timestamp:

Timestamps:

1,2,3,4,(5+12),6,7,8,9,(10+12)...

Solvetime without using the "7"

1,1,1,13,0,0,0,0,5,0,0,0,0,5,..repeat

Ignoring the 4 startup blocks, avg ST is 1 like it should be. The SMA (with a loop), WHM, and EMA-JE with N=60 (which is like EMA-Z with N=30) all end up at about 22% below the correct difficulty for as long as the miner does this. The 13 triggers a 30% drop in EMA and WHM (18% in SMA) and the 5's slow its recovery. The 5's are actually 4 in the steady state because the difficulty dropped. They are even lower because low difficulty attracts more hash power, so steady state is not as bad as I've said. My point is that there is a recovery in 30 blocks, but there are 15 blocks obtained at an average of 25% too low in WHM and EMA and 15% in SMA.

Monero clones may allow +24xT timestamps and with WHM N=60, this is a disaster. My testing indicates about 25 blocks at 35% below correct difficulty in WHM and EMA-JE with N=60 (EMA-Z N=30). Some clones of other coins may keep a rigid 2 hour max while reducing the T, so that a T=120 seconds coins with a 2-hour max ahead of the median of node peer time would allow a +60xT.

Here's the scenario with the limit=7 protection, assuming the worse situation where +24xT is allowed:

timestamps:

1,2,3,4,(5+24),6,7,8,9,(10+24),11,12,13,(14+24)..

solvetimes with "7" protection:

1,1,1,8,0,0,0,0,5,0,0,0,0,5...

For WHM and EMA, there are 15 blocks obtained at only 15% below the correct difficulty. So it's better under worse circumstances.

The way the 7 is used above "retains symmetry". It limits the jump which makes a smaller N more palatable in the presence of timestamp manipulation. "Retaining symmetry" means it will come back down to the correct value. Making the 7 smaller in the loop than in the max limitation enforced at the end would have caused the difficulty to be below the correct value for a few blocks.

In the algos I've refined the above idea to make the "7" range from 5 to 9. Really, using 5 is problematic it's need to prevent a 20% in an N=40 algorithm every time there's a bad timestamp. So Method 6 is really preferred.

An SMA that simply subtracts first and last timestamps in the window and nothing else would again have much less error in D from bad timestamps, limited to even less than method 2. In this case: N/(N-1) is the max of raising difficulty to N/(N+13) = 18% drop for N=60 for one block (it then immediately corrects when an accurate timestamp comes in). Then when that block exists the window, D rises by 18% for 1 block. so it seems like doing nothing works really well, but method 4 is a way you can prevent the single-bad-timestamp cases from having an effect if you don't mind delaying your response by 1 block: See method 4.

There is another way to stop the single case in SMA's (that subtract first from last timestamps). They could use something like the limit=7 idea and apply it to both the beginning and end of the window to protect as the bad time comes into and out of the window. EMA and WHM don't give hardly any weight when it leaves their "window", so it's not needed there. Here it is for just 4 blocks.

# numbering is "blocks into past"

begin_timestamp =max(timestamp[-N-1], timestamp[-N-2], timestamp[-N-3], timestamp[-N-4] )

end_timestamp = max(timestamp[-1], timestamp[-2], timestamp[N-3], timestamp[N-4] )

total_solvetime = end_timestamp - begin_timestamp

I learned this from BCH's new DAA. For SMAs that subtract first and last timestamps, do this:

begin_timestamp =median(timestamp[-N-1], timestamp[-N-2], timestamp[-N-3] )

end_timestamp = median(timestamp[-1], timestamp[-2], timestamp[N-3] )

I can't logically justify this because it only protects against single bad timestamps when they would not cause much problem anyway and delays response by 1 block. But I love it for some reason, and it inspired the last part of method 3.

You can put symmetrical limits on difficulty changes from the previous block. There's no reason to do this, but I wanted to mention it. I think it's not hardly different than method 6. This is what MANY coins should have used instead of copying Digishield's POW limits that are useless and cause harm if you try to make Digishiled respond faster. This allows the algorithm to change as fast as it can in the presence of a specified-size hash attack starting or stopping, but not faster.

D = calculated D for next block to be considered for limiting

X = 10 # max expected size of "hash attacks" as multiple of bash hash rate

limit = X^(3/N) # 3 for WHM and EMA. Use 2 for SMA

if ( D > prev_D*limit) { D=prev_D*limit }

if ( D > prev_D/limit) { D=prev_D/limit }

Or it can be made to act as if method 6 timestamp limits are in place.

limit = 1+max_allowed_solvetime/N

if ( D > prev_D*limit) { D=prev_D*limit }

if ( D > prev_D/limit) { D=prev_D/limit }

This is the best method if the future time limit determined by median of nodes is not reduced as described at very top.

When a bad timestamp comes through, it can be allowed it to "do its damage" with almost no problem as long as negative solvetimes are allowed. If a forward time is used to try to lower difficulty, the next accurate timestamp that comes through will erase its effect completely in all algorithms. And vice versa if a bad reverse timestamp comes in. Monero clones may allow a 24xT forward time to come in and if the algo uses N=24, then difficulty can be cut to 1/4 in the next blockwhen using the low N's I recommend for T=600 second coins. It can even throw a negative in EMA-Z A limit of 8xT as max solvetime after previous timestamp did not hurt performance much (see chart below). If the limits are not symmetrical, an exploit is possible. If you're using an SMA or Digi v3 for some reason, keep in mind the effects are reversed when the bad timestamp leaves out the back of the window.

if ST > 6*T then ST=6*T

if ST < -5*T then ST= -5*T

Limiting the solvetime makes D slower to drop if there is a big decrease in hashrate. The plots below show what happens without and then with the 8xT limit when hashrate drops to 1/50th with the EMA-Z. This shows performance is not hurt much.

This shows the benefit in a live coin of allowing the negative solvetimes. Graft was using method 3, which is the second best method.

If there was good real-time clock such as all nodes keeping a good universal time, we could theoretically make the network synchronous like a computer relying on a clock pulse and do away with consensus. Synchronous networks do not have FLP or Byzantine problems because there is no need for consensus. In short, every node would have a good universal clock accurate to within a few seconds, and there would be an agreed-upon clock cycle with an "on time" when new transactions are accepted by nodes, and an "off time" to wait for all nodes to get and validate all transactions. It takes about a minute for 0.5 MB blocks to propagate to 90% of nodes, so the off time might need to be 5 minutes. All nodes would accept all valid transactions within the window, but they have to each independently determine what the acceptable window is by their independent but accurate clock (independent so they all do not all use the same source for time which would be an attack-able 3rd party). Then transactions would be sorted into a block and hashed like a regular block chain. Nodes do not theoretically need to ask other nodes anything. They just share transactions. There are hairy details (such as straggling transactions on the edges of the "on window" and re-syncing to get the right block chain when rejoining the network) that would require some asking of other nodes which is the beginning of trusted peers or consensus that only POW has solved. But CPUs work without consensus and with "large" (nanosecond) delays. To what extent are nodes not like a disparate groups of transistors on a CPU waiting on electrons (transactions) to arrive? Nodes going online and dropping out is like only some of the transistors turning off and on, so the analogy even works to show the problems.

Best algorithm:

WHM difficulty algorithm

I've been careful to select the best N and adjustment factor (to get the correct average solvetime) for this algorithm. The next two algorithms are very nearly as good, but the selection of N and adjustment factor are only optimal for T=120 second target solvetimes.

A close 2nd with a lot less code:

Simple EMA difficulty algorithm

Improved version of Digishield has more stability than Simple EMA, but is a little slower. The Simple EMA page shows how to model the EMA to be more like Digishield in order to give what seems to be the same results.

A more advanced form of the Simple EMA, with no advantage other than being more precise. This article also covers every aspect of EMAs.

EMA-Z

New algorithm that might be better but is more complicated:

Dynamic EMA difficulty algorithm

This idea could solve a lot of problems, but bad timestamps arguably prevent it from being better because they force us to require a certain minimum number of blocks, which severely limit it where it is most needed.

Extending EMA algorithm to a PID controller so you can tweak it to have characteristics very different from other algorithms. But overall, it seems to not be adjustable to anything better than the EMA, just different.

PID controller difficulty algorithm

Masari coin (Monero clone) has implemented the WHM N=60 and so far appears to be an incredible success.

See also

Selection of N (more important than choice of algorithm)

Introduction to difficulty algorithms

Comparing algorithms,

Handling bad timestamps.

Difficulty performance of live coins

Hash attack examples

Dark Gravity Wave problems:

2 and 3 cause solvetime to be 6% longer than expected.

# Simple moving average difficulty algorithm

# timestamps[1] = most recent timestamp

next_target = average(N past targets) * (timestamps[1]-timestamps[N+1])/(N*desired_block_time)

Instead of average of past N targets, DGW does this:

# Dark gravity wave "average" Targets

avgTarget = Target[1];

for ( i=2 ; i <= N; i++) {

avgTarget = ( avgTarget*i +Target[i] ) / (i+1) ;

}

There's nothing in the comments to explain what this is supposed to be doing, so I expanded it out for i =2 to 4 (N=4). Target[1] = T1 = previous block's target and T4 is 4th block in the past.

which simplifies to:

This is just an average that gives double weight to the most recent target.

Here's the actual code.

for (unsigned int nCountBlocks = 1; nCountBlocks <= nPastBlocks; nCountBlocks++) {

arith_uint256 bnTarget = arith_uint256().SetCompact(pindex->nBits);

if (nCountBlocks == 1) {

bnPastTargetAvg = bnTarget;

} else {

// NOTE: that's not an average really...

bnPastTargetAvg = (bnPastTargetAvg * nCountBlocks + bnTarget) / (nCountBlocks + 1);

}

if(nCountBlocks != nPastBlocks) {

assert(pindex->pprev); // should never fail

pindex = pindex->pprev;

}

}

And what it should be:

for (unsigned int i = 1; i <= nPastBlocks; i++) {

arith_uint256 bnTarget = arith_uint256().SetCompact(pindex->nBits);

bnPastTargetAvg += bnTarget/nPastBlocks; // simple average

pindex = pindex->pprev;

}

The problem is that there is a sweet spot in attacking, shown here:

==================

Come back to check before doing any pull or merge. Code compiles and is currently on 2 to 5 testnets. Hopefully the testnets will concur with my spreadsheet today 5/22/2018.

Change history:

5/21/2018:

5/22/2018:

There were some instances where LWMA was not performing optimally see LWMA failures. This is an attempt to solve the problem. Large coins should use the Simple EMA. This one should be the best for small coins. This supersedes LWMA, D-LWMA, and Dynamic EMA.

// Cryptonote coins must make the following changes:

// const uint64_t CRYPTONOTE_BLOCK_FUTURE_TIME_LIMIT = 3xT; // (360 for T=120 seconds)

// const size_t BLOCKCHAIN_TIMESTAMP_CHECK_WINDOW = 11;

// const unit64_t DIFFICULTY_BLOCKS_COUNT = DIFFICULTY_WINDOW + 1

// Make sure lag is zero and do not sort timestamps.

// CN coins must also deploy the Jagerman MTP Patch. See:

// https://github.com/loki-project/loki/pull/26#event-1600444609

Slipstream EMA Description

Slipstream EMA estimates current hashrate in order to set difficulty to get the correct solvetime. It gives more weight to the most recent solvetimes. It is designed for small coin protection against timestamp manipulation, hash-attacks, delays, and oscillations. It rises 10% per block when an attack begins, so most attacks can't benefit from staying on more than 2 blocks. It uses a very fast Exponential Moving Average to respond to hashrate changes as long as the result is within a +/- 14% range around a slow tempered Simple Moving Average. If it goes outside that range, it switches to a medium-speed EMA to prevent it from rising too high or falling too far. Slipstream refers to it having a strong preference to being "behind" the SMA within +/- 14%. It resists going outside that range, and is anxious to return to it.

// Slipstream EMA difficulty algorithm

// Copyright (c) 2018 Zawy

// EMA math by Jacob Eliosoff and Tom Harding.

// https://github.com/zawy12/difficulty-algorithms/issues/27

// Cryptonote-like coins must see the link above for additional changes.

// Round Off Protection. D must normally be > 100 and always < 10 T.

// This is used because some devs believe double may pose a round-off risk.

double ROP(double RR) {

if( ceil(RR + 0.01) > ceil(RR) ) { RR = ceil(RR + 0.03); }

return RR;

}

difficulty_type next_difficulty(std::vector<std::uint64_t> timestamps,

std::vector<difficulty_type> cumulative_difficulties, size_t target_seconds) {

// After startup, timestamps & cumulative_difficulties vectors should be size N+1.

double T = target_seconds;

double N = DIFFICULTY_WINDOW; // N=60 in all coins.

double FTL = CRYPTONOTE_BLOCK_FUTURE_TIME_LIMIT; // < 3xT

double next_D, ST, D, tSMA, sumD, sumST;

// For new coins height < N+1, give away first 4 blocks or use smaller N

if (timestamps.size() < 4) { return 100; }

else if (timestamps.size() < N+1) { N = timestamps.size() - 1; }

// After startup, the following should be the norm.

else { timestamps.resize(N+1); cumulative_difficulties.resize(N+1); }

// Calculate fast EMA using most recent 2 blocks.

// +6xT prevents D dropping too far after attack to prevent on-off attack oscillations.

// -FTL prevents maliciously raising D. ST=solvetime.

ST = std::max(-FTL, std::min(double(timestamps[N] - timestamps[N-1]), 6*T));

D = cumulative_difficulties[N] - cumulative_difficulties[N-1];

next_D = ROP( D*9*T / (8*T+ST*1.058) );

// Calculate a 50% tempered SMA.

sumD = cumulative_difficulties[N] - cumulative_difficulties[0];

sumST = timestamps[N] - timestamps[0];

tSMA = ROP( sumD*2*T / (N*T+sumST) );

// Do slow EMA if fast EMA is outside +/- 14% from tSMA. 0.877 = 1/1.14.

if (next_D > tSMA*1.14 || next_D < tSMA*0.877) {

next_D = ROP( D*28*T / (27*T+ST*1.02) );

}

return static_cast<uint64_t>(0.9935*next_D);

}

Notice how much higher Digishield goes, causing delays.

I'm against a dev such as myself selecting any constant in difficulty algorithms. It should be an objective setting. But due to the non-linearity of of miners jumping on or off based on some rough threshold (like +/- 25%), and based on miners being able to change behavior when the algorithm can't, it's mathematically difficult to develop an algorithm that can figure out the best settings. It seems to need a an A.I. Lacking the skill, I've needed to rely on experience and observation to choose several settings. These are supposedly close to what an A.I. would conclude Some of the constants are not chosen by me other than to do the correct math, but the important ones are. The important ones are 6xT ,+/- 14%, N=60, 50%, N=28, and N=9.

subtitle: My White Whale

Pre-LWMA

In 2016 I worked on trying to improve Zcash's difficulty algorithm with many different ideas. Nothing came of it except for wasting @str4d and @Daria's time. During this time I posted an errant issue to Monero recommending a simple moving average with N=17. It was errant because N=17 was much too fast which also means it randomly varies a lot. It attracts constant on-off mining by dropping 25% too low on accident all day, everyday. Karbo coin adopted it to stop terrible delays resulting from the 24-hour averaging of the default cryptonote algorithm. It saved their coin, they were grateful, and other coins noticed. I found out about Karbo 6 months later when Sumo had to fork for the same reason, adopted it with minor modifications, and contacted me. Karbo had asked for help earlier, but I was busy and didn't respond. Then more CN/Monero/Forknote/Bytecoin clones noticed and copied either Sumo and Karbo. It was known as Zawy v1 but it was no more than a simple moving average. At the time we were not aware that it was not as good as the Digishield Zcash adopted.

Karbo kept me interested in difficulty algorithms in early 2017, testing every idea I had, showing interest, and providing a bouncing board. I was unable to find anything better than a simple moving average, but I learned how to identify good and bad algorithms. BCH (after their EDA disaster) used a good simple moving average seemingly based on my inability to find something better and may have called it my idea, but it's just BTC's algo converted to a rolling average and a much smaller N. They chose N=144 instead of my N=50 and it has worked good for them. They used a symmetrical limit I promoted and devised an interesting median of 3 method (@deadalnix?) for ignoring single bad timestamps at a cost of a 1-block delay.

LWMA discovery

In July 2017, @dgenr8 showed me his WT-144 being tested for BCH that used an LWMA in difficulty. My Excel spreadsheet "difficulty algorithm platform" immediately declared it a winner. I had tried it in 2016 and 2017 on difficulty/solvetime values to get next_difficulty and it didn't work. He applied the LWMA function to solvetime*target values to get next_Target. I see his sound logic in doing it on those values (each target corresponds to each solvetime, so keep them together), but in trying to understand why his

nextTarget = LWMA(STs*targets) / T (ST = solvetime, T=target solvetime)

was so much better than my old testing of

nextDifficulty = LWMA(difficulties/STs) * T

I thought a lot about averaging and came to believe the following should be better:

nextTarget = avgTarget*LWMA(STs) / T

which is the same as

nextDifficulty = harmonicMeanDifficulties/LWMA(STs) * T

Testing revealed this to be just enough better (not greatly, but measurably) that I abandoned WT-144.

Dgenr8's method made me realize simple moving averages like this

next_Target = average(ST)\*avg(target)/T

work better and are more precise than

next_Difficulty = avg(D) * T / avg(ST) * 0.95

Intuitively speaking it should have been more clear because the STs vary a lot, hitting a lot of solvetimes below the average and small values in denominator cause big changes. The 0.95 is a correction factor for N=30 to get the correct solvetime and it gets small as N increases, nearing 0.99 at N=100. The effect is more clearly seen in a different way. This: next_D = T*avg(D/ST) gives terrible results but this next_D = T/avg(ST/D) gives perfect results. The first is avg(1/X) while the 2nd is the correct 1/avg(X). BTW, 1/avg(1/D) = harmonicMean(D).

EMA Discovery

@jacob-eliosoff noticed our work on trying to get BCH a new DA and suggested a specific type of EMA. He seemed to derive it from past experience and intuition in an evening or two and maybe solidified his thinking from a paragraph in Wikipedia's moving average article that seems to have been there only by chance. It always gives perfect solvetimes with any N, so it's the best theoretical starting point. Dgenr8 suggested I try to combine it with an LWMA but I didn't try it because I could see it looked already to be about the same thing, just exponential instead of linear. Dgenr8 then did it himself, and it came out to be almost exactly the same (to our surprise) but his version could handle zero and negatives. He had basically inverted it and come up with something that has deeper theoretical significance if not a little more accuracy. It was yet again showing working in terms of target is mathematically more correct than working in terms of difficulty.

The EMAs do not do as well LWMA in my testing. One thing that makes LWMA shine is its ability to drop quickly after a hash attack. The only avenue for improving difficulty algorithms is to somehow getting one to rise as quickly as the LWMA falls without causing other problems. There are ways to do better but would require substantial changes (such as @gandrewstone's idea to allow difficulty to fall if a solvetime is taking too long and Bobtail that collects the best hashes for each block, not just one, originally for reasons other than difficulty). [ update: I went full throttle on andrewstones idea, making symmetrically and theoretically perfect with EMA. See "TSA article" ]

The EMA is better than the original LWMA but not quite as good as the new one. Jacob recommended using the EMA because it could be simplified with an e^x = 1 + x substitution in addition to not needing a loop. I opted to "advertise" the more complicated LWMA. I wanted to be sure we had the best for small coins, especially in its ability to drop after an attack. After showing them the results of a more complicated Dynamic LWMA, devs already aware of complexity problems want me it anyway (it switches to smaller N during hash attacks).

I found a section in a book that briefly described how to use the EMA as a PID controller and employed it. This is something many people have considered. But, as I expected for theoretical reasons, it could only do as well as our other algorithms, not better. But like the others, it acts in its own interesting way.

The Stampede for LWMA

After Masari rapidly forked to be the first to implement LWMA in early December 2017, there was a stampede to get it to stop new catastrophic attacks that were occurring from Nicehash if not ASICs that people were not yet aware of. Zawy v1 was no longer sufficient protection as the oscillations all day were bad. Bigger coins on the default CN algo were beginning to find it as unacceptable as the smaller coins always had. Now (5 months later) there are over 30 coins who have implemented LWMA or are about to. About 1 coin per 3 days is getting it.

History of LWMA issues & improvements

Masari was in a rush and therefore did not want to redo variable declarations to allow negative solvetimes. @thaer used if ST < 1 then ST =1 which allows a catastrophic exploit. After seeing this in his code, I immediately showed him @kyuupichan's (?) method of handling out-of-sequence timestamps. Two weeks later he forked to do the repair. Unfortunately, other coins had copied his initial code without us knowing it which led to catastrophic exploits with one coin losing 5000 blocks in 2 hours.

Cryptonote coins sometimes kept old Cryptonote code that caused problems. One problem was a sort of the timestamps before LWMA was applied which is a milder form of the problem above. Another problem was keeping in the 15 block lag which is basically a 15 block gift to an attacker, making LWMA seem slow to respond. Cut should also be changed to zero.

Several coins did not change their N. It appears they just briefly read about it and forked to implement it. One used N=17 left over from Karbo and Sumo. Another left N = 720 from CN default. Another used N=30. All of these were clearly not as good as the recommended N =~ 60.

In December I told BTG about there not being any fix to > 50% miners who apply a bunch of forwarded timestamps. My +7/-7 limits in the code only stopped < 50% bad timestamps. I then realized the FTL could be lowered to stop even > 50% attacks from causing harm. I started telling LWMA coins to lower their FTL and shortly thereafter the first attacks using bad timestamps on coins who had not changed the FTL began. They all had to fork ASAP to correct it. Some have not. Coins copying others' LWMA without changing their FTL is a continuing threat.

IPBC copied my recommended LWMA code which was a Karbo version with some variable declaration problems corrected by Haven. IPBC's testnet ran fine. But like most other coins, they did not know how to send it out of sequence (forwarded) timestamps in order to generate negative solvetimes. Soon after they forked, a negative solvetime came though and difficulty went to zero because the denominator was really high. A line of code attempted multiplication of a signed and unsigned integer, i.e. : double = size_t * int64_t. The high bit for sign in int64_t was treated as a really large number. This is a very basic mistake 3 of us should have caught before IPBC tripped over it and had to debug the code to identify the problem. Intense did not see this change and has to fork a 3rd time (first time they had the old Masari code). I feel responsible for half the forks and bloating people's code with multiple LWMA's. The main problem is that too many needed it too fast.

A minor but ugly bug. The timestamp and cumulative difficulty vectors in CN are sized to N before they're passed to LWMA. The fix is to set difficulty_window to N+1 in the config file, then get N inside LWMA from N=difficulty_window - 1. In some LWMA versions out there originating from the initial Masari code, the LWMA is using N=59 when it looks like N=60. There's a quiet resetting of N at the top of the LWMA code. Further below that, there's an "N+1" that would have been correct if N were correct, but since it's not, it should result in a 1/60 = 1.7% error in difficulty. But it turns out that due to integer handling, there's a round off that should have caused some error, but instead it corrects the other error if N is an odd number.

As discovered in the recent Graft fork, even with the FTL = 500 fix, big miners can still play havoc with CN coins due to a bug in CN. They can "own the MTP" which can result in blocking all other miners and pools from submitting blocks if the "Jagerman MTP Patch" is not applied ( @jagerman ). This is a not an LWMA problem but something that fixes a vulnerability in all DAs if a miner has > 50% power.

Sometimes devs want to make improvements to the LWMA. Normally they want to make it respond faster by lowering N. Graft tried an ATAN() function. All attempts so far have lowered its performance. I'll change the LWMA page if a modification shows an improvement. A Dynamic LWMA is in the works.

With the FTL limit lowered, I can remove a +7xT timestamp limit. This will allow it to drop faster after bad hash attacks without losing bad timestamp protection. I have been hesitant to remove the +7 because if a coin does not use it and does not set their FTL correctly, a catastrophic timestamp exploit is allowed even with < 30% hashpower. I could make the code do an if / then based on FTL setting to set or remove +7xT limit. I have opted to change the -7xT limit to -FTL because negative solvetimes should only occur if there was a fake forward timestamp. If there is a fake reverse timestamp, to drive difficulty up (there can be profitable reasons for this, not just malicious) then the tighter reverse limit helps.

A certain theoretical malicious attack is now stopped by using MTP=11 instead of 60.

Oscillations in LWMA start appearing. In March 2018 Niobio started LWMA and was showing an oscillation pattern. It soon subsided. Then BTC Candy switched from an SMA to LWMA and saw improvement, but it still had TERRIBLE oscillations. It was constantly on the verge of collapse small CN default coins, but somehow survived. Then Iridium started having moderate oscillation problems before it forked to a new POW. Then Masari forked to a new POW and started having moderately bad oscillations. The POW changes in Monero were appeared to be causing either Nicehash or Asics to start focusing on certain POWs which exposed LWMA's weaknesses: it needed to respond faster and not fall too far after an attack. Wownero and Intense started showing minor oscillations in May. About 15 other LWMA coins were still doing good. I tried a Dynamic LWMA and Slipstream EMA, both of which I later determined were likely to cause as many problems as they solved, so I abandoned them. Karbo, Technohacker, Niobio, and Wownero were already coding Slipstream and running testnets when I abandoned it. BTC Candy made a change about May 17 that is so far (only 6 days) working great for them. My review of their code indicates it may not help the smaller types of oscillations.

The oscillations continued on BTC Candy and Masari into June 2018, while being minor on other coins. Looking at it closely, it had the appearance of a single big miner who loves the coin, and is willing to constantly mine it at a higher difficulty than others. The truth is that the POW seemed to make it more profitable for a smaller goup like ASICs or Nicehash than GPU miners. The problem with Masari was repeatedly clear 5 to 10 times per day: big "miner" drove it up 20% to 30% in 10 to 20 blocks, then stopped. Since other miners were not present, that block took 7xT to 20xT to solve instead of 30% longer. Seriously clear: last 3 blocks took T/10 and the 4th one took 20xT. The long delay caused a 20% to 30% drop, and the miner came back, repeating the problem. Stellite did like BTC Candy and turned my "Dynamic LWMA" idea of triggering on a sequence of fast solve times into a fast rise without a symmetric fall which I was sort of opposed to on mathematical grounds. But the worst that can happen is that average solvetime will be a little too high and that will occur only if there are constant attacks. Their main net results were better than LWMA, so I experimented with many different modifications and came up with some a lot simpler than theirs and I believe better with LWMA-2.

In June 2018, four Zcash clones started looking into LWMA, so I refined BTG's code to be more easily used in those BTC-like coins. Over 50 coins have LWMA on mainnet or testnet. Several have LWMA-2 on testnet and are planning to switch to it in the next fork.

In September 2018 a > 50% selfish mine attack was performed on an LWMA that was able to lower difficulty and get 4800 blocks in 5 hours. It's the result of an error I made in handling negative solvetimes that was present most of LWMAs. The attack used a method I seem to have discovered and publicized 60 days earlier, but I did not realize the attack applied to my own algorithm. It still applies to LTC, BCH, Dash, and DGW coins, unless there is a requirement outside the DA to limit out-of-sequence timestamps more strictly than the MTP, or unless the MTP is used as the most recent block in the calculation. A different method is used in LWMA-3 and LWMA-4 so developers do not need to do work outside the algorithm, and so that there is not a delay caused by using the MTP protection like Digishield does (which adds to oscillations). LWMA-3 refers to coins that have the timestamp protection, and probably contain LWMA-2's jumps.

November 1. It looks like LWMA-2's 8% jumps (when sum last 3 blocks solvetimes < 0.8xT) may not have helped the NH-attacked coins. Lethean, wownero, graft, and saronite still look very jumpy and have ~10 or so blocks delayed > 7xT which normally almost never occur. Bitsum's LWMA-2 made things a lot worse because I did not realize until now that negative solvetimes make LWMA difficulty jump up a lot more. LWMA-4 has been modified to refine the LWMA-2 & 3 jumps to be more aggressive and yet a lot safer (not make things worse). It required a lot of work and makes the code a bit more complicated. Coins not needing it should stick with (fixed) LWMA. LWMA-4 also introduces using only the 3 most significant digits in the difficulty, forcing the rest to 0, for easier viewing of the number. Also, the lowest 3 digits will equal the average of the past 10 solvetimes, helping everyone to immediately see if there is a problem.

About January 2019: although LWMA 2, 3, and 4 seems better on most coins than LWMA-1, when there is a persistent problem like there has been on Wownero, they seem to make it worse. Also 2, 3, and 4 may bias the performance metrics to look better than they are. For these reasons I've deprecated all versions except LWMA-1.

May 16, 2019. I discovered lowering FTL to greatly reduce timestamp manipulation results in allowing a 33% Sybil attack on nodes that ruins the POW security. Many devs from many coins were involved in the FTL discussions specifically to determine if there was a reason not to lower it too much. I blame the original BTC code for not making the code follow its proof of security. If changing a constant affects the security of an unrelated section without warning, it's not good code. If your coin uses network time instead of node local time, lowering FTL < about 125% of the "revert to node time" rule (70 minutes in BCH, ZEC, & BTC) will allow a 33% Sybil attack on your nodes, So revert rule must be ~ FTL/2 instead of 70 minutes. If your coin uses network time without a revert rule (a bad design), it is subject to this attack under all conditions See: zcash/zcash#4021 for more details.

Problems I've been a part of

Other than wasting Zcash developers' time and sending Cryptonote clones down the path of Zawy v1 SMA N=17 when the default Digishield would have been better (or just lowering their N and removing the lag, which is basically what Zawy v1 was except N was too low) other problems I've been at least involved in:

Bitcoin Gold also suffered greatly from following my recommendation of converting their Zcash-derived Digishield to an SMA N=30. It may have worked fine if not substantially better than Digishield (with the default values but not an optimized Digishield), except there is a +16 / - 32% "POWLimit" in Digishield done in a way that is derived from BTC's 4x / 1/4 POW limit. I thought the POW limit was referring to a per-block allowable decrease in difficulty. The Digishield terminology implies the creators of the limit thought so too. It's checked and potentially applied to every block, and a 16% / -32% limit makes send, although I objected to it not being symmetrical. It would have been not nearly as bad if the limit were symmetrical. But I knew a 16% limit on D per block would rarely be enforced, so I acquiesced to keeping the limits in and not even bothering to make them symmetrical. Keep in mind BTG was trusting me to make the correct final decision and did exactly as I recommended. The result has been a constant but limited oscillation and a block release rate that is about 10% too fast. The 16% actually limits the per block increase to 0.5% to 1.5% exactly when it is needed the most. I had not tested it as it was coded, but tested my incorrect interpretation of it. It may have been OK, maybe better than Digishield, if the limits were symmetrical. THe limits are almost never reach in Digishield because it is too slow in responding to activate the limits, except in startup where it causes a 500 to 1000 block delay in reaching the correctly difficulty. The error is a much milder form of BCH's EDA's asymmetry mistake. They would have been fine if it were symmetrical, i.e. if the allowed increase were as fast as the decrease. Since BTG had reached $7B market cap, this was the biggest mistake in economic terms.

First error in LWMA: see item 1) in above history of LWMA. I didn't create the error, I found it pretty soon, and I got them to correct it ASAP. But I was involved. I did not actively watch to see what code they were writing. I was unable to stop other coins from copying it.

2nd and 3rd errors in LWMA: again see history above, items 2) and 3). Again, it is and is not my fault for the same reasons.

Item 4 in LWMA history was "caused" by LWMA being a lot faster. Like the BTG problem, a faster algorithm exposed an existing ... feature ... in someone else's code. It could only be exploited by a > 50% miner, so some (like a Monero commenter) would say it's not an issue we can or should worry about. However, > 50% miners are not intentionally harming coins unless they can find a way to lower difficulty while they are mining. I found the way to address this problem: simply lower the FTL. This issue also demonstrates something: > 50% "attacks" are daily life for possibly every coin that is not one of the top 20. Zcash is the 2nd largest for its POW and sees > 200% mining increases (66% miner(s)) come online to get 20 blocks at cheap difficulty at least once every day. Problems caused by > 50% miners can and should be addresses where it can. Getting back to my fault here: an unkind perspective is that LWMA caused this. I think a more accurate description is that by using the only way possible to protect small coins (making it faster) a problem was created and repaired, at a cost of making the newest adopters of LWMA fork twice. Technical detail on this problem describing why lower N exposes the problem: difficulty can be temporarily lowered to (N-FTL)/N where FTL is in terms of a multiple of the coin's T.

See item 5 in LWMA history. I didn't write the code, but I approved it, and a coin trusted my recommendation.

Item 10 in LWMA is something I was aware of from the very beginning, but did not carefully measure the extent of the problem. I thought it was a remote possibility. Again, it is only possible with a > 50% miner. Maybe I had not realized back then that > 50% mining is actually addressable.

Item 11. Four devs had started testnets on one of my ideas I had strongly promised would work, but then found out it had an exploitable hole. Several devs were good-spirited to tolerate LWMA-2 constantly being refined ... after I said it was definitely finished each time.

I recently noticed coins who need a lower D have an unexpectedly high Std Dev of their D, and vice versa. After a lot of testing and watching many live coins, it seems resilient. This can enable the algorithm to automatically adjust N to optimize performance.

This is a place holder for a possible future article on this.

this was for a scratchpad

See this page for LWMA-4

[ Edit: This article is a little dated. I now have all the equations in integer format and the solvetime limits limits should be -FTL and +6 (instead of +7 and -6) where FTL = future time limit nodes allow blocks to have and is 3xT as described on the LWMA page. Otherwise there is a timestamp exploit by > 50% miners that can greatly lower difficulty. ]

The simplest algorithm is the Simple Moving Average (SMA)::

next_D = avg(D) * T / avg(ST)

where D and ST are N of past difficulties and solvetimes. The N in the two averages cancel which can code and compute a little more efficiently as:

next_D = sum(D) * T / sum(ST)

Sum(D) is just the difference in total chain work between the first and last block in the averaging window. Sum(ST) is just the difference between the first and last timestamp. This is the same as finding your average speed by dividing the change in distance by the change in time at the end of your trip. I do not use this sum() method because it allows a bad timestamp to strongly affect the next block's difficulty in coins where future time limit is the normal 7200 seconds, and if T=120 and N=60 (in many of them).

The SMA is not very good because it is simply estimating the needed difficulty as it was N/2 blocks in the past. The others try to more accurately estimate current hash rate.

For whatever reason, using the average of the targets instead of the difficulties gives a more accurate avg solvetime when N is small. Since you have to invert the target to get the difficulty, this is the same as taking the harmonic mean of the difficulties.

# Harmonic mean aka Target SMA method

next_D = harmonic_mean(D) * T / avg(ST)

# or

next_D = max_Target/avg(Target) * T / avg(ST)

# or

next_Target = avg(Target) * avg(ST) / T

max_Target = 2^(256-x) where x is the leading zeros in the coin's max_Target parameter that allows difficulty to scale down.

The best way to handle bad timestamps without blocking reasonably-expected solvetimes requires going through a loop to find the maximum timestamp. There are other ways to deal with bad timestamps, but this is the best and safest.

# SMA difficulty algorithm (not a good method)

# This is for historical purposes. There is no reason to use it.

# height-1 = index value of the most recently solved block

# Set param constants:

T=<target solvetime>

# For choosing N and adjust, do not write these equations as code. Calculate N and adjust,

# round to integers, and leave the equation as a comment.

# N=int(40*(600/T)^0.3)

# adjust = 1/ (0.9966+.705/N) # keeps solvetimes accurate for 10<N<150

for (i=height-N; i<height; $i++) {

avgD += D[i]/N;

solvetime = timestamp[i] - timestamp[i-1]

avgST +=solvetime/N

}

next_D = avgD * T / avgST * adjust

# I've used avgD and avgST for math clarity instead of letting N's cancel with sumD/sumST

# H-SMA or Target-SMA difficulty algorithm

# Harmonic mean of difficulties divided by average solvetimes

T=<target solvetime>

# Calculate N round to an integer, and leave the equation as a comment.

N=int(40*(600/T)^0.3)

# height-1 = index value of the most recently solved block

for (i=height-N; i<height; $i++) {

sum_inverse_D += 1/D[i];

# alternate: avgTarget += Target[i]/N;

solvetime = timestamp[i] - timestamp[i-1]

# Because of the following bad timestamp handling method, do NOT block out-of-sequences

# timestamps. That is, you MUST allow negative solvetimes.

if (solvetime > 7*T) {solvetime = 7*T; }

if (solvetime < -6*T) {solvetime = -6*T; }

avgST +=solvetime/N

}

harmonic_mean_D = N / sum_inverse_D

next_D = harmonic_mean_D * T / avgST * adjust

# alternate: next_Target = (avgTarget * avgST) / (N*N*T) # overflow problem?

Digishield v3 has a tempered-SMA that works a lot better than simple SMA algos .... provided the 2 mistakes in Digishield v3 are removed (remove the 6 block MTP delay and the 16/32 limits). The tempering enables fast response to hashrate increases while having good stability (smoothness). Its drawback compared to the others is 2x more delays after hash attacks. Even so, it's response speed plus stability can't be beat by LWMA and EMA unless they use its trick of tempering. It cost them some in delays, but they still have fewer delays than Digishield. The root problem is that although Digishield uses a small N to see more recent blocks than an SMA, it's still not optimally trying to estimate current hashrate like LWMA and EMA. PID and dynamic versions of LWMA and EMA can also beat Digishield without copying it. But Digishield is not far behind anyone of them if stability is more important to you than delays.

# Zawy modifed Digishield v3's (tempered SMA)

# [edit this is very dated. New versions revert to the target method and does better timestamp limits. ]

# 0.2523 factor replaces 0.25 factor to correct a 0.4% solvetime error. Half of this

# error results only because I used a difficulty instead of target calculation which

# makes it an average D instead of the more accurate average target (which is harmonic mean of D.)

# This algo, removes POW limits, removes MTP, and employs bad timestamp protection

# Since MTP was removed. Height-1 = index value of the most recently solved block

T=<target solvetime>

# Recommended N for balance between the trade off between fast response and stability

# If T changes away from Zcash's T=150, the stability in real time will be the same.

# N=17 for T=150, N=11 for T=600

N=int(17*(150/T)^0.3)

for (i=height-N; i<height; $i++) {

sumD += D[i];

solvetime = timestamp[i] - timestamp[i-1]

if (solvetime > 7*T) {solvetime = 7*T; }

if (solvetime < -6*T) {solvetime = -6*T; }

sumST +=solvetime

}

sumST = 0.75*N*T + 0.2523*sumST # "Tempering" the SMA

next_D = sumD * T / sumST

I've tried modifying the tempering and I can't make it better. I've tried using the tempering in non-SMA algos but there was no benefit. I tried using harmonic mean of difficulties, but it did not help.

Re-arranging Digishield's math gives:

next_D = avg(17 D) * 4 / ( 3 + avg(17 ST)/T)

The EMA can be expressed in something that looks suspiciously similar. The exact EMA uses an e^x. But e^x for small x is very close to 1+x. This is actually a vanilla EMA that's not as exact. It parallels Digishield (but is substantially better:

next_D = prev_D * 36 / ( 35 + prev_ST/T)

The following are articles I plan to write (largely re-writing things that are already somewhere on GitHub.

Begin update: This article is long and complicated because I wanted to cover every possibility, such as RTT which I'm sure no one is going to push. To make this long story short, this is all I think a dev needs to know to implement it.

next_target = previous_target * (1 + st/600/N - 1/N )

st= previous solvetime

Clipping: An attacker with say 10% hashrate could set large forward times to lower difficulty to where he could get a lot of blocks and then submit them when that time arrives. He can't win a chain work race, but Mark L in a comment below was concerned this could enable spam if there is no clipping. Clipping could be done with max solvetime allowed like 7xT. This is the same as a max drop in difficulty. It should be a bit more than you normally expect to see if HR is constant. It can reduce the motivation for constant on-off attacks at a cost in slightly more delays depending on the size of HR changes but I was not been able to see a clear benefit from 6xT clipping after recommending it to a lot of alt coins. I recommended it because LWMA has a bigger problem of dropping too much when on-off mining leaves. There should not be any clipping on how much difficulty rises unless it is many multiples of the max drop. Otherwise a timespan limit attack is possible. To give an example of how clipping makes it harder to spam, a 10% HR miner could send a timestamp 333xT into the future and immediately drop an N=72 EMA to 1% of it's former difficulty. It would take 55 blocks if clipping is limited to 7xT and have a net cost of 12.4 blocks at the initial difficulty instead of just 1 block. So clipping in this example makes a spam attack 12x harder.

End update

A good difficulty algorithm can allow more time to consider a POW change. If BCH switches DAs, the best options seems to be the EMA (@dgenr8's improvement to @jacob-eliosoff's) such as the one @Mengerian is doing with bit-shifting is pretty much the same as @markblundeberg's re-invention of the perfect algorithm that Jacob once briefly considered (but not in the Real Time Target (RTT) form).

The following are all the things I know of that need to be considered in implementing an EMA.

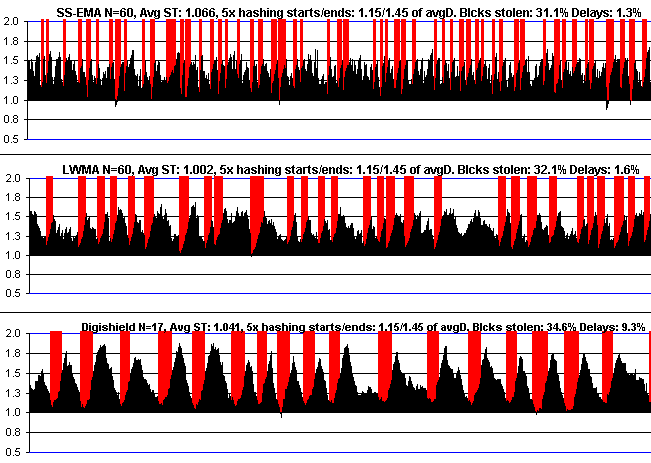

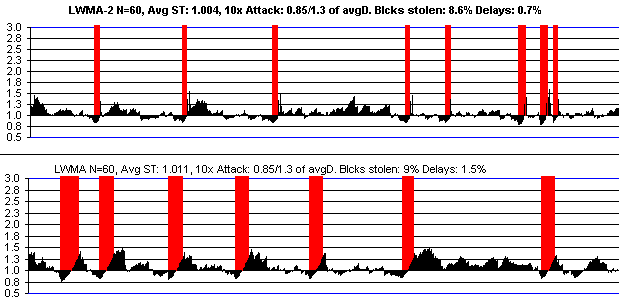

The following are testing results on the various options. The attack models BCH's current situation which is a fairly rigorous test compared to other possible settings. Other settings result in similar relative results. The target solvetimes were tweaked to give the same avg solvetime so to prevent an unfair advantage. All the algos except the RTT have an N setting that gives the same Std Dev in difficulty as SMA N=144 under constant hashrate.

The best way to a judge a good algorithm from these charts is to see if the blue lines (avg of 11 solvetimes) are not going up and down too much, and also for thin green bars which is how many blocks (not time) the attacker is getting before difficulty has risen above his "stop mining" point. The stolen metric is the time-weighted target the attacker faced verses the average. If it's 3% then his target was 3% higher than a dedicated miner (his difficulty was 3% lower). The delay metric is the sum of solvetimes in terms of target solvetimes that took 6xT, minus 2xT, expressed as a percentage of the total number of blocks.

SMA_ Target ST/avgST= 599/600.357 N= 144

attack_size: 600 start/start: 130/135, StdDev STs: 1.283

delays: 9.6% stolen: 5.4%

EMA with 2-block delay on timestamps

Target ST/avgST= 594/599.769 N= 80

attack_size: 600 start/start: 130/135, StdDev STs: 1.270

delays: 6.3% stolen: 4.4%

EMA (normal)

Target ST/avgST= 594/599.721 N= 80

attack_size: 600 start/start: 130/135, StdDev STs: 1.265

delays: 5.5% stolen: 3.3%

Marks RTT "EMA"

This is identical in results to a normal EMA using current block's solvetime instead of previous block's.

Target ST/avgST= 602/600.094 N= 80

attack_size: 600 start/start: 130/135, StdDev STs: 1.130

delays: 1.4% stolen: 0.7%

LWMA (looks same as normal EMA)

Target ST/avgST= 598/600.321 N= 144

attack_size: 600 start/start: 130/135, StdDev STs: 1.270

delays: 5.7% stolen: 3.4%

TSA (A slow normal EMA N=80 with a fast EMA N=11 RTT riding on top)

The negative stolen metric indicates it's costly to try to pick a low difficulty to being mining with a high hashrate. The blue + symbols are the target the miner actually had to solve. The purple solid line is the difficulty that goes on the chain. If you look closely, almost no + marks in the green areas (attacks) are below the average difficulty. This is because the big hashrate is doing a fast solve which causes difficulty to be higher. Notice the delays and blue line swings are not any better, but in practice they will a lot better because big miners will be much less likely to participate if a 2% loss like this is the outcome as opposed to the current 5.4% gain in SMA. I discuss this more in my TSA article.

Target ST/avgST= 595/599.93 N= 80 and M=11

attack_size: 600 start/start: 130/135

StdDev STs: 1.13

delays: 2.13% stolen: -1.23%

This shows the difference between an EMA with a 2-block solvetime delay (like the chart above) WITH a 1 block delay in targets. It's a disaster.

[update: the e^x forms I described in this issue in 2018 are unnecessarily complicated and they give almost identical results to ASERT. The simpler way to view this content is that the primary equation presented:

next_target = prior_target * (1+t/T/N-1/N)

is just an EMA where t=solvetime of previous block, T = desired block time, N is a "filter", and t/T is the estimate of how much previous target needed to be adjusted. To show this is just an EMA, Wikipedia's EMA: is:

S[i] = A*Y[i] + (1-A)*S[i-1]

Our estimated next difficulty based on previous solvetime and previous difficulty is

Y[i] = S[i-1]*adjustment_needed

which gives:

target[i] = A*target[i-1]*t/T + (1-A)*target[i-1]

Using, A = α = 1/N, this simplifies to the 1st Target equation above.

We should have guessed (and JE did) this could be improved with replacing the implied 1+x in the 1st equation with e^x to get the later-discovered-as-possibly-ideal ASERT. Wikipedia says EMA is a discrete approximation to the exponential function. So this leads us to relative ASERT:

next_target = prior_target * e^(t/T/N-1/N)

In an upcoming issue (it is now January 2022 in case I do not come back to link to it) I'll argue adjustment_needed should not be t/T but be

adjustment_needed = 1+ error =1 + e^(-median_solvetime/T) - e^(-t/T) = 1.5 - e^(-t/T)

In words, this adjusts based on the probabilities of the solvetimes we expect. It is the ratio of the observed to the expected solvetime probability for individual solvetimes. We expect to see a median solvetime which for an exponential distribution is e^(-t/T) * T= 0.5*T, not the long term average. A simple t/T does not take into account probabilities of the t's we expect. So the proper EMA from this assumption is

target[i] = A*target[i-1]*(1.5 - e^(-t/T) ) + (1-A)*target[i-1]

which is

next_target = previous_target * (1 + 0.5/N - e^(-t/T)/N )

and if we guess this should be in an ASERT-like form we can guess this is

next_target = previous_target * e^(0.5/N - e^(-t/T))

This is just replacing the error signal "t/T -1" in ASERT with 0.5-e^(-t/T). This works lot a better than ASERT in the sense of getting the same stability as ASERT with 1/3 the value of N. It results in 1/2 as many "blocks stolen" compared to dedicated miners during on-off mining at a cost of very slightly longer delays and avg solvetime being longer during the attack.

end update

Summary: This explores the theoretical origins of EMA algorithms. The only sensible one to use that does not have problem due to integer math, negative difficulties or zero solvetimes is:

simplified EMA-TH (floating point for clarity)

next_target = previous_target * (1+t/T/N-1/N)

next_difficulty = previous_difficulty / (1+t/T/N-1/N)

ETH does something like the above WITH the integer round off error and surprisingly does OK.

simplified EMA-TH (actual code for integer math with less chance of overflow problem in target)

k=10000;

next_target =(previous_target/ (k*N)) * (k*N+(k*t)/T-k)

next_difficulty =( previous_difficulty *k* N )/(k*N+(k*t)/T-k)

Bit shifting can be used to replace the above division.

Notation:

There are two different ideal forms of the EMA shown below that are equal within 0.02% for most solvetimes, and equal to within 0.005% on average. The differences between them all can be attributed to

e^x =~ 1+x for small x which results in the simple forms

1+x =~ 1/(1-x) when x is small which is the distinction between JE and TH.

JE and TH refer to Jacob Eliosoff's original version, and TH refers to a precise form of Tom Harding's simple version . The simplified JE-ema can result in divide by zero or negatives for smallish N, or if a big miner keep sending "+1" second timestamps that ends with an honest timestamp that throws it negative. The more precise e^x and "accurate" JE-ema versions do not have this problem and are slightly better than TH versions at small N. But it is very difficult to get the e^x versions to not have error that cancels the benefits when working in integer math. The e^x needs to accurately handle values like e^(t/T/N) which for t=1, T=600, and N=100 is e^(0.000017). Even after using e^(1E6*t/T/N) methodology the avg solvetime the error from floating point that I could not resolve was 1% plus there was another 1% error at N=100 from being able to let t=0 in these versions due to divide by zero.

ema-JE = prev_target / ( T/t+(1-T/t)*e^(-t/T/N) )

ema-TH = prev_target * ( T/t+(1-T/t)*e^(+t/T/N) )

The bottom of this article shows how this comes from common EMA terminology about stock prices. After Jacob Eliosoff derived or maybe guessed this from experience with EMAs and some thought, I later found out his result has applications in measuring computer performance

A e^x = 1+x+x^2 substitution can be used that gets rid of the e^x version, but it introduces a much greater potential for overflow. It

k=t/T/N

accurate ema-JE = prev_target / (1+1/N+k*(k/2-1-1/2/N)) // ** The most accurate one **

// To do the above in integer math, the follow can be used but has large overflow potential:

accurate ema-JE = (prev_target * (N*T*N*T*2)) / (2*T*N*T*N+2*T*T*N+ST*(ST-2*T*N-T))

// But a difficulty version does is not a problem i D is large:

accurate ema-JE = (prev_target + prevD/N + (prevD*ST*ST)/(N*T*N*T*2) - prevD*ST/T/N -(prevD*ST)/(N*N*T*2)

accurate ema-TH = prev_target * (1-1/N+k*(k/2+1-1/2/N))

With the simpler substitution e^x = 1+x, another near-perfect algorithm is possible, as long as N is not smaller than about < 20. These can have zero and divide by zero problems

simplified ema-JE = prev_target * N / (N-t/T+1) // Do not use this one.

simplified ema-TH = prev_target * (N+t/T-1) / N // This is the most sensible one. Simpler small error.

(just inverse for difficulty versions)

Problems

As PID Controller

The EMA might be viewable as a PID controller that takes the Poisson distribution of the solvetimes into account. A PID controller is a*P+b*I+c*D where P is present value, I is the integral (sum) of previous values, and D is the derivative (slope) of recent values. So a PID controller is taking present, past, and an estimate of the future into account. To see it possibly here, the ema-JE can be written in the form:

next_difficulty = prev_D*T/t*(1-e^(-t/T/N) + t/T*e^(-t/T/N) )

1-e^(-t/T) is the single-tail probability of a solvetime being less than t.

t/T*e^(-t/T) is the probability density function of solvetimes. It is also the derivative of the former.

The next_D (aka 1/(target hash)) keeps a running record of previous results, so in a sense it's like a summation. The summation can be seen if you recursively substitute prev_D with the equation that came before it, and expand terms. I can't see how that would lead to the PID equation, but I wanted to point out the 3 elements of a PID controller seem to be present. A similar statement can be made about the WHM. The SMA and Digishield seems to be like a PI controller since it does not take the slope into account.

The EMA can be the starting point of a fully functional PID Controller that does not work noticeably better than the above. This is because PID controllers are needed in systems that have any kind "mass" with "inertia" (which might be an economy or public opinion, not merely restricted to the physics of mass). The "inertia" leads to 2nd order differential equations that can be dealt with by PID controllers. Difficulty that needs to have faster adjustment as might be needed by a PID controller does not have any inertia. Long-term dedicated miners have inertia, but the price/difficulty motivation of big miners jumping on and off a coin (the source of a need for a controller) is a non-linear equation that invalidates the relevance of PID controllers.

I've been promoting this algorithm, but after further study, the

LWMA and Simple EMA are the best

Links to background information:

Original announcement of the algorithm

Handling bad timestamps

Determining optimal value of N

Other difficulty algorithms

All my previous inferior attempts

Note: there is a 2.3 factor to make the N of this algorithm match the speed of the WHM.

# EMA-Z Difficulty Algorithm

# credits: Jacob Eliosoff, Tom Harding (Degenr8), Neil (kyuupichan), Scott Roberts (Zawy)

# Extensive research is behind this:

# Difficulty articles: https://github.com/zawy12/difficulty-algorithms/issues

# https://github.com/kyuupichan/difficulty

# https://github.com/seredat/karbowanec/commit/231db5270acb2e673a641a1800be910ce345668a

# ST = solvetime, T=target solvetime, TT=adjusted target solvetime

# height - 1 = most recently solved block.

# basic equation: next_target=prev_target*(N+ST/T-1)/N

# Do not use MTP as the most recent block because shown method is a lot better.

# But if you do not MTP, you MUST allow negative solvetimes or an exploit is possible.

# Ideal N appears to be N= 20 to 43 for T=600 to 60 by the following formula.

# ( Note: N=20 here is like N=46 in an SMA, WHM, and the EMA-D )

# The following allows the N here to match all other articles that mention "N"

# An EMA with this N will match the WHM in response speed of the same N

N = int(45*(600/T)^0.3)

# But I need to convert that N for use in the algorithm.

# M is the "mean life" or "1/(extinction coefficient)" used in e^(-t/M) equations.

M = int(N/2.3+0.5)

# WARNING: Do not use "if ST<1 then ST=1" or a 20% hashrate miner can

# cut your difficulty in half. See timestamp article link above.

ST = states[-1].timestamp - states[-2].timestamp;

prev_target = bits_to_target(states[-1].bits);

k = (1000*ST)/(T*M);

// integer math to prevent round off error

next_target = (1000*prev_target * (1000-1000/M+(k*k)/2000+k-k/(2*M) )/1000)/1000;

These are my comments each section of Orbital coin's difficulty algorithm. I've simplified the code for readability. There's no missing code.

// POW only

// ####### Begin OSS algorithm #########

nTargetSpacing = 360;

nTargetTimespan = nTargetSpacing * 20

nActualTimespanShort = timestamps[-1] - timestamps[-5];

nActualTimespanLong = timestamps[-1] - timestamps[-20];

/* Time warp protection */

nActualTimespanShort = max(nActualTimespanShort, (nTargetSpacing * 5 / 2));

nActualTimespanShort = min(nActualTimespanShort, (nTargetSpacing * 5 * 2));

nActualTimespanLong = max(nActualTimespanLong, (nTargetSpacing * 20 / 2));

nActualTimespanLong = min(nActualTimespanLong, (nTargetSpacing * 20 * 2));

The above prevents it from changing very much if the last 5 or 20 blocks were 2x too fast, or 2x too slow. For N=5 or 20, this is not good. nActualTimespan limits should be to prevent big problems, but with N=5 and 20, it gets activated a low. It limits how fast the algorithm can change, especially during a hash attack. BCH uses the same 2x factor, but they have N=144 and more importantly they are a large coin with stable hash rate. So it will not get activated unless there is a catastrophic change. BTC uses 4x as protection for catastrophic situations, again with a much larger N=2016.

/* The average of both windows */

nActualTimespanAvg = (nActualTimespanShort * (20 / 5) +

nActualTimespanLong) / 2;

This above gives equal weighting to the short and long windows. This is good It's like a really mild form of LWMA. I show at the bottom that it's good, so this idea could be used in Digishield coins to improve them.

/* 0.25 damping */

nActualTimespan = nActualTimespanAvg + 3 * nTargetTimespan;

nActualTimespan /= 4;

This is Digishield's 75% dampening, not 25%. It is saying "we believe the previous difficulty was 75% correct and we'll give the recent timestamps 25% vote on what the next difficulty should be. It's better than simple moving averages, but not as good as EMA and LWMA.

/* Oscillation limiters */

/* +5% to -10% */

nActualTimespanMin = nTargetTimespan * 100 / 105;

nActualTimespanMax = nTargetTimespan * 110 / 100;

if(nActualTimespan < nActualTimespanMin) nActualTimespan = nActualTimespanMin;

if(nActualTimespan > nActualTimespanMax) nActualTimespan = nActualTimespanMax;

Since the above comes after the 75% Digishield dampening, it's like limits that are 4x these limits when expressed in terms of the previous 2x limits. That is, they are basically +20% and -50% limits, so it is overriding most effects the 2x limits had. I confirmed this by testing. This is not how you want to limit difficulty changes. There should not be any limits except for extreme circumstances, otherwise you could have used a larger N to get the same benefits with fewer problems. The +5% limit would have enforced a 0.2% rise per block during hash attack attacks, if the big error in the last line of this code were not in place. That error prevents this error from causing a problem. Start up is like a hash attack and it takes Digishield 500 blocks to reach the correct difficulty with a 16% limit where this one has 5%, so if it were not for the last line of code, it would have taken Orbitcoin 1500 blocks. See this for a detailed review of the problem.

Making it asymmetrical with +5% and -10% instead of +10% / -10% causes two other problems. The first is that avg solvetime will be a little faster than target. The bigger problem is that it invites oscillations. It's slow to rise, so big miners stay on longer. It ends up with a longer delay which allows it to drop faster, especially since the larger limit is allowing it to drop faster. The extreme case was the fantastic failure of BCH's initial asymmetrical EDA. A milder case is what I allowed to happen to BTG by not removing the +16% and -32% limits from the Digishield code when i made it faster. These limits made it slower and with bad oscillations.

However these problems I normally expect from +5% and -10% limits did not occur in Orbitcoin because the of the next error. The combined errors gives significantly better results than either error alone, but still terrible results.

/* Retarget */

bnNew.SetCompact(pindexPrev->nBits);

bnNew = bnNew * nActualTimespan / nTargetTimespan;

// ######## End OSS algorithm ###########

The above uses the previous target instead of doing like Digishield and using the avg of the previous difficulties. My testing indicates using the average of the past 20 instead of 1 or 5 is a LOT better. EMA is able to do this, but it's done in a very specific way.

The following code is what Digishield does, expressed to look like the OSS above. It's a lot simpler and gives a lot better results. I didn't include Digishield's limit because they are never activated except at start up where it causes a 500 block delay in coins reaching the correct difficulty. I've also removed the 6-block MTP delay.

nActualTimespan = timestamps[-1] - timestamps[-17];

nActualTimespan = ( nActualTimespan + 3*nTargetTimespan) / 4;

bnNew = Average_17_bnNew * nActualTimespan / nTargetTimespan;

The following compares OSS and this simplified version of Digishield/Zcash. Reminder: Digishield/Zcash is not as good as the Simple EMa and LWMA, especially with the MTP 6 that it normally has.

Constant hash rate OSS verses Digishield

Typical Hash attack OSS verses Digishield

Digishield scores a lot better because it does not accidentally go low as often (which invites a hash attack) These are hash attacks based on simulation of miner motivation: they begin if D drops 15% below average, and end when it is 20% above average. These results are robust for a wide range of start and stop points.

OSS worse WITHOUT the +5%/-10% limits

This shows the results of OSS are worse if the 5%/-10% were not in place.

Digishield worse WITH the +5% / -10% limits

Digishield worse when using previous bnNew instead of avg

Using OSS idea to improve Digishield

The following is a modified Digshield using the 5/20 idea from OSS. I used 5/17. It is pretty much the same as Digishield, except with 1/2 the delays. If I lower the 17 to 14, it is very competitive to LWMA. It has fewer delays with slightly more blocks stolen, at the same speed of response and stability.

Unified Equation for Difficulty Algorithms

next_D = avg(n Ds) *r / ( (r-1) + sum(n w*STs)/sum(n w's)/T )

r=1, w=1 is SMA

n=1, w=1 is EMA