This project uses the Flickr8k dataset because it is only about 1 GB, whereas MS-COCO is about 14 GB.

The code is written in Keras with the TensorFlow backend. InceptionV3 is used to extract the image features.

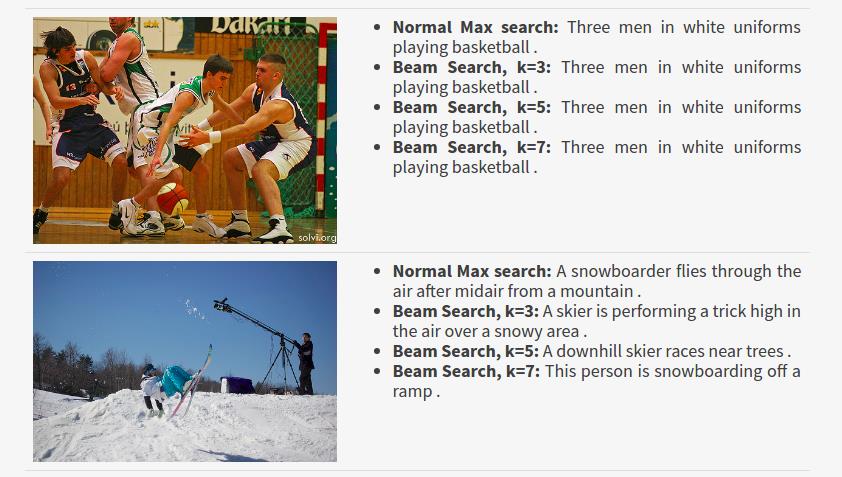

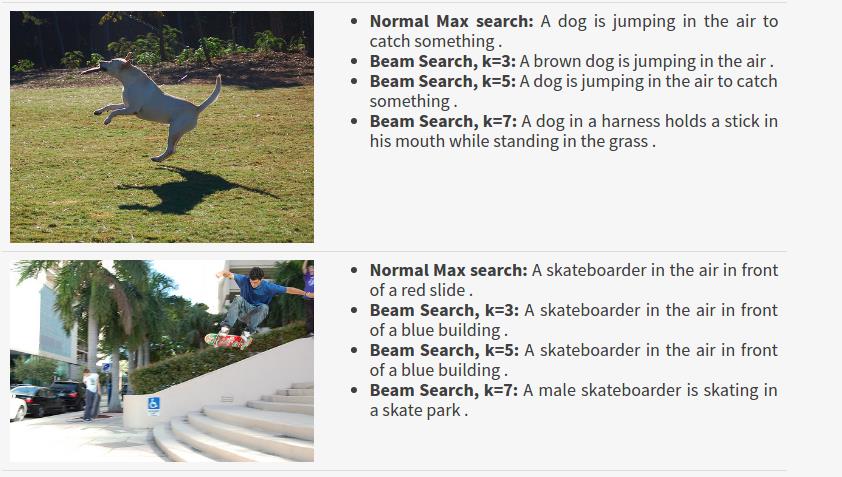

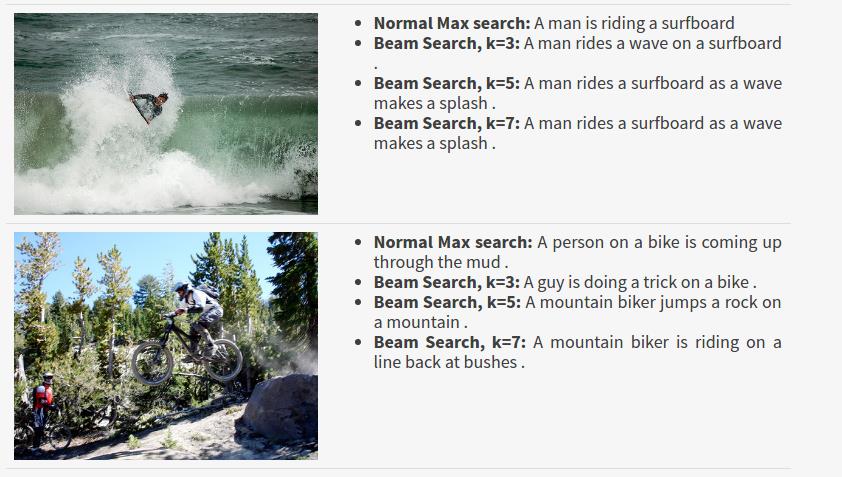

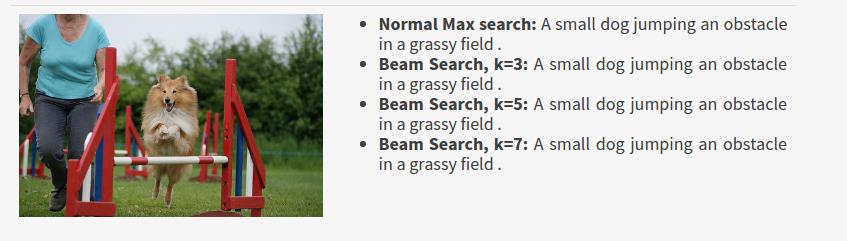

Captions are predicted with beam search (k = 3, 5, and 7) and with argmax (greedy) search.
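To make the difference between the two decoding strategies concrete, here is a minimal sketch of beam search, with argmax search expressed as the special case k = 1. It is not the notebook's implementation: `next_probs`, `toy_probs`, and the token ids are hypothetical stand-ins for the trained model's next-word distribution and vocabulary, and the scores are plain summed log-probabilities without length normalization.

```python
import math
from typing import Callable, List, Sequence, Tuple


def beam_search(
    next_probs: Callable[[List[int]], Sequence[float]],
    start_id: int,
    end_id: int,
    k: int = 3,
    max_len: int = 20,
) -> List[int]:
    """Return the highest-scoring token sequence found with beam width k."""
    # Each beam entry is (token ids so far, summed log-probability).
    beams: List[Tuple[List[int], float]] = [([start_id], 0.0)]
    for _ in range(max_len):
        candidates: List[Tuple[List[int], float]] = []
        for seq, score in beams:
            if seq[-1] == end_id:
                # Finished captions are carried over unchanged.
                candidates.append((seq, score))
                continue
            for token_id, p in enumerate(next_probs(seq)):
                if p > 0.0:
                    candidates.append((seq + [token_id], score + math.log(p)))
        # Keep only the k best partial captions.
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:k]
        if all(seq[-1] == end_id for seq, _ in beams):
            break
    return beams[0][0]


def argmax_search(next_probs, start_id, end_id, max_len=20):
    """Greedy decoding: always follow the single most likely next token."""
    return beam_search(next_probs, start_id, end_id, k=1, max_len=max_len)


def toy_probs(seq: List[int]) -> List[float]:
    """Hypothetical 4-token model: 0 = start, 1 = end, 2 = 'a', 3 = 'b'."""
    last = seq[-1]
    if last == 0:
        return [0.0, 0.0, 0.6, 0.4]
    if last == 2:
        return [0.0, 0.4, 0.3, 0.3]
    return [0.0, 0.9, 0.05, 0.05]


greedy_caption = argmax_search(toy_probs, start_id=0, end_id=1)  # [0, 2, 1]
beam_caption = beam_search(toy_probs, start_id=0, end_id=1, k=3)  # [0, 3, 1]
```

In this toy model the greedy path commits to token 2 first (probability 0.6) and ends with a total probability of 0.24, while the beam keeps token 3 alive and finds the sequence with probability 0.36. A wider beam explores more candidates at a higher cost per step.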

The model reaches a loss of 1.5987, which gives good results. Some example captions are shown in this README; the rest are in the Jupyter notebook, which you can run to try out your own examples. `unique.p` is a pickle file that contains all the unique words in the vocabulary.
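As a rough illustration of how a pickled vocabulary such as `unique.p` is typically used, the sketch below loads a list of words and builds word-to-index and index-to-word lookups. It assumes the pickle holds a flat collection of strings; the helper `build_vocab_maps`, the demo file name, and the sorted ordering are hypothetical, and the notebook may order the words differently.

```python
import os
import pickle
import tempfile


def build_vocab_maps(words):
    """Map each unique word to an integer index and back."""
    ordered = sorted(set(words))  # assumption: any fixed, repeatable order
    word_to_idx = {word: i for i, word in enumerate(ordered)}
    idx_to_word = {i: word for word, i in word_to_idx.items()}
    return word_to_idx, idx_to_word


# Stand-in for unique.p so the sketch runs without the real file.
demo_path = os.path.join(tempfile.mkdtemp(), "unique_demo.p")
with open(demo_path, "wb") as f:
    pickle.dump(["<start>", "a", "dog", "runs", "<end>"], f)

# Loading works the same way for the real pickle file.
with open(demo_path, "rb") as f:
    unique_words = pickle.load(f)

word_to_idx, idx_to_word = build_vocab_maps(unique_words)

# Encode a caption as indices, then decode it back to words.
caption = ["<start>", "a", "dog", "runs", "<end>"]
caption_ids = [word_to_idx[w] for w in caption]
decoded = [idx_to_word[i] for i in caption_ids]
```

Whatever ordering is chosen, it has to match the one used during training, otherwise the indices predicted by the saved weights will decode to the wrong words.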

Everything is implemented in the Jupyter notebook, which will hopefully make the code easier to understand.

I have also written a blog post describing my experience while implementing this project. You can find it here.

You can download the weights here.

Dependencies:

- Keras 1.2.2
- TensorFlow 0.12.1
- tqdm
- numpy
- pandas
- matplotlib
- pickle (Python standard library)
- PIL
- glob (Python standard library)

[1] M. Hodosh, P. Young and J. Hockenmaier (2013) "Framing Image Description as a Ranking Task: Data, Models and Evaluation Metrics", Journal of Artificial Intelligence Research, Volume 47, pages 853-899 http://www.jair.org/papers/paper3994.html

[2] O. Vinyals, A. Toshev, S. Bengio and D. Erhan (2015) "Show and Tell: A Neural Image Caption Generator", IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

[3] CS231n Winter 2016, Lecture 10: "Recurrent Neural Networks, Image Captioning and LSTM" https://youtu.be/cO0a0QYmFm8?t=32m25s