Verdaccio stands for peace, stop the war, we will be yellow / blue 🇺🇦 until that happens.

Looking for the Verdaccio 5 version? Check the `5.x` branch.

The plugins for `v5.x` that are hosted within this organization are located in the `verdaccio/monorepo` repository, while the plugins for the `next` version are hosted in this project under `/packages/plugins`. Keep in mind that `next` plugins will eventually be incompatible with `v5.x` versions. Note that contributing guidelines might differ depending on the branch.

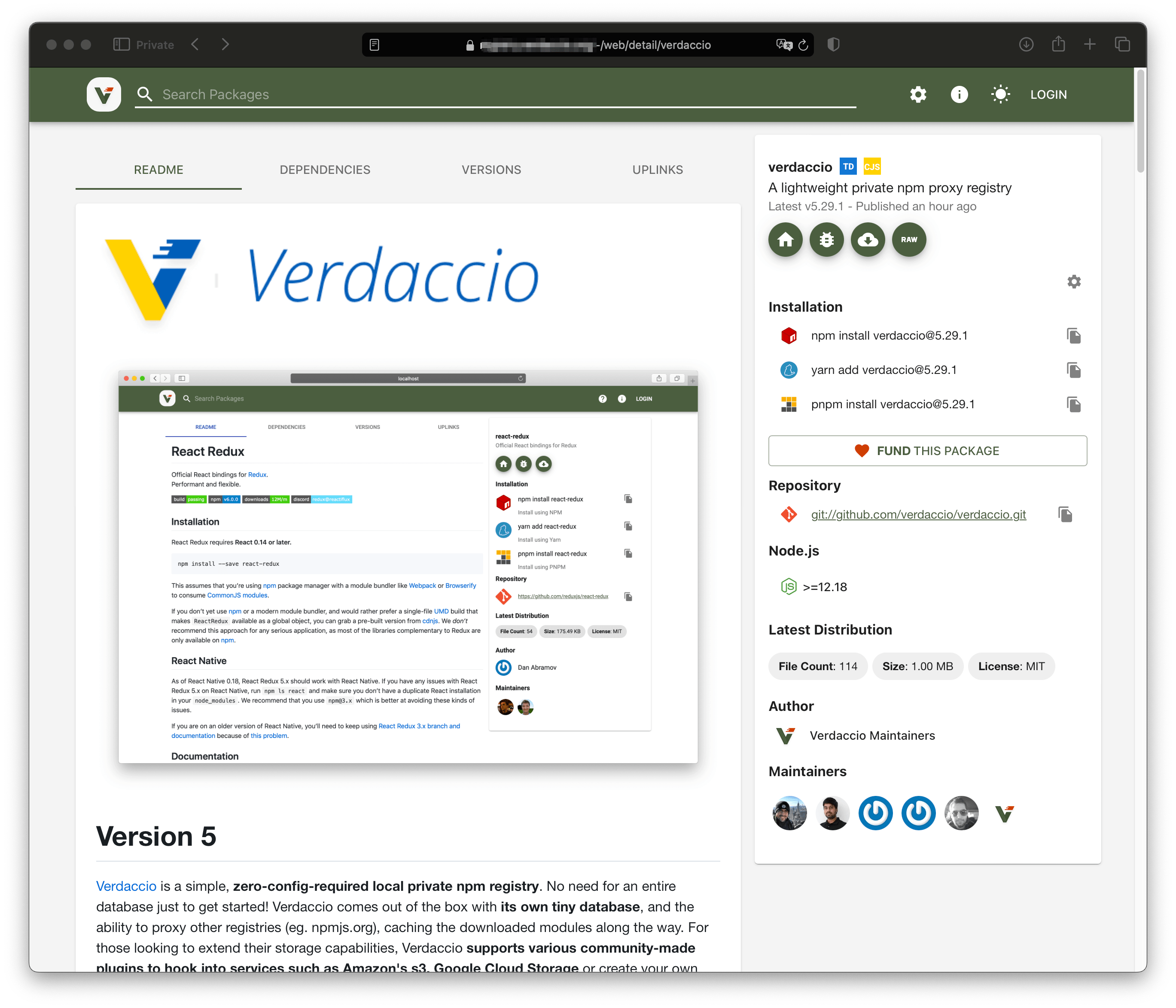

Verdaccio is a simple, zero-config-required local private npm registry. No need for an entire database just to get started! Verdaccio comes out of the box with its own tiny database and the ability to proxy other registries (e.g. npmjs.org), caching the downloaded modules along the way. For those looking to extend their storage capabilities, Verdaccio supports various community-made plugins to hook into services such as Amazon S3 or Google Cloud Storage, or you can create your own plugin.

The latest Node.js v16 is required.

Install with npm:

```bash
npm install -g verdaccio@next
```

With yarn:

```bash
yarn global add verdaccio@next
```

With pnpm:

```bash
pnpm i -g verdaccio@next
```

Or with Docker:

```bash
docker pull verdaccio/verdaccio:nightly-master
```

Or with the official Helm chart:

```bash
helm repo add verdaccio https://charts.verdaccio.org
helm repo update
# Helm 3 requires a release name; --generate-name lets Helm pick one
helm install verdaccio/verdaccio --generate-name
```

Furthermore, you can read the Debugging Guidelines and the Docker Examples for more advanced development.

You can develop your own plugins with the Verdaccio generator. Installing Yeoman is required.

```bash
npm install -g yo
npm install -g generator-verdaccio-plugin
```

Learn more here about how to develop plugins. Share your plugins with the community.

In our compatibility testing project, we're dedicated to ensuring that your favorite commands work seamlessly across different versions of npm, pnpm, and Yarn, from publishing packages to managing dependencies. Our goal is to give you the confidence to use your preferred package manager without any issues. So dive in, check out our matrix, and see how your commands fare across the board!

| cmd | npm6 | npm7 | npm8 | npm9 | npm10 | pnpm8 | pnpm9 (beta) | yarn1 | yarn2 | yarn3 | yarn4 |

|---|---|---|---|---|---|---|---|---|---|---|---|

| publish | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

| info | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

| audit | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ❌ |

| install | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

| deprecate | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ⛔ | ⛔ | ⛔ | ⛔ |

| ping | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ⛔ | ⛔ | ⛔ | ⛔ |

| search | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ⛔ | ⛔ | ⛔ | ⛔ |

| star | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ⛔ | ⛔ | ⛔ | ⛔ |

| stars | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ⛔ | ⛔ | ⛔ | ⛔ |

| dist-tag | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ❌ | ❌ | ❌ |

Verdaccio is run by volunteers; nobody is working full-time on it. If you find this project useful and would like to support its development, consider making a long-term donation, and your logo will appear in this section of the README.

Donate 💵👍🏻 starting from $1/month, or make a single one-time contribution.

Use Verdaccio if you want all the benefits of the npm package system in your company without sending all your code to the public, and want to use your private packages as easily as public ones.

If you have more than one server you want to install packages on, you might want to use this to decrease latency (presumably "slow" npmjs.org will be connected to only once per package/version) and provide limited failover (if npmjs.org is down, we might still find something useful in the cache) or avoid issues like How one developer just broke Node, Babel and thousands of projects in 11 lines of JavaScript, Many packages suddenly disappeared or Registry returns 404 for a package I have installed before.

If you use multiple registries in your organization and need to fetch packages from multiple sources in a single project, you can take advantage of Verdaccio's uplinks feature, chaining multiple registries and fetching from a single endpoint.
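As a rough sketch (not a complete configuration), the snippet below writes `uplinks` and `packages` sections that you could merge into your Verdaccio `config.yaml`. The second uplink `corp`, its URL `https://npm.example.internal/`, the `@corp` scope, and the output file name `uplinks-example.yaml` are hypothetical placeholders; substitute your own registries.

```bash
# Write the example to a separate file so an existing config.yaml is not overwritten.
# "corp", its URL and the @corp scope are hypothetical placeholders.
cat > uplinks-example.yaml <<'EOF'
uplinks:
  npmjs:
    url: https://registry.npmjs.org/
  corp:
    url: https://npm.example.internal/
packages:
  # Scoped packages are resolved through the internal registry
  '@corp/*':
    access: $all
    publish: $authenticated
    proxy: corp
  # Everything else is proxied (and cached) from npmjs.org
  '**':
    access: $all
    publish: $authenticated
    proxy: npmjs
EOF
```

Clients then talk only to Verdaccio, which picks the uplink per package pattern. Package patterns are matched in the order they are defined, so keep specific patterns such as `'@corp/*'` before the catch-all `'**'`.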

If you want to use a modified version of some 3rd-party package (for example, you found a bug, but the maintainer hasn't accepted your pull request yet), you can publish your version locally under the same name. See the details here.
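One way to do this, sketched below, is to give the forked package its own entry in `config.yaml` without a `proxy` line, so Verdaccio serves only the versions you publish locally instead of fetching them from an uplink. The package name `lodash` and the file name `fork-example.yaml` are purely hypothetical examples, and the sketch assumes an uplink named `npmjs` is defined, as in Verdaccio's default configuration.

```bash
# Hypothetical example: serve a locally published fork of "lodash" instead of the public one.
# Written to a separate file; merge the entries into your own config.yaml.
cat > fork-example.yaml <<'EOF'
packages:
  # No proxy for this package: only locally published versions are served
  'lodash':
    access: $all
    publish: $authenticated
  # Everything else is still proxied from the npmjs uplink
  '**':
    access: $all
    publish: $authenticated
    proxy: npmjs
EOF
```

After restarting Verdaccio with the updated configuration, publish your fork with `npm publish --registry http://localhost:4873` from its directory. Because the fork's entry comes before `'**'`, it takes precedence for that package name.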

Verdaccio has proven to be a lightweight registry that can be booted in a couple of seconds, fast enough for any CI. Many open source projects use Verdaccio for end-to-end testing, for example create-react-app, Mozilla Neutrino, pnpm, Storybook, Babel.js, Angular CLI, and Docusaurus. You can read more here.

Furthermore, here are a few examples of how to get started:

Node 2022, February 2022, Online Free

You might also want to check out our previous talks:

- Using Docker and Verdaccio to make Integration Testing Easy - Docker All Hands #4 December - 2021

- Juan Picado – Testing the integrity of React components by publishing in a private registry - React Finland - 2021

- BeerJS Cba Meetup No. 53 May 2021 - Juan Picado

- Node.js Dependency Confusion Attacks - April 2021 - Juan Picado

- OpenJS World 2020 about *Cover your Projects with a Multi purpose Lightweight Node.js Registry* - Juan Picado

- ViennaJS Meetup - Introduction to Verdaccio by Priscila Oliveira and Juan Picado

- Open Source? trivago - Verdaccio (Ayush and Juan Picado) January 2020

- GitNation Open Source Stage - How we have built a Node.js Registry with React - Juan Picado December 2019

- Verdaccio - A lightweight Private Proxy Registry built in Node.js | Juan Picado at The Destro Dev Show

Run in your terminal:

```bash
verdaccio
```

You may need to set some npm configuration; this is optional:

```bash
npm set registry http://localhost:4873/
```

For one-off commands, or to avoid setting the registry globally:

```bash
NPM_CONFIG_REGISTRY=http://localhost:4873 npm i
```

Now you can navigate to http://localhost:4873/, where your local packages will be listed and can be searched.
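Another option that avoids changing your global npm configuration is a project-level `.npmrc` file in the project root (next to `package.json`), which npm reads when you run commands in that project. A minimal sketch, assuming Verdaccio is listening on the default `http://localhost:4873/`:

```bash
# Append to (or create) the project-level .npmrc so npm commands
# run in this project use the local Verdaccio instance.
echo "registry=http://localhost:4873/" >> .npmrc
```

To send only one scope to Verdaccio and keep the default registry for everything else, use a scoped entry such as `@myorg:registry=http://localhost:4873/` instead, where `@myorg` is a placeholder for your own scope.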

Warning: Verdaccio does not currently support PM2's cluster mode; running it in cluster mode may cause unexpected behavior.

Create a user. This will prompt you for user credentials, which will be saved on the Verdaccio server:

```bash
npm adduser --registry http://localhost:4873
```

If you use HTTPS, add the appropriate CA information (`null` means get the CA list from the OS):

```bash
npm set ca null
```

Publish a package:

```bash
npm publish --registry http://localhost:4873
```

Below is the most commonly needed information; every aspect of Docker and Verdaccio is documented separately.

```bash
docker pull verdaccio/verdaccio:nightly-master
```

Available as tags.

To run the Docker container:

```bash
docker run -it --rm --name verdaccio -p 4873:4873 verdaccio/verdaccio
```

Docker examples are available in this repository.

Verdaccio aims to support all features of a standard npm client that make sense to support in a private repository. Unfortunately, it isn't always possible.

- Installing packages (`npm install`, `npm update`, etc.) - supported
- Publishing packages (`npm publish`) - supported
- Unpublishing packages (`npm unpublish`) - supported
- Tagging (`npm dist-tag`) - supported
- Deprecation (`npm deprecate`) - supported
- Registering new users (`npm adduser {newuser}`) - supported
- Change password (`npm profile set password`) - supported
- Transferring ownership (`npm owner`) - supported
- Token (`npm token`) - supported
- Searching (`npm search`) - supported (CLI / browser)
- Ping (`npm ping`) - supported
- Starring (`npm star`, `npm unstar`, `npm stars`) - supported
- Audit (`npm audit` / `yarn audit`) - supported

If you want to report a security vulnerability, please follow the steps which we have defined for you in our security policy.

Thanks to the following companies for helping us achieve our goals by providing free open source licenses. Every company provides enough resources to move this project forward.

| Juan Picado | Ayush Sharma | Sergio Hg |
|---|---|---|
| @jotadeveloper | @ayusharma_ | @sergiohgz |
| Priscila Oliveira | Daniel Ruf | |
| @priscilawebdev | @DanielRufde | |

You can find and chat with them on Discord (click here), or follow them on Twitter.

- create-react-app (+86.2k ⭐️)

- Grafana (+54.9k ⭐️)

- Gatsby (+49.2k ⭐️)

- Babel.js (+38.5k ⭐️)

- Docusaurus (+34k ⭐️)

- Vue CLI (+27.4k ⭐️)

- Angular CLI (+24.3k ⭐️)

- Uppy (+23.8k ⭐️)

- bit (+13k ⭐️)

- Aurelia Framework (+11.6k ⭐️)

- pnpm (+10.1k ⭐️)

- ethereum/web3.js (+9.8k ⭐️)

- Webiny CMS (+6.6k ⭐️)

- NX (+6.1k ⭐️)

- Mozilla Neutrino (+3.7k ⭐️)

- workshopper how to npm (+1k ⭐️)

- Amazon SDK v3

- Amazon Encryption SDK for Javascript

🤓 Don't be shy, add yourself to this readme.

Support this project by becoming a sponsor. Your logo will show up here with a link to your website. [Become a sponsor]

Thank you to all our backers! 🙏 [Become a backer]

This project exists thanks to all the people who contribute. [Contribute].

If you have any issues, you can try the following options. Do not hesitate to ask, or check our issues database; perhaps someone has already asked about what you are looking for.

Verdaccio is MIT licensed

The Verdaccio documentation and logos (excluding /thanks, e.g., .md, .png, .sketch files within the /assets folder) are Creative Commons licensed.