Tokay is a programming language designed for ad-hoc parsing.

Tokay is under development and not yet considered ready for production use. Be part of Tokay's ongoing development, and contribute!

Tokay is a programming language for quickly implementing solutions to text processing problems. These range from simple data extraction to parsing entire structures or parts of them, and turning the information into structured parse trees or abstract syntax trees for further processing.

Tokay is therefore both a tool and a language for simple one-liners, but it can also be used to implement code analyzers, refactoring tools, interpreters, compilers or transpilers. In fact, Tokay's own language parser is implemented in Tokay itself.

Tokay is inspired by awk and has syntactic and semantic flavours of Python and Rust, but it also follows its own philosophy, ideas and design principles. It is therefore not directly comparable to other languages or projects, and is a language in its own right.

Tokay is still a very young project with a lot of potential. Volunteers are welcome!

- Interpreted, procedural and imperative scripting language

- Concise and easy to learn syntax and object system

- Stream-based input processing

- Automatic parse tree construction and synthesis

- Left-recursive parsing structures ("parselets") supported

- Implements a memoizing packrat parsing algorithm internally

- Robust and fast, as it is written entirely in safe Rust

- Enabling awk-style one-liners in combination with other tools

- Generic parselets and functions

- Import system to create modularized programs (*coming soon)

- Embedded interoperability with other programs (*coming soon)

Using Rust's dependency manager and build tool cargo, simply install Tokay with

```bash
$ cargo install tokay
```

For Arch Linux-based distros, there are also tokay and tokay-git packages in the Arch Linux AUR.

Tokay's version of "Hello World" is quite obvious.

```tokay
print("Hello World")
```

```bash
$ tokay 'print("Hello World")'
Hello World
```

Tokay can also greet any wor(l)ds that are fed to it. The next program prints "Hello Venus", "Hello Earth", or "Hello" followed by any other name parsed by the built-in Word token. Any input other than a word is automatically skipped.

```tokay
print("Hello", Word)
```

```bash
$ tokay 'print("Hello", Word)' -- "World 1337 Venus Mars 42 Max"
Hello World
Hello Venus
Hello Mars
Hello Max
```

A simple program that counts words consisting of at least three characters and prints the total can be implemented like this:

```tokay
Word(min=3) words += 1
end print(words)
```

```bash
$ tokay "Word(min=3) words += 1; end print(words)" -- "this is just the 1st stage of 42.5 or .1 others"
5
```
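Tokay itself is written in Rust, so a rough Rust analogue may help readers compare the stream-based approach with hand-written scanning. The function `words` below is hypothetical: it assumes, consistent with both sample outputs above, that Word matches maximal runs of alphabetic characters and that `min` filters those runs by length. Tokay's actual Word token may differ in detail.

```rust
/// Hypothetical approximation of the Word token for the examples above:
/// split the input into maximal runs of alphabetic characters and keep
/// only runs with at least `min` characters. Digits, dots and spaces are skipped.
fn words(input: &str, min: usize) -> Vec<&str> {
    let mut result = Vec::new();
    let mut start: Option<usize> = None;
    // Append a non-alphabetic sentinel so that a trailing run is flushed too.
    for (i, c) in input.char_indices().chain(std::iter::once((input.len(), ' '))) {
        match (c.is_alphabetic(), start) {
            (true, None) => start = Some(i), // a new run begins
            (false, Some(s)) => {
                // The current run ends here; keep it if it is long enough.
                let word = &input[s..i];
                if word.chars().count() >= min {
                    result.push(word);
                }
                start = None;
            }
            _ => {} // inside a run, or between runs
        }
    }
    result
}

fn main() {
    // Like: tokay 'print("Hello", Word)' -- "World 1337 Venus Mars 42 Max"
    for w in words("World 1337 Venus Mars 42 Max", 1) {
        println!("Hello {}", w);
    }
    // Like: tokay "Word(min=3) words += 1; end print(words)" -- "..."
    println!("{}", words("this is just the 1st stage of 42.5 or .1 others", 3).len());
}
```

Run as a program, it prints the four greetings from the first example, followed by `5`. Note that "1st" contributes the two-letter run "st", which the `min` filter drops.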

The next, extended version of the program above counts all words and numbers.

```tokay
Word words += 1
Number numbers += 1
end print(words || 0, "words,", numbers || 0, "numbers")
```

```bash
$ tokay 'Word words += 1; Number numbers += 1; end print(words || 0, "words,", numbers || 0, "numbers")' -- "this is just the 1st stage of 42.5 or .1 others"
9 words, 3 numbers
```
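The interplay of Word and Number on the same stream can also be approximated in Rust. The scanner below is a hypothetical sketch, not Tokay's definition of these tokens: it assumes, consistent with the sample output, that a word is a run of alphabetic characters and that a number is a run of digits with an optional fractional part, or a fraction written like ".1". Under these assumptions "1st" yields the number 1 followed by the word "st", which matches the 9 words and 3 numbers reported above.

```rust
/// Hypothetical scanner approximating Word and Number from the example:
/// a word is a run of alphabetic characters; a number is a run of digits,
/// optionally followed by '.' and more digits, or a '.' directly followed
/// by digits (as in ".1"). Everything else is skipped.
fn count_words_and_numbers(input: &str) -> (usize, usize) {
    let chars: Vec<char> = input.chars().collect();
    let is_digit_at = |j: usize| j < chars.len() && chars[j].is_ascii_digit();
    let (mut words, mut numbers) = (0, 0);
    let mut i = 0;

    while i < chars.len() {
        if chars[i].is_alphabetic() {
            // Consume the whole alphabetic run as one word.
            while i < chars.len() && chars[i].is_alphabetic() {
                i += 1;
            }
            words += 1;
        } else if is_digit_at(i) || (chars[i] == '.' && is_digit_at(i + 1)) {
            // Integer part (empty for inputs like ".1").
            while is_digit_at(i) {
                i += 1;
            }
            // Optional fractional part.
            if i < chars.len() && chars[i] == '.' && is_digit_at(i + 1) {
                i += 1;
                while is_digit_at(i) {
                    i += 1;
                }
            }
            numbers += 1;
        } else {
            i += 1; // neither a word nor a number: skip it
        }
    }
    (words, numbers)
}

fn main() {
    let (words, numbers) =
        count_words_and_numbers("this is just the 1st stage of 42.5 or .1 others");
    println!("{} words, {} numbers", words, numbers); // 9 words, 3 numbers
}
```

Unlike the Tokay program, the Rust counters start at zero, so no `|| 0` fallback is needed for inputs without any words or numbers.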

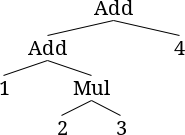

By design, Tokay constructs syntax trees from consumed information automatically.

The next program directly implements a parser and interpreter for simple mathematical expressions, like 1 + 2 + 3 or 7 * (8 + 2) / 5. The result of each expression is printed afterwards. Processing direct and indirect left-recursions without ending in infinite loops is one of Tokay's core features.

```tokay
_ : Char< \t>+            # redefine whitespace to just tab and space

Factor : @{
    Int _                 # built-in 64-bit signed integer token
    '(' _ Expr ')' _
}

Term : @{
    Term '*' _ Factor     $1 * $4
    Term '/' _ Factor     $1 / $4
    Factor
}

Expr : @{
    Expr '+' _ Term       $1 + $4
    Expr '-' _ Term       $1 - $4
    Term
}

Expr _ print("= " + $1)   # gives some neat result output
```

```bash
$ tokay examples/expr_from_readme.tok
1 + 2 + 3
= 6
7 * (8 + 2) / 5
= 14
7*(3-9)
= -42
...
```
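To illustrate why left-recursive rules such as `Expr : Expr '+' _ Term` need not loop forever, here is a Rust sketch of one well-known technique: memoized packrat parsing with "seed growing", as described by Warth et al. This is an illustration under assumptions, not Tokay's implementation: it handles only direct left recursion, uses 64-bit integer arithmetic with integer division, and the names `Rule`, `Parser` and `eval` are hypothetical. The memo entry for a rule at a position starts as a failure; the rule body is then re-run, each time reusing the previous result for the left-recursive call, until a pass no longer consumes more input.

```rust
use std::collections::HashMap;

// Hypothetical rule names for the two left-recursive rules of the grammar.
#[derive(Clone, Copy, PartialEq, Eq, Hash)]
enum Rule { Expr, Term }

// A parse result: Some((value, position after the match)), or None on failure.
type Res = Option<(i64, usize)>;

struct Parser<'a> {
    src: &'a [u8],
    memo: HashMap<(Rule, usize), Res>, // packrat memo table keyed by (rule, position)
}

impl<'a> Parser<'a> {
    /// Skip spaces and tabs, like the redefined `_` in the Tokay program.
    fn ws(&self, pos: usize) -> usize {
        pos + self.src[pos..].iter().take_while(|&&b| b == b' ' || b == b'\t').count()
    }

    /// Match one character, then skip trailing whitespace.
    fn lit(&self, pos: usize, c: u8) -> Option<usize> {
        if self.src.get(pos) == Some(&c) { Some(self.ws(pos + 1)) } else { None }
    }

    /// Factor : Int _ | '(' _ Expr ')' _
    fn factor(&mut self, pos: usize) -> Res {
        let end = pos + self.src[pos..].iter().take_while(|b| b.is_ascii_digit()).count();
        if end > pos {
            let text = std::str::from_utf8(&self.src[pos..end]).ok()?;
            return Some((text.parse::<i64>().ok()?, self.ws(end)));
        }
        let p = self.lit(pos, b'(')?;
        let (value, p) = self.rule(Rule::Expr, p)?;
        Some((value, self.lit(p, b')')?))
    }

    /// The operand of a rule: Term for Expr, Factor for Term.
    fn operand(&mut self, rule: Rule, pos: usize) -> Res {
        match rule {
            Rule::Expr => self.rule(Rule::Term, pos),
            Rule::Term => self.factor(pos),
        }
    }

    /// One pass over a rule's alternatives; the left-recursive call to the
    /// same rule at the same position is answered from the memo table.
    fn body(&mut self, rule: Rule, pos: usize) -> Res {
        let ops: &[u8] = if rule == Rule::Expr { b"+-" } else { b"*/" };
        if let Some((lhs, p)) = self.rule(rule, pos) {
            for &op in ops {
                let Some(p) = self.lit(p, op) else { continue };
                let Some((rhs, p)) = self.operand(rule, p) else { continue };
                let value = match op {
                    b'+' => lhs.checked_add(rhs),
                    b'-' => lhs.checked_sub(rhs),
                    b'*' => lhs.checked_mul(rhs),
                    _ => lhs.checked_div(rhs), // None on division by zero
                };
                if let Some(value) = value {
                    return Some((value, p));
                }
            }
        }
        self.operand(rule, pos) // the non-recursive alternative
    }

    /// Memoized rule application with seed growing: seed the memo with a
    /// failure, then re-run the body while each pass consumes more input.
    fn rule(&mut self, rule: Rule, pos: usize) -> Res {
        if let Some(&cached) = self.memo.get(&(rule, pos)) { return cached; }
        self.memo.insert((rule, pos), None);
        loop {
            let prev_end = self.memo[&(rule, pos)].map(|(_, end)| end);
            match (self.body(rule, pos), prev_end) {
                (None, _) => break,
                (Some((_, end)), Some(prev)) if end <= prev => break,
                (result, _) => { self.memo.insert((rule, pos), result); }
            }
        }
        self.memo[&(rule, pos)]
    }
}

/// Evaluate one line; None unless the whole line is a valid expression.
fn eval(line: &str) -> Option<i64> {
    let mut parser = Parser { src: line.as_bytes(), memo: HashMap::new() };
    let start = parser.ws(0);
    let (value, end) = parser.rule(Rule::Expr, start)?;
    if end == line.len() { Some(value) } else { None }
}

fn main() {
    for line in ["1 + 2 + 3", "7 * (8 + 2) / 5", "7*(3-9)"] {
        println!("= {}", eval(line).expect("syntax error"));
    }
}
```

For the three inputs from the session above, this prints `= 6`, `= 14` and `= -42`. Because each pass extends the previous result on the left, `10 - 3 - 2` evaluates left-associatively to 5.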

Tokay can also be used for programs without any parsing features.

Next is a recursive approach to calculating the factorial of an integer.

```tokay
factorial : @x {
    if !x return 1
    x * factorial(x - 1)
}

factorial(4)
```

```bash
$ tokay examples/factorial.tok
24
```

This version of the program above calculates the factorial of every number token matched in the input. Only the invocation differs: it uses the Number token, converted with int().

```tokay
factorial : @x {
    if !x return 1
    x * factorial(x - 1)
}

print(factorial(int(Number)))
```

```bash
$ tokay examples/factorial2.tok -- "5 6 ignored 7 other 14 yeah"
120
720
5040
87178291200
$ tokay examples/factorial2.tok
5
120
6
720
ignored 7
5040
other 14
87178291200
...
```
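For comparison, the same recursion in Rust. This is a sketch with two simplifications of its own: whitespace-separated fields that parse as unsigned integers stand in for the Number token (so a field such as "1st" is ignored entirely), and checked multiplication turns `u64` overflow into `None`. How Tokay itself handles overflow is not stated here.

```rust
/// Recursive factorial mirroring the Tokay version: 0 yields 1, otherwise
/// x * factorial(x - 1). Returns None if the result overflows u64.
fn factorial(x: u64) -> Option<u64> {
    if x == 0 {
        return Some(1);
    }
    x.checked_mul(factorial(x - 1)?)
}

fn main() {
    let input = "5 6 ignored 7 other 14 yeah";
    // Simplified stand-in for Number: fields that parse as integers;
    // every other field is skipped.
    for n in input.split_whitespace().filter_map(|t| t.parse::<u64>().ok()) {
        match factorial(n) {
            Some(f) => println!("{}", f),
            None => println!("{}! overflows u64", n),
        }
    }
}
```

With the input from the example, this prints 120, 720, 5040 and 87178291200. Since 21! exceeds `u64::MAX`, `factorial(21)` returns `None` in this sketch.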

The Tokay homepage tokay.dev provides links to a quick start and documentation. The documentation source code is maintained in a separate repository.

The Tokay programming language is named after the Tokay gecko (Gekko gecko) from Asia, which shouts out "token" in the night.

The Tokay logo and icon were kindly designed by Timmytiefkuehl.

Check out the tokay-artwork repository for different versions of the logo as well.

Copyright © 2024 by Jan Max Meyer, Phorward Software Technologies.

Tokay is free software under the MIT license.

Please see the LICENSE file for details.