The following tutorials demonstrate features of StreamSets Data Collector, StreamSets Transformer, StreamSets Control Hub and StreamSets SDK For Python.

-

Log Shipping to Elasticsearch - Read weblog files from a local filesystem directory, decorate some of the fields (e.g. GeoIP Lookup), and write them to Elasticsearch.

-

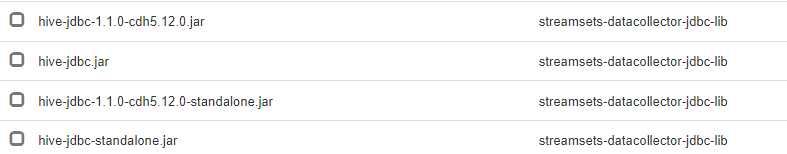

What’s the Biggest Lot in the City of San Francisco? - Read city lot data from JSON, calculate lot areas in JavaScript, and write them to Hive.

-

Ingesting Local Data into Azure Data Lake Store - Read records from a local CSV-formatted file, mask out PII (credit card numbers) and send them to a JSON-formatted file in Azure Data Lake Store.

-

Working with StreamSets Data Collector and Microsoft Azure - Integrate Azure Blob Storage, Apache Kafka on HDInsight, Azure SQL Data Warehouse and Apache Hive backed by Azure Blob Storage.

-

Creating a Custom StreamSets Origin - Build a simple custom origin that reads a Git repository's commit log and produces the corresponding records.

-

Creating a Custom Multithreaded StreamSets Origin - A more advanced tutorial focusing on building an origin that supports parallel execution, so the pipeline can run in multiple threads.

-

Creating a Custom StreamSets Processor - Build a simple custom processor that reads metadata tags from image files and writes them to the records as fields.

-

Creating a Custom StreamSets Destination - Build a simple custom destination that writes batches of records to a webhook.

We have a DataCollector API Java Docs to share in case of need, please reach out to us if you need them.

-

Ingesting Drifting Data into Hive and Impala - Build a pipeline that handles schema changes in MySQL, creating and altering Hive tables accordingly.

-

Creating a StreamSets Spark Transformer in Java - Build a simple Java Spark Transformer that computes a credit card's issuing network from its number.

-

Creating a StreamSets Spark Transformer in Scala - Build a simple Scala Spark Transformer that computes a credit card's issuing network from its number.

-

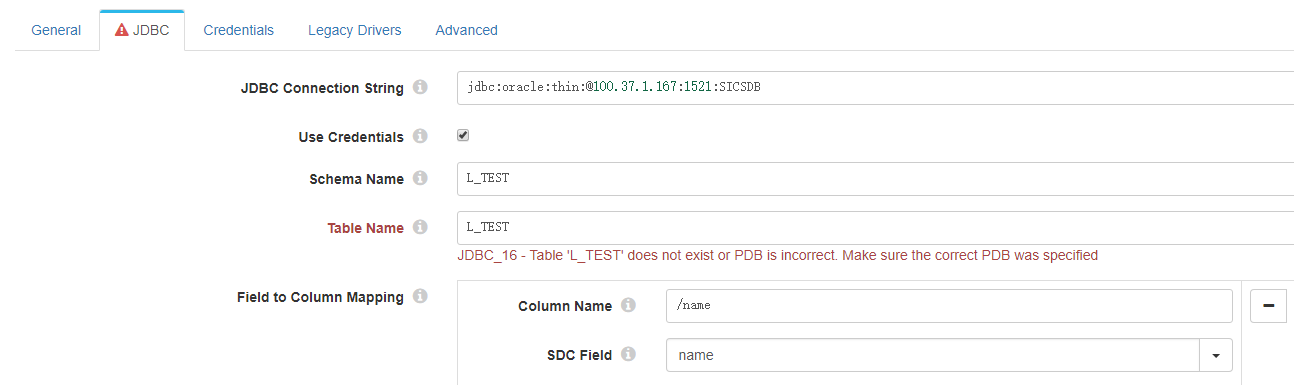

Creating a CRUD Microservice Pipeline - Build a microservice pipeline to implement a RESTful web service that reads from and writes to a database via JDBC.

The Data Collector documentation also includes an extended tutorial that walks through basic Data Collector functionality, including creating, previewing and running a pipeline, and creating alerts.

- Kubernetes-based Deployment - Example configurations for Kubernetes-based deployments of StreamSets Data Collector.

- Creating Custom Data Protector Procedure - Create, build and deploy your own custom data protector procedure that you can use as protection method to apply to record fields.

-

Creating a Custom Processor for StreamSets Transformer - Create a simple custom processor, using Java and Scala, that will compute the type of a credit card from its number, and configure Transformer to use it.

-

Creating a Custom Scala Project For StreamSets Transformer - This tutorial explains how to create a custom Scala project and import the compiled jar into StreamSets Transformer.

- Find SDK methods and fields of an object available - Object examples can be instances of a pipeline or SCH job or a stage under the pipeline.

-

Getting started with StreamSets SDK for Python - Design and publish a pipeline. Then create, start, and stop a job using StreamSets SDK for Python.

-

-

Sample ways to fetch one or more jobs - Sample ways to fetch one or more jobs.

-

Start a job and monitor that specific job - Start a job and monitor that specific job using metrics and time series metrics.

-

Move jobs from dev to prod using data_collector_labels - Move jobs from dev to prod by updating data_collector label.

-

Generate a report for a specific job - Generate a report for a specific job and then; fetch and download it.

-

See logs for a data-collector where a job is running - Get the DataCollector where a job is running and then see its logs.

-

-

-

Common pipeline methods - Common operations for StreamSets Control Hub pipelines like update, duplicate , import, export.

-

Loop over pipelines and stages and make an edit to stages - When there are many pipelines and stages that need an update, SDK for Python makes it easy to update them with just a few lines of code.

-

Create CI CD pipeline used in demo - This covers the steps to create CI CD pipeline as used in the SCH CI CD demo. The steps include how to add stages like JDBC, some processors and Kineticsearch; and how to set stage configurations. Also shows, the use of runtime parameters.

-

StreamSets Data Collector and its tutorials are built on open source technologies; the tutorials and accompanying code are licensed with the Apache License 2.0.

We welcome contributors! Please check out our guidelines to get started.