
QuestDB is an open-source time-series database for high-throughput ingestion and fast SQL queries with operational simplicity.

QuestDB is well-suited for financial market data, IoT sensor data, ad-tech and real-time dashboards. It shines for datasets with high cardinality and is a drop-in replacement for InfluxDB via support for the InfluxDB Line Protocol.

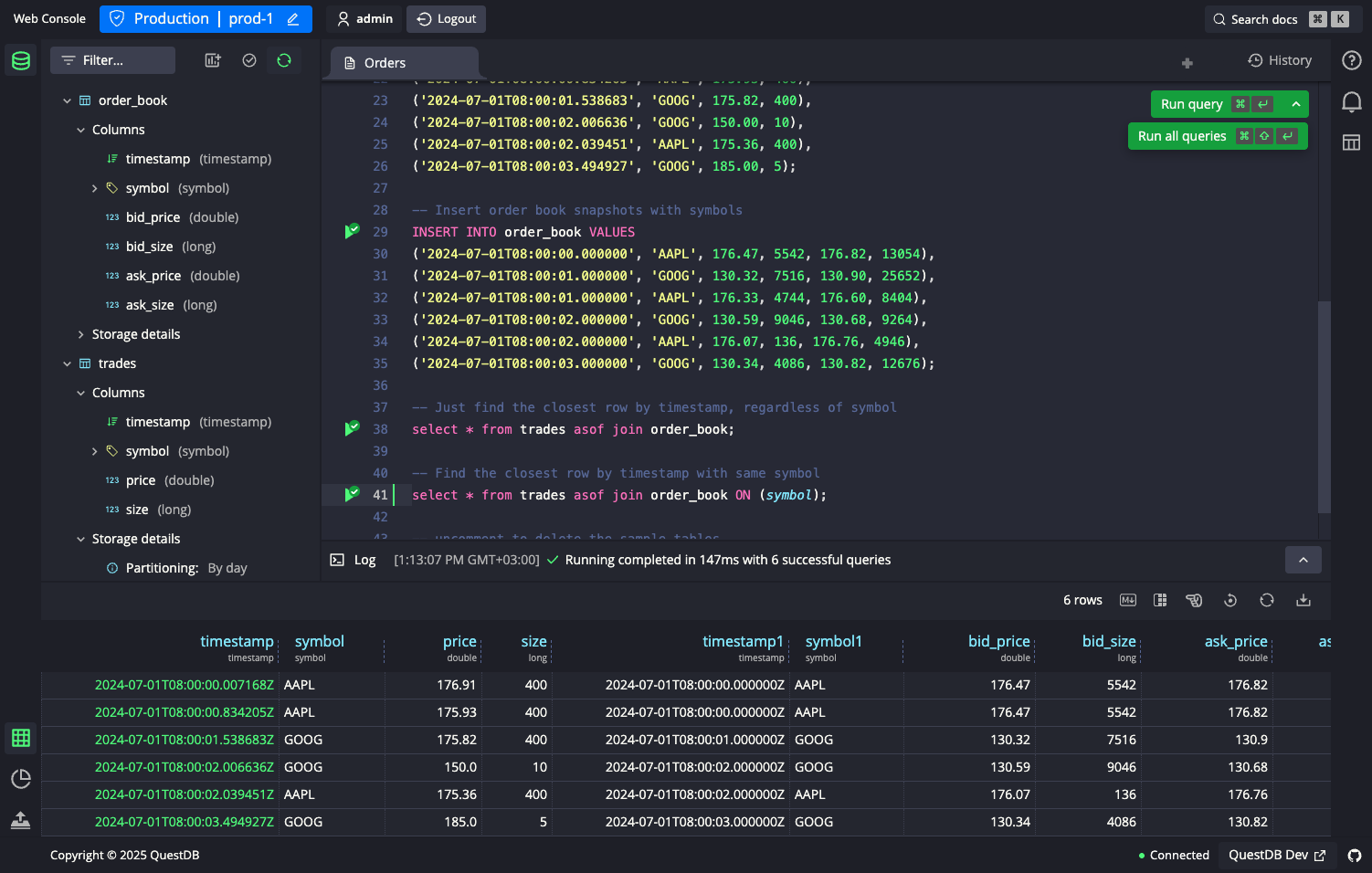

QuestDB implements ANSI SQL with native time-series SQL extensions. These SQL extensions make it simple to filter and downsample data, or correlate data from multiple sources using relational and time-series joins.
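To make two of these extensions concrete, the plain-Python sketch below mimics what they compute: downsampling with `SAMPLE BY` plus `avg()`, and the keyless form of an as-of join (`ASOF JOIN`), which pairs each row with the most recent row of another series whose timestamp is at or before its own. It only illustrates the result, not how QuestDB executes the queries. The function names are hypothetical, timestamps are plain integers in seconds, and buckets are aligned to multiples of the bucket size from the epoch, which is an assumption (QuestDB's `SAMPLE BY` has its own alignment options).

```python
from bisect import bisect_right
from collections import defaultdict


def sample_by_avg(rows, bucket_seconds):
    """Group (ts, value) rows into fixed-width time buckets and average each one.

    Hypothetical helper: roughly what `SELECT ts, avg(v) ... SAMPLE BY <n>` returns.
    Empty buckets are omitted, since no FILL strategy is applied.
    """
    buckets = defaultdict(list)
    for ts, value in rows:
        # Align each timestamp to the start of its bucket (epoch-aligned assumption).
        buckets[ts - ts % bucket_seconds].append(value)
    return [(start, sum(vals) / len(vals)) for start, vals in sorted(buckets.items())]


def asof_join(left, right):
    """For each (ts, value) in `left`, attach the latest `right` value with timestamp <= ts.

    Hypothetical helper mirroring a keyless as-of join. `right` must be sorted by
    timestamp; rows with no earlier match get None.
    """
    right_ts = [ts for ts, _ in right]
    joined = []
    for ts, value in left:
        i = bisect_right(right_ts, ts)  # number of right rows with timestamp <= ts
        joined.append((ts, value, right[i - 1][1] if i else None))
    return joined
```

For example, `sample_by_avg([(0, 1.0), (1800, 3.0), (3600, 5.0), (7300, 7.0)], 3600)` yields one averaged point per hour: `[(0, 2.0), (3600, 5.0), (7200, 7.0)]`.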

We achieve high performance by adopting a column-oriented storage model, parallelized vector execution, SIMD instructions, and low-latency techniques. The entire codebase is built from the ground up in Java, C++ and Rust with no dependencies and zero garbage collection.

QuestDB supports schema-agnostic streaming ingestion using the InfluxDB Line Protocol, and a REST API for bulk imports and exports. The QuestDB SQL Web Console is an interactive SQL editor that also handles CSV import. Finally, QuestDB supports the PostgreSQL wire protocol for programmatic queries.
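The InfluxDB Line Protocol is a plain-text, newline-delimited format: a table name, optional comma-separated tags (which QuestDB stores as `SYMBOL` columns), a space, comma-separated field columns, a space, and a timestamp. The sketch below formats rows in that shape and streams them over a raw TCP socket. It assumes the TCP listener on port `9009` exposed by the Docker command and nanosecond timestamps; the helper names are hypothetical, and the official clients are the safer choice for production use.

```python
import socket
import time


def escape_name(text: str) -> str:
    """Escape commas, equals signs and spaces in tag keys, tag values and field names."""
    return text.replace(",", "\\,").replace("=", "\\=").replace(" ", "\\ ")


def format_field(value) -> str:
    """Render a Python value using the line protocol's field value syntax."""
    if isinstance(value, bool):  # test bool first: bool is a subclass of int
        return "t" if value else "f"
    if isinstance(value, int):
        return f"{value}i"  # integers carry an "i" suffix
    if isinstance(value, float):
        return repr(value)
    # Anything else is sent as a string: quote it and escape backslashes and quotes.
    escaped = str(value).replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'


def to_ilp_line(table, tags, fields, ts_ns=None):
    """Build one newline-terminated row: table[,tag=value...] field=value[,...] timestamp."""
    if ts_ns is None:
        ts_ns = time.time_ns()  # nanoseconds since the Unix epoch
    head = table.replace(",", "\\,").replace(" ", "\\ ")
    for key, val in tags.items():
        head += f",{escape_name(key)}={escape_name(str(val))}"
    body = ",".join(f"{escape_name(k)}={format_field(v)}" for k, v in fields.items())
    return f"{head} {body} {ts_ns}\n"


def send_rows(lines, host="localhost", port=9009):
    """Stream pre-formatted rows over TCP (assumes the ILP/TCP listener on port 9009)."""
    with socket.create_connection((host, port)) as sock:
        sock.sendall("".join(lines).encode("utf-8"))
```

For example, `to_ilp_line("trades", {"symbol": "BTC-USD"}, {"price": 39269.98}, 1700000000000000000)` produces `trades,symbol=BTC-USD price=39269.98 1700000000000000000` followed by a newline, which `send_rows` can then write to a running instance.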

Popular tools that integrate with QuestDB include Apache Kafka, Grafana, Superset, Telegraf and Apache Flink.

We provide a live demo provisioned with the latest QuestDB release and sample datasets:

- Trips: 10 years of NYC taxi trips with 1.6 billion rows

- Trades: live crypto market data with 30M+ rows per month

- Pos: geolocations of 250k unique ships over time

Check out our interactive real-time market data dashboards and NYC Taxi Data Analytics Dashboards, powered by QuestDB and Grafana.

| Query | Execution time |
|---|---|
| `SELECT sum(double) FROM trips` | 0.15 secs |
| `SELECT sum(double), avg(double) FROM trips` | 0.5 secs |
| `SELECT avg(double) FROM trips WHERE time in '2019'` | 0.02 secs |
| `SELECT time, avg(double) FROM trips WHERE time in '2019-01-01' SAMPLE BY 1h` | 0.01 secs |
| `SELECT * FROM trades LATEST ON timestamp PARTITION BY symbol` | 0.00025 secs |

Our demo runs on a c5.metal instance and uses 24 of its 96 cores.
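The last benchmark query uses `LATEST ON ... PARTITION BY` to return the most recent row for each distinct value of a column. As a rough illustration of the result only (not of QuestDB's execution strategy), a naive Python equivalent keeps, per partition key, the row with the greatest timestamp. The function name and the rows-as-dicts layout are hypothetical.

```python
def latest_on(rows, ts_key, partition_key):
    """Return the newest row for each distinct value of `partition_key`.

    Hypothetical helper mirroring `LATEST ON <ts_key> PARTITION BY <partition_key>`.
    On equal timestamps the first row seen wins. Results follow the order in
    which each key first appeared.
    """
    latest = {}
    for row in rows:
        key = row[partition_key]
        if key not in latest or row[ts_key] > latest[key][ts_key]:
            latest[key] = row
    return list(latest.values())
```

This version scans every row, so its cost grows with the table size; the sub-millisecond timing above suggests QuestDB avoids a full scan of that kind.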

The quickest way to get started with QuestDB is Docker:

```bash
docker run -p 9000:9000 -p 9009:9009 -p 8812:8812 questdb/questdb
```

macOS users can use Homebrew:

```bash
brew install questdb
brew services start questdb

questdb start # To start questdb
questdb stop  # To stop questdb
```

The QuestDB downloads page provides direct downloads for binaries and has details for other installation and deployment methods.

QuestDB Cloud is the fully managed version of QuestDB, with additional features such as role-based access control, cloud-native replication, compression, monitoring and cloud-native snapshots. Get started with $200 in credits.

You can interact with QuestDB using the following interfaces:

- Web Console for an interactive SQL editor and CSV import on port `9000`

- InfluxDB line protocol for streaming ingestion on port `9000`

- PostgreSQL wire protocol for programmatic queries and transactional inserts on port `8812`

- REST API for CSV import and cURL on port `9000`
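Besides CSV import, the REST API on port `9000` exposes an `/exec` endpoint that runs the SQL passed in the `query` URL parameter and returns JSON. The standard-library sketch below assumes the response carries a `columns` array of objects with a `name` key and a `dataset` array of rows; check the REST API reference for your QuestDB version. The helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request


def exec_url(query: str, host: str = "localhost", port: int = 9000) -> str:
    """Build an /exec URL, percent-encoding the SQL (spaces become %20)."""
    params = urllib.parse.urlencode({"query": query}, quote_via=urllib.parse.quote)
    return f"http://{host}:{port}/exec?{params}"


def rows_from_payload(payload: dict) -> list:
    """Zip the assumed `columns` metadata with each `dataset` row into a dict."""
    names = [column["name"] for column in payload["columns"]]
    return [dict(zip(names, row)) for row in payload["dataset"]]


def run_query(query: str, host: str = "localhost", port: int = 9000) -> list:
    """Run a query against a running instance and return one dict per row."""
    with urllib.request.urlopen(exec_url(query, host, port)) as response:
        return rows_from_payload(json.load(response))
```

Against a local instance that has a `trades` table, `run_query("SELECT * FROM trades LATEST ON timestamp PARTITION BY symbol")` would return one dict per symbol.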

Below are the official QuestDB clients for ingesting data via the InfluxDB Line Protocol:

Want to walk through everything, from streaming ingestion to visualization with Grafana? Check out our multi-path quickstart repository.

Check out our benchmark blog post, which compares QuestDB and InfluxDB across functionality, maturity and performance.

- QuestDB documentation: understand how to run and configure QuestDB.

- Tutorials: learn what's possible with QuestDB step by step.

- Product roadmap: check out our plan for upcoming releases.

- Community Slack: join technical discussions, ask questions, and meet other users!

- GitHub issues: report bugs or issues with QuestDB.

- Stack Overflow: look for common troubleshooting solutions.

We welcome contributions to the project, whether source code, documentation, bug reports, feature requests or feedback. To get started with contributing:

- Have a look through GitHub issues labelled "Good first issue".

- For Hacktoberfest, see the relevant labelled issues.

- Read the contribution guide.

- For details on building QuestDB, see the build instructions.

- Create a fork of QuestDB and submit a pull request with your proposed changes.

✨ As a sign of our gratitude, we also send QuestDB swag to our contributors. Claim your swag.

A big thanks goes to the following wonderful people who have contributed to QuestDB (emoji key):

This project adheres to the all-contributors specification. Contributions of any kind are welcome!