This repository is the official PyTorch implementation of Data-free Knowledge Distillation for Object Detection, WACV 2021.

Data-free Knowledge Distillation for Object Detection

Akshay Chawla, Hongxu Yin, Pavlo Molchanov and Jose Alvarez

NVIDIA

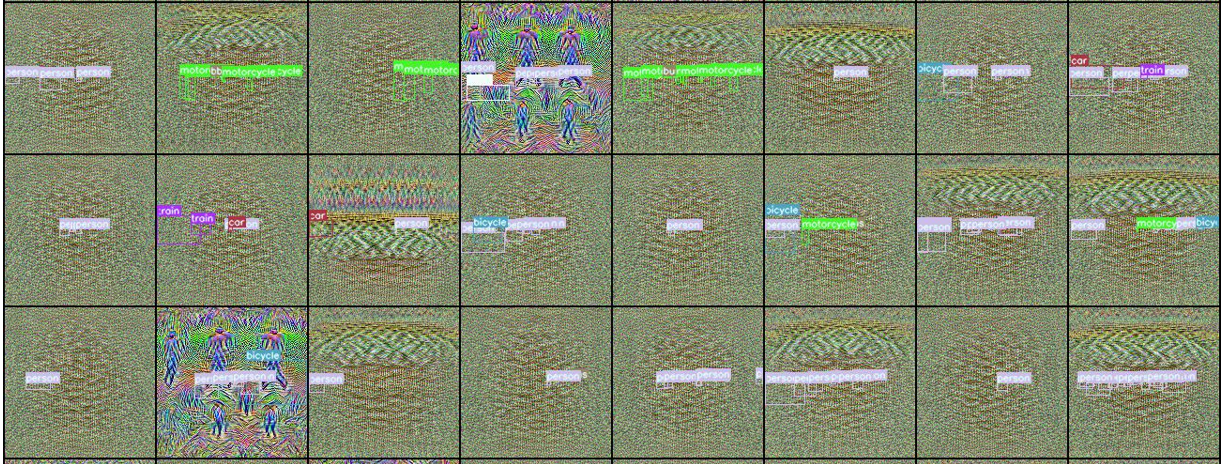

Abstract: We present DeepInversion for Object Detection (DIODE) to enable data-free knowledge distillation for neural networks trained on the object detection task. From a data-free perspective, DIODE synthesizes images given only an off-the-shelf pre-trained detection network and without any prior domain knowledge, generator network, or pre-computed activations. DIODE relies on two key components—first, an extensive set of differentiable augmentations to improve image fidelity and distillation effectiveness. Second, a novel automated bounding box and category sampling scheme for image synthesis enabling generating a large number of images with a diverse set of spatial and category objects. The resulting images enable data-free knowledge distillation from a teacher to a student detector, initialized from scratch. In an extensive set of experiments, we demonstrate that DIODE’s ability to match the original training distribution consistently enables more effective knowledge distillation than out-of-distribution proxy datasets, which unavoidably occur in a data-free setup given the absence of the original domain knowledge.

Copyright (c) 2021, NVIDIA CORPORATION. All rights reserved.

This work is made available under the Nvidia Source Code License (1-Way Commercial). To view a copy of this license, visit https://github.com/NVlabs/DIODE/blob/master/LICENSE

Install conda [link] python package manager then install the lpr environment and other packages as follows:

$ conda env create -f ./docker_environment/lpr_env.yml

$ conda activate lpr

$ conda install -y -c conda-forge opencv

$ conda install -y tqdm

$ git clone https://github.com/NVIDIA/apex

$ cd apex

$ pip install -v --no-cache-dir ./

Note: You may also generate a docker image based on provided Dockerfile docker_environments/Dockerfile.

This repository allows for generating location and category conditioned images from an off-the-shelf Yolo-V3 object detection model.

- Download the directory DIODE_data from google cloud storage: gcs-link (234 GB)

- Copy pre-trained yolo-v3 checkpoint and pickle files as follows:

$ cp /path/to/DIODE_data/pretrained/names.pkl /pathto/lpr_deep_inversion/models/yolo/ $ cp /path/to/DIODE_data/pretrained/colors.pkl /pathto/lpr_deep_inversion/models/yolo/ $ cp /path/to/DIODE_data/pretrained/yolov3-tiny.pt /pathto/lpr_deep_inversion/models/yolo/ $ cp /path/to/DIODE_data/pretrained/yolov3-spp-ultralytics.pt /pathto/lpr_deep_inversion/models/yolo/ - Extract the one-box dataset (single object per image) as follows:

$ cd /path/to/DIODE_data $ tar xzf onebox/onebox.tgz -C /tmp - Confirm the folder

/tmp/oneboxcontaining the onebox dataset is present and has following directories and text filemanifest.txt:$ cd /tmp/onebox $ ls images labels manifest.txt - Generate images from yolo-v3:

$ cd /path/to/lpr_deep_inversion $ chmod +x scripts/runner_yolo_multiscale.sh $ scripts/runner_yolo_multiscale.sh

- For ngc, use the provided bash script

scripts/diode_ngc_interactivejob.shto start an interactive ngc job with environment setup, code and data setup. - To generate large dataset use bash script

scripts/LINE_looped_runner_yolo.sh. - Check

knowledge_distillationsubfolder for code for knowledge distillation using generated datasets.

@inproceedings{chawla2021diode,

title = {Data-free Knowledge Distillation for Object Detection},

author = {Chawla, Akshay and Yin, Hongxu and Molchanov, Pavlo and Alvarez, Jose M.},

booktitle = {The IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},

month = January,

year = {2021}

}