This repository provides a template for training data-driven simulators that can then be leveraged for training brains (reinforcement learning agents) with Project Bonsai.

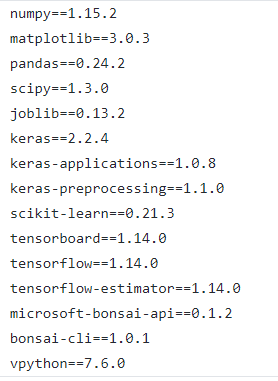

This repository leverages Anaconda for Python virtual environments and all dependencies. Please install Anaconda or Miniconda first and then run the following:

    conda env update -f environment.yml
    conda activate ddm

This will create and activate a new conda virtual environment named ddm based on the configuration in the environment.yml file.

To get an understanding of the package, you may want to look at the tests in tests and the configuration files in conf. You can run the tests with:

    pytest tests
    # or
    python -m pytest tests/

The scripts in this package expect that you have a dataset of CSVs or NumPy arrays. If you are using a CSV, you should ensure that:

- The CSV has a header with unique column names describing your inputs to the model and the outputs of the model.

- The CSV has a column for the episode index and another column for the iteration index.

- The CSV has been cleaned of any rows containing NaNs.
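The checks above can be sketched with Python's standard csv module. This is a minimal illustration and not part of the package: the function name validate_csv and the column names episode and iteration are assumptions, so substitute the names used in your own dataset.

```python
import csv
import io


def validate_csv(handle, episode_col="episode", iteration_col="iteration"):
    """Return a list of problems found in a logged CSV (empty if none).

    The column names are hypothetical defaults; pass the names used
    in your own dataset.
    """
    problems = []
    reader = csv.reader(handle)
    header = next(reader, None)
    if header is None:
        return ["file is empty"]

    # Column names must be unique.
    if len(set(header)) != len(header):
        problems.append("duplicate column names in header")

    # Episode and iteration index columns must be present.
    for required in (episode_col, iteration_col):
        if required not in header:
            problems.append(f"missing column: {required}")

    # No row may contain an empty cell or a NaN.
    for line_number, row in enumerate(reader, start=2):
        for cell in row:
            text = cell.strip()
            if text == "" or text.lower() == "nan":
                problems.append(f"NaN or empty value on line {line_number}")
                break
    return problems


# A tiny made-up dataset with one bad row.
sample = io.StringIO(
    "episode,iteration,cart_position,command\n"
    "0,0,0.01,1\n"
    "0,1,nan,0\n"
)
print(validate_csv(sample))
```

Running a check like this before training makes schema problems visible early, rather than as a failure partway through a training run.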

For an example of how to generate logged datasets from a simulator using the Python SDK, take a look at the examples in the samples repository. In particular, you can use the flags --test-local True --log-iteration True to generate CSV data that matches the schema used in this repository.

The scripts in this package leverage the configuration files saved in the conf folder to load CSV files, train and save models, and interface them to the Bonsai service. There are three configuration files:

- conf/data/$YOUR_DATA_CONFIG.yaml defines the interface to the data to train on

- conf/model/$YOUR_MODEL_CONFIG.yaml defines the Machine Learning model's hyper-parameters

- conf/simulator/$YOUR_SIM_CONFIG.yaml defines the simulator interface

The library comes with a default configuration set in conf/config.yaml.

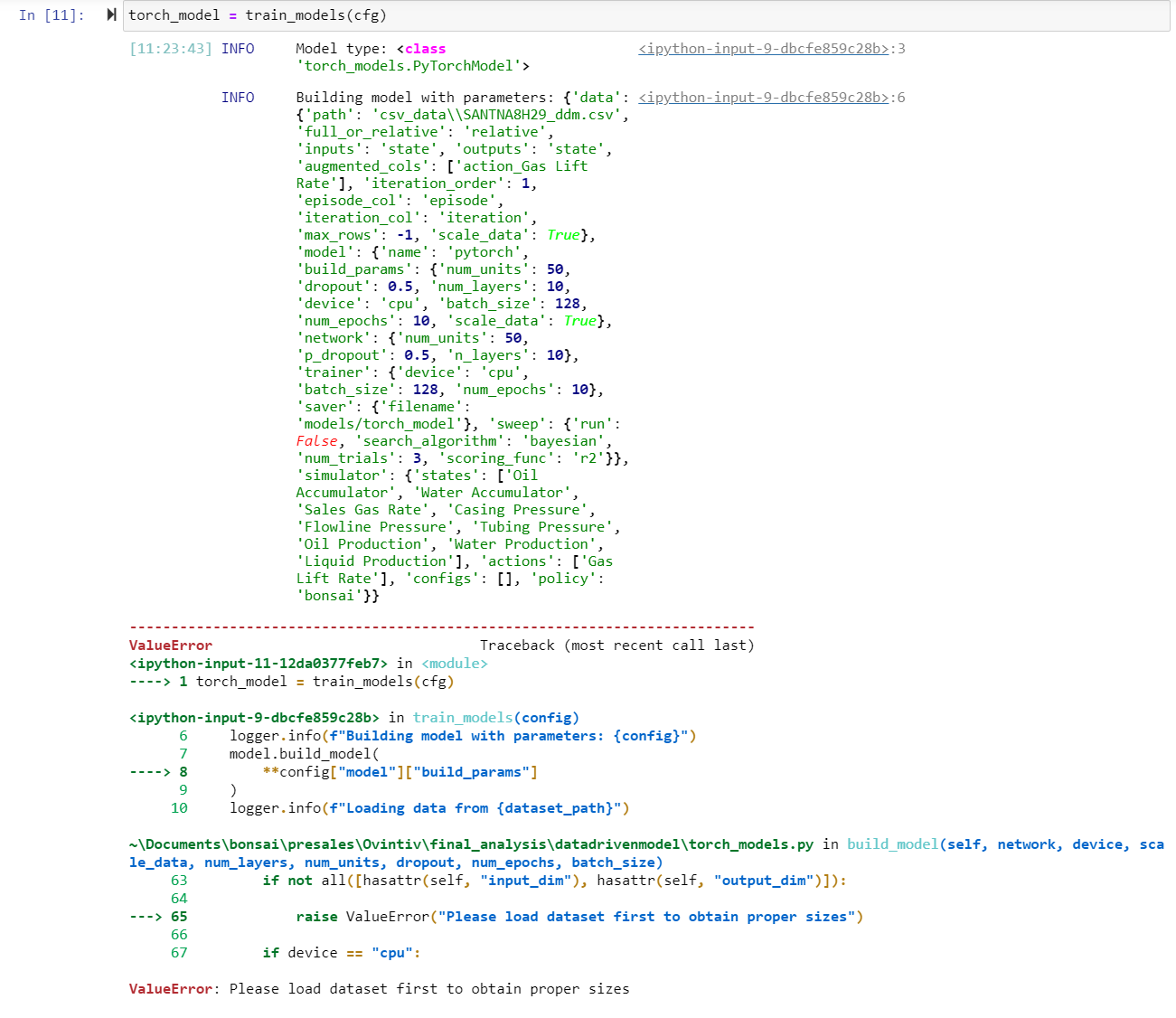

To train with the defaults, run:

    python ddm_trainer.py

You can change any configuration by specifying which one you would like to change and the name of the file to use instead, e.g.,

    python ddm_trainer.py data=cartpole_st_at simulator=gboost_cartpole

which will use the configuration files in conf/data/cartpole_st_at.yaml and conf/simulator/gboost_cartpole.yaml.

You can also override the parameters of the configuration file by specifying their name:

    python ddm_trainer.py data.path=csv_data/cartpole_at_st.csv data.iteration_order=1
    python ddm_trainer.py data.path=csv_data/cartpole_at_st.csv model=xgboost

The script automatically saves your model to the path specified by model.saver.filename. An outputs directory containing your configuration file and logs is also created.
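To make the dotted overrides concrete, the sketch below applies key=value arguments to a nested dictionary. It illustrates the semantics only and is not the mechanism ddm_trainer.py uses; the helper apply_overrides and the sample config values are hypothetical.

```python
import ast


def apply_overrides(config, overrides):
    """Apply dotted key=value overrides to a nested dict, in place.

    Hypothetical helper for illustration; values are parsed as Python
    literals when possible and otherwise kept as strings.
    """
    for item in overrides:
        dotted_key, _, raw_value = item.partition("=")
        try:
            value = ast.literal_eval(raw_value)
        except (ValueError, SyntaxError):
            value = raw_value  # e.g. a file path or a config name
        *parents, leaf = dotted_key.split(".")
        node = config
        for name in parents:
            node = node.setdefault(name, {})
        node[leaf] = value
    return config


# Sample starting values are made up for the example.
config = {"data": {"path": "csv_data/example.csv", "iteration_order": -1}}
apply_overrides(
    config,
    ["data.path=csv_data/cartpole_at_st.csv", "data.iteration_order=1"],
)
print(config)
```

Each dotted segment walks one level down the configuration, so data.iteration_order=1 changes only that one key and leaves the rest of the data configuration untouched.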

To specify episode initializations and scenario parameters, you can provide a dictionary of parameters in the simulator YAML file:

    simulator:
      states:
        ["cart_position", "cart_velocity", "pole_angle", "pole_angular_velocity"]
      actions: ["command"]
      configs: ["pole_length", "pole_mass", "cart_mass"]
      # estimate these during training
      # e.g.,:
      episode_inits: { "pole_length": 0.4, "pole_mass": 0.055, "cart_mass": 0.31 }
      # e.g.,: your simulator may need to know the initial state
      # before the first episode. define these here as a dictionary
      # you can include these in your Inkling scenarios during brain training
      initial_states:
        {
          "cart_position": 0,
          "cart_velocity": 0,
          "pole_angle": 0,
          "pole_angular_velocity": 0,
        }
      # episode_inits:
      policy: bonsai
      logging: enable
      workspace_setup: True

When training with a brain, make sure that your scenario definitions include initial_states values, episode_inits values, or both.
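One way to picture how these defaults interact with a scenario is a simple merge: values sent at episode start take precedence, and the YAML defaults fill in anything missing. The sketch below assumes this precedence, which the text above does not spell out; resolve_episode_config and the sample scenario are hypothetical.

```python
def resolve_episode_config(defaults, scenario):
    """Merge scenario values over YAML defaults (assumed precedence)."""
    unknown = sorted(set(scenario) - set(defaults))
    if unknown:
        raise KeyError(f"scenario keys not declared in the config: {unknown}")
    resolved = dict(defaults)  # copy so the defaults stay unchanged
    resolved.update(scenario)
    return resolved


# Defaults copied from the YAML example above.
episode_inits = {"pole_length": 0.4, "pole_mass": 0.055, "cart_mass": 0.31}
initial_states = {
    "cart_position": 0,
    "cart_velocity": 0,
    "pole_angle": 0,
    "pole_angular_velocity": 0,
}

# A made-up scenario that changes one config and one initial state.
scenario = {"pole_length": 0.5, "pole_angle": 0.05}
defaults = {**episode_inits, **initial_states}
print(resolve_episode_config(defaults, scenario))
```

Rejecting undeclared keys is a design choice for the sketch: a misspelled scenario name fails loudly instead of being silently ignored.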

You can also run hyperparameter tuning by setting sweep.run to True in your conf/model/$YOUR_MODEL_CONFIG.yaml file and specifying the parameters to sweep over and their distributions in the params argument:

    sweep:
      run: True
      search_algorithm: random
      num_trials: 3
      scoring_func: r2
      params:
        estimator__max_depth: [1, 3, 5, 10]
        estimator__gamma: [0, 0.5, 1, 5]
        estimator__subsample: [0.1, 0.5, 1]

The sweeping function uses tune-sklearn. Valid choices for search_algorithm are: bayesian, random, bohb, and hyperopt.
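For intuition about what search_algorithm: random with num_trials: 3 does, the sketch below draws three candidate settings uniformly from the lists in params. It is a plain-Python stand-in and does not call tune-sklearn; sample_trials is a hypothetical helper, and a real sweep would also fit and score a model for each candidate.

```python
import random


def sample_trials(params, num_trials, seed=None):
    """Draw num_trials random combinations from lists of candidate values."""
    rng = random.Random(seed)
    return [
        {name: rng.choice(values) for name, values in params.items()}
        for _ in range(num_trials)
    ]


# Candidate values copied from the sweep example above.
params = {
    "estimator__max_depth": [1, 3, 5, 10],
    "estimator__gamma": [0, 0.5, 1, 5],
    "estimator__subsample": [0.1, 0.5, 1],
}

for trial in sample_trials(params, num_trials=3, seed=0):
    print(trial)
```

With 4 x 4 x 3 = 48 possible combinations, three trials cover only a small part of the grid, so increasing num_trials trades run time for coverage.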

The schema for your simulator resides in conf/simulator. After defining your states, actions, and configs, you can run the simulator as follows:

    python ddm_predictor.py simulator=$YOUR_SIM_CONFIG

NOTE: If you want to train with Bonsai, make sure the policy key in your conf/simulator config is set to "bonsai" instead of "random".

If you would like to test your simulator before connecting to the platform, you can use a random policy:

    python ddm_predictor.py simulator=$YOUR_SIM_CONFIG simulator.policy=random

NOTE: The optional flags should NOT include the .yml extension; pass just the name of the config file.

If you're having trouble running locally, chances are you need to set up your workspace and access key configs. You can do this by using environment variables or the following command:

    python ddm_predictor.py simulator.workspace_setup=True

Sometimes there are variables that need to be provided from the simulator for brain training, but we don't necessarily want a ddm model to predict them. A SignalBuilder class in signal_builder.py can be used to create signals of different types, such as step_function, ramp, and sinewave. You can configure signals in the conf/simulator file by adding a key and the desired signal type. The horizon is the length of the episode. The signal is created, and its current value is provided as ddm_predictor.py indexes through an episode.

Depending on the signal type, you'll need to provide different signal parameters. You can randomize the signals at the start of every episode by specifying a min and max for each parameter. If you want a parameter to be constant, set its min and max to the same value.

- start

- stop

- transition

- start

- stop

- amplitude

- mean

- value

- conditions (must use list)

- values (must use list)
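As a rough picture of what such signals look like, the sketch below builds a step function and a sine wave over a horizon, drawing parameters uniformly between their min and max at the start of an episode. It does not use the SignalBuilder class, whose interface is not shown here. The function names, the period argument, and the reading of start, stop, and transition as "value before", "value after", and "switch index" are all assumptions made for the example.

```python
import math
import random


def draw(bounds, rng):
    """Sample uniformly in [min, max]; equal bounds give a constant."""
    return rng.uniform(bounds["min"], bounds["max"])


def step_signal(horizon, start, stop, transition):
    """Hold `start` until index `transition`, then hold `stop` (assumed meaning)."""
    return [start if t < transition else stop for t in range(horizon)]


def sine_signal(horizon, amplitude, mean, period):
    """Sine wave around `mean`; `period` (in iterations) is an assumption."""
    return [
        mean + amplitude * math.sin(2 * math.pi * t / period)
        for t in range(horizon)
    ]


rng = random.Random(0)
horizon = 288

# Randomize the amplitude per episode; keep the mean fixed (min == max).
amplitude = draw({"min": 3, "max": 5}, rng)
mean = draw({"min": 25, "max": 25}, rng)

tout = sine_signal(horizon, amplitude, mean, period=horizon)
tset = step_signal(horizon, start=22, stop=25, transition=110)

# A simulator loop would read one value per iteration.
print(tset[109], tset[110], round(tout[0], 2))
```

Precomputing the whole signal once per episode keeps the per-iteration work to a single list lookup.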

    signal_builder:
      signal_types:
        Tset: step_function
        Tout: sinewave
      horizon: 288
      signal_params:
        Tset:
          conditions:
            min: [0, 110, 205]
            max: [0, 110, 205]
          values:
            min: [25, 22, 24]
            max: [25, 22, 24]
        Tout:
          amplitude:
            min: 5
            max: 5
          median:
            min: 25
            max: 25

median: 25

Validating your ddm simulator against the original sim is strongly recommended, paying particular attention to how errors propagate over sequential predictions. ddm_test_validate.py is one way to do this; it generates two CSV files in outputs/<DATE>/<TIME>/logs.

NOTE: ddm_test_validate.py does NOT currently generate plots for generic models
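The reason sequential error matters can be seen with a toy example: a model whose one-step predictions are nearly perfect can still drift when it is fed its own outputs. The sketch below uses a made-up scalar system and a slightly wrong model; neither comes from this repository, and rollout_errors is a hypothetical helper.

```python
def true_step(x, u):
    """Toy 'original simulator' dynamics (made up for illustration)."""
    return 0.95 * x + u


def model_step(x, u):
    """Toy learned model with a small coefficient error."""
    return 0.97 * x + u


def rollout_errors(x0, actions):
    """Compare one-step and sequential (free-running) prediction errors."""
    one_step, sequential = [], []
    x_true, x_model = x0, x0
    for u in actions:
        next_true = true_step(x_true, u)
        # One-step: the model starts from the true state every iteration.
        one_step.append(abs(model_step(x_true, u) - next_true))
        # Sequential: the model starts from its own previous prediction.
        x_model = model_step(x_model, u)
        sequential.append(abs(x_model - next_true))
        x_true = next_true
    return one_step, sequential


one_step, sequential = rollout_errors(x0=1.0, actions=[0.1] * 50)
print(f"max one-step error:   {max(one_step):.3f}")
print(f"max sequential error: {max(sequential):.3f}")
```

A brain trained against the ddm simulator experiences the sequential case, so a low one-step error alone is not enough evidence that the model is usable.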

To use ddm_test_validate.py, follow these steps:

- Place the original simulator's main.py at the same root level as ddm_test_validate.py. Add simulator files into sim/<FOLDER>/sim/.

      ├───ddm_test_validate.py
      ├───main.py
      ├───sim
      │   ├───quanser
      │   │   ├───sim
      │   │   │   ├───qube_simulator.py

- Modify imports so main.py can successfully run the simulator in its new location.

      from sim.quanser.sim.qube_simulator import QubeSimulator
      from sim.quanser.policies import random_policy, brain_policy

- Ensure the config in conf/simulator does NOT have the default policy as bonsai. You'll want to use "random" or create your own expert policy.

NOTE: You can override your policy from the CLI, as shown in the final step.

- Provide a scenario config in ddm_test_validate.py to ensure you start with initial configurations that are better than just random.

      '''
      TODO: Add episode_start(config) so sim works properly and not initializing
      with unrealistic initial conditions.
      '''
      sim.episode_start()
      ddm_state = sim.get_state()
      sim_state = sim.get_sim_state()

- Run ddm_test_validate.py:

      python ddm_test_validate.py simulator.policy=random

To build the simulator image in your Azure Container Registry, run:

    az acr build --image <IMAGE_NAME>:<IMAGE_VERSION> --file Dockerfile --registry <ACR_REGISTRY> .

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.microsoft.com.

When you submit a pull request, a CLA-bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., label, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repositories using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact [email protected] with any additional questions or comments.

The software may collect information about you and your use of the software and send it to Microsoft. Microsoft may use this information to provide services and improve our products and services. You may turn off the telemetry as described in the repository. There are also some features in the software that may enable you and Microsoft to collect data from users of your applications. If you use these features, you must comply with applicable law, including providing appropriate notices to users of your applications together with a copy of Microsoft's privacy statement. Our privacy statement is located at https://go.microsoft.com/fwlink/?LinkID=824704. You can learn more about data collection and use in the help documentation and our privacy statement. Your use of the software operates as your consent to these practices.

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos are subject to those third-party's policies.