- 2023.5.31: TheBloke has created a 🤗Wizard-Vicuna-30B-Uncensored-GGML model for us!

- 2023.5.31: TheBloke has created a 🤗Wizard-Vicuna-30B-Uncensored-fp16 model for us!

- 2023.5.31: TheBloke has created a 🤗Wizard-Vicuna-30B-Uncensored-GPTQ model for us!

- 2023.5.30: ehartford has created a 🤗Wizard-Vicuna-30B-Uncensored model for us!

- 2023.5.20: TheBloke has created a 🤗Wizard-Vicuna-7B-Uncensored-GGML model for us!

- 2023.5.20: TheBloke has created a 🤗Wizard-Vicuna-13B-Uncensored-GGML model for us!

- 2023.5.18: ehartford has created a 🤗Wizard-Vicuna-7B-Uncensored model for us!

- 2023.5.13: ehartford has created a 🤗Wizard-Vicuna-13B-Uncensored model for us!

- 2023.5.5: TheBloke has created a 🤗wizard-vicuna-13B-HF version for us!

- 2023.5.5: TheBloke has created a 🤗wizard-vicuna-13B-GPTQ version for us!

- 2023.5.5: TheBloke has created a 🤗WizardVicuna 13B GGML version for us!

- 2023.5.4: We are releasing the 🤗WizardVicuna 13B model.

- 2023.5.3: We are releasing the 🤗WizardVicuna 70K conversation dataset.

I am a big fan of the ideas behind WizardLM and VicunaLM. I particularly like how WizardLM handles the dataset itself more deeply and broadly, and how VicunaLM overcomes the limitations of single-turn conversations by introducing multi-round conversations. So I combined these two ideas to create WizardVicunaLM. This project is highly experimental and designed as a proof of concept, not for actual use.

gptordie

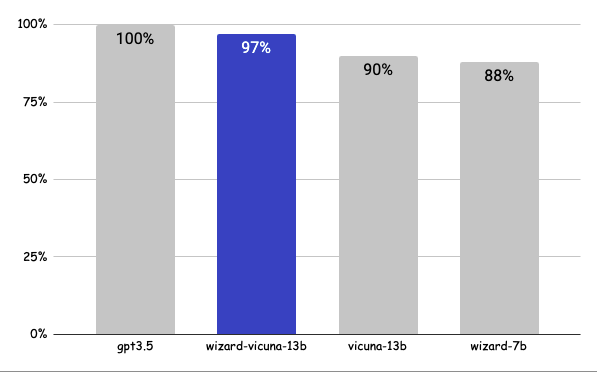

The questions presented here are not from rigorous tests; rather, I asked a few questions and had GPT-4 score the answers. The models compared were ChatGPT 3.5, WizardVicunaLM, VicunaLM, and WizardLM, in that order.

| | gpt3.5 | wizard-vicuna-13b | vicuna-13b | wizard-7b | link |
|---|---|---|---|---|---|
| Q1 | 95 | 90 | 85 | 88 | link |
| Q2 | 95 | 97 | 90 | 89 | link |
| Q3 | 85 | 90 | 80 | 65 | link |
| Q4 | 90 | 85 | 80 | 75 | link |
| Q5 | 90 | 85 | 80 | 75 | link |
| Q6 | 92 | 85 | 87 | 88 | link |
| Q7 | 95 | 90 | 85 | 92 | link |
| Q8 | 90 | 85 | 75 | 70 | link |
| Q9 | 92 | 85 | 70 | 60 | link |
| Q10 | 90 | 80 | 75 | 85 | link |
| Q11 | 90 | 85 | 75 | 65 | link |
| Q12 | 85 | 90 | 80 | 88 | link |
| Q13 | 90 | 95 | 88 | 85 | link |
| Q14 | 94 | 89 | 90 | 91 | link |
| Q15 | 90 | 85 | 88 | 87 | link |
| Average | 91 | 88 | 82 | 80 | |
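As a quick sanity check, the averages in the last row can be recomputed from the per-question scores. Below is a minimal Python sketch with the scores copied from the table; the names `scores` and `average` are just for illustration.

```python
# Per-question GPT-4 scores (Q1..Q15) copied from the comparison table.
scores = {
    "gpt3.5": [95, 95, 85, 90, 90, 92, 95, 90, 92, 90, 90, 85, 90, 94, 90],
    "wizard-vicuna-13b": [90, 97, 90, 85, 85, 85, 90, 85, 85, 80, 85, 90, 95, 89, 85],
    "vicuna-13b": [85, 90, 80, 80, 80, 87, 85, 75, 70, 75, 75, 80, 88, 90, 88],
    "wizard-7b": [88, 89, 65, 75, 75, 88, 92, 70, 60, 85, 65, 88, 85, 91, 87],
}


def average(values):
    """Arithmetic mean of a list of scores."""
    return sum(values) / len(values)


for model, values in scores.items():
    # Print the exact mean and the value rounded to the nearest integer,
    # which is what the table's last row reports.
    mean = average(values)
    print(f"{model}: {mean:.2f} -> {round(mean)}")
```

This prints 90.87, 87.73, 81.87, and 80.20, which round to the 91, 88, 82, and 80 shown in the table.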

We adopted WizardLM's approach of expanding a single problem in greater depth. However, instead of using individual instructions, we expanded each problem using Vicuna's conversation format and applied Vicuna's fine-tuning techniques.

Turning a single command into a rich conversation is what we've done here.

After creating the training data, I trained the model following the Vicuna v1.1 training method.

First, we explored and expanded various areas within the same topic, starting from the 7K conversations created by WizardLM. However, we used a continuous conversation format instead of the instruction format. That is, each conversation starts with a WizardLM instruction and then expands into various areas within a single conversation using ChatGPT 3.5.
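To make the continuous conversation format more concrete, here is a minimal sketch of how one expanded conversation could be stored as a training record. It assumes the ShareGPT-style JSON layout used by FastChat's Vicuna training scripts (a `conversations` list whose entries have `from` set to `human` or `gpt` plus a `value`); the helper `to_vicuna_record`, the `id` scheme, and the sample turns are hypothetical and not taken from the actual dataset.

```python
import json


def to_vicuna_record(record_id, turns):
    """Convert (user, assistant) pairs into a ShareGPT-style record (hypothetical helper).

    The first user message would be the original WizardLM instruction; later
    pairs stand in for the follow-up rounds generated with ChatGPT 3.5.
    """
    conversations = []
    for user_message, assistant_reply in turns:
        conversations.append({"from": "human", "value": user_message})
        conversations.append({"from": "gpt", "value": assistant_reply})
    return {"id": record_id, "conversations": conversations}


# Hypothetical example: one instruction expanded into a two-round conversation.
record = to_vicuna_record(
    "example_0",
    [
        ("Explain what a hash table is.",
         "A hash table maps keys to values using a hash function."),
        ("How are collisions handled?",
         "Common strategies are separate chaining and open addressing."),
    ],
)
print(json.dumps(record, indent=2))
```

The design choice here is simply that each extra round of the expanded conversation becomes another human/gpt pair in the same record, rather than a separate single-turn instruction.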

After that, we fine-tuned the model on this data using Vicuna's fine-tuning format.

Trained with 8 A100 GPUs for 35 hours.

The dataset we used for training and the 13B model are available on Hugging Face.

If we extend the conversations using GPT-4 32K, we can expect a dramatic improvement: its 32K context window is 8x the 4K window of ChatGPT 3.5, so we could generate conversations that are 8x longer, as well as more accurate and richer.

This model was trained with Vicuna v1.1, so it performs best when prompted in the following format:

```
USER: What is 4x8?
ASSISTANT:
```
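For multi-turn use, earlier rounds can be prepended in the same `USER:`/`ASSISTANT:` layout. Below is a minimal Python sketch that assumes one turn per line as in the example prompt; the helper name `build_prompt` is hypothetical. FastChat's own Vicuna v1.1 template may also add a leading system sentence and `</s>` separators after assistant replies, which this sketch omits, so check that template if exact reproduction matters.

```python
def build_prompt(history, user_message):
    """Build a Vicuna v1.1-style prompt (hypothetical helper).

    `history` is a list of (user, assistant) pairs from earlier rounds.
    The prompt ends with "ASSISTANT:" so the model writes the next reply.
    """
    lines = []
    for past_user, past_assistant in history:
        lines.append(f"USER: {past_user}")
        lines.append(f"ASSISTANT: {past_assistant}")
    lines.append(f"USER: {user_message}")
    lines.append("ASSISTANT:")
    return "\n".join(lines)


print(build_prompt([], "What is 4x8?"))
# USER: What is 4x8?
# ASSISTANT:
```

A second round would be built as `build_prompt([("What is 4x8?", "4x8 is 32.")], "And 4x9?")`, which keeps the first exchange in the prompt before the new question.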

Although it was tuned 100% on English data, curiously, its abilities in other languages such as Korean, Chinese, and Japanese appear to have improved, even though their share of the training data should have decreased.

link1

link2

The model is subject to the LLaMA model license, and the dataset is subject to OpenAI's terms because it was generated with ChatGPT. Everything else is free.

JUNE LEE - He is active in Songdo Artificial Intelligence Study and GDG Songdo.