A Python library for Continual Inference Networks in PyTorch


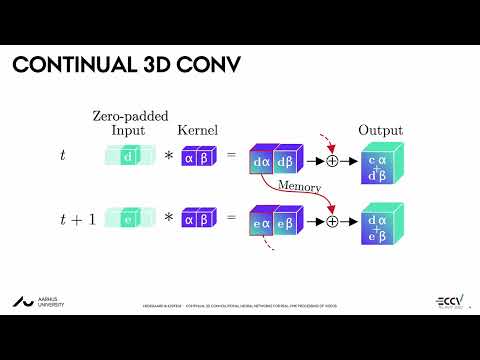

Many of our favorite Deep Neural Network architectures (e.g., CNNs and Transformers) were built for offline processing. Rather than processing inputs one sequence element at a time, they require the whole (spatio-)temporal sequence to be passed as a single input. Yet, many important real-life applications need online predictions on a continual input stream. While CNNs and Transformers can be applied by re-assembling and passing sequences within a sliding window, this is inefficient because overlapping clips cause redundant intermediate computations.

Continual Inference Networks (CINs) are built to ensure efficient stream processing by employing an alternative computational ordering, which allows sequential computations without the use of sliding window processing. In general, CINs require approximately L× fewer FLOPs per prediction than sliding-window inference with non-CINs, where L is the sequence length of the corresponding non-CIN network. For more details, check out the videos below describing Continual 3D CNNs [1] and Transformers [2].
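To make the approximate L× figure concrete, the sketch below counts multiplications per prediction for a single toy layer. It assumes one single-channel temporal convolution with stride 1 and no padding; the function names are hypothetical and are not part of the library. Real networks stack many layers, so measured reductions will differ.

```python
def sliding_window_mults(seq_len: int, kernel_size: int) -> int:
    """Multiplications per prediction when the convolution is recomputed
    over the full window of seq_len inputs for every new prediction."""
    num_outputs = seq_len - kernel_size + 1
    return num_outputs * kernel_size


def continual_mults(kernel_size: int) -> int:
    """Multiplications per prediction when only the newest output is
    computed and earlier inputs are kept in cached state."""
    return kernel_size


seq_len, kernel_size = 64, 3
ratio = sliding_window_mults(seq_len, kernel_size) / continual_mults(kernel_size)
print(ratio)  # 62.0, i.e. close to L = 64
```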

- 2022-12-02: ONNX compatibility for all modules is available from v1.0.0. See test_onnx.py for examples.

pip install continual-inference

co modules are weight-compatible drop-in replacements for torch.nn modules, enhanced with the capability of efficient continual inference:

import torch

import continual as co

# B, C, T, H, W

example = torch.randn((1, 1, 5, 3, 3))

conv = co.Conv3d(in_channels=1, out_channels=1, kernel_size=(3, 3, 3))

# Same exact computation as torch.nn.Conv3d ✅

output = conv(example)

# But can also perform online inference efficiently 🚀

firsts = conv.forward_steps(example[:, :, :4])

last = conv.forward_step(example[:, :, 4])

assert torch.allclose(output[:, :, : conv.delay], firsts)

assert torch.allclose(output[:, :, conv.delay], last)

# Temporal properties

assert conv.receptive_field == 3

assert conv.delay == 2

See the network composition and model zoo sections for additional examples.

The library components feature three distinct forward modes, which are handy for different situations, namely forward, forward_step, and forward_steps:

forward performs a forward computation over multiple time-steps. This function is identical to that of the corresponding module in torch.nn, ensuring cross-compatibility. Moreover, it's handy for efficient training on clip-based data.

O (O: output)

↑

N (N: network module)

↑

----------------- (-: aggregation)

P I I I P (I: input frame, P: padding)

forward_step performs a forward computation for a single frame and (optionally) updates internal states accordingly. This function performs efficient continual inference.

O+S O+S O+S O+S (O: output, S: updated internal state)

↑ ↑ ↑ ↑

N N N N (N: network module)

↑ ↑ ↑ ↑

I I I I (I: input frame)

forward_steps performs a forward computation across multiple time-steps while updating internal states for continual inference (if update_state=True). Start-padding is always accounted for, but end-padding is omitted by default in anticipation of the next input step. It can be added by specifying pad_end=True. If so, the output-input mapping is exactly the same as that of forward.

O (O: output)

↑

----------------- (-: aggregation)

O O+S O+S O+S O (O: output, S: updated internal state)

↑ ↑ ↑ ↑ ↑

N N N N N (N: network module)

↑ ↑ ↑ ↑ ↑

P I I I P (I: input frame, P: padding)
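The relationship between the three modes can be sketched with a toy module in plain Python. This is a hypothetical single-channel temporal convolution with stride 1 and no padding, not the library's implementation; padding and the pad_end option are deliberately left out, and ToyContinualConv1d is an invented name.

```python
from collections import deque
from typing import List, Optional


class ToyContinualConv1d:
    """Toy temporal convolution (illustration only, not co.Conv1d)."""

    def __init__(self, weights: List[float]):
        self.weights = weights
        self.receptive_field = len(weights)
        self.delay = self.receptive_field - 1
        # Internal state: the most recent (receptive_field - 1) inputs.
        self.state: deque = deque(maxlen=self.delay)

    def forward(self, xs: List[float]) -> List[float]:
        """Offline mode: process the whole sequence; state is not touched."""
        k = self.receptive_field
        return [
            sum(w * x for w, x in zip(self.weights, xs[t : t + k]))
            for t in range(len(xs) - k + 1)
        ]

    def forward_step(self, x: float) -> Optional[float]:
        """Online mode: consume one step; return None while warming up."""
        window = list(self.state) + [x]
        out = None
        if len(window) == self.receptive_field:
            out = sum(w * v for w, v in zip(self.weights, window))
        self.state.append(x)
        return out

    def forward_steps(self, xs: List[float]) -> List[float]:
        """Online mode over several steps; warm-up None outputs are dropped."""
        outs = [self.forward_step(x) for x in xs]
        return [o for o in outs if o is not None]


conv = ToyContinualConv1d([1.0, 2.0, 3.0])
xs = [1.0, 2.0, 3.0, 4.0, 5.0]

offline = conv.forward(xs)            # all outputs at once
firsts = conv.forward_steps(xs[:4])   # first four steps, state is updated
last = conv.forward_step(xs[4])       # one more step

assert offline[: conv.delay] == firsts
assert offline[conv.delay] == last
```

The two assertions mirror the quick-start example: stepping through the stream yields the same values as the offline pass, only spread over time.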

By default, the __call__ function operates identically to torch.nn and executes forward. We supply two options for changing this behavior, namely the call_mode property and the call_mode context manager. An example of their use follows:

timeseries = torch.randn(batch, channel, time)

timestep = timeseries[:, :, 0]

net(timeseries) # Invokes net.forward(timeseries)

# Assign permanent call_mode property

net.call_mode = "forward_step"

net(timestep) # Invokes net.forward_step(timestep)

# Assign temporary call_mode with context manager

with co.call_mode("forward_steps"):

    net(timeseries) # Invokes net.forward_steps(timeseries)

net(timestep) # Invokes net.forward_step(timestep) again

Continual Inference Networks require strict handling of internal data delays to guarantee correspondence between forward modes. While it is possible to compose neural networks by defining forward, forward_step, and forward_steps manually, correct handling of delays is cumbersome and time-consuming. Instead, we provide a rich interface of container modules, which handle delays automatically. On top of co.Sequential (which is a drop-in replacement of torch.nn.Sequential), we provide modules for handling parallel and conditional dataflow.

- co.Sequential: Invoke modules sequentially, passing the output of one module onto the next.
- co.Broadcast: Broadcast one stream to multiple.
- co.Parallel: Invoke modules in parallel given each their input.
- co.ParallelDispatch: Dispatch multiple input streams to multiple output streams flexibly.
- co.Reduce: Reduce multiple input streams to one.
- co.BroadcastReduce: Shorthand for Sequential(Broadcast, Parallel, Reduce).
- co.Residual: Residual connection.
- co.Conditional: Conditionally checks whether to invoke a module (or another) at runtime.
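Why delays need careful handling is easiest to see in a residual connection: the branch with a temporal receptive field emits its first output later than the skip branch, so the skip branch has to be delayed by the same number of steps before the two can be summed. The sketch below is a toy model in plain Python with hypothetical classes (ToyConv, ToyDelay); it assumes a window sum without temporal padding, so the delay equals kernel_size - 1.

```python
from collections import deque
from typing import Optional


class ToyDelay:
    """Pure delay: returns the input seen `steps` time-steps ago."""

    def __init__(self, steps: int):
        self.steps = steps
        self.buffer: deque = deque(maxlen=steps + 1)

    def forward_step(self, x: float) -> Optional[float]:
        self.buffer.append(x)
        if len(self.buffer) <= self.steps:
            return None  # not enough history yet
        return self.buffer[0]


class ToyConv:
    """Sum over the last `kernel_size` inputs; delay = kernel_size - 1."""

    def __init__(self, kernel_size: int):
        self.kernel_size = kernel_size
        self.delay = kernel_size - 1
        self.window: deque = deque(maxlen=kernel_size)

    def forward_step(self, x: float) -> Optional[float]:
        self.window.append(x)
        if len(self.window) < self.kernel_size:
            return None  # still warming up
        return sum(self.window)


def residual_step(conv: ToyConv, skip: ToyDelay, x: float) -> Optional[float]:
    """Broadcast x to both branches and sum once both have an output."""
    a, b = conv.forward_step(x), skip.forward_step(x)
    if a is None or b is None:
        return None
    return a + b


conv = ToyConv(kernel_size=3)
skip = ToyDelay(steps=conv.delay)  # match the delay of the conv branch
outputs = [residual_step(conv, skip, x) for x in [1.0, 2.0, 3.0, 4.0, 5.0]]
print(outputs)  # [None, None, 7.0, 11.0, 15.0]
```

With a mismatched delay, the two branches would pair values from different time-steps, which is the kind of bookkeeping the container modules are described as handling automatically.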

Residual module

Short-hand:

residual = co.Residual(co.Conv3d(32, 32, kernel_size=3, padding=1))Explicit:

residual = co.Sequential(

co.Broadcast(2),

co.Parallel(

co.Conv3d(32, 32, kernel_size=3, padding=1),

co.Delay(2),

),

co.Reduce("sum"),

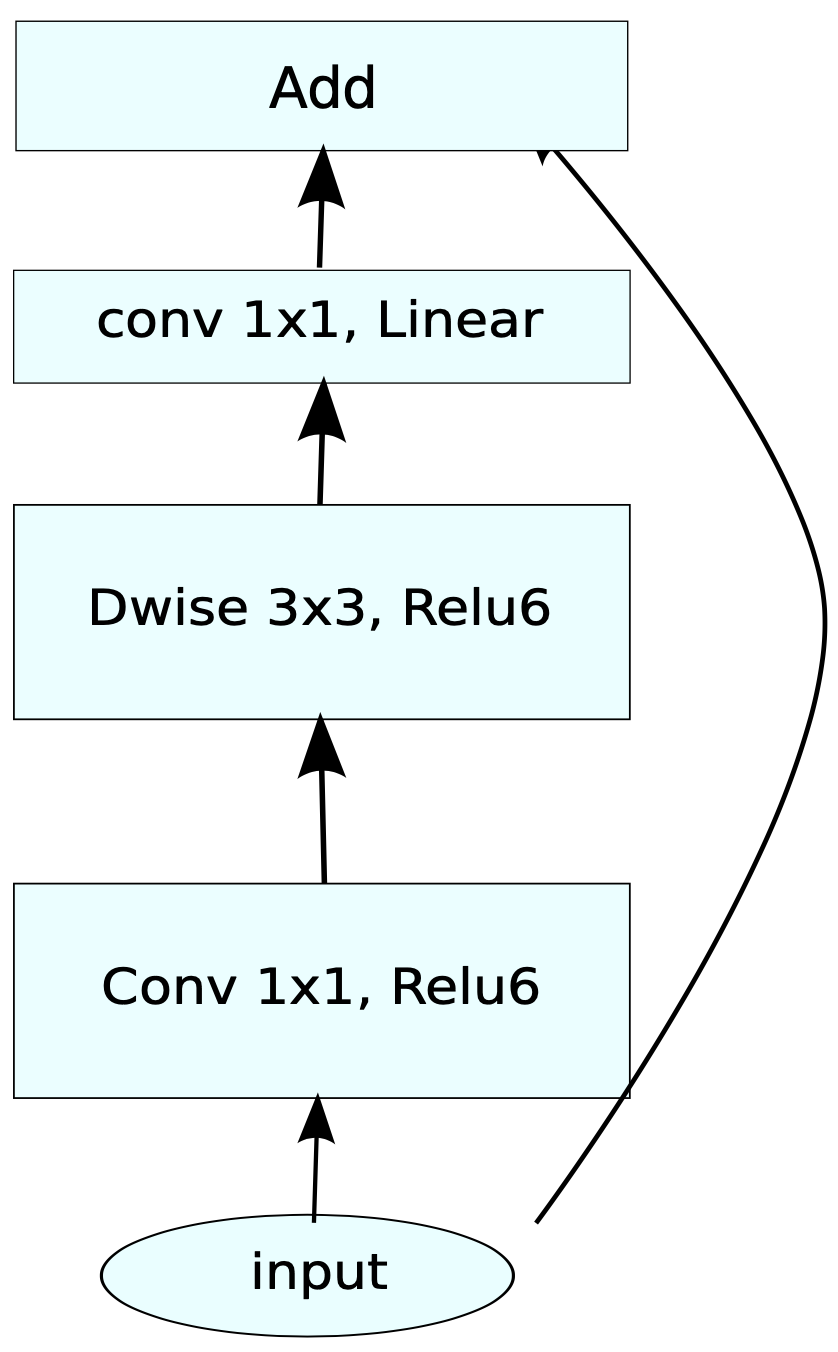

)3D MobileNetV2 Inverted residual block

Continual 3D version of the MobileNetV2 Inverted residual block.

mb_conv = co.Residual(

co.Sequential(

co.Conv3d(32, 64, kernel_size=(1, 1, 1)),

nn.BatchNorm3d(64),

nn.ReLU6(),

co.Conv3d(64, 64, kernel_size=(3, 3, 3), padding=(1, 1, 1), groups=64),

nn.ReLU6(),

co.Conv3d(64, 32, kernel_size=(1, 1, 1)),

nn.BatchNorm3d(32),

)

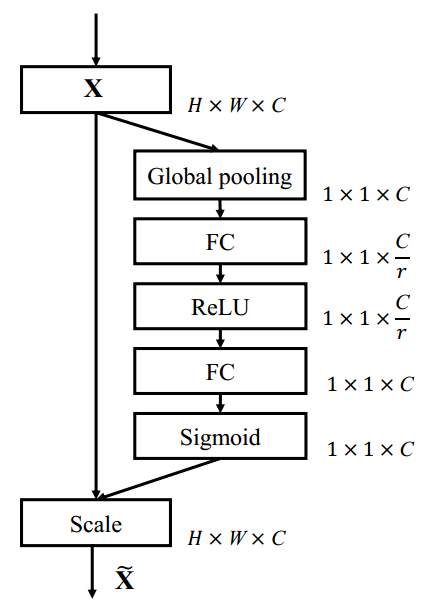

)3D Squeeze-and-Excitation module

Continual 3D version of the Squeeze-and-Excitation module

Squeeze-and-Excitation block. Scale refers to a broadcasted element-wise multiplication. Adapted from: https://arxiv.org/pdf/1709.01507.pdf

se = co.Residual(

co.Sequential(

OrderedDict([

("pool", co.AdaptiveAvgPool3d((1, 1, 1), kernel_size=7)),

("down", co.Conv3d(256, 16, kernel_size=1)),

("act1", nn.ReLU()),

("up", co.Conv3d(16, 256, kernel_size=1)),

("act2", nn.Sigmoid()),

])

),

reduce="mul",

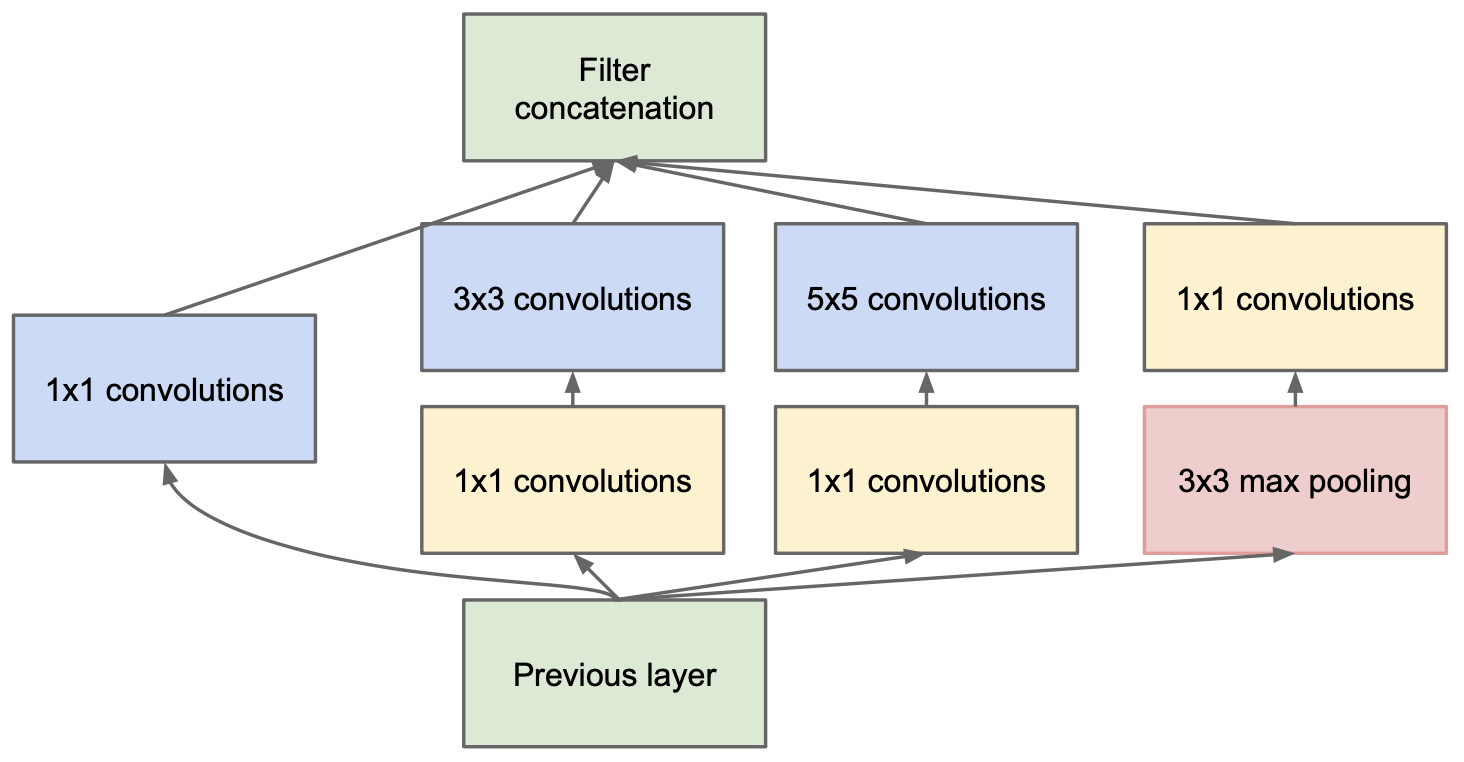

)3D Inception module

Continual 3D version of the Inception module:

def norm_relu(module, channels):

    return co.Sequential(

        module,

        nn.BatchNorm3d(channels),

        nn.ReLU(),

    )

inception_module = co.BroadcastReduce(

    co.Conv3d(192, 64, kernel_size=1),

    co.Sequential(

        norm_relu(co.Conv3d(192, 96, kernel_size=1), 96),

        norm_relu(co.Conv3d(96, 128, kernel_size=3, padding=1), 128),

    ),

    co.Sequential(

        norm_relu(co.Conv3d(192, 16, kernel_size=1), 16),

        norm_relu(co.Conv3d(16, 32, kernel_size=5, padding=2), 32),

    ),

    co.Sequential(

        co.MaxPool3d(kernel_size=(1, 3, 3), padding=(0, 1, 1), stride=1),

        norm_relu(co.Conv3d(192, 32, kernel_size=1), 32),

    ),

    reduce="concat",

)

We enforce a unified ordering of input dimensions for all library modules, namely:

(batch, channel, time, optional_dim2, optional_dim3)

The outputs produced by forward_step and forward_steps are identical to those of forward, provided the same data was input beforehand and state update was enabled. Note that input and output shapes aren't necessarily the same when using forward in PyTorch; they generally depend on the padding, stride, and receptive field of a module.

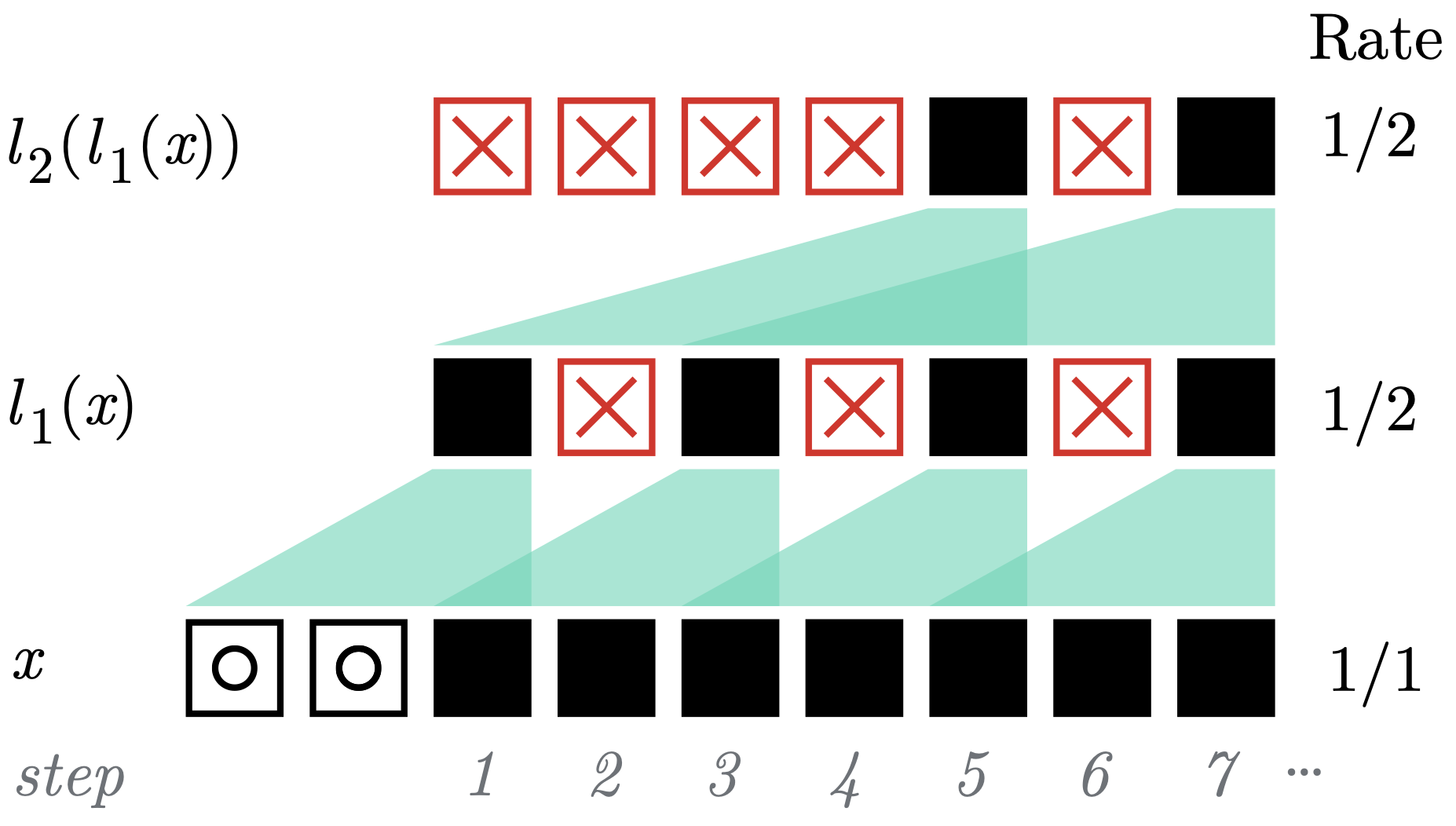

For the forward_step function, this shows up as None-valued outputs. Specifically, modules with a delay (i.e., with receptive fields larger than the padding + 1) will produce None until the input count exceeds the delay. Moreover, stride > 1 will produce Tensor outputs every stride steps and None on the remaining steps. A visual example is shown below:

A mixed example of delay and outputs under padding and stride. Here, we illustrate the step-wise operation of two co module layers, l1 with receptive_field = 3, padding = 2, and stride = 2, and l2 with receptive_field = 3, no padding, and stride = 1. ⧇ denotes a padded zero, ■ is a non-zero step-feature, and ☒ is an empty output.
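The emission pattern described above can be written out as a small schedule function. This is a sketch under stated assumptions, not library code: it takes delay = receptive_field - 1 - padding (inferred from the "receptive field larger than padding + 1" condition in the text), assumes padding does not exceed receptive_field - 1, and drives each layer directly with one input per step rather than chaining l2 after l1. The name step_outputs is hypothetical.

```python
from typing import List, Optional


def step_outputs(
    num_steps: int, receptive_field: int, padding: int, stride: int
) -> List[Optional[int]]:
    """For each input step, return the index of the output emitted at that
    step, or None when the module emits nothing."""
    delay = receptive_field - 1 - padding  # assumed definition of delay
    schedule: List[Optional[int]] = []
    for t in range(num_steps):
        ready = t >= delay and (t - delay) % stride == 0
        schedule.append((t - delay) // stride if ready else None)
    return schedule


# l1 from the caption: receptive_field=3, padding=2, stride=2
l1 = step_outputs(6, receptive_field=3, padding=2, stride=2)
print(l1)  # [0, None, 1, None, 2, None]

# l2 from the caption: receptive_field=3, no padding, stride=1
l2 = step_outputs(6, receptive_field=3, padding=0, stride=1)
print(l2)  # [None, None, 0, 1, 2, 3]
```

In the stacked setting shown in the figure, l2 only receives an input on the steps where l1 emits one, so its own warm-up is stretched accordingly.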

For more information, please see the library paper.

During stream processing, network modules which operate over multiple time-steps, e.g., a convolution with kernel_size > 1 in the temporal dimension, will aggregate and cache state internally. Each module has its own local state, which can be inspected using module.get_state(). During forward_step and forward_steps, the state is updated unless the function is invoked with update_state=False.

A state cleanup can be accomplished via module.clean_state().
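The state life cycle can be illustrated with a toy module in plain Python. The method names mirror those mentioned above (get_state, clean_state, update_state), but the class ToyWindowSum and its state format (a plain list of cached inputs) are hypothetical; the library's actual state representation may differ.

```python
from collections import deque
from typing import List, Optional


class ToyWindowSum:
    """Sums the last `kernel_size` inputs, caching past inputs as state."""

    def __init__(self, kernel_size: int):
        self.kernel_size = kernel_size
        self._buffer: deque = deque(maxlen=kernel_size - 1)

    def get_state(self) -> List[float]:
        """Return a copy of the cached inputs."""
        return list(self._buffer)

    def clean_state(self) -> None:
        """Forget all cached inputs, as if no data had been seen."""
        self._buffer.clear()

    def forward_step(self, x: float, update_state: bool = True) -> Optional[float]:
        window = list(self._buffer) + [x]
        if update_state:
            self._buffer.append(x)
        return sum(window) if len(window) == self.kernel_size else None


m = ToyWindowSum(kernel_size=3)
m.forward_step(1.0)
m.forward_step(2.0)

# Peek at what an input would produce without committing it to the state.
peek = m.forward_step(10.0, update_state=False)
assert peek == 13.0
assert m.get_state() == [1.0, 2.0]

# A regular step advances the state.
real = m.forward_step(3.0)
assert real == 6.0
assert m.get_state() == [2.0, 3.0]

# Start over, e.g. before processing a new stream.
m.clean_state()
assert m.get_state() == []
```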

Continual Inference features a rich collection of modules for defining Continual Inference Networks. Specific care was taken to create CIN versions of the PyTorch modules found in torch.nn:

Pooling

Linear

- co.Linear
- co.Identity: Maps input to output without modification.
- co.Add: Adds a constant value.
- co.Multiply: Multiplies with a constant factor.

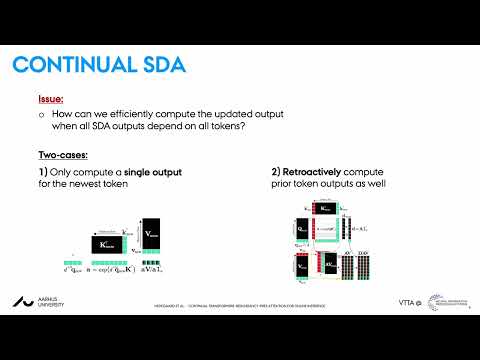

Transformers

- co.TransformerEncoder
- co.TransformerEncoderLayerFactory: Factory function corresponding to nn.TransformerEncoderLayer.
- co.SingleOutputTransformerEncoderLayer: SingleOutputMHA version of nn.TransformerEncoderLayer.
- co.RetroactiveTransformerEncoderLayer: RetroactiveMHA version of nn.TransformerEncoderLayer.
- co.RetroactiveMultiheadAttention: Retroactive version of nn.MultiheadAttention.
- co.SingleOutputMultiheadAttention: Single-output version of nn.MultiheadAttention.
- co.RecyclingPositionalEncoding: Positional Encoding used for Continual Transformers.

Modules for composing and converting networks. Both composition and utility modules can be used for regular definition of PyTorch modules as well.

Composition modules

- co.Sequential: Invoke modules sequentially, passing the output of one module onto the next.
- co.Broadcast: Broadcast one stream to multiple.
- co.Parallel: Invoke modules in parallel given each their input.
- co.ParallelDispatch: Dispatch multiple input streams to multiple output streams flexibly.
- co.Reduce: Reduce multiple input streams to one.
- co.BroadcastReduce: Shorthand for Sequential(Broadcast, Parallel, Reduce).
- co.Residual: Residual connection.
- co.Conditional: Conditionally checks whether to invoke a module (or another) at runtime.

Utility modules

- co.Delay: Pure delay module (e.g., needed in residuals).
- co.Skip: Skip a predefined number of input steps.
- co.Reshape: Reshape non-temporal dimensions.
- co.Lambda: Lambda module which wraps any function.
- co.Constant: Maps input to an output with a constant value.
- co.Zero: Maps input to output of zeros.
- co.One: Maps input to output of ones.

Converters

- co.continual: conversion function from torch.nn modules to co modules.
- co.forward_stepping: functional wrapper, which enhances temporally local torch.nn modules with the forward_stepping functions.

We support drop-in interoperability with the following torch.nn modules:

Activation

nn.Threshold, nn.ReLU, nn.RReLU, nn.Hardtanh, nn.ReLU6, nn.Sigmoid, nn.Hardsigmoid, nn.Tanh, nn.SiLU, nn.Hardswish, nn.ELU, nn.CELU, nn.SELU, nn.GLU, nn.GELU, nn.Hardshrink, nn.LeakyReLU, nn.LogSigmoid, nn.Softplus, nn.Softshrink, nn.PReLU, nn.Softsign, nn.Tanhshrink, nn.Softmin, nn.Softmax, nn.Softmax2d, nn.LogSoftmax

Normalization

- nn.BatchNorm1d
- nn.BatchNorm2d
- nn.BatchNorm3d
- nn.GroupNorm
- nn.InstanceNorm1d (affine=True, track_running_stats=True required)
- nn.InstanceNorm2d (affine=True, track_running_stats=True required)
- nn.InstanceNorm3d (affine=True, track_running_stats=True required)
- nn.LayerNorm (only non-temporal dimensions must be specified)

Dropout

nn.Dropout, nn.Dropout1d, nn.Dropout2d, nn.Dropout3d, nn.AlphaDropout, nn.FeatureAlphaDropout

Benchmark results for 1-view testing on Kinetics400. For reference, X3D-L scores 69.3% top-1 acc with 19.2 GFLOPs per prediction.

| Arch | Avg. pool size | Top 1 (%) | FLOPs (G) per step | FLOPs reduction | Params (M) | Code | Weights |
|---|---|---|---|---|---|---|---|
| CoX3D-L | 64 | 71.6 | 1.25 | 15.3x | 6.2 | link | link |
| CoX3D-M | 64 | 71.0 | 0.33 | 15.1x | 3.8 | link | link |
| CoX3D-S | 64 | 64.7 | 0.17 | 12.1x | 3.8 | link | link |
| CoSlow | 64 | 73.1 | 6.90 | 8.0x | 32.5 | link | link |
| CoI3D | 64 | 64.0 | 5.68 | 5.0x | 28.0 | link | link |

FLOPs reduction is noted relative to non-continual inference. Note that on-hardware inference doesn't reach the speedups that the FLOPs reductions might suggest, due to the overhead of state reads and writes. This overhead matters less for large batch sizes. This applies to all models in the model zoo.

Benchmark results on NTU RGB+D 60 for the joint modality. For reference, ST-GCN achieves 86% X-Sub and 93.4% X-View accuracy with 16.73 GFLOPs per prediction.

| Arch | Receptive field | X-Sub Acc (%) | X-View Acc (%) | FLOPs (G) per step | FLOPs reduction | Params (M) | Code |
|---|---|---|---|---|---|---|---|
| CoST-GCN | 300 | 86.3 | 93.8 | 0.16 | 107.7x | 3.1 | link |
| CoA-GCN | 300 | 84.1 | 92.6 | 0.17 | 108.7x | 3.5 | link |
| CoST-GCN | 300 | 86.3 | 92.4 | 0.15 | 107.6x | 3.1 | link |

Here, you can download pre-trained model weights for the above architectures on NTU RGB+D 60, NTU RGB+D 120, and Kinetics-400 on joint and bone modalities.

Benchmark results on THUMOS14 on top of features extracted using a TSN-ResNet50 backbone pre-trained on Kinetics400. For reference, OadTR achieves 64.4% mAP with 2.5 GFLOPs per prediction.

| Arch | Receptive field | mAP (%) | FLOPs (G) per step | Params (M) | Code |
|---|---|---|---|---|---|
| CoOadTR-b1 | 64 | 64.2 | 0.41 | 15.9 | link |
| CoOadTR-b2 | 64 | 64.4 | 0.01 | 9.6 | link |

The library features complete implementations of the one- and two-block continual transformer encoders as well.

The library modules are built to integrate seamlessly with other PyTorch projects. Specifically, extra care was taken to ensure out-of-the-box compatibility with:

@inproceedings{hedegaard2022colib,

title={Continual Inference: A Library for Efficient Online Inference with Deep Neural Networks in PyTorch},

author={Lukas Hedegaard and Alexandros Iosifidis},

booktitle={European Conference on Computer Vision Workshops (ECCVW)},

year={2022}

}

This work has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 871449 (OpenDR).