I have reinstalled several times following the tutorial, and this problem keeps appearing:

(xtuner0.1.9) root@intern-studio:~/xtuner019/xtuner# xtuner

Traceback (most recent call last):

File "/root/.local/bin/xtuner", line 33, in <module>

sys.exit(load_entry_point('xtuner', 'console_scripts', 'xtuner')())

File "/root/.local/bin/xtuner", line 22, in importlib_load_entry_point

for entry_point in distribution(dist_name).entry_points

File "/share/conda_envs/internlm-base/lib/python3.10/importlib/metadata/__init__.py", line 969, in distribution

return Distribution.from_name(distribution_name)

File "/share/conda_envs/internlm-base/lib/python3.10/importlib/metadata/__init__.py", line 548, in from_name

raise PackageNotFoundError(name)

importlib.metadata.PackageNotFoundError: No package metadata was found for xtuner
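
The traceback shows the `/root/.local/bin/xtuner` launcher running on the standard library of the `/share/conda_envs/internlm-base` interpreter, which finds no metadata for `xtuner`. Below is a minimal sketch for checking this from Python. It uses only the standard-library `importlib.metadata` calls that appear in the traceback; the helper name `describe_install` is made up for illustration.

```python
import sys
from importlib.metadata import PackageNotFoundError, distribution


def describe_install(dist_name: str) -> str:
    """Report whether the running interpreter can see metadata for dist_name."""
    try:
        dist = distribution(dist_name)
    except PackageNotFoundError:
        # Same exception as in the traceback: the package is not installed
        # in any site-packages directory this interpreter searches.
        return f"{dist_name}: no metadata visible to {sys.executable}"
    return f"{dist_name} {dist.version} visible to {sys.executable}"


if __name__ == "__main__":
    # Run this with the same `python` that the failing `xtuner` script uses.
    print(describe_install("xtuner"))
```

If the message names an interpreter other than the one in the activated environment, the launcher and the installed package probably live in different environments.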

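Training used to work. After I changed the parameters and the data, it failed with the error below, so I tried installing directly in the base environment, which produced the error above.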

nohup: ignoring input

[2024-01-11 19:09:22,787] [INFO] [real_accelerator.py:161:get_accelerator] Setting ds_accelerator to cuda (auto detect)

[2024-01-11 19:10:00,577] [INFO] [real_accelerator.py:161:get_accelerator] Setting ds_accelerator to cuda (auto detect)

01/11 19:10:22 - mmengine - INFO -

System environment:

sys.platform: linux

Python: 3.10.13 (main, Sep 11 2023, 13:44:35) [GCC 11.2.0]

CUDA available: True

numpy_random_seed: 716082487

GPU 0: NVIDIA A100-SXM4-80GB

CUDA_HOME: /usr/local/cuda

NVCC: Cuda compilation tools, release 11.7, V11.7.99

GCC: gcc (Ubuntu 9.4.0-1ubuntu1~20.04.2) 9.4.0

PyTorch: 2.0.1

PyTorch compiling details: PyTorch built with:

- GCC 9.3

- C++ Version: 201703

- Intel(R) oneAPI Math Kernel Library Version 2023.1-Product Build 20230303 for Intel(R) 64 architecture applications

- Intel(R) MKL-DNN v2.7.3 (Git Hash 6dbeffbae1f23cbbeae17adb7b5b13f1f37c080e)

- OpenMP 201511 (a.k.a. OpenMP 4.5)

- LAPACK is enabled (usually provided by MKL)

- NNPACK is enabled

- CPU capability usage: AVX2

- CUDA Runtime 11.7

- NVCC architecture flags: -gencode;arch=compute_37,code=sm_37;-gencode;arch=compute_50,code=sm_50;-gencode;arch=compute_60,code=sm_60;-gencode;arch=compute_61,code=sm_61;-gencode;arch=compute_70,code=sm_70;-gencode;arch=compute_75,code=sm_75;-gencode;arch=compute_80,code=sm_80;-gencode;arch=compute_86,code=sm_86;-gencode;arch=compute_37,code=compute_37

- CuDNN 8.5

- Magma 2.6.1

- Build settings: BLAS_INFO=mkl, BUILD_TYPE=Release, CUDA_VERSION=11.7, CUDNN_VERSION=8.5.0, CXX_COMPILER=/opt/rh/devtoolset-9/root/usr/bin/c++, CXX_FLAGS= -D_GLIBCXX_USE_CXX11_ABI=0 -fabi-version=11 -Wno-deprecated -fvisibility-inlines-hidden -DUSE_PTHREADPOOL -DNDEBUG -DUSE_KINETO -DLIBKINETO_NOROCTRACER -DUSE_FBGEMM -DUSE_QNNPACK -DUSE_PYTORCH_QNNPACK -DUSE_XNNPACK -DSYMBOLICATE_MOBILE_DEBUG_HANDLE -O2 -fPIC -Wall -Wextra -Werror=return-type -Werror=non-virtual-dtor -Werror=bool-operation -Wnarrowing -Wno-missing-field-initializers -Wno-type-limits -Wno-array-bounds -Wno-unknown-pragmas -Wunused-local-typedefs -Wno-unused-parameter -Wno-unused-function -Wno-unused-result -Wno-strict-overflow -Wno-strict-aliasing -Wno-error=deprecated-declarations -Wno-stringop-overflow -Wno-psabi -Wno-error=pedantic -Wno-error=redundant-decls -Wno-error=old-style-cast -fdiagnostics-color=always -faligned-new -Wno-unused-but-set-variable -Wno-maybe-uninitialized -fno-math-errno -fno-trapping-math -Werror=format -Werror=cast-function-type -Wno-stringop-overflow, LAPACK_INFO=mkl, PERF_WITH_AVX=1, PERF_WITH_AVX2=1, PERF_WITH_AVX512=1, TORCH_DISABLE_GPU_ASSERTS=ON, TORCH_VERSION=2.0.1, USE_CUDA=ON, USE_CUDNN=ON, USE_EXCEPTION_PTR=1, USE_GFLAGS=OFF, USE_GLOG=OFF, USE_MKL=ON, USE_MKLDNN=ON, USE_MPI=OFF, USE_NCCL=ON, USE_NNPACK=ON, USE_OPENMP=ON, USE_ROCM=OFF,

TorchVision: 0.15.2

OpenCV: 4.9.0

MMEngine: 0.10.2

Runtime environment:

launcher: none

randomness: {'seed': None, 'deterministic': False}

cudnn_benchmark: False

mp_cfg: {'mp_start_method': 'fork', 'opencv_num_threads': 0}

dist_cfg: {'backend': 'nccl'}

seed: None

deterministic: False

Distributed launcher: none

Distributed training: False

GPU number: 1

01/11 19:10:22 - mmengine - INFO - Config:

SYSTEM = ''

accumulative_counts = 16

batch_size = 1

betas = (

0.9,

0.999,

)

custom_hooks = [

dict(

tokenizer=dict(

padding_side='right',

pretrained_model_name_or_path=

'/root/model/Shanghai_AI_Laboratory/internlm-chat-7b',

trust_remote_code=True,

type='transformers.AutoTokenizer.from_pretrained'),

type='xtuner.engine.DatasetInfoHook'),

dict(

evaluation_inputs=[

'请给我介绍五个上海的景点',

'Please tell me five scenic spots in Shanghai',

],

every_n_iters=500,

prompt_template='xtuner.utils.PROMPT_TEMPLATE.internlm_chat',

system='',

tokenizer=dict(

padding_side='right',

pretrained_model_name_or_path=

'/root/model/Shanghai_AI_Laboratory/internlm-chat-7b',

trust_remote_code=True,

type='transformers.AutoTokenizer.from_pretrained'),

type='xtuner.engine.EvaluateChatHook'),

]

data_path = '/root/code/xturn/grade-school-math/grade_school_math/data/new'

dataloader_num_workers = 0

default_hooks = dict(

checkpoint=dict(interval=1, type='mmengine.hooks.CheckpointHook'),

logger=dict(interval=10, type='mmengine.hooks.LoggerHook'),

param_scheduler=dict(type='mmengine.hooks.ParamSchedulerHook'),

sampler_seed=dict(type='mmengine.hooks.DistSamplerSeedHook'),

timer=dict(type='mmengine.hooks.IterTimerHook'))

env_cfg = dict(

cudnn_benchmark=False,

dist_cfg=dict(backend='nccl'),

mp_cfg=dict(mp_start_method='fork', opencv_num_threads=0))

evaluation_freq = 500

evaluation_inputs = [

'请给我介绍五个上海的景点',

'Please tell me five scenic spots in Shanghai',

]

launcher = 'none'

load_from = None

log_level = 'INFO'

lr = 0.0002

max_epochs = 3

max_length = 2048

max_norm = 1

model = dict(

llm=dict(

pretrained_model_name_or_path=

'/root/model/Shanghai_AI_Laboratory/internlm-chat-7b',

quantization_config=dict(

bnb_4bit_compute_dtype='torch.float16',

bnb_4bit_quant_type='nf4',

bnb_4bit_use_double_quant=True,

llm_int8_has_fp16_weight=False,

llm_int8_threshold=6.0,

load_in_4bit=True,

load_in_8bit=False,

type='transformers.BitsAndBytesConfig'),

torch_dtype='torch.float16',

trust_remote_code=True,

type='transformers.AutoModelForCausalLM.from_pretrained'),

lora=dict(

bias='none',

lora_alpha=16,

lora_dropout=0.1,

r=64,

task_type='CAUSAL_LM',

type='peft.LoraConfig'),

type='xtuner.model.SupervisedFinetune')

optim_type = 'bitsandbytes.optim.PagedAdamW32bit'

optim_wrapper = dict(

optimizer=dict(

betas=(

0.9,

0.999,

),

lr=0.0002,

type='bitsandbytes.optim.PagedAdamW32bit',

weight_decay=0),

type='DeepSpeedOptimWrapper')

pack_to_max_length = True

param_scheduler = dict(

T_max=3,

by_epoch=True,

convert_to_iter_based=True,

eta_min=0.0,

type='mmengine.optim.CosineAnnealingLR')

pretrained_model_name_or_path = '/root/model/Shanghai_AI_Laboratory/internlm-chat-7b'

prompt_template = 'xtuner.utils.PROMPT_TEMPLATE.internlm_chat'

randomness = dict(deterministic=False, seed=None)

resume = False

runner_type = 'FlexibleRunner'

strategy = dict(

config=dict(

bf16=dict(enabled=True),

fp16=dict(enabled=False, initial_scale_power=16),

gradient_accumulation_steps='auto',

gradient_clipping='auto',

train_micro_batch_size_per_gpu='auto',

zero_allow_untested_optimizer=True,

zero_force_ds_cpu_optimizer=False,

zero_optimization=dict(overlap_comm=True, stage=2)),

exclude_frozen_parameters=True,

gradient_accumulation_steps=16,

gradient_clipping=1,

train_micro_batch_size_per_gpu=1,

type='DeepSpeedStrategy')

tokenizer = dict(

padding_side='right',

pretrained_model_name_or_path=

'/root/model/Shanghai_AI_Laboratory/internlm-chat-7b',

trust_remote_code=True,

type='transformers.AutoTokenizer.from_pretrained')

train_cfg = dict(by_epoch=True, max_epochs=3, val_interval=1)

train_dataloader = dict(

batch_size=1,

collate_fn=dict(type='xtuner.dataset.collate_fns.default_collate_fn'),

dataset=dict(

dataset=dict(

path=

'/root/code/xturn/grade-school-math/grade_school_math/data/new',

type='datasets.load_dataset'),

dataset_map_fn='xtuner.dataset.map_fns.oasst1_map_fn',

max_length=2048,

pack_to_max_length=True,

remove_unused_columns=True,

shuffle_before_pack=True,

template_map_fn=dict(

template='xtuner.utils.PROMPT_TEMPLATE.internlm_chat',

type='xtuner.dataset.map_fns.template_map_fn_factory'),

tokenizer=dict(

padding_side='right',

pretrained_model_name_or_path=

'/root/model/Shanghai_AI_Laboratory/internlm-chat-7b',

trust_remote_code=True,

type='transformers.AutoTokenizer.from_pretrained'),

type='xtuner.dataset.process_hf_dataset'),

num_workers=0,

sampler=dict(shuffle=True, type='mmengine.dataset.DefaultSampler'))

train_dataset = dict(

dataset=dict(

path='/root/code/xturn/grade-school-math/grade_school_math/data/new',

type='datasets.load_dataset'),

dataset_map_fn='xtuner.dataset.map_fns.oasst1_map_fn',

max_length=2048,

pack_to_max_length=True,

remove_unused_columns=True,

shuffle_before_pack=True,

template_map_fn=dict(

template='xtuner.utils.PROMPT_TEMPLATE.internlm_chat',

type='xtuner.dataset.map_fns.template_map_fn_factory'),

tokenizer=dict(

padding_side='right',

pretrained_model_name_or_path=

'/root/model/Shanghai_AI_Laboratory/internlm-chat-7b',

trust_remote_code=True,

type='transformers.AutoTokenizer.from_pretrained'),

type='xtuner.dataset.process_hf_dataset')

visualizer = None

weight_decay = 0

work_dir = './work_dirs/internlm_chat_7b_qlora_oasst1_e3_copy'

01/11 19:10:23 - mmengine - WARNING - Failed to search registry with scope "mmengine" in the "builder" registry tree. As a workaround, the current "builder" registry in "xtuner" is used to build instance. This may cause unexpected failure when running the built modules. Please check whether "mmengine" is a correct scope, or whether the registry is initialized.

01/11 19:10:24 - mmengine - INFO - Hooks will be executed in the following order:

before_run:

(VERY_HIGH ) RuntimeInfoHook

(BELOW_NORMAL) LoggerHook

before_train:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) IterTimerHook

(NORMAL ) DatasetInfoHook

(NORMAL ) EvaluateChatHook

(VERY_LOW ) CheckpointHook

before_train_epoch:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) IterTimerHook

(NORMAL ) DistSamplerSeedHook

before_train_iter:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) IterTimerHook

after_train_iter:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) IterTimerHook

(NORMAL ) EvaluateChatHook

(BELOW_NORMAL) LoggerHook

(LOW ) ParamSchedulerHook

(VERY_LOW ) CheckpointHook

after_train_epoch:

(NORMAL ) IterTimerHook

(LOW ) ParamSchedulerHook

(VERY_LOW ) CheckpointHook

before_val:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) DatasetInfoHook

before_val_epoch:

(NORMAL ) IterTimerHook

before_val_iter:

(NORMAL ) IterTimerHook

after_val_iter:

(NORMAL ) IterTimerHook

(BELOW_NORMAL) LoggerHook

after_val_epoch:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) IterTimerHook

(BELOW_NORMAL) LoggerHook

(LOW ) ParamSchedulerHook

(VERY_LOW ) CheckpointHook

after_val:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) EvaluateChatHook

after_train:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) EvaluateChatHook

(VERY_LOW ) CheckpointHook

before_test:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) DatasetInfoHook

before_test_epoch:

(NORMAL ) IterTimerHook

before_test_iter:

(NORMAL ) IterTimerHook

after_test_iter:

(NORMAL ) IterTimerHook

(BELOW_NORMAL) LoggerHook

after_test_epoch:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) IterTimerHook

(BELOW_NORMAL) LoggerHook

after_test:

(VERY_HIGH ) RuntimeInfoHook

after_run:

(BELOW_NORMAL) LoggerHook

01/11 19:10:26 - mmengine - WARNING - Dataset Dataset has no metainfo. dataset_meta in visualizer will be None.

quantization_config convert to <class 'transformers.utils.quantization_config.BitsAndBytesConfig'>

Loading checkpoint shards: 0%| | 0/8 [00:00<?, ?it/s]

Loading checkpoint shards: 12%|█▎ | 1/8 [00:03<00:23, 3.34s/it]

Loading checkpoint shards: 25%|██▌ | 2/8 [00:06<00:19, 3.30s/it]

Loading checkpoint shards: 38%|███▊ | 3/8 [00:09<00:16, 3.25s/it]

Loading checkpoint shards: 50%|█████ | 4/8 [00:12<00:12, 3.17s/it]

Loading checkpoint shards: 62%|██████▎ | 5/8 [00:16<00:09, 3.20s/it]

Loading checkpoint shards: 75%|███████▌ | 6/8 [00:20<00:07, 3.65s/it]

Loading checkpoint shards: 88%|████████▊ | 7/8 [00:23<00:03, 3.43s/it]

Loading checkpoint shards: 100%|██████████| 8/8 [00:24<00:00, 2.55s/it]

Loading checkpoint shards: 100%|██████████| 8/8 [00:24<00:00, 3.04s/it]

01/11 19:10:53 - mmengine - INFO - dispatch internlm attn forward

01/11 19:10:53 - mmengine - WARNING - Due to the implementation of the PyTorch version of flash attention, even when the output_attentions flag is set to True, it is not possible to return the attn_weights.

[2024-01-11 19:11:48,486] [INFO] [logging.py:96:log_dist] [Rank -1] DeepSpeed info: version=0.12.6, git-hash=unknown, git-branch=unknown

[2024-01-11 19:11:48,486] [INFO] [comm.py:637:init_distributed] cdb=None

[2024-01-11 19:11:48,486] [INFO] [comm.py:652:init_distributed] Not using the DeepSpeed or dist launchers, attempting to detect MPI environment...

[2024-01-11 19:11:48,653] [INFO] [comm.py:702:mpi_discovery] Discovered MPI settings of world_rank=0, local_rank=0, world_size=1, master_addr=192.168.237.56, master_port=29500

[2024-01-11 19:11:48,653] [INFO] [comm.py:668:init_distributed] Initializing TorchBackend in DeepSpeed with backend nccl

[2024-01-11 19:11:49,014] [INFO] [logging.py:96:log_dist] [Rank 0] DeepSpeed Flops Profiler Enabled: False

[2024-01-11 19:11:49,019] [INFO] [logging.py:96:log_dist] [Rank 0] Using client Optimizer as basic optimizer

[2024-01-11 19:11:49,019] [INFO] [logging.py:96:log_dist] [Rank 0] Removing param_group that has no 'params' in the basic Optimizer

[2024-01-11 19:11:49,089] [INFO] [logging.py:96:log_dist] [Rank 0] DeepSpeed Basic Optimizer = PagedAdamW32bit

[2024-01-11 19:11:49,089] [INFO] [utils.py:56:is_zero_supported_optimizer] Checking ZeRO support for optimizer=PagedAdamW32bit type=<class 'bitsandbytes.optim.adamw.PagedAdamW32bit'>

[2024-01-11 19:11:49,089] [WARNING] [engine.py:1166:do_optimizer_sanity_check] **** You are using ZeRO with an untested optimizer, proceed with caution *****

[2024-01-11 19:11:49,089] [INFO] [logging.py:96:log_dist] [Rank 0] Creating torch.bfloat16 ZeRO stage 2 optimizer

[2024-01-11 19:11:49,090] [INFO] [stage_1_and_2.py:148:init] Reduce bucket size 500,000,000

[2024-01-11 19:11:49,090] [INFO] [stage_1_and_2.py:149:init] Allgather bucket size 500,000,000

[2024-01-11 19:11:49,090] [INFO] [stage_1_and_2.py:150:init] CPU Offload: False

[2024-01-11 19:11:49,090] [INFO] [stage_1_and_2.py:151:init] Round robin gradient partitioning: False

[2024-01-11 19:11:51,497] [INFO] [utils.py:791:see_memory_usage] Before initializing optimizer states

[2024-01-11 19:11:51,498] [INFO] [utils.py:792:see_memory_usage] MA 5.63 GB Max_MA 5.93 GB CA 6.31 GB Max_CA 6 GB

[2024-01-11 19:11:51,498] [INFO] [utils.py:799:see_memory_usage] CPU Virtual Memory: used = 105.09 GB, percent = 5.2%

[2024-01-11 19:11:51,748] [INFO] [utils.py:791:see_memory_usage] After initializing optimizer states

[2024-01-11 19:11:51,748] [INFO] [utils.py:792:see_memory_usage] MA 5.63 GB Max_MA 6.23 GB CA 6.91 GB Max_CA 7 GB

[2024-01-11 19:11:51,749] [INFO] [utils.py:799:see_memory_usage] CPU Virtual Memory: used = 105.32 GB, percent = 5.2%

[2024-01-11 19:11:51,749] [INFO] [stage_1_and_2.py:516:init] optimizer state initialized

[2024-01-11 19:11:51,870] [INFO] [utils.py:791:see_memory_usage] After initializing ZeRO optimizer

[2024-01-11 19:11:51,871] [INFO] [utils.py:792:see_memory_usage] MA 5.63 GB Max_MA 5.63 GB CA 6.91 GB Max_CA 7 GB

[2024-01-11 19:11:51,871] [INFO] [utils.py:799:see_memory_usage] CPU Virtual Memory: used = 105.39 GB, percent = 5.2%

[2024-01-11 19:11:51,882] [INFO] [logging.py:96:log_dist] [Rank 0] DeepSpeed Final Optimizer = PagedAdamW32bit

[2024-01-11 19:11:51,882] [INFO] [logging.py:96:log_dist] [Rank 0] DeepSpeed using client LR scheduler

[2024-01-11 19:11:51,882] [INFO] [logging.py:96:log_dist] [Rank 0] DeepSpeed LR Scheduler = None

[2024-01-11 19:11:51,882] [INFO] [logging.py:96:log_dist] [Rank 0] step=0, skipped=0, lr=[0.0002], mom=[(0.9, 0.999)]

[2024-01-11 19:11:51,886] [INFO] [config.py:984:print] DeepSpeedEngine configuration:

[2024-01-11 19:11:51,886] [INFO] [config.py:988:print] activation_checkpointing_config {

"partition_activations": false,

"contiguous_memory_optimization": false,

"cpu_checkpointing": false,

"number_checkpoints": null,

"synchronize_checkpoint_boundary": false,

"profile": false

}

[2024-01-11 19:11:51,886] [INFO] [config.py:988:print] aio_config ................... {'block_size': 1048576, 'queue_depth': 8, 'thread_count': 1, 'single_submit': False, 'overlap_events': True}

[2024-01-11 19:11:51,886] [INFO] [config.py:988:print] amp_enabled .................. False

[2024-01-11 19:11:51,886] [INFO] [config.py:988:print] amp_params ................... False

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] autotuning_config ............ {

"enabled": false,

"start_step": null,

"end_step": null,

"metric_path": null,

"arg_mappings": null,

"metric": "throughput",

"model_info": null,

"results_dir": "autotuning_results",

"exps_dir": "autotuning_exps",

"overwrite": true,

"fast": true,

"start_profile_step": 3,

"end_profile_step": 5,

"tuner_type": "gridsearch",

"tuner_early_stopping": 5,

"tuner_num_trials": 50,

"model_info_path": null,

"mp_size": 1,

"max_train_batch_size": null,

"min_train_batch_size": 1,

"max_train_micro_batch_size_per_gpu": 1.024000e+03,

"min_train_micro_batch_size_per_gpu": 1,

"num_tuning_micro_batch_sizes": 3

}

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] bfloat16_enabled ............. True

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] checkpoint_parallel_write_pipeline False

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] checkpoint_tag_validation_enabled True

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] checkpoint_tag_validation_fail False

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] comms_config ................. <deepspeed.comm.config.DeepSpeedCommsConfig object at 0x7f0b4ba0bee0>

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] communication_data_type ...... None

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] compression_config ........... {'weight_quantization': {'shared_parameters': {'enabled': False, 'quantizer_kernel': False, 'schedule_offset': 0, 'quantize_groups': 1, 'quantize_verbose': False, 'quantization_type': 'symmetric', 'quantize_weight_in_forward': False, 'rounding': 'nearest', 'fp16_mixed_quantize': False, 'quantize_change_ratio': 0.001}, 'different_groups': {}}, 'activation_quantization': {'shared_parameters': {'enabled': False, 'quantization_type': 'symmetric', 'range_calibration': 'dynamic', 'schedule_offset': 1000}, 'different_groups': {}}, 'sparse_pruning': {'shared_parameters': {'enabled': False, 'method': 'l1', 'schedule_offset': 1000}, 'different_groups': {}}, 'row_pruning': {'shared_parameters': {'enabled': False, 'method': 'l1', 'schedule_offset': 1000}, 'different_groups': {}}, 'head_pruning': {'shared_parameters': {'enabled': False, 'method': 'topk', 'schedule_offset': 1000}, 'different_groups': {}}, 'channel_pruning': {'shared_parameters': {'enabled': False, 'method': 'l1', 'schedule_offset': 1000}, 'different_groups': {}}, 'layer_reduction': {'enabled': False}}

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] curriculum_enabled_legacy .... False

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] curriculum_params_legacy ..... False

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] data_efficiency_config ....... {'enabled': False, 'seed': 1234, 'data_sampling': {'enabled': False, 'num_epochs': 1000, 'num_workers': 0, 'curriculum_learning': {'enabled': False}}, 'data_routing': {'enabled': False, 'random_ltd': {'enabled': False, 'layer_token_lr_schedule': {'enabled': False}}}}

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] data_efficiency_enabled ...... False

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] dataloader_drop_last ......... False

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] disable_allgather ............ False

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] dump_state ................... False

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] dynamic_loss_scale_args ...... None

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] eigenvalue_enabled ........... False

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] eigenvalue_gas_boundary_resolution 1

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] eigenvalue_layer_name ........ bert.encoder.layer

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] eigenvalue_layer_num ......... 0

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] eigenvalue_max_iter .......... 100

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] eigenvalue_stability ......... 1e-06

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] eigenvalue_tol ............... 0.01

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] eigenvalue_verbose ........... False

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] elasticity_enabled ........... False

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] flops_profiler_config ........ {

"enabled": false,

"recompute_fwd_factor": 0.0,

"profile_step": 1,

"module_depth": -1,

"top_modules": 1,

"detailed": true,

"output_file": null

}

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] fp16_auto_cast ............... None

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] fp16_enabled ................. False

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] fp16_master_weights_and_gradients False

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] global_rank .................. 0

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] grad_accum_dtype ............. None

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] gradient_accumulation_steps .. 16

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] gradient_clipping ............ 1

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] gradient_predivide_factor .... 1.0

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] graph_harvesting ............. False

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] hybrid_engine ................ enabled=False max_out_tokens=512 inference_tp_size=1 release_inference_cache=False pin_parameters=True tp_gather_partition_size=8

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] initial_dynamic_scale ........ 1

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] load_universal_checkpoint .... False

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] loss_scale ................... 1.0

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] memory_breakdown ............. False

[2024-01-11 19:11:51,887] [INFO] [config.py:988:print] mics_hierarchial_params_gather False

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] mics_shard_size .............. -1

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] monitor_config ............... tensorboard=TensorBoardConfig(enabled=False, output_path='', job_name='DeepSpeedJobName') wandb=WandbConfig(enabled=False, group=None, team=None, project='deepspeed') csv_monitor=CSVConfig(enabled=False, output_path='', job_name='DeepSpeedJobName') enabled=False

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] nebula_config ................ {

"enabled": false,

"persistent_storage_path": null,

"persistent_time_interval": 100,

"num_of_version_in_retention": 2,

"enable_nebula_load": true,

"load_path": null

}

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] optimizer_legacy_fusion ...... False

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] optimizer_name ............... None

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] optimizer_params ............. None

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] pipeline ..................... {'stages': 'auto', 'partition': 'best', 'seed_layers': False, 'activation_checkpoint_interval': 0, 'pipe_partitioned': True, 'grad_partitioned': True}

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] pld_enabled .................. False

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] pld_params ................... False

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] prescale_gradients ........... False

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] scheduler_name ............... None

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] scheduler_params ............. None

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] seq_parallel_communication_data_type torch.float32

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] sparse_attention ............. None

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] sparse_gradients_enabled ..... False

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] steps_per_print .............. 10000000000000

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] train_batch_size ............. 16

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] train_micro_batch_size_per_gpu 1

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] use_data_before_expert_parallel False

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] use_node_local_storage ....... False

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] wall_clock_breakdown ......... False

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] weight_quantization_config ... None

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] world_size ................... 1

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] zero_allow_untested_optimizer True

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] zero_config .................. stage=2 contiguous_gradients=True reduce_scatter=True reduce_bucket_size=500,000,000 use_multi_rank_bucket_allreduce=True allgather_partitions=True allgather_bucket_size=500,000,000 overlap_comm=True load_from_fp32_weights=True elastic_checkpoint=False offload_param=None offload_optimizer=None sub_group_size=1,000,000,000 cpu_offload_param=None cpu_offload_use_pin_memory=None cpu_offload=None prefetch_bucket_size=50,000,000 param_persistence_threshold=100,000 model_persistence_threshold=sys.maxsize max_live_parameters=1,000,000,000 max_reuse_distance=1,000,000,000 gather_16bit_weights_on_model_save=False stage3_gather_fp16_weights_on_model_save=False ignore_unused_parameters=True legacy_stage1=False round_robin_gradients=False zero_hpz_partition_size=1 zero_quantized_weights=False zero_quantized_nontrainable_weights=False zero_quantized_gradients=False mics_shard_size=-1 mics_hierarchical_params_gather=False memory_efficient_linear=True pipeline_loading_checkpoint=False override_module_apply=True

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] zero_enabled ................. True

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] zero_force_ds_cpu_optimizer .. False

[2024-01-11 19:11:51,888] [INFO] [config.py:988:print] zero_optimization_stage ...... 2

[2024-01-11 19:11:51,888] [INFO] [config.py:974:print_user_config] json = {

"gradient_accumulation_steps": 16,

"train_micro_batch_size_per_gpu": 1,

"gradient_clipping": 1,

"zero_allow_untested_optimizer": true,

"zero_force_ds_cpu_optimizer": false,

"zero_optimization": {

"stage": 2,

"overlap_comm": true

},

"fp16": {

"enabled": false,

"initial_scale_power": 16

},

"bf16": {

"enabled": true

},

"steps_per_print": 1.000000e+13

}

Traceback (most recent call last):

File "/root/xtuner019/xtuner/xtuner/tools/train.py", line 260, in <module>

main()

File "/root/xtuner019/xtuner/xtuner/tools/train.py", line 256, in main

runner.train()

File "/root/.local/lib/python3.10/site-packages/mmengine/runner/_flexible_runner.py", line 1182, in train

self.strategy.prepare(

File "/root/.local/lib/python3.10/site-packages/mmengine/_strategy/deepspeed.py", line 389, in prepare

self.param_schedulers = self.build_param_scheduler(

File "/root/.local/lib/python3.10/site-packages/mmengine/_strategy/base.py", line 658, in build_param_scheduler

param_schedulers = self._build_param_scheduler(

File "/root/.local/lib/python3.10/site-packages/mmengine/_strategy/base.py", line 563, in _build_param_scheduler

PARAM_SCHEDULERS.build(

File "/root/.local/lib/python3.10/site-packages/mmengine/registry/registry.py", line 570, in build

return self.build_func(cfg, *args, **kwargs, registry=self)

File "/root/.local/lib/python3.10/site-packages/mmengine/registry/build_functions.py", line 294, in build_scheduler_from_cfg

return scheduler_cls.build_iter_from_epoch( # type: ignore

File "/root/.local/lib/python3.10/site-packages/mmengine/optim/scheduler/param_scheduler.py", line 663, in build_iter_from_epoch

assert epoch_length is not None and epoch_length > 0,

AssertionError: epoch_length must be a positive integer, but got 0.
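
One reading of this assertion is that the training dataloader came out with length 0, meaning no samples survived loading and preprocessing of `data_path`. Below is a rough first check, assuming the data directory holds JSON Lines files (an assumption; the actual file format is not shown in the log). The helpers `count_jsonl_records` and `epoch_length` are hypothetical names for illustration and are not part of xtuner or mmengine. The count that matters to the scheduler is the one after the map function and packing, so a non-zero raw count does not rule the problem out.

```python
import json
import math
import tempfile
from pathlib import Path


def count_jsonl_records(data_dir: str) -> int:
    """Count non-empty JSON lines in every *.jsonl file directly under data_dir."""
    total = 0
    for path in sorted(Path(data_dir).glob("*.jsonl")):
        with path.open(encoding="utf-8") as fh:
            for line in fh:
                if line.strip():
                    json.loads(line)  # raises ValueError on a malformed record
                    total += 1
    return total


def epoch_length(num_samples: int, batch_size: int) -> int:
    """Batches per epoch for a loader that keeps the last partial batch."""
    return math.ceil(num_samples / batch_size)


if __name__ == "__main__":
    # An empty directory stands in for a data path that yields no samples.
    with tempfile.TemporaryDirectory() as tmp:
        print(epoch_length(count_jsonl_records(tmp), batch_size=1))  # prints 0
```

With zero samples the epoch length is 0, which is the value the assertion rejects when the epoch-based scheduler is converted to an iteration-based one.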

Could someone please take a look?

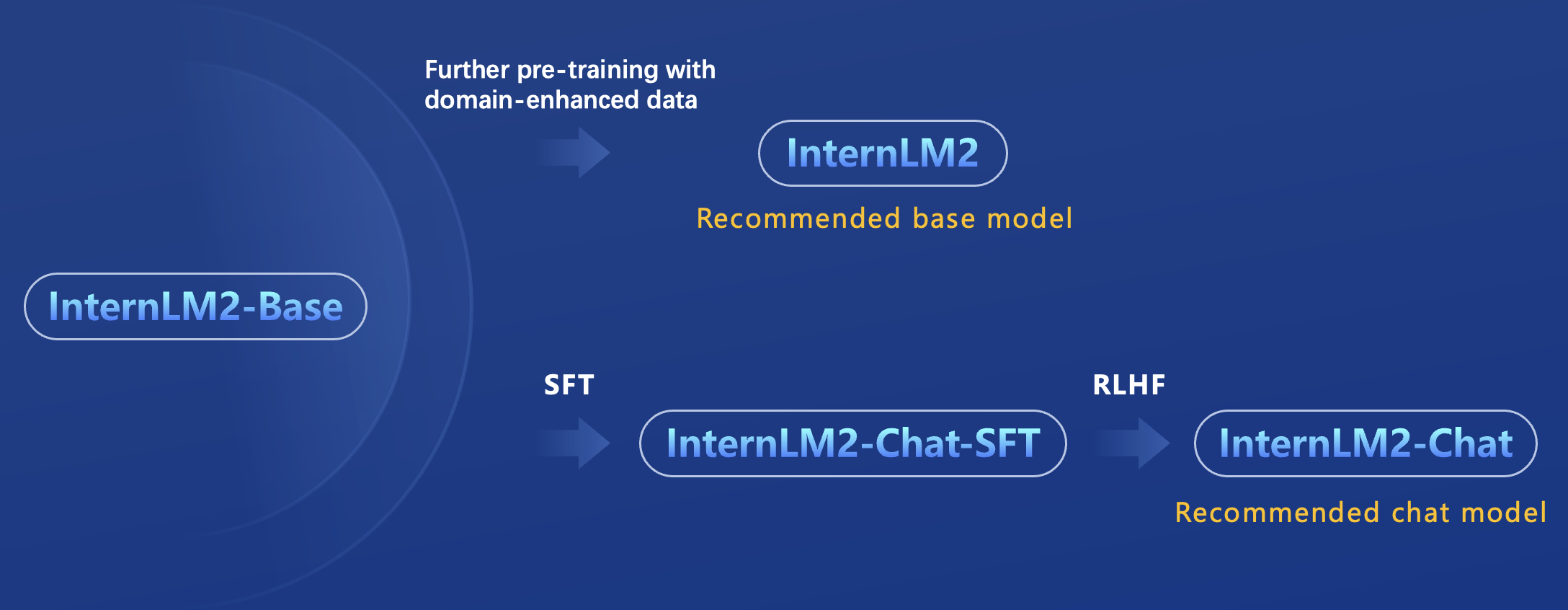
