Use this script to get your entire site indexed on Google in less than 48 hours. No tricks, no hacks, just a simple script and a Google API.

You can read more about the motivation behind it and how it works in this blog post: https://seogets.com/blog/google-indexing-script

Important

- Indexing != Ranking. This will not help your page rank on Google; it only lets Google know that your pages exist.

- This script uses the Google Indexing API. We do not recommend using this script on spam/low-quality content.

- Install Node.js

- An account on Google Search Console with the verified sites you want to index

- An account on Google Cloud

- Follow this guide from Google. By the end of it, you should have a project on Google Cloud with the Indexing API enabled and a service account with the Owner permission on your sites.

- Make sure you enable both Google Search Console API and Web Search Indexing API on your Google Project ➤ API Services ➤ Enabled API & Services.

- Download the JSON file with the credentials of your service account and save it in the same folder as the script. The file should be named service_account.json.
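Before moving on, it can help to confirm that the downloaded key file actually contains the two fields this README relies on later, client_email and private_key. The following is a minimal sketch and not part of the script itself; the helper name missingCredentialFields and the sample values are hypothetical.

```javascript
// Fields this README refers to later on (client_email and private_key).
const REQUIRED_FIELDS = ["client_email", "private_key"];

// Hypothetical helper: returns the names of required fields that are
// absent or empty in the given service account JSON text.
function missingCredentialFields(jsonText) {
  const account = JSON.parse(jsonText); // throws SyntaxError on invalid JSON
  if (account === null || typeof account !== "object") {
    return [...REQUIRED_FIELDS];
  }
  return REQUIRED_FIELDS.filter((field) => {
    const value = account[field];
    return typeof value !== "string" || value.trim() === "";
  });
}

// Placeholder content standing in for a real service_account.json.
const sampleAccountText = JSON.stringify({
  client_email: "your-client-email",
  private_key: "your-private-key",
});

const missingFields = missingCredentialFields(sampleAccountText);
console.log(
  missingFields.length === 0
    ? "credentials look complete"
    : `missing: ${missingFields.join(", ")}`
);
```

For a real file, the text could be read with fs.readFileSync("service_account.json", "utf8") from Node's built-in fs module and passed to the helper.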

Install the CLI globally on your machine.

npm i -g google-indexing-script

Alternatively, clone the repository to your machine.

git clone https://github.com/goenning/google-indexing-script.git

cd google-indexing-script

Install and build the project.

npm install

npm run build

npm i -g .

Note

Ensure you are using an up-to-date Node.js version, with a preference for v20 or later. Check your current version with node -v.
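The same check can be done from inside Node.js. This is a small sketch using the built-in process.version string; the helper names nodeMajor and meetsMinimum are hypothetical, and the threshold of 20 simply mirrors the preference stated above.

```javascript
// Hypothetical helper: extracts the major version from a string such as
// "v20.11.1" (the format of process.version) or "20.11.1".
function nodeMajor(version) {
  const match = /^v?(\d+)\./.exec(version);
  if (!match) {
    throw new Error(`Unrecognized Node.js version: ${version}`);
  }
  return Number(match[1]);
}

// Hypothetical helper: true when the version's major number is at least
// minimumMajor (20 by default, matching the note above).
function meetsMinimum(version, minimumMajor = 20) {
  return nodeMajor(version) >= minimumMajor;
}

if (!meetsMinimum(process.version)) {
  console.warn(`Node.js ${process.version} detected; v20 or later is preferred.`);
}
```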

With service_account.json (recommended)

Create a .gis directory in your home folder and move the service_account.json file there.

mkdir ~/.gis

mv service_account.json ~/.gis

Run the script with the domain or url you want to index.

gis <domain or url>

# example

gis seogets.com

Here are some other ways to run the script:

# custom path to service_account.json

gis seogets.com --path /path/to/service_account.json

# long version command

google-indexing-script seogets.com

# cloned repository

npm run index seogets.com

With environment variables

Open service_account.json and copy the client_email and private_key values.
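Instead of copying the two values by hand, they could be mapped onto the GIS_CLIENT_EMAIL and GIS_PRIVATE_KEY variables used in this section programmatically. This is only a sketch: toGisEnv is a hypothetical helper, and the sample values are placeholders.

```javascript
// Hypothetical helper: copies client_email and private_key from a parsed
// service account object into the two environment variables this section uses.
function toGisEnv(account, baseEnv = {}) {
  const { client_email: clientEmail, private_key: privateKey } = account;
  if (!clientEmail || !privateKey) {
    throw new Error("service account must contain client_email and private_key");
  }
  // Spread baseEnv first so existing variables such as PATH are preserved.
  return {
    ...baseEnv,
    GIS_CLIENT_EMAIL: clientEmail,
    GIS_PRIVATE_KEY: privateKey,
  };
}

// Placeholder values standing in for the contents of service_account.json.
const gisEnv = toGisEnv(
  { client_email: "your-client-email", private_key: "your-private-key" },
  { PATH: "/usr/bin" }
);
console.log(Object.keys(gisEnv));
```

The resulting object could then be passed as the env option of child_process.spawn, with process.env as baseEnv, to launch gis with those variables set.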

Run the script with the domain or url you want to index.

GIS_CLIENT_EMAIL=your-client-email GIS_PRIVATE_KEY=your-private-key gis seogets.com

With arguments (not recommended)

Open service_account.json and copy the client_email and private_key values.

Once you have the values, run the script with the domain or url you want to index, the client email and the private key.

gis seogets.com --client-email your-client-email --private-key your-private-key

As an npm module

You can also use the script as an npm module in your own project.

npm i google-indexing-script

import { index } from 'google-indexing-script'
import serviceAccount from './service_account.json'

index('seogets.com', {
  client_email: serviceAccount.client_email,
  private_key: serviceAccount.private_key
})
  .then(console.log)
  .catch(console.error)

Read the API documentation for more details.
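To index several sites from one program, the call above could be wrapped in a small loop. The sketch below assumes only what the example shows, namely that index takes a site and a credentials object and returns a promise. indexAll is a hypothetical helper, and the indexing function is passed in as a parameter so that the stand-in used here (fakeIndex, also hypothetical) lets the sketch run on its own.

```javascript
// Hypothetical helper: calls indexFn for each site in order and records
// whether each call resolved or rejected, without stopping at the first failure.
async function indexAll(sites, credentials, indexFn) {
  const results = [];
  for (const site of sites) {
    try {
      const value = await indexFn(site, credentials);
      results.push({ site, ok: true, value });
    } catch (error) {
      results.push({ site, ok: false, error });
    }
  }
  return results;
}

// Stand-in for the real index function; it does not contact Google.
const fakeIndex = async (site) => {
  if (!site.includes(".")) {
    throw new Error(`invalid site: ${site}`);
  }
  return `submitted ${site}`;
};

indexAll(
  ["seogets.com", "localhost"],
  { client_email: "your-client-email", private_key: "your-private-key" },
  fakeIndex
).then((results) => {
  for (const result of results) {
    console.log(result.site, result.ok ? "ok" : `failed: ${result.error.message}`);
  }
});
```

In a real project, the index function imported from google-indexing-script would be passed in place of fakeIndex. Running the sites one after another keeps the output easy to follow; whether parallel calls are appropriate is not something this README states.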

Important

- Your site must have 1 or more sitemaps submitted to Google Search Console. Otherwise, the script will not be able to find the pages to index.

- You can run the script as many times as you want. It will only index the pages that are not already indexed.

- Sites with a large number of pages might take a while to index, so be patient.

If you prefer a hands-free and less technical solution, you can use a SaaS platform like TagParrot.

MIT License

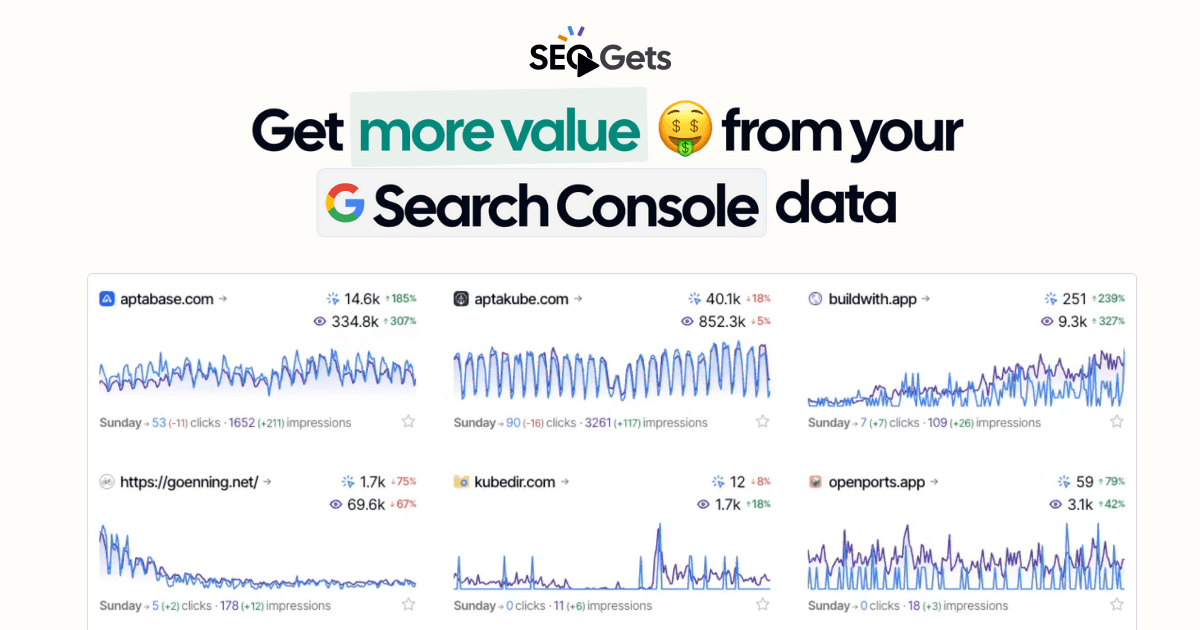

This project is sponsored by SEO Gets