[1/2] c++ -MMD -MF flatten_unflatten.o.d -DTORCH_EXTENSION_NAME=utils -DTORCH_API_INCLUDE_EXTENSION_H -DPYBIND11_COMPILER_TYPE=\"_gcc\" -DPYBIND11_STDLIB=\"_libstdcpp\" -DPYBIND11_BUILD_ABI=\"_cxxabi1011\" -isystem /root/anaconda3/envs/exbert/lib/python3.8/site-packages/torch/include -isystem /root/anaconda3/envs/exbert/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -isystem /root/anaconda3/envs/exbert/lib/python3.8/site-packages/torch/include/TH -isystem /root/anaconda3/envs/exbert/lib/python3.8/site-packages/torch/include/THC -isystem /root/anaconda3/envs/exbert/include/python3.8 -D_GLIBCXX_USE_CXX11_ABI=0 -fPIC -std=c++14 -c /root/anaconda3/envs/exbert/lib/python3.8/site-packages/deepspeed/ops/csrc/utils/flatten_unflatten.cpp -o flatten_unflatten.o
FAILED: flatten_unflatten.o
c++ -MMD -MF flatten_unflatten.o.d -DTORCH_EXTENSION_NAME=utils -DTORCH_API_INCLUDE_EXTENSION_H -DPYBIND11_COMPILER_TYPE=\"_gcc\" -DPYBIND11_STDLIB=\"_libstdcpp\" -DPYBIND11_BUILD_ABI=\"_cxxabi1011\" -isystem /root/anaconda3/envs/exbert/lib/python3.8/site-packages/torch/include -isystem /root/anaconda3/envs/exbert/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -isystem /root/anaconda3/envs/exbert/lib/python3.8/site-packages/torch/include/TH -isystem /root/anaconda3/envs/exbert/lib/python3.8/site-packages/torch/include/THC -isystem /root/anaconda3/envs/exbert/include/python3.8 -D_GLIBCXX_USE_CXX11_ABI=0 -fPIC -std=c++14 -c /root/anaconda3/envs/exbert/lib/python3.8/site-packages/deepspeed/ops/csrc/utils/flatten_unflatten.cpp -o flatten_unflatten.o
c++: error: unrecognized command line option ‘-std=c++14’
ninja: build stopped: subcommand failed.

Traceback (most recent call last):
  File "/root/anaconda3/envs/exbert/lib/python3.8/site-packages/torch/utils/cpp_extension.py", line 1717, in _run_ninja_build
    subprocess.run(
  File "/root/anaconda3/envs/exbert/lib/python3.8/subprocess.py", line 516, in run
    raise CalledProcessError(retcode, process.args,
subprocess.CalledProcessError: Command '['ninja', '-v']' returned non-zero exit status 1.

The above exception was the direct cause of the following exception:

Traceback (most recent call last):
  File "run_pretraining.py", line 712, in <module>
    main()
  File "run_pretraining.py", line 692, in main
    model, optimizer, lr_scheduler = prepare_model_and_optimizer(args)
  File "run_pretraining.py", line 439, in prepare_model_and_optimizer
    model.network, optimizer, _, lr_scheduler = deepspeed.initialize(
  File "/root/anaconda3/envs/exbert/lib/python3.8/site-packages/deepspeed/__init__.py", line 126, in initialize
    engine = DeepSpeedEngine(args=args,
  File "/root/anaconda3/envs/exbert/lib/python3.8/site-packages/deepspeed/runtime/engine.py", line 223, in __init__
    util_ops = UtilsBuilder().load()
  File "/root/anaconda3/envs/exbert/lib/python3.8/site-packages/deepspeed/ops/op_builder/builder.py", line 239, in load
    return self.jit_load(verbose)
  File "/root/anaconda3/envs/exbert/lib/python3.8/site-packages/deepspeed/ops/op_builder/builder.py", line 267, in jit_load
    op_module = load(
  File "/root/anaconda3/envs/exbert/lib/python3.8/site-packages/torch/utils/cpp_extension.py", line 1124, in load
    return _jit_compile(
  File "/root/anaconda3/envs/exbert/lib/python3.8/site-packages/torch/utils/cpp_extension.py", line 1337, in _jit_compile
    _write_ninja_file_and_build_library(
  File "/root/anaconda3/envs/exbert/lib/python3.8/site-packages/torch/utils/cpp_extension.py", line 1449, in _write_ninja_file_and_build_library
    _run_ninja_build(
  File "/root/anaconda3/envs/exbert/lib/python3.8/site-packages/torch/utils/cpp_extension.py", line 1733, in _run_ninja_build
    raise RuntimeError(message) from e
RuntimeError: Error building extension 'utils'

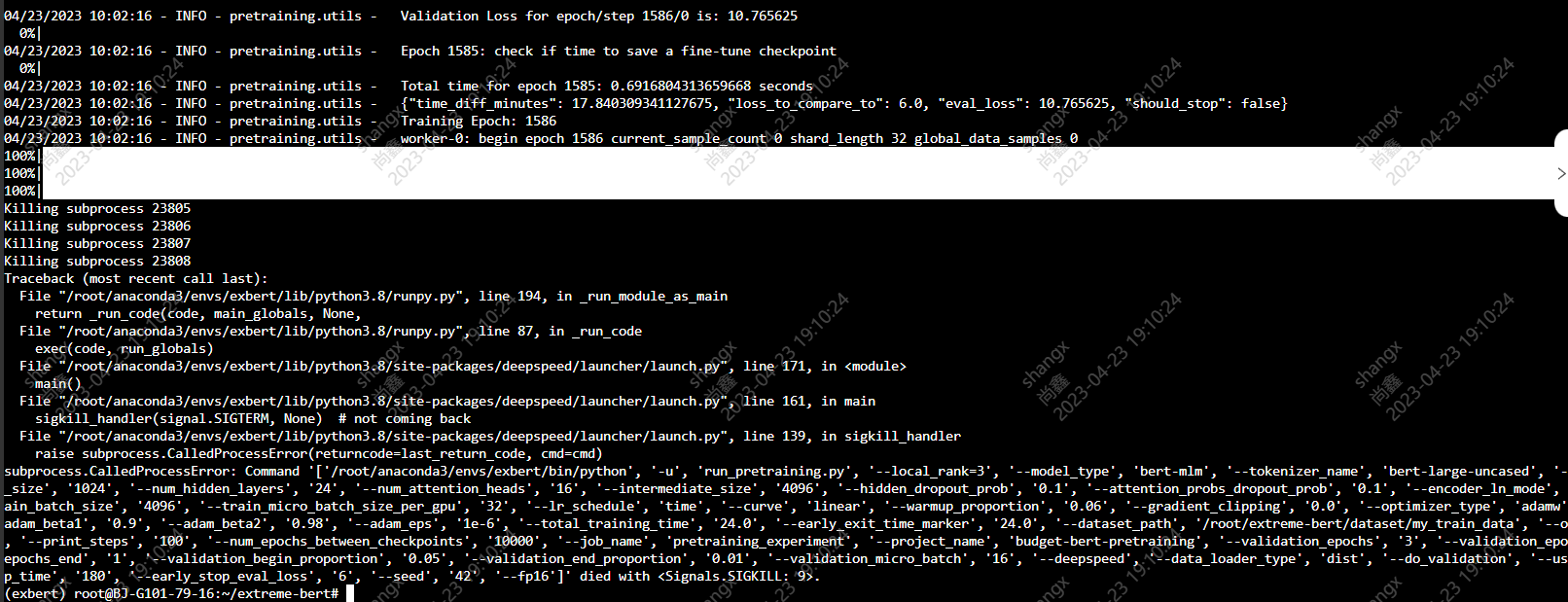

Killing subprocess 860
Traceback (most recent call last):
  File "/root/anaconda3/envs/exbert/lib/python3.8/runpy.py", line 194, in _run_module_as_main
    return _run_code(code, main_globals, None,
  File "/root/anaconda3/envs/exbert/lib/python3.8/runpy.py", line 87, in _run_code
    exec(code, run_globals)
  File "/root/anaconda3/envs/exbert/lib/python3.8/site-packages/deepspeed/launcher/launch.py", line 171, in <module>
    main()
  File "/root/anaconda3/envs/exbert/lib/python3.8/site-packages/deepspeed/launcher/launch.py", line 161, in main
    sigkill_handler(signal.SIGTERM, None) # not coming back
  File "/root/anaconda3/envs/exbert/lib/python3.8/site-packages/deepspeed/launcher/launch.py", line 139, in sigkill_handler
    raise subprocess.CalledProcessError(returncode=last_return_code, cmd=cmd)
subprocess.CalledProcessError: Command '['/root/anaconda3/envs/exbert/bin/python', '-u', 'run_pretraining.py', '--local_rank=0', '--model_type', 'bert-mlm', '--tokenizer_name', 'bert-large-uncased', '--hidden_act', 'gelu', '--hidden_size', '1024', '--num_hidden_layers', '24', '--num_attention_heads', '16', '--intermediate_size', '4096', '--hidden_dropout_prob', '0.1', '--attention_probs_dropout_prob', '0.1', '--encoder_ln_mode', 'pre-ln', '--lr', '1e-3', '--train_batch_size', '4096', '--train_micro_batch_size_per_gpu', '32', '--lr_schedule', 'time', '--curve', 'linear', '--warmup_proportion', '0.06', '--gradient_clipping', '0.0', '--optimizer_type', 'adamw', '--weight_decay', '0.01', '--adam_beta1', '0.9', '--adam_beta2', '0.98', '--adam_eps', '1e-6', '--total_training_time', '24.0', '--early_exit_time_marker', '24.0', '--dataset_path', './example_multisent_perline.txt', '--output_dir', '/tmp/training-out', '--print_steps', '100', '--num_epochs_between_checkpoints', '10000', '--job_name', 'pretraining_experiment', '--project_name', 'budget-bert-pretraining', '--validation_epochs', '3', '--validation_epochs_begin', '1', '--validation_epochs_end', '1', '--validation_begin_proportion', '0.05', '--validation_end_proportion', '0.01', '--validation_micro_batch', '16', '--deepspeed', '--data_loader_type', 'dist', '--do_validation', '--use_early_stopping', '--early_stop_time', '180', '--early_stop_eval_loss', '6', '--seed', '42', '--fp16']' returned non-zero exit status 1.
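Everything after the first failure is fallout: DeepSpeed JIT-builds its `utils` op through `torch.utils.cpp_extension.load`, ninja invokes `c++ ... -std=c++14`, and the compiler rejects that flag with `unrecognized command line option ‘-std=c++14’`. That message usually means the `c++` on PATH is an old GCC (for example the 4.8 series) that predates the `-std=c++14` spelling. The sketch below is a hypothetical diagnostic, not part of DeepSpeed or PyTorch: `unrecognized_flags` and `compiler_accepts_flag` are names made up here. It pulls rejected flags out of a log and probes whether a given compiler accepts a flag by compiling a trivial program from stdin. Probing `$CXX` first and falling back to `c++` is an assumption about which compiler the build uses.

```python
import os
import re
import shutil
import subprocess
from typing import List

# Matches GCC's "unrecognized command line option" message with either
# typographic quotes (‘…’) or ASCII quotes around the rejected flag.
_UNRECOGNIZED = re.compile(
    r"unrecognized command[- ]line option\s+[‘'`\"]([^’'`\"]+)[’'`\"]"
)


def unrecognized_flags(log_text: str) -> List[str]:
    """Return every compiler flag the build log reports as unrecognized."""
    return _UNRECOGNIZED.findall(log_text)


def compiler_accepts_flag(compiler: str, flag: str) -> bool:
    """Return True if `compiler` compiles a trivial C++ program with `flag`."""
    if shutil.which(compiler) is None:
        return False  # compiler not found on PATH
    # -x c++ treats stdin ("-") as C++ source; -fsyntax-only writes no output files.
    result = subprocess.run(
        [compiler, flag, "-x", "c++", "-fsyntax-only", "-"],
        input=b"int main() { return 0; }\n",
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
    )
    return result.returncode == 0


if __name__ == "__main__":
    # Assumption: probe $CXX if set, otherwise the plain `c++` seen in the log.
    cxx = os.environ.get("CXX", "c++")
    print(cxx, "accepts -std=c++14:", compiler_accepts_flag(cxx, "-std=c++14"))
```

If the probe reports `False` for the compiler the build actually uses, the extension cannot compile until a compiler that understands `-std=c++14` is on PATH. Which compiler the DeepSpeed build picks up is not shown in this log.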