elastic / ember

Elastic Malware Benchmark for Empowering Researchers

License: Other

Is there a version of the feature extraction script written in C++?

Could you please provide a file that maps each binary's sha256 hash to its md5 hash? That would let me check the md5 hashes against the VirusShare md5 hash list directly, instead of searching for each sha256 hash on VirusShare and downloading the file if it is available.

A JSON file in the following format would be enough:

{

"sha256_1":"md5_1",

"sha256_2":"md5_2",

.

.

.

}
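
For anyone who already holds a set of binaries locally, a mapping in this shape can be generated with the standard library alone. This is only a sketch: `build_hash_map` and `write_hash_map` are hypothetical helper names, not part of the ember package, and the sketch does not answer the request itself, which would need the maintainers' copies of the files.

```python
import hashlib
import json
from pathlib import Path


def build_hash_map(paths):
    """Return {sha256_hex: md5_hex} for each file in `paths` (hypothetical helper)."""
    mapping = {}
    for path in paths:
        data = Path(path).read_bytes()
        mapping[hashlib.sha256(data).hexdigest()] = hashlib.md5(data).hexdigest()
    return mapping


def write_hash_map(paths, out_path):
    """Write the mapping as a JSON object with one sha256 key per binary."""
    Path(out_path).write_text(json.dumps(build_hash_map(paths), indent=2))
```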

I am trying to run malconv.py on the EMBER dataset. How do I generate a URL to fetch file contents by sha256 hash? And what is the data format of 'ember_training.csv.gz' and 'ember_test.csv.gz' in malconv.py? Thank you in advance.

In the "train_ember.py" script, line 20 reads ("if not (os.path.exists(X_train_path) and os.path.exists(y_train_path))"). I think this is meant to check whether the training dataset files already exist, and that when they do exist the condition is true and the vectorized features are created.

Could the "not" in this line be removed?

Please forgive me if I misunderstand about this.
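
One way to read the quoted condition is "vectorize only when the .dat files are missing", which is why the `not` matters. The sketch below illustrates that reading under stated assumptions: `ensure_vectorized` is a hypothetical name, and the `vectorize` argument stands in for `ember.create_vectorized_features`. The file names are the ones that appear in tracebacks elsewhere in this thread.

```python
import os


def ensure_vectorized(data_dir, vectorize):
    """Call `vectorize(data_dir)` only when the .dat files are missing.

    `vectorize` is a stand-in for ember.create_vectorized_features.
    Returns True if vectorization ran, False if it was skipped.
    """
    X_train_path = os.path.join(data_dir, "X_train.dat")
    y_train_path = os.path.join(data_dir, "y_train.dat")
    # The `not` turns "both files exist" into "at least one file is absent".
    if not (os.path.exists(X_train_path) and os.path.exists(y_train_path)):
        vectorize(data_dir)
        return True
    return False
```

Without the `not`, the expensive vectorization step would run only when its output was already on disk.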

Hi,

How can I run Malconv on the EMBER dataset? I want to attempt this competition (https://www.elastic.co/blog/machine-learning-static-evasion-competition).

So the Malconv model cannot be trained with the vectorized dataset provided on your GitHub page? How can I get access to the files?

The LIEF versions currently available for Anaconda are 0.8.0.post7, 0.8.1.post1, 0.8.2.post1, 0.8.3.post3 and 0.9.0; it does not find 0.8.3. Can the requirement be updated to 0.9.0?

Thank you for the awesome work. My question is how to retrain the model based on a few new samples? Is that possible with the existing code? Or do I need to train from scratch?

Hello, this code in ember2018-notebook.ipynb does not display anything. Could you help me?

plotdf = emberdf.copy()

gbdf = plotdf.groupby(["label", "subset"]).count().reset_index()

alt.Chart(gbdf).mark_bar().encode(

alt.X('subset:O', axis=alt.Axis(title='Subset')),

alt.Y('sum(sha256):Q', axis=alt.Axis(title='Number of samples')),

alt.Color('label:N', scale=alt.Scale(range=["#00b300", "#3333ff", "#ff3333"]), legend=alt.Legend(values=["unlabeled", "benign", "malicious"]))

)

error message:

`KeyError Traceback (most recent call last)

in

1 plotdf = emberdf.copy()

----> 2 gbdf = plotdf.groupby(["label", "subset"]).count().reset_index()

3 alt.Chart(gbdf).mark_bar().encode(

4 alt.X('subset:O', axis=alt.Axis(title='Subset')),

5 alt.Y('sum(sha256):Q', axis=alt.Axis(title='Number of samples')),

~/miniconda3/envs/ember/lib/python3.7/site-packages/pandas/core/frame.py in groupby(self, by, axis, level, as_index, sort, group_keys, squeeze, observed, dropna)

6523 squeeze=squeeze,

6524 observed=observed,

-> 6525 dropna=dropna,

6526 )

6527

~/miniconda3/envs/ember/lib/python3.7/site-packages/pandas/core/groupby/groupby.py in init(self, obj, keys, axis, level, grouper, exclusions, selection, as_index, sort, group_keys, squeeze, observed, mutated, dropna)

531 observed=observed,

532 mutated=self.mutated,

--> 533 dropna=self.dropna,

534 )

535

~/miniconda3/envs/ember/lib/python3.7/site-packages/pandas/core/groupby/grouper.py in get_grouper(obj, key, axis, level, sort, observed, mutated, validate, dropna)

784 in_axis, name, level, gpr = False, None, gpr, None

785 else:

--> 786 raise KeyError(gpr)

787 elif isinstance(gpr, Grouper) and gpr.key is not None:

788 # Add key to exclusions

KeyError: 'subset'

`

Hello, I am using the ember-2018 dataset. When I try to create the vectorized features, I get an error: KeyError: 'datadirectories'

RemoteTraceback Traceback (most recent call last)

RemoteTraceback:

"""

Traceback (most recent call last):

File "/anaconda/envs/azureml_py36/lib/python3.6/multiprocessing/pool.py", line 119, in worker

result = (True, func(*args, **kwds))

File "/anaconda/envs/azureml_py36/lib/python3.6/site-packages/ember/init.py", line 44, in vectorize_unpack

return vectorize(*args)

File "/anaconda/envs/azureml_py36/lib/python3.6/site-packages/ember/init.py", line 31, in vectorize

feature_vector = extractor.process_raw_features(raw_features)

File "/anaconda/envs/azureml_py36/lib/python3.6/site-packages/ember/features.py", line 531, in process_raw_features

feature_vectors = [fe.process_raw_features(raw_obj[fe.name]) for fe in self.features]

File "/anaconda/envs/azureml_py36/lib/python3.6/site-packages/ember/features.py", line 531, in

feature_vectors = [fe.process_raw_features(raw_obj[fe.name]) for fe in self.features]

KeyError: 'datadirectories'

"""

The above exception was the direct cause of the following exception:

KeyError Traceback (most recent call last)

in

----> 1 ember.create_vectorized_features(data_dir, 2)

2 ember.create_metadata(data_dir)

/anaconda/envs/azureml_py36/lib/python3.6/site-packages/ember/init.py in create_vectorized_features(data_dir, feature_version)

73 raw_feature_paths = [os.path.join(data_dir, "train_features_{}.jsonl".format(i)) for i in range(6)]

74 nrows = sum([1 for fp in raw_feature_paths for line in open(fp)])

---> 75 vectorize_subset(X_path, y_path, raw_feature_paths, extractor, nrows)

76

77 print("Vectorizing test set")

/anaconda/envs/azureml_py36/lib/python3.6/site-packages/ember/init.py in vectorize_subset(X_path, y_path, raw_feature_paths, extractor, nrows)

58 argument_iterator = ((irow, raw_features_string, X_path, y_path, extractor, nrows)

59 for irow, raw_features_string in enumerate(raw_feature_iterator(raw_feature_paths)))

---> 60 for _ in tqdm.tqdm(pool.imap_unordered(vectorize_unpack, argument_iterator), total=nrows):

61 pass

62

/anaconda/envs/azureml_py36/lib/python3.6/site-packages/tqdm/std.py in iter(self)

1128

1129 try:

-> 1130 for obj in iterable:

1131 yield obj

1132 # Update and possibly print the progressbar.

/anaconda/envs/azureml_py36/lib/python3.6/multiprocessing/pool.py in next(self, timeout)

733 if success:

734 return value

--> 735 raise value

736

737 next = next # XXX

KeyError: 'datadirectories'

###################

Requirements seem to be installed correctly:

pip install -r requirements.txt

Requirement already satisfied: lief>=0.9.0 in /anaconda/envs/azureml_py36/lib/python3.6/site-packages (from -r requirements.txt (line 1)) (0.9.0)

Requirement already satisfied: tqdm>=4.31.0 in /anaconda/envs/azureml_py36/lib/python3.6/site-packages (from -r requirements.txt (line 2)) (4.48.0)

Requirement already satisfied: numpy>=1.16.3 in /anaconda/envs/azureml_py36/lib/python3.6/site-packages (from -r requirements.txt (line 3)) (1.16.6)

Requirement already satisfied: pandas>=0.24.2 in /anaconda/envs/azureml_py36/lib/python3.6/site-packages (from -r requirements.txt (line 4)) (1.1.0)

Requirement already satisfied: lightgbm>=2.2.3 in /anaconda/envs/azureml_py36/lib/python3.6/site-packages (from -r requirements.txt (line 5)) (2.3.0)

Requirement already satisfied: scikit-learn>=0.20.3 in /anaconda/envs/azureml_py36/lib/python3.6/site-packages (from -r requirements.txt (line 6)) (0.20.3)

Requirement already satisfied: pytz>=2017.2 in /anaconda/envs/azureml_py36/lib/python3.6/site-packages (from pandas>=0.24.2->-r requirements.txt (line 4)) (2019.3)

Requirement already satisfied: python-dateutil>=2.7.3 in /anaconda/envs/azureml_py36/lib/python3.6/site-packages (from pandas>=0.24.2->-r requirements.txt (line 4)) (2.8.1)

Requirement already satisfied: scipy in /anaconda/envs/azureml_py36/lib/python3.6/site-packages (from lightgbm>=2.2.3->-r requirements.txt (line 5)) (1.4.1)

Requirement already satisfied: six>=1.5 in /anaconda/envs/azureml_py36/lib/python3.6/site-packages (from python-dateutil>=2.7.3->pandas>=0.24.2->-r requirements.txt (line 4)) (1.12.0)

Thanks, appreciate your help
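
The traceback shows the KeyError coming from `raw_obj[fe.name]`, so the raw record being processed has no "datadirectories" key. A quick way to confirm this is to look at the first line of a raw feature file. The helper below is a hypothetical diagnostic, not part of ember, and the caller supplies the group names to check.

```python
import json


def missing_feature_groups(jsonl_path, expected):
    """Return the names in `expected` that are absent from the first record of a raw feature file."""
    with open(jsonl_path) as handle:
        first_record = json.loads(handle.readline())
    return [name for name in expected if name not in first_record]
```

For example, `missing_feature_groups("train_features_0.jsonl", ["datadirectories"])` returning `["datadirectories"]` would mean the raw data lacks the group the extractor is asking for. One possibility worth checking in that case is whether the `feature_version` passed to `create_vectorized_features` matches the download.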

Given the Nth sample in the training set I want to determine the SHA256 for that sample. How does one do this? (I'm using the v1 dataset FWIW)

The feature vectors in the training set are in one place (obtained via ember.read_vectorized_features()). And the metadata is obtained differently (via ember.read_metadata()). But the Nth feature vector obtained in the training set via ember.read_vectorized_features() doesn't seem to map to the Nth record in the metadata.

I'm proving that this is not the case by downloading the PE file for the SHA256 that I think is mapped to the Nth feature vector in the training set, then using Ember to extract the feature vector from the PE file, and comparing it to the feature vector in the training set. The two feature vectors are wildly different.

I'm aware that there are slight differences in some feature calculations depending on the version of lief but A) I'm using lief==0.9.0 which is what docs say I should be using for v1 dataset, and B) the differences in the two feature vectors are significant. Often 400-600 features have very different values including for features like the timestamp.

I've tried a few different ways. To illustrate, here is the latest thing I tried:

metadata = ember.read_metadata(ember_data_dir)

gw_hashes = metadata[(metadata['subset'] == 'train') & (metadata['label'] == 0)]

gw_hashes.reset_index(inplace=True)

X_train, y_train, _, _ = ember.read_vectorized_features(ember_data_dir, feature_version=1)

X_train_gw = X_train[y_train == 0]

assert gw_hashes.shape[0] == X_train_gw.shape[0]

The assertion doesn't trip, which makes sense. I didn't expect it to.

But at this point the features extracted from the PE file for hash gw_hashes[N] are generally (always?) very different from the feature vector obtained from X_train_gw[N].

So how do I get the SHA256 for an arbitrary sample in the training set?
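
Tracebacks elsewhere in this thread show `vectorize_subset` enumerating the raw records from `train_features_0.jsonl` through `train_features_5.jsonl` in order and writing each one at its row index. If that holds for the installed version, row N of the unfiltered `X_train` should correspond to the Nth line across those files. The sketch below looks up that line directly. It assumes each raw record carries a "sha256" key, and `sha256_for_row` is a hypothetical helper, not an ember function.

```python
import json
import os


def sha256_for_row(data_dir, n, n_files=6):
    """Return the "sha256" field of the n-th record (0-based) across the raw training files."""
    if n < 0:
        raise IndexError("row index must be non-negative")
    seen = 0
    for i in range(n_files):
        path = os.path.join(data_dir, "train_features_{}.jsonl".format(i))
        with open(path) as handle:
            for line in handle:
                if seen == n:
                    return json.loads(line)["sha256"]
                seen += 1
    raise IndexError("row {} is past the end of the training files".format(n))
```

Note that `n` here indexes the full training matrix, before any filtering such as `X_train[y_train == 0]`.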

The data contains large .jsonl files which store the features of the PE32 files used to create the model. It would be nice to test Ember out on my own PE32 files, or to add my data to what Endgame has provided.

I have successfully trained the EMBER 2018 dataset (feature version 2) using the train_ember.py script, and managed to plot the charts/results using the jupyter notebook.

However, when attempting to run python train_ember.py [/path/to/dataset] command for the EMBER 2017 feature version 2 dataset, I get the following warning:

[LightGBM] [Warning] Contains only one class

[LightGBM] [Info] Number of positive: 0, number of negative: 900000

[LightGBM] [Info] Total Bins 95616

[LightGBM] [Info] Number of data: 900000, number of used features: 1822

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.000000 -> initscore=-34.538776

[LightGBM] [Info] Start training from score -34.538776

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.000000 -> initscore=-34.538776

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

Any idea what went wrong?

When I was ready to use the create_metadata() to create a csv file for dataset, there is an error showing below:

`RuntimeError:

An attempt has been made to start a new process before the

current process has finished its bootstrapping phase.

This probably means that you are not using fork to start your

child processes and you have forgotten to use the proper idiom

in the main module:

if __name__ == '__main__':

freeze_support()

...

The "freeze_support()" line can be omitted if the program

is not going to be frozen to produce an executable.`

I just called the ember.create_metadata("./dataset/")

Why did I have this error?

Hi,

I am trying to install lief post3 which is needed for training the ember dataset. I am running the command: pip install lief == 0.8.3.post3.

pip is already installed with the latest version in my system. But when I run the command, it only installs lief version 0.8.3 instead of 0.8.3.post3 (as shown in the screenshot).

How can I install lief == 0.8.3.post3? Or is the command wrong? Please help.

Thanks in advance.

Can you provide the benign binary files you used for feature extraction?

Hello, is this project still active? I was working on recreating the model without ember function, just wondering if you are willing to release the whole kernel / model as a notebook as I am struggling to successfully import ember to the notebook. the reason to recreate the notebook is because I want to test multiple models and techniques on the dataset

Hello Dear Author,

I'm curious about whether the collected data strictly follow time order. That is, when using your code to vectorize the data into a feature matrix and a corresponding pandas DataFrame, does each row always correspond to an earlier event than the rows after it? For example, must the sample in row 0 (goodware/malware) be earlier than the one in row 1?

Can the code run under Python 3.5? When I tried, it reported that ember needs lief 0.8.3, while requirements.txt specifies "lief = 0.9.0".

I load the vectorized data using the script below

emberdf = ember.read_metadata(data_dir)

X_train, y_train, X_test, y_test = ember.read_vectorized_features(data_dir)

However, when I check the labels in y_train, they do not match the labels provided in emberdf['label'].

also the labels in dataframe are distributed as: 400K(0), 400K(1) 200K(-1)

but the labels in y_train are distributed as: 789149 (0) , 6335(1), 4516(-1)

Something is not right.

I am only using the provided scripts, and I am using the 2018 dataset with version 2 features.
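
The tracebacks in this thread show `y_train.dat` being read as a flat float32 array, so the label distribution on disk can be checked independently of ember with the standard library. This is a minimal sketch with a hypothetical helper name (`label_counts`); it assumes the file was written in the machine's native byte order, as a NumPy memmap would do by default.

```python
from array import array
from collections import Counter
import os


def label_counts(y_path):
    """Count the float32 labels stored in a y_*.dat file."""
    labels = array("f")  # "f" is a 4-byte C float on common platforms
    n_items = os.path.getsize(y_path) // labels.itemsize
    with open(y_path, "rb") as handle:
        labels.fromfile(handle, n_items)
    return Counter(int(value) for value in labels)
```

Comparing this count with `emberdf['label'].value_counts()` for the training subset helps separate a problem in the vectorized files from a problem in how they are read.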

The SSL certificate on pubdata.endgame.com expired 2020-04-09, 12:59:03 p.m. leading to an error message when downloading (and the security implications of overriding this error)

First off, thank you for this awesome dataset! I completely agree that this level of control over both benign and malware sets of this size has been a shortfall, based on my research. As a relatively new ML practitioner, I would like to use the dataset with more traditional sklearn modules instead of the provided Ember ones. Apologies if this isn't a great place to ask, but what steps can I take to prep the dataset so that I can then apply, say, a basic LogisticRegression model to the import calls? Thanks!

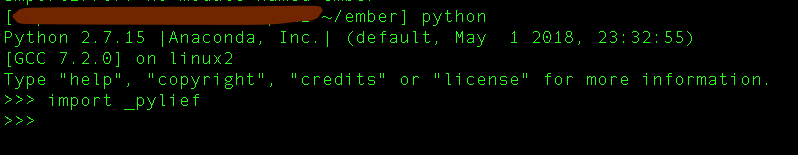

Hello, I'm having lief issues when I train_ember.py inside emberenv. I'm on a CentOS7.5 box.

Lief works fine when I import it from a python interpreter outside of emberenv (screenshot attached).

However, when the script train_ember.py imports it inside emberenv, the import fails (screenshot attached) with a GLIBCXX error similar to #13.

Can someone suggest pointers on how to fix this?

pip 9.0.2 introduced some breaking changes, so it is no longer available on the main channels or conda-forge. Update "- pip=9.0.2=py36_0" to "- pip=9.0.3=py36_0" in environment_minimal.yml.

I'm trying to create vectorized features using the instructions given in your Readme.md file. However, I'm unable to see any kind of progress in the interpreter window. There is no way to figure out if there is any activity besides the fact that my CPU is at 100% and the dat files created by the code are being modified.

I get an error while running the malconv model; maybe some file is missing?

Does this code run on the Windows platform?

Hello Phil,

I am doing this project on Malware Detection using Machine Learning, I have a few doubts,

I am not able to understand the class ByteEntropyHistogram and how it is working, is there any other easy way out?

Also how to deal with Section properties and Entropy fields?

Please help,

Thank You!

Hello, I am a beginner. I noticed that EMBER is a malware dataset for static analysis.

However, a paper published at the 35th Annual Computer Security Applications Conference claims to have done dynamic analysis on a subset of EMBER with Cuckoo Sandbox. Is there some way to do dynamic analysis on EMBER with Cuckoo Sandbox? I would appreciate an answer.

Here is the paper: dl.acm.org/doi/10.1145/3359789.3359835

Free versions on arxiv: arxiv.org/abs/1910.11376

Hi, I already ran ember_train.py, which gives me the following output:

Training LightGBM model

/usr/local/lib/python3.6/dist-packages/lightgbm-2.3.1-py3.6-linux-x86_64.egg/lightgbm/engine.py:148: UserWarning: Found num_iterations in params. Will use it instead of argument

warnings.warn("Found {} in params. Will use it instead of argument".format(alias))

[LightGBM] [Warning] objective is set=binary, application=binary will be ignored. Current value: objective=binary

[LightGBM] [Warning] objective is set=binary, application=binary will be ignored. Current value: objective=binary

[LightGBM] [Warning] Starting from the 2.1.2 version, default value for the "boost_from_average" parameter in "binary" objective is true.

This may cause significantly different results comparing to the previous versions of LightGBM.

Try to set boost_from_average=false, if your old models produce bad results

[LightGBM] [Info] Number of positive: 300000, number of negative: 300000

[LightGBM] [Info] Total Bins 212045

Killed

It seems like something went wrong. Shouldn't it produce a model.txt file as output, on which I could run the classify_binaries.py module?

I hope you find some time to help me.

Best regards Kathi

Hi, I have a problem with the function create_vectorized_features. Whenever I run it, I get this error message:

ember.create_vectorized_features("/ember_dataset/ember/")

Vectorizing training set

Traceback (most recent call last):

File "<ipython-input-3-38013b95de24>", line 1, in <module>

ember.create_vectorized_features("/ember_dataset/ember/")

File "C:\Users\Michal\Anaconda3\lib\site-packages\ember-0.1.0-py3.6.egg\ember\__init__.py", line 71, in create_vectorized_features

vectorize_subset(X_path, y_path, raw_feature_paths, 900000)

File "C:\Users\Michal\Anaconda3\lib\site-packages\ember-0.1.0-py3.6.egg\ember\__init__.py", line 51, in vectorize_subset

X = np.memmap(X_path, dtype=np.float32, mode="w+", shape=(nrows, extractor.dim))

File "C:\Users\Michal\Anaconda3\lib\site-packages\numpy\core\memmap.py", line 221, in __new__

fid = open(filename, (mode == 'c' and 'r' or mode)+'b')

FileNotFoundError: [Errno 2] No such file or directory: '/ember_dataset/ember/X_train.dat'

I am using Windows 10 and the path is correct: my Python file is in the folder D:\Downloads and the dataset is in the folder D:\Downloads\ember_dataset\ember. This is my code:

import ember

ember.create_vectorized_features("/ember_dataset/ember/")

Could someone help me? Thank you in advance.
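
A leading slash makes a path absolute, so "/ember_dataset/ember/" is looked up at the root of the current drive rather than under D:\Downloads. A minimal sketch of building the path relative to a known folder and failing early if it is missing follows. `resolve_data_dir` is a hypothetical helper, and the default relative path is taken from the layout described above.

```python
from pathlib import Path


def resolve_data_dir(base_dir, relative="ember_dataset/ember"):
    """Build the dataset path relative to `base_dir` and fail early if it is missing."""
    data_dir = Path(base_dir) / relative
    if not data_dir.is_dir():
        raise FileNotFoundError("dataset directory not found: {}".format(data_dir))
    return str(data_dir)
```

In an interactive session, `resolve_data_dir(Path.cwd())` would use the current working directory as the base; in a script, `Path(__file__).parent` is a common choice.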

When training the initial LightGBM model from train_ember.py, the above warning message repeats several hundred times, causing a bit of confusion as to whether the model is actually training correctly. The model does seem to train correctly despite the warning.

Looking around, this might be due to hyperparameter choices (decreasing min_data_in_leaf and num_leaves in particular might influence the warning's appearance). There might also be a way to suppress LightGBM's warnings.

Mostly posting this issue so that others running into the same confusion might see it!

Good morning,

Could you please confirm whether I can use EMBER for unsupervised analysis such as clustering?

Best regards.

Where can I download the raw binaries of the EMBER dataset?

Kali Linux now comes default with Python 3.7 which does not seem to work with LIEF - which is also stated in the docs.

Any workaround re this?

Hello Author,

How can feature extraction be optimized for PE files that have a large number of imports and exports?

I found that when a PE file has a large number of DLLs and APIs, extraction takes a long time.

There are also many for loops in the code. Can this be optimized?

I just downloaded the dataset and it contains only JSON files. Where and how can I get the raw byte data and run Malconv?

Can anybody here help me?

Everything appears to be working fine and I do receive an output prediction, but my question is which outputs are deemed malicious and which benign.

Test One

7.374090014710014e-08

Test Two

0.9475527058964659

In this case, does a higher prediction value indicate that the file is benign, and is any prediction value under 1 malicious?

Thank you in advance.
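
Other posts in this thread treat label 0 as goodware and label 1 as malicious, and the training logs show a binary objective, so the score is reasonably read as an estimate of how likely the file is to be malicious: closer to 1 means more likely malicious. A minimal sketch of turning a score into a label follows. The 0.5 threshold is an illustrative assumption, not a value from the project, and `label_from_score` is a hypothetical helper.

```python
def label_from_score(score, threshold=0.5):
    """Map a model score in [0, 1] to a label; `threshold` is an illustrative choice."""
    return "malicious" if score >= threshold else "benign"
```

Under this reading, Test One (about 7.4e-08) would be labelled benign and Test Two (about 0.95) malicious. In practice the threshold is usually chosen from a target false-positive rate rather than fixed at 0.5.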

I am running on Windows 10 and tried both Python 3.6 and 3.7 I get this error when I run:

import ember

ember.create_vectorized_features("data/ember2018/")

ember.create_metadata("data/ember2018/")

RuntimeError:

An attempt has been made to start a new process before the

current process has finished its bootstrapping phase.

This probably means that you are not using fork to start your

child processes and you have forgotten to use the proper idiom

in the main module:

if __name__ == '__main__':

freeze_support()

...

The "freeze_support()" line can be omitted if the program

is not going to be frozen to produce an executable.
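
The error message itself names the usual remedy on Windows: put the calls that start worker processes behind an `if __name__ == '__main__':` guard in the script that is run. The sketch below shows that shape. `build_dataset` is a hypothetical wrapper, and its two callable arguments stand in for `ember.create_vectorized_features` and `ember.create_metadata`, so the block runs without ember installed.

```python
import multiprocessing


def build_dataset(data_dir, vectorize, make_metadata):
    """Run both preparation steps in order and return the metadata result.

    `vectorize` and `make_metadata` stand in for
    ember.create_vectorized_features and ember.create_metadata.
    """
    vectorize(data_dir)
    return make_metadata(data_dir)


if __name__ == "__main__":
    # Only the parent process reaches this block; spawned workers that
    # re-import the module skip it, which avoids the bootstrapping error.
    multiprocessing.freeze_support()  # only has an effect in frozen Windows executables
    # With ember installed, the call would look like:
    # import ember
    # build_dataset("data/ember2018/", ember.create_vectorized_features, ember.create_metadata)
```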

data_dir = "./data2018/ember2018" # change this to where you unzipped the download

Vectorizing training set

100%|██████████| 800000/800000 [20:02<00:00, 665.49it/s]

Vectorizing test set

100%|██████████| 200000/200000 [03:01<00:00, 1102.61it/s]

FileNotFoundError Traceback (most recent call last)

in

1 emberdf = ember.read_metadata(data_dir)

----> 2 X_train, y_train, X_test, y_test = ember.read_vectorized_features(data_dir)

3 lgbm_model = lgb.Booster(model_file=os.path.join(data_dir, "ember_model_2018.txt"))

~/anaconda3/lib/python3.7/site-packages/ember-0.1.0-py3.7.egg/ember/init.py in read_vectorized_features(data_dir, subset, feature_version)

103 X_train_path = os.path.join(data_dir, "X_train.dat")

104 y_train_path = os.path.join(data_dir, "y_train.dat")

--> 105 y_train = np.memmap(y_train_path, dtype=np.float32, mode="r")

106 N = y_train.shape[0]

107 X_train = np.memmap(X_train_path, dtype=np.float32, mode="r", shape=(N, ndim))

~/anaconda3/lib/python3.7/site-packages/numpy/core/memmap.py in new(subtype, filename, dtype, mode, offset, shape, order)

223 f_ctx = contextlib_nullcontext(filename)

224 else:

--> 225 f_ctx = open(os_fspath(filename), ('r' if mode == 'c' else mode)+'b')

226

227 with f_ctx as fid:

FileNotFoundError: [Errno 2] No such file or directory: './data2018/ember2018/y_train.dat'

In a fresh installation of conda (Ubuntu 18.04 LTS) and with a new environment created by:

conda create --name ember python=3.6 there is a failure in the installation of the required packages:

(base) mari@beast:~/repos/ember$ conda activate ember

(ember) mari@beast:~/repos/ember$ conda install --file requirements_conda.txt

Collecting package metadata (current_repodata.json): done

Solving environment: failed with current_repodata.json, will retry with next repodata source.

Collecting package metadata (repodata.json): done

Solving environment: failed

PackagesNotFoundError: The following packages are not available from current channels:

- lightgbm[version='>=2.2.3']

Current channels:

- https://repo.anaconda.com/pkgs/main/linux-64

- https://repo.anaconda.com/pkgs/main/noarch

- https://repo.anaconda.com/pkgs/r/linux-64

- https://repo.anaconda.com/pkgs/r/noarch

Searching the main conda channel, it seems that the lightgbm package is an older version:

(ember) mari@beast:~/repos/ember$ conda search lightgbm

Loading channels: done

# Name Version Build Channel

lightgbm 2.2.1 py27he6710b0_0 pkgs/main

lightgbm 2.2.1 py36he6710b0_0 pkgs/main

lightgbm 2.2.1 py37he6710b0_0 pkgs/main

The 2.2.3 version of lightgbm can be installed with pip so this is a minor issue, just reporting it in case someone else stumbles into it.

Hello, I have a question about features. When I use the function read_vectorized_features, I get a 900,000 x 2351 feature matrix for X_train. I then applied SelectKBest feature selection from sklearn and got the indexes of the best columns, but is it possible to find out which features those columns hold? For example, if I get index 90, can I find out what type of feature is in that column? I don't know how to do it. Thank you in advance.
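
Since the feature vector is a concatenation of per-group vectors, a column index can be mapped back to its group by walking the group sizes in order. The sketch below does that. The group names and sizes are an assumption based on my recollection of `ember/features.py` for the 2351-column layout and should be verified against each extractor's `dim` attribute. `feature_group_for_index` is a hypothetical helper.

```python
# Assumed layout: names and sizes recalled from ember/features.py, verify before relying on them.
FEATURE_GROUPS = [
    ("histogram", 256),
    ("byteentropy", 256),
    ("strings", 104),
    ("general", 10),
    ("header", 62),
    ("section", 255),
    ("imports", 1280),
    ("exports", 128),
]


def feature_group_for_index(index, groups=FEATURE_GROUPS):
    """Return (group_name, offset_within_group) for a column index."""
    if index < 0:
        raise IndexError("index must be non-negative")
    start = 0
    for name, dim in groups:
        if index < start + dim:
            return name, index - start
        start += dim
    raise IndexError("index {} is outside the {} columns".format(index, start))
```

With this assumed layout, index 90 falls in the first group. Hashed groups such as imports cannot be mapped back to a single named import, only to a bucket.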

Hi, does anyone happen to have the optimized LGB model .txt file? Specifically for the LGB model trained on EMBER2018 features.

I am not able to run train_ember.py with the --optimize flag due to limited hardware memory. If anyone has the saved optimized model and is able to share that will be much appreciated!!

I want to know how to extract the features of PE files. When I read the Python file "features.py", I find the code hard to understand. Could you describe the specific extraction method, or point me to an instruction manual, a link to the paper, or something similar? Thank you!

I'm running into an error trying to run Ember on a Cent based distro. It looks like pylief was built using a version of GLIBC that I don't believe is supported on RHEL/CentOS. Has anyone found a workaround or solution to this? I'm able to get it working fine on Ubuntu/Debian, but I need this to run on RHEL because "reasons".

Any help is appreciated!

[GCC 4.8.5 20150623 (Red Hat 4.8.5-16)] on linux2

Type "help", "copyright", "credits" or "license" for more information.

>>> import ember

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "ember/__init__.py", line 10, in <module>

from .features import PEFeatureExtractor

File "ember/features.py", line 16, in <module>

import lief

File "/usr/lib64/python2.7/site-packages/lief/__init__.py", line 4, in <module>

import _pylief

ImportError: /lib64/libstdc++.so.6: version `GLIBCXX_3.4.20' not found (required by /usr/lib64/python2.7/site-packages/_pylief.so)

Hi,

I get an error each time I try to run python train_ember.py. "usage: train_ember [-h] [-v FEATUREVERSION] [--optimize] DATADIR".

When I type python train_ember.py /ember2018 (data features folder path), I get this error "is not a directory with raw feature files".

Thanks

Currently the following line parses only named imports from a library:

imports[lib.name].extend([entry.name[:10000] for entry in lib.entries])

Ordinal imports are unnamed and return an empty string when name is accessed. A few samples in the dataset have large numbers of ordinal imports from some library.

['f1689c71733e2864ed040854eb2b5ca25cec1efa11f8d7994a6bfe6d1235a343', '1ccca67c95f9261491aa229a933df5712e577a4e80689d647d027bf0185c23ee', '4522284d27e3b7e8a8678ab6c1aba9654279646f81683b757c688c61f161f0cb']

This throws the imports feature out of whack. The empty string is sent to 529, which causes that entry to vary far more than the other 1023 entries in the feature vector.

This can either be fixed here or upstream in lief.
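
One possible shape for a fix on the ember side is to give ordinal imports a synthetic name instead of the empty string. This is a sketch under stated assumptions, not the project's fix: it assumes each import entry exposes `is_ordinal` and `ordinal` attributes alongside `name`, which should be checked against the installed LIEF version. `import_names` and the "ordinal{n}" naming scheme are hypothetical.

```python
def import_names(entries, max_len=10000):
    """Return one name per import entry, giving ordinal imports a synthetic name."""
    names = []
    for entry in entries:
        if entry.is_ordinal:
            # Placeholder naming scheme chosen for illustration only.
            names.append("ordinal{}".format(entry.ordinal))
        else:
            names.append(entry.name[:max_len])
    return names
```

Any change of this kind alters the hashed import features, so vectors extracted with it would no longer be comparable to the published dataset.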

`data_dir = "/home/cse31/MalReserach/data/ember/"

RemoteTraceback Traceback (most recent call last)

RemoteTraceback:

"""

Traceback (most recent call last):

File "/home/cse31/anaconda3/lib/python3.7/multiprocessing/pool.py", line 121, in worker

result = (True, func(*args, **kwds))

File "/home/cse31/anaconda3/lib/python3.7/site-packages/ember-0.1.0-py3.7.egg/ember/init.py", line 44, in vectorize_unpack

return vectorize(*args)

File "/home/cse31/anaconda3/lib/python3.7/site-packages/ember-0.1.0-py3.7.egg/ember/init.py", line 31, in vectorize

feature_vector = extractor.process_raw_features(raw_features)

File "/home/cse31/anaconda3/lib/python3.7/site-packages/ember-0.1.0-py3.7.egg/ember/features.py", line 522, in process_raw_features

feature_vectors = [fe.process_raw_features(raw_obj[fe.name]) for fe in self.features]

File "/home/cse31/anaconda3/lib/python3.7/site-packages/ember-0.1.0-py3.7.egg/ember/features.py", line 522, in

feature_vectors = [fe.process_raw_features(raw_obj[fe.name]) for fe in self.features]

KeyError: 'datadirectories'

"""

The above exception was the direct cause of the following exception:

KeyError Traceback (most recent call last)

in

1 data_dir = "/home/cse31/MalReserach/data/ember/" # change this to where you unzipped the download

2

----> 3 ember.create_vectorized_features(data_dir)

4 _ = ember.create_metadata(data_dir)

~/anaconda3/lib/python3.7/site-packages/ember-0.1.0-py3.7.egg/ember/init.py in create_vectorized_features(data_dir, feature_version)

73 raw_feature_paths = [os.path.join(data_dir, "train_features_{}.jsonl".format(i)) for i in range(6)]

74 nrows = sum([1 for fp in raw_feature_paths for line in open(fp)])

---> 75 vectorize_subset(X_path, y_path, raw_feature_paths, extractor, nrows)

76

77 print("Vectorizing test set")

~/anaconda3/lib/python3.7/site-packages/ember-0.1.0-py3.7.egg/ember/init.py in vectorize_subset(X_path, y_path, raw_feature_paths, extractor, nrows)

58 argument_iterator = ((irow, raw_features_string, X_path, y_path, extractor, nrows)

59 for irow, raw_features_string in enumerate(raw_feature_iterator(raw_feature_paths)))

---> 60 for _ in tqdm.tqdm(pool.imap_unordered(vectorize_unpack, argument_iterator), total=nrows):

61 pass

62

~/anaconda3/lib/python3.7/site-packages/tqdm/_tqdm.py in iter(self)

1003 """), fp_write=getattr(self.fp, 'write', sys.stderr.write))

1004

-> 1005 for obj in iterable:

1006 yield obj

1007 # Update and possibly print the progressbar.

~/anaconda3/lib/python3.7/multiprocessing/pool.py in next(self, timeout)

746 if success:

747 return value

--> 748 raise value

749

750 next = next # XXX

KeyError: 'datadirectories'

I am attempting to reproduce the benchmark to compute additional evaluation metrics. I followed the procedure outlined and obtained a model, but it appears to have lower performance than reported in the paper (AUC=.98576 (see my reply for correction) while paper reports .99911). I figured it's likely due to the difference between the versions of the 2017 dataset as I'm using version 2. Can anyone confirm?

I'm primarily interested in the accuracy and F1 score for the original benchmark for comparison purposes as the benchmark appears to still outperform any DNN in the existing literature (which is very cool). Thanks!
