Scripts and samples to support Confluent Platform talks. May be rough around the edges. For automated tutorials and QA'd code, see https://github.com/confluentinc/examples/

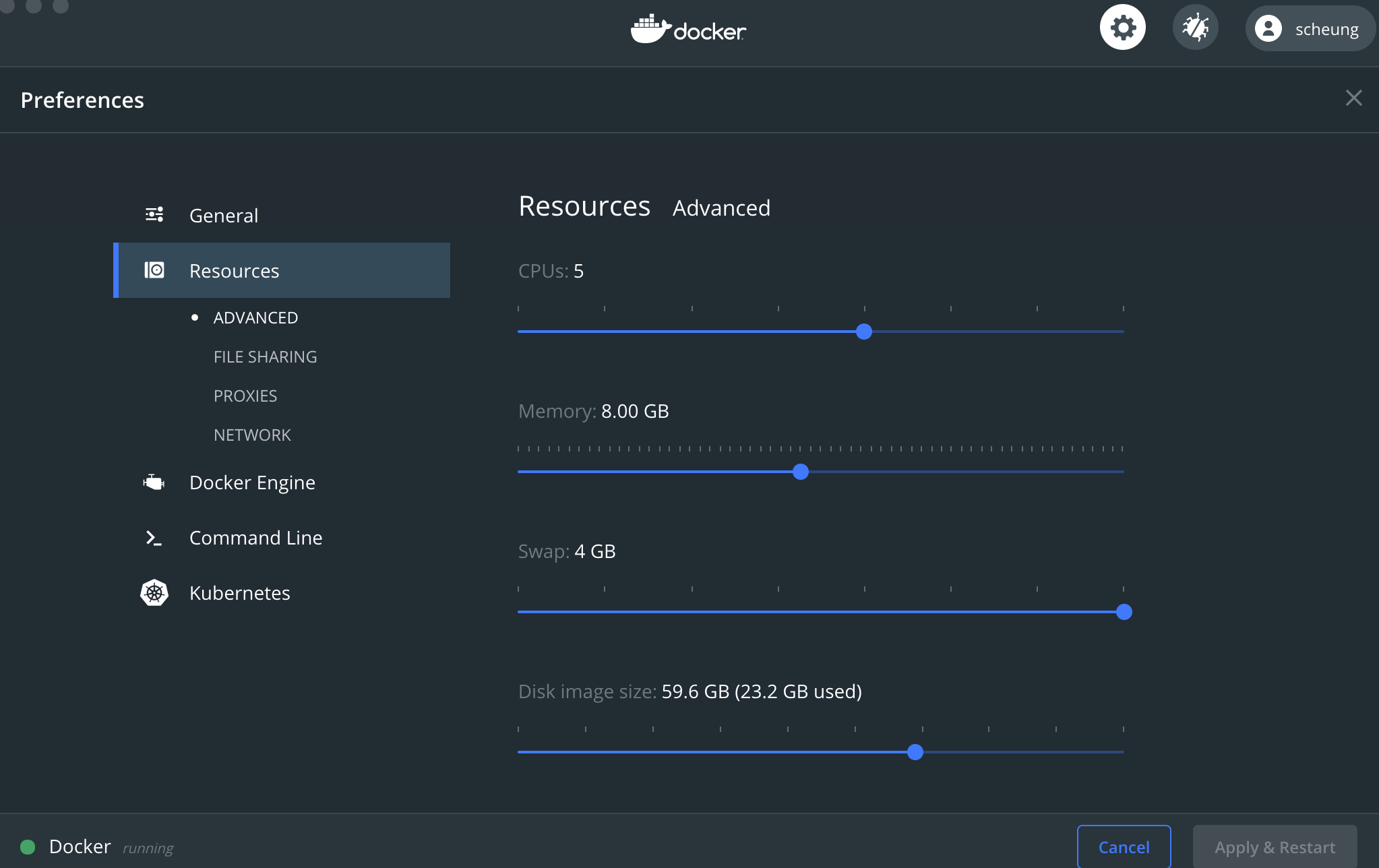

You may need to allocate 8GB of memory to Docker when running these. Avoid allocating all of your machine's cores to Docker, as this can make the machine unresponsive when running large stacks. On a four-core MacBook, two cores for Docker should be fine.
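As a rough way to check the allocation before starting a stack, the sketch below reads the memory and CPU count that `docker info` reports and compares them with the guidance above. The helper names (`docker_resources`, `check_allocation`) and the 7.5 GiB default threshold are assumptions made for illustration. The threshold sits a little below 8 GiB on the assumption that Docker Desktop may report slightly less memory than the configured value. The CPU check is mainly relevant to Docker Desktop; on native Linux, Docker normally sees every host core.

```python
import json
import os
import subprocess

GIB = 1024 ** 3


def docker_resources():
    """Return (memory_bytes, cpu_count) as reported by `docker info`."""
    out = subprocess.run(
        ["docker", "info", "--format", "{{json .}}"],
        check=True,
        capture_output=True,
        text=True,
    ).stdout
    info = json.loads(out)
    return info["MemTotal"], info["NCPU"]


def check_allocation(mem_bytes, docker_cpus, host_cpus, min_gib=7.5):
    """Return a list of warnings; an empty list means the allocation looks OK.

    min_gib is an assumed threshold, not a value taken from this repository.
    """
    warnings = []
    if mem_bytes < min_gib * GIB:
        warnings.append(
            f"Docker has {mem_bytes / GIB:.1f} GiB of memory; "
            "large stacks may need about 8 GiB"
        )
    if host_cpus > 1 and docker_cpus >= host_cpus:
        warnings.append(
            f"Docker has all {host_cpus} host cores; "
            "consider leaving some for the host"
        )
    return warnings
```

To run the check against a local Docker installation, call `check_allocation(*docker_resources(), os.cpu_count())` and print any warnings it returns.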

- Building a Telegram bot with Go, Apache Kafka, and ksqlDB (🎥 talk)

- Streaming Pac-Man

- Workshop: Event Driven Microservices

- Workshop: Choosing Christmas Movies with Kubernetes, Spring Boot, and Apache Kafka (🎥 recording)

- Kafka Summit NYC: Confluent Operator Demo aka Escape from EKS

- Kafka Summit 2020 - I don’t always test my streams, but when I do, I do it in production!

- Apache Kafka® Event Streaming Platform For Kotlin Developers (🎥 recording)

- PICKUP DATA - A Kafka Adventure Game

- Dashing off a Dashboard

- Streaming ETL and Analytics on Confluent with Maritime AIS data

- Pipeline to the cloud - on-premises RDBMS to cloud data warehouse, e.g. Snowflake (✍️ blog)

- Rail data streaming pipeline (🗣️talk)

- Apache Kafka and KSQL in Action: Let’s Build a Streaming Data Pipeline! (🗣️talk)

- MySQL / Debezium CDC / KSQL / Elasticsearch

- Oracle CDC / KSQL / Elasticsearch

- Postgres / Debezium CDC / KSQL / Elasticsearch

- CDC demo with MySQL (🗣️talk)

- CDC demo with Oracle

- Building data pipelines with Confluent Cloud and GCP (BigQuery, GCS, etc)

- MS SQL with Debezium and ksqlDB (✍️ blog)

- Streaming ETL pipeline from MongoDB to Snowflake with Apache Kafka®

- Bridge to Cloud (and back!) with Confluent and MongoDB Atlas

- Introduction to ksqlDB 01 (🗣️talk)

- Introduction to KSQL 02

- ksqlDB tombstones

- Using Twitter data with ksqlDB

- KSQL UDF Advanced Example

- KSQL Troubleshooting

- ATM Fraud detection with Kafka and KSQL (🗣️talk)

- Kafka Streams/KSQL Movie Demo

- KSQL MQTT demo

- KSQL Dump Utility

- KSQL workshop (more recent version is here)

- Multi-node ksqlDB and Kafka Connect clusters

- Streaming ETL pipeline from MongoDB to Snowflake with Apache Kafka®

- Bridge to Cloud (and back!) with Confluent and MongoDB Atlas

- Confluent + IBM Demo: read data from IBM MQ and IBM DB2, join with ksqlDB, sink to IBM MQ

- Single Message Transform in Kafka Connect

- Improvements to Kafka Connect in Apache Kafka 2.3

- From Zero to Hero with Kafka Connect (🗣️talk)

- Kafka Connect Converters and Serialization (✍️ blog)

- Building a Kafka Connect cluster

- Kafka Connect error handling (✍️ blog)

- Multi-node ksqlDB and Kafka Connect clusters

- Specific connectors

- 👉 S3 Sink (🎥 tutorial)

- 👉 Database (tutorial 🎥 1 / 🎥 2)

- 👉 Elasticsearch (🎥 Tutorial)

- RSS feed into Kafka

- Loading CSV data into Kafka (🎥 Tutorial)

- Loading XML data into Kafka

- Kafka Connect JDBC Source demo environment (✍️ blog)

- InfluxDB & Kafka Connect (✍️ blog)

- RabbitMQ into Kafka (✍️ blog)

- MQTT Connect Connector Demo

- Example Kafka Connect syslog configuration and Docker Compose (see blog series 1/2/3 and standalone articles here and here)

- Azure SQL Data Warehouse Connector Sink Demo

- Confluent + IBM Demo: read data from IBM MQ and IBM DB2, join with ksqlDB, sink to IBM MQ

- Solace Sink/Source Demo

- Getting Started with Confluent Cloud using Java

- Getting Started with Confluent Cloud using Go

- Streaming Pac-Man

- "The Cube" Demo

- Using Replicator with Confluent Cloud

- Streaming ETL pipeline from MongoDB to Snowflake with Apache Kafka®

- Micronaut & AWS Lambda on Confluent Cloud

- Bridge to Cloud (and back!) with Confluent and MongoDB Atlas

- Random Pizza Generator with Micronaut on Confluent Cloud

- Random Pizza Generator with Flask and Python on Confluent Cloud

- Self-Balancing Clusters Demo

- Tiered Storage Demo

- Cluster Linking Demo

- Cluster Linking and Schema Linking Demo

- Cluster Linking and Schema Linking Disaster Recovery step by step

- Confluent Admin REST APIs Demo

- CP-Ansible on Ansible Tower

- Kafka as a Platform: the Ecosystem from the Ground Up (🎥 recording)

- Hacky export/import between Kafka clusters using kafkacat

- Docker Compose for just the community licensed components of Confluent Platform

- Topic Tailer, stream topics to the browser using websockets

- KPay payment processing example

- Industry themes (e.g. banking Next Best Offer)

- Distributed tracing

- Analysing Sonos data in Kafka (✍️ blog)

- Analysing Wi-Fi pcap data with Kafka

- Twitter streams and Operator

- Produce Test Kafka Data

- Bugs/issues with a demo: raise an issue on this GitHub project

- General question/assistance: https://confluent.io/community/ask-the-community/