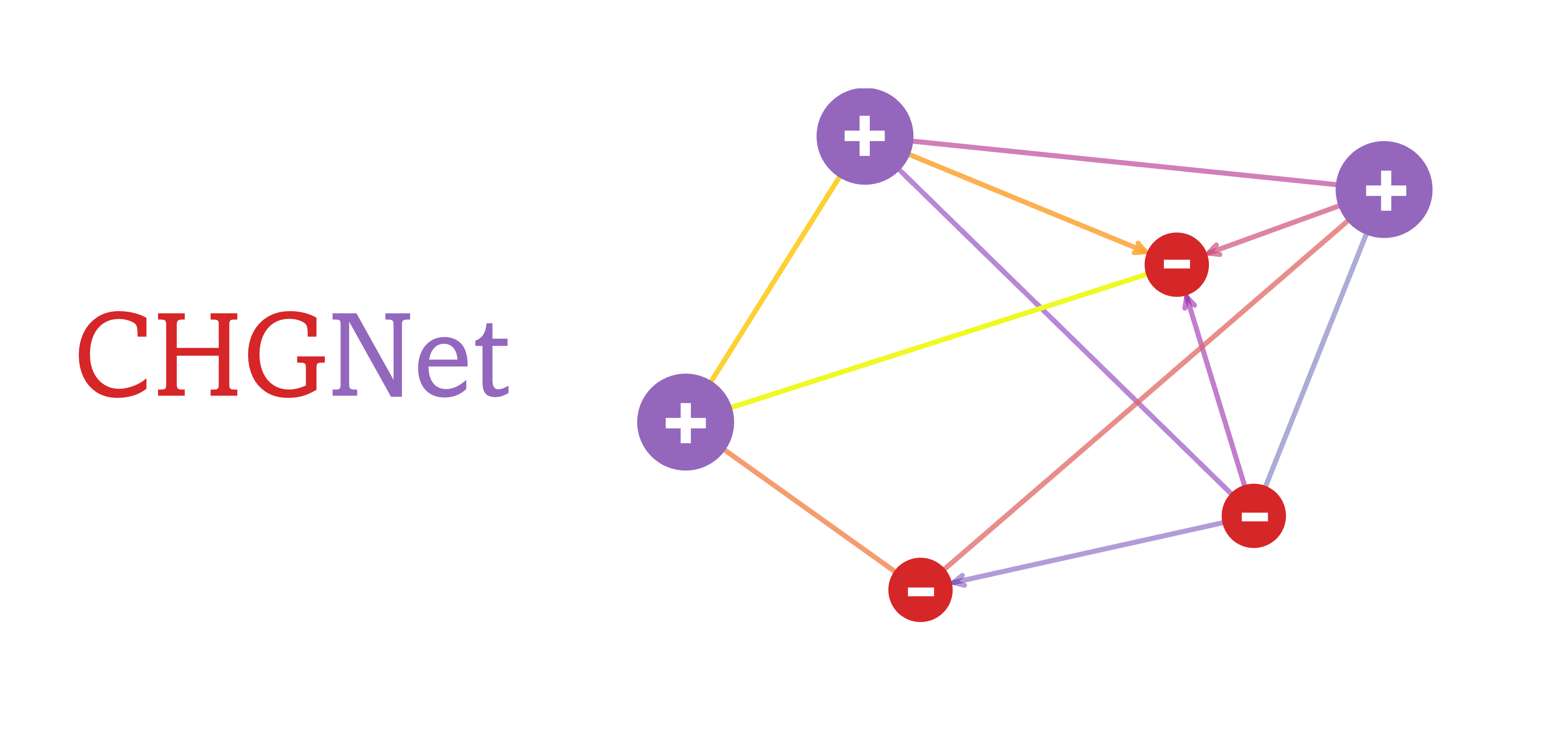

A pretrained universal neural network potential for charge-informed atomistic modeling (see publication)

CHGNet highlights its ability to study electron interactions and charge distribution in atomistic modeling with near-DFT accuracy. The charge inference is realized by regularizing the atom features with DFT magnetic moments, which carry rich information about both local ionic environments and charge distribution.
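To make the link between magnetic moments and charge concrete, here is a simplified sketch (not CHGNet's implementation) that guesses a Mn oxidation state from a site's magnetic moment. It assumes high-spin Mn and spin-only moments of about 1 μB per unpaired d electron (5, 4 and 3 μB for Mn2+, Mn3+ and Mn4+). The reference table, the nearest-match rule and the function name `guess_mn_oxidation_state` are illustrative assumptions.

```python
# Simplified sketch, NOT CHGNet's internal method: infer a Mn oxidation state
# from a site magnetic moment, assuming high-spin Mn and spin-only moments
# (1 mu_B per unpaired d electron). Reference values are illustrative.
MN_SPIN_ONLY_MOMENTS = {
    2: 5.0,  # Mn2+: d5, five unpaired electrons
    3: 4.0,  # Mn3+: d4 (high spin), four unpaired electrons
    4: 3.0,  # Mn4+: d3, three unpaired electrons
}


def guess_mn_oxidation_state(magmom: float) -> int:
    """Return the Mn oxidation state whose spin-only moment is closest to |magmom|."""
    # Use the absolute value: antiferromagnetic orderings give negative moments.
    return min(
        MN_SPIN_ONLY_MOMENTS,
        key=lambda ox: abs(MN_SPIN_ONLY_MOMENTS[ox] - abs(magmom)),
    )


site_magmoms = [3.86, -3.91, 3.02, 4.58]  # hypothetical moments in mu_B
print([guess_mn_oxidation_state(m) for m in site_magmoms])  # [3, 3, 4, 2]
```

Real materials deviate from spin-only values through covalency and low-spin states, which is why a learned model regularized on DFT magnetic moments is more useful than a fixed lookup table.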

Pretrained CHGNet achieves excellent performance on materials stability prediction from unrelaxed structures according to Matbench Discovery [repo].

| Notebooks | Google Colab | Descriptions |
|---|---|---|
| CHGNet Basics | | Examples for loading pretrained CHGNet, predicting energy, force, stress and magmom, as well as running structure optimization and MD. |
| Tuning CHGNet | | Examples of fine-tuning the pretrained CHGNet to your system of interest. |
| Visualize Relaxation | | Crystal Toolkit app that visualizes convergence of atom positions, energies and forces of a structure during CHGNet relaxation. |
| Phonon DOS + Bands | | Use CHGNet with the atomate2 phonon workflow based on finite displacements as implemented in Phonopy to calculate the phonon density of states and band structure for Si (mp-149). |
| Elastic tensor + bulk/shear modulus | | Use CHGNet with the atomate2 elastic workflow based on a stress-strain approach to calculate the elastic tensor and derived bulk and shear modulus for Si (mp-149). |

```sh
pip install chgnet
```

If PyPI installation fails or you need the latest `main` branch commits, you can install from source:

```sh
pip install git+https://github.com/CederGroupHub/chgnet
```

See the sciML webinar tutorial on 2023-11-02 and API docs.

Pretrained CHGNet can predict the energy (eV/atom), force (eV/A), stress (GPa) and magmom (μB) of a given structure.

```python
from chgnet.model.model import CHGNet
from pymatgen.core import Structure

chgnet = CHGNet.load()
structure = Structure.from_file('examples/mp-18767-LiMnO2.cif')
prediction = chgnet.predict_structure(structure)

# predict_structure returns a dict keyed by the first letter of each quantity:
# "e" (energy), "f" (forces), "s" (stress), "m" (magmom)
for key, unit in [
    ("energy", "eV/atom"),
    ("forces", "eV/A"),
    ("stress", "GPa"),
    ("magmom", "mu_B"),
]:
    print(f"CHGNet-predicted {key} ({unit}):\n{prediction[key[0]]}\n")
```

Charge-informed molecular dynamics can be simulated with pretrained CHGNet through the ASE Python interface (see below) or through LAMMPS.

```python
import warnings

from chgnet.model.dynamics import MolecularDynamics
from chgnet.model.model import CHGNet
from pymatgen.core import Structure

# silence noisy pymatgen/ASE warnings during the run
warnings.filterwarnings("ignore", module="pymatgen")
warnings.filterwarnings("ignore", module="ase")

structure = Structure.from_file("examples/mp-18767-LiMnO2.cif")
chgnet = CHGNet.load()

md = MolecularDynamics(
    atoms=structure,
    model=chgnet,
    ensemble="nvt",
    temperature=1000,  # in K
    timestep=2,  # in femtoseconds
    trajectory="md_out.traj",
    logfile="md_out.log",
    loginterval=100,
)
md.run(50)  # 50 steps x 2 fs = 0.1 ps MD simulation
```

The MD defaults to CUDA if available. To manually set the device to CPU or MPS, pass `use_device`, e.g. `MolecularDynamics(..., use_device='cpu')`.
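A quick way to size an MD run: the simulated time is the number of steps times the timestep, so `md.run(50)` with `timestep=2` fs covers 100 fs = 0.1 ps. The helper below (`md_steps` is a hypothetical name, not part of CHGNet) does the conversion for a target duration.

```python
def md_steps(total_time_ps: float, timestep_fs: float) -> int:
    """Number of MD steps needed to cover total_time_ps with a timestep of timestep_fs."""
    total_time_fs = total_time_ps * 1000.0  # 1 ps = 1000 fs
    return round(total_time_fs / timestep_fs)


print(md_steps(0.1, 2))  # 50, i.e. the md.run(50) call above
print(md_steps(10, 2))   # 5000 steps for a 10 ps run
```

For short runs you may also want a `loginterval` smaller than the number of steps, so that the trajectory and log contain intermediate frames rather than only the first one.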

MD outputs are saved to the ASE trajectory file. To visualize the MD trajectory and magnetic moments after the MD run:

```python
from ase.io.trajectory import Trajectory
from pymatgen.io.ase import AseAtomsAdaptor

from chgnet.utils import solve_charge_by_mag

traj = Trajectory("md_out.traj")
mag = traj[-1].get_magnetic_moments()  # site-wise magmoms (mu_B) of the last frame

# get the non-charge-decorated structure
structure = AseAtomsAdaptor.get_structure(traj[-1])
print(structure)

# get the charge-decorated structure
struct_with_chg = solve_charge_by_mag(structure)
print(struct_with_chg)
```

To manipulate the MD trajectory, convert it to other data formats, calculate the mean square displacement, etc., please refer to the ASE trajectory documentation.
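As a rough illustration of an MSD calculation, the sketch below computes the mean square displacement of every frame relative to the first, averaged over atoms. It assumes you have already extracted unwrapped Cartesian positions per frame (for example from `atoms.get_positions()` on each frame of the ASE trajectory); atoms that cross a periodic boundary in wrapped coordinates would otherwise inflate the result. It uses a single time origin, whereas production analyses usually average over many.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def mean_square_displacement(frames: Sequence[Sequence[Vec3]]) -> List[float]:
    """MSD(t) relative to the first frame, averaged over atoms.

    Assumes `frames` holds unwrapped Cartesian positions (Angstrom), one list of
    (x, y, z) tuples per frame, with the same atom order in every frame.
    """
    ref = frames[0]
    n_atoms = len(ref)
    msd = []
    for frame in frames:
        total = 0.0
        for (x, y, z), (x0, y0, z0) in zip(frame, ref):
            total += (x - x0) ** 2 + (y - y0) ** 2 + (z - z0) ** 2
        msd.append(total / n_atoms)
    return msd


# Two atoms over three frames (toy data, not from a real trajectory).
frames = [
    [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)],
    [(0.5, 0.0, 0.0), (1.0, 1.0, 1.0)],
    [(1.0, 0.0, 0.0), (1.0, 1.0, 2.0)],
]
print(mean_square_displacement(frames))  # [0.0, 0.125, 1.0]
```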

CHGNet can perform fast structure optimization and provide site-wise magnetic moments. This makes it ideal for pre-relaxation and MAGMOM initialization in spin-polarized DFT.

```python
from chgnet.model import StructOptimizer
from pymatgen.core import Structure

structure = Structure.from_file("examples/mp-18767-LiMnO2.cif")

relaxer = StructOptimizer()
result = relaxer.relax(structure)
print("CHGNet relaxed structure", result["final_structure"])
print("relaxed total energy in eV:", result["trajectory"].energies[-1])
```

CHGNet 0.3.0 is released with new pretrained weights! (release date: 10/22/23)

`CHGNet.load()` now loads 0.3.0 by default; the previous 0.2.0 version can be loaded with `CHGNet.load('0.2.0')`.
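For the MAGMOM-initialization use case mentioned for structure optimization, site-wise moments predicted by CHGNet (e.g. `prediction["m"]` from `predict_structure`, converted to a list of floats) can be written in VASP's compact `N*value` MAGMOM syntax. The helper `format_magmom_tag` below is a hypothetical sketch: the rounding precision is an arbitrary choice, and the moments must be listed in the same site order as the POSCAR. pymatgen's VASP input sets can also build MAGMOM from a `magmom` site property.

```python
from itertools import groupby
from typing import List, Sequence


def format_magmom_tag(magmoms: Sequence[float], ndigits: int = 1) -> str:
    """Run-length encode site magmoms into VASP's compact 'N*value' MAGMOM syntax."""
    rounded = [round(m, ndigits) for m in magmoms]
    parts: List[str] = []
    # groupby merges consecutive equal values into one "count*value" entry
    for value, group in groupby(rounded):
        count = len(list(group))
        parts.append(f"{count}*{value}" if count > 1 else f"{value}")
    return "MAGMOM = " + " ".join(parts)


# Hypothetical site-wise magmoms (mu_B) for a six-site cell
print(format_magmom_tag([0.0, 0.0, 3.86, 3.91, 0.02, 0.01]))  # MAGMOM = 2*0.0 2*3.9 2*0.0
```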

Fine-tuning will help achieve better accuracy if a high-precision study is desired. To train/tune a CHGNet, you need to define your data in a PyTorch `Dataset` object. The example datasets are provided in `data/dataset.py`.

```python
from chgnet.data.dataset import StructureData, get_train_val_test_loader
from chgnet.model.model import CHGNet
from chgnet.trainer import Trainer

# load the pretrained model to fine-tune
chgnet = CHGNet.load()

# list_of_* are placeholders for your own data, one entry per structure
dataset = StructureData(
    structures=list_of_structures,
    energies=list_of_energies,  # energy per atom (eV/atom)
    forces=list_of_forces,
    stresses=list_of_stresses,
    magmoms=list_of_magmoms,
)
train_loader, val_loader, test_loader = get_train_val_test_loader(
    dataset, batch_size=32, train_ratio=0.9, val_ratio=0.05
)
trainer = Trainer(
    model=chgnet,
    targets="efsm",  # energy, forces, stress, magmom
    optimizer="Adam",
    criterion="MSE",
    learning_rate=1e-2,
    epochs=50,
    use_device="cuda",
)

trainer.train(train_loader, val_loader, test_loader)
```

Check the fine-tuning example notebook.
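`targets="efsm"` asks the trainer to fit energy, forces, stress and magmoms at the same time. Conceptually, multi-target training minimizes a weighted sum of per-target losses; the sketch below shows that idea with plain-Python MSE. The weights and toy values are hypothetical and are not CHGNet's defaults; see the `Trainer` API docs for how the actual loss weighting is configured.

```python
from typing import Dict, Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error over flattened values."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def weighted_loss(
    preds: Dict[str, Sequence[float]],
    targets: Dict[str, Sequence[float]],
    weights: Dict[str, float],
) -> float:
    """Weighted sum of per-target MSEs; only keys present in `weights` contribute."""
    return sum(w * mse(preds[k], targets[k]) for k, w in weights.items())


# Hypothetical toy predictions/labels keyed like targets="efsm"
preds = {"e": [-7.10], "f": [0.1, -0.2, 0.0], "s": [1.0], "m": [3.8]}
targets = {"e": [-7.00], "f": [0.0, 0.0, 0.0], "s": [0.0], "m": [4.0]}
weights = {"e": 1.0, "f": 1.0, "s": 0.1, "m": 0.1}  # illustrative weights only
print(weighted_loss(preds, targets, weights))
```

Down-weighting a target (here stress and magmom) keeps noisier or larger-magnitude labels from dominating the gradient.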

- The target quantity used for training should be energy/atom (not total energy) if you're fine-tuning the pretrained `CHGNet`.
- The pretrained dataset of `CHGNet` comes from GGA+U DFT with `MaterialsProject2020Compatibility` corrections applied. The VASP parameters are described in `MPRelaxSet`. If you're fine-tuning with `MPRelaxSet`, it is recommended to apply the `MP2020` compatibility to your energy labels so that they're consistent with the pretrained dataset.
- If you're fine-tuning to functionals other than GGA, we recommend you refit the `AtomRef`.
- `CHGNet` stress is in units of GPa, and the unit conversion has already been included in `dataset.py`, so `VASP` stress can be directly fed to `StructureData`.
- To save time on the graph conversion step for each training run, we recommend you use `GraphData` defined in `dataset.py`, which reads graphs directly from a saved directory. To create saved graphs, see `examples/make_graphs.py`.
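Because the pretrained CHGNet targets energy per atom, total DFT energies need to be divided by the number of atoms in each cell before being passed as `energies` to `StructureData`. A minimal sketch of that conversion (`to_energy_per_atom` is a hypothetical helper; with pymatgen, `len(structure)` gives the atom count):

```python
from typing import List, Sequence


def to_energy_per_atom(total_energies: Sequence[float], n_atoms: Sequence[int]) -> List[float]:
    """Convert total energies (eV) to energies per atom (eV/atom)."""
    if len(total_energies) != len(n_atoms):
        raise ValueError("need one atom count per energy")
    return [e / n for e, n in zip(total_energies, n_atoms)]


# Hypothetical total energies (eV) for a 4-atom and an 8-atom cell
print(to_energy_per_atom([-28.0, -60.0], [4, 8]))  # [-7.0, -7.5]
```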

The Materials Project trajectory (MPtrj) dataset used to pretrain CHGNet is available at figshare.

The MPtrj dataset consists of all the GGA/GGA+U DFT calculations from the September 2022 Materials Project. By using the MPtrj dataset, users agree to abide by the Materials Project terms of use.

If you use CHGNet or MPtrj dataset, please cite this paper:

```bibtex
@article{deng_2023_chgnet,
  title={CHGNet as a pretrained universal neural network potential for charge-informed atomistic modelling},
  DOI={10.1038/s42256-023-00716-3},
  journal={Nature Machine Intelligence},
  author={Deng, Bowen and Zhong, Peichen and Jun, KyuJung and Riebesell, Janosh and Han, Kevin and Bartel, Christopher J. and Ceder, Gerbrand},
  year={2023},
  pages={1--11}
}
```

CHGNet is under active development. If you encounter any bugs in installation and usage, please open an issue. We appreciate your contributions!