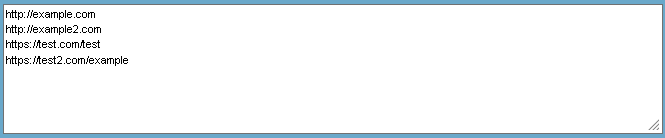

Link Gopher is a web browser extension that extracts all links from a web page, sorts them, removes duplicates, and displays them in a new tab for inspection or for copying and pasting into other systems.
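As a rough illustration of the extract, sort, and de-duplicate idea, here is a minimal Python sketch. It is not Link Gopher's actual code (the extension runs inside the browser); the names `LinkCollector` and `extract_links` are hypothetical, and it assumes that only `<a href>` values count as links.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Hypothetical helper: gathers href values from <a> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = set()  # a set drops duplicates automatically

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page URL.
                self.links.add(urljoin(self.base_url, value))


def extract_links(html, base_url):
    """Return the page's links, de-duplicated and sorted."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    return sorted(collector.links)


if __name__ == "__main__":
    page = '<a href="/b">B</a><a href="/a">A</a><a href="/b">B again</a>'
    for link in extract_links(page, "https://example.com/"):
        print(link)
```

Collecting into a set and sorting once at the end keeps the sketch short; the extension itself may handle these steps differently.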

To download and install the latest release:

- Link Gopher on Mozilla Add-ons for Firefox

- Link Gopher on Chrome Web Store for Google Chrome

Brief documentation is available.

Copyright (c) 2008, 2009, 2014, 2017, 2021, 2023 by Andrew Ziem. All rights reserved.

Licensed under the GNU General Public License, version 3 or later.