Here you can find the materials for the "Data Engineering 4: Using R in Production" course, part of the MSc in Business Analytics at CEU.

- Table of Contents

- Schedule

- Home assignment

- Week 1: Using R in the Cloud

- Week 2: Scaling R applications

- Week 3: Stream processing with R

- Recap

- Quiz

- Background: Example use-case and why to use R to do stream processing?

- Preparations

- Configuring for standard ports

- Setting up a demo stream

- A simple stream consumer app in R

- Parsing and structuring records read from the stream

- Stream processor daemon

- Shiny app showing the progress

- Dockerizing R scripts

- Contact

- 15:30 - 17:10 Session 1

- 17:10 - 17:30 Coffee break

- 17:30 - 19:10 Session 2

The goal of this assignment is to confirm that the students have a general understanding on how to build data pipelines using Amazon Web Services and R, and can actually implement a stream processing application (either running in almost real-time or batched/scheduled way) in practice.

To minimize the system administration and most of the engineering tasks for the students, the below pre-configured tools are provided as free options, but students can decide to build their own environment (on the top of or independently from these) and feel free to use any other tools:

CEU-Binancestream in the Ireland region of the central CEU AWS account with the real-time order data from the Binance cryptocurrency exchange on Bitcoin (BTC), Ethereum (ETH), Litecoin (LTC), Neo (NEO), Binance Coin (BNB) and Tether (USDT) -- including the the attributes of each transaction as specified at https://github.com/binance-exchange/binance-official-api-docs/blob/master/web-socket-streams.md#trade-streamsde4-week3Amazon Machine Image that you can use to spin up an EC2 node with RStudio Server, Shiny Server, Jenkins, Redis and Docker installed & pre-configured (use the “ceu” username and “ceudata” password if asked for all services) along with the most often used R packages (including the ones we used for stream processing, egbotor,AWR.Kinesisand thebinancerpackage)gergely-week2EC2 IAM role with full access to Kinesis, Dynamodb, Cloudwatch and encrypt/decrypt access to the "all-the-keys" KMS keyde4KMS key that you can use to decrypt the below string to get a Slack access token for theceu-data-botuser:AQICAHhh7Ku/BWdSbCqos9k49Vnk1+WytvoesgX+1bOvLAlyegHa210D93pgytNnThR9qVVxAAAAmjCBlwYJKoZIhvcNAQcGoIGJMIGGAgEAMIGABgkqhkiG9w0BBwEwHgYJYIZIAWUDBAEuMBEEDKkH9le72xKmMsgjTQIBEIBT/7MbIV2sG6Hh+fb8BJQ9a6VNOZ1rhPAgvSET6IUdiki92fMZ6dDBOpmSSuaa3t8KIF9KtrlbQAYQNtPVHUvFl1GpyM0k8bD7jLSsUPeRjFNoI+Q=- lecture and seminar notes at https://github.com/daroczig/CEU-R-prod

Make sure to clean-up your EC2 nodes, security groups, keys etc created in the past weeks, as left-over AWS resources will contribute negative points to your final grade!

-

Minimal project (for grade up to "B"): schedule a Jenkins job that runs every hour and reads 250 messages from the "CEU-Binance" stream. Use this batch of data to

- Draw a barplot on the overall number of units per symbol in the "#bots-final-project" Slack channel based on the number of transactions returned from the Kinesis stream

- Get the current symbol prices from the Binance API, and compute the overall price of the 250 transactions in USD and print to the console

-

Suggested project (for grade up to "A"): Create a stream processing application using the

AWR.KinesisR package's daemon + Redis (similar to what we did on the 3rd week) to record the overall amount of coins exchanged on Binance (per symbol) in the most recent micro-batch (in other words, whatever records the Java daemon reports, sum up amount by symbol and store in Redis). No need to clear the cache ... so if a symbol was not included in a batch, don't update those keys in Redis. Create a Jenkins job that reads from this Redis cache and prints the overall value (in USD) of the transactions -- based on the coin prices reported by the Binance API at the time of request. Create at least two more additional charts that display a metric you find meaningful, and report in the "#bots-final-project" Slack channel.

- Create a PDF document that describes your solution and all the main steps involved with low level details: attach screenshots (includeing the URL nav bar and the date/time widget of your OS, so like full-screen and not area-picked screenshots) of your browser showing what you are doing in RStudio Server or eg Jenkins, make sure that the code you wrote is either visible on the screenshots, or included in the PDF. The minimal amount of screenshots are: EC2 creation, R code shown in your RStudio Server, Jenkins job config page, Jenkins job output, Slack channel notifications.

- STOP the EC2 Instance you worked on, but don’t terminate it, so we can start it and check how it works. Note that your instance will be terminated by us after the end of the class.

- Include the

instance_idon the first page of the PDF, along with your name or student id. - Upload the PDF to Moodle.

Midnight (CET) on April 19, 2020

Contact Mihaly Orsos (TA) on the ceu-bizanalytics Slack or at [email protected]

Goal: learn how to run and schedule R jobs in the cloud.

- http://bit.ly/budapestdata-2018-dbs-in-a-startup (presented at the Budapest Data Forum in 2018)

- http://bit.ly/daroczig-rstudio-conf-2020 (presented at the RStudio::conf in 2020)

-

Use the central CEU AWS account: https://ceu.signin.aws.amazon.com/console

-

Secure your access key(s), other credentials and any login information ...

... because a truly wise person learns from the mistakes of others!

"When I woke up the next morning, I had four emails and a missed phone call from Amazon AWS - something about 140 servers running on my AWS account, mining Bitcoin" -- Hoffman said

"Nevertheless, now I know that Bitcoin can be mined with SQL, which is priceless ;-)" -- Uri Shaked

So set up 2FA (go to IAM / Users / username / Security credentials / Assigned MFA device): https://console.aws.amazon.com/iam

PS probably you do not really need to store any access keys, but you may rely on roles (and the Key Management Service, and the Secrets Manager and so on)

-

Let's use the

eu-west-1Ireland region

Note: we follow the instructions on Windows in the Computer Lab, but please find below how to access the boxes from Mac or Linux as well when working with the instances remotely.

-

Create (or import) an SSH key in AWS (EC2 / Key Pairs): https://eu-west-1.console.aws.amazon.com/ec2/v2/home?region=eu-west-1#KeyPairs:sort=keyName

-

Get an SSH client:

-

Windows -- Download and install PuTTY: https://www.putty.org

-

Mac -- Install PuTTY for Mac using homebrew or macports

sudo brew install putty sudo port install putty

-

Linux -- probably the OpenSSH client is already installed, but to use the same tools on all operating systems, please install and use PuTTY on Linux too, eg on Ubuntu:

sudo apt install putty

-

-

Convert the generated pem key to PuTTY formatNon need to do this anymore, AWS can provide the key as PPK now.-

GUI: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/putty.html#putty-private-key

-

CLI:

puttygen key.pem -O private -o key.ppk

-

-

Make sure the key is readable only by your Windows/Linux/Mac user, eg

chmod 0400 key.ppk

-

Create a tiny EC2 instance

- Optional: create an Elastic IP for your box

- Go the the Instances overview at https://eu-west-1.console.aws.amazon.com/ec2/v2/home?region=eu-west-1#Instances:sort=instanceId

- Click "Launch Instance"

- Pick the

Ubuntu Server 18.04 LTS (HVM), SSD Volume TypeAMI - Pick

t3.microinstance type (see more instance types) - Click "Review and Launch"

- Pick a unique name for the security group after clicking "Edit Security Group"

- Click "Launch"

- Select your AWS key created above and launch

-

Connect to the box

- Specify the hostname or IP address

- Specify the key for authentication

- Set the username to

ubuntuon the Connection/Data tab - Save the Session profile

- Click the "Open" button

- Accept & cache server's host key

Alternatively, you can connect via a standard SSH client on a Mac or Linux, something like:

chmod 0400 /path/to/your/pem

ssh -i /path/to/your/pem -p 8000 ubuntu@ip-address-of-your-machine-

Look at the docs: https://www.rstudio.com/products/rstudio/download-server

-

Download Ubuntu

aptpackage listsudo apt update

-

Install dependencies

sudo apt install r-base gdebi-core

-

Try R

R

For example:

1 + 4 hist(mtcars$hp)

-

Install RStudio Server

wget https://download2.rstudio.org/server/bionic/amd64/rstudio-server-1.2.5033-amd64.deb sudo gdebi rstudio-server-1.2.5033-amd64.deb

-

Check process and open ports

rstudio-server status sudo rstudio-server status sudo systemctl status rstudio-server sudo ps aux| grep rstudio sudo netstat -tapen | grep LIST sudo netstat -tapen

-

Look at the docs: http://docs.rstudio.com/ide/server-pro/

-

Confirm that the service is up and running and the port is open

ubuntu@ip-172-31-12-150:~$ sudo netstat -tapen | grep LIST tcp 0 0 0.0.0.0:8787 0.0.0.0:* LISTEN 0 49065 23587/rserver tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 0 15671 1305/sshd tcp6 0 0 :::22 :::* LISTEN 0 15673 1305/sshd

-

Try to connect to the host from a browser on port 8787, eg http://foobar.eu-west-1.compute.amazonaws.com:8787

-

Realize it's not working

-

Open up port 8787 in the security group

-

Authentication: http://docs.rstudio.com/ide/server-pro/authenticating-users.html

-

Create a new user:

sudo adduser ceu -

Login & quick demo:

1+2 plot(mtcars) install.packages('fortunes') library(fortunes) fortune() fortune(200) system('whoami')

-

Reload webpage (F5), realize we continue where we left the browser :)

-

Demo the terminal:

$ whoami ceu $ sudo whoami ceu is not in the sudoers file. This incident will be reported.

-

Grant sudo access to the new user by going back to SSH with

rootaccess:sudo apt install -y mc sudo mc sudo mcedit /etc/sudoers sudo adduser ceu admin man adduser man deluser

Note 1: might need to relogin / restart RStudio / reload R / reload page

Note 2: you might want to add NOPASSWD to the sudoers file:

```sh

ceu ALL=(ALL) NOPASSWD:ALL

```

Although also note (3) the related security risks.

- Custom login page: http://docs.rstudio.com/ide/server-pro/authenticating-users.html#customizing-the-sign-in-page

- Custom port: http://docs.rstudio.com/ide/server-pro/access-and-security.html#network-port-and-address

-

Installing packages:

## don't do this at this point! ## install.packages('ggplot2')

-

Use binary packages instead via apt & Launchpad PPA:

sudo add-apt-repository ppa:marutter/rrutter sudo add-apt-repository ppa:marutter/c2d4u sudo apt-get update sudo apt-get upgrade sudo apt-get install r-cran-ggplot2 -

Ready to use it from R after restarting the session:

library(ggplot2) ggplot(mtcars, aes(hp)) + geom_histogram()

-

Get some real-time data and visualize it:

-

Install the

devtoolsR package and a few others (binary distribution) in the RStudio/Terminal:sudo apt-get install r-cran-devtools r-cran-data.table r-cran-httr r-cran-jsonlite r-cran-data.table r-cran-stringi r-cran-stringr r-cran-glue

-

Switch back to the R console and install the

binancerR package from GitHub to interact with crypto exchanges (note the extra dependency to be installed from CRAN):install.packages('snakecase') devtools::install_github('daroczig/binancer', upgrade_dependencies = FALSE)

-

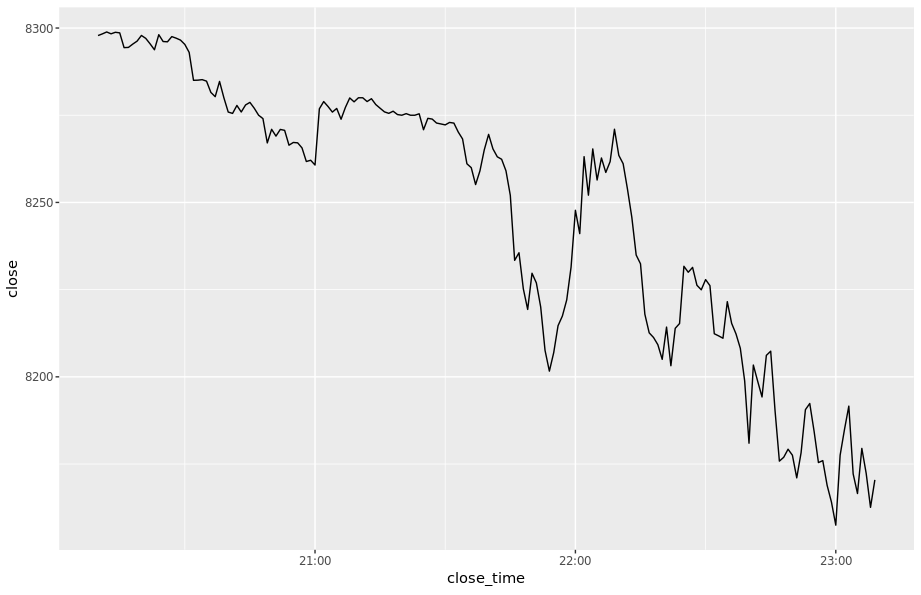

First steps with live data: load the

binancerpackage and then use thebinance_klinesfunction to get the last 3 hours of Bitcoin price changes (in USD) with 1-minute granularity -- resulting in an object like:> str(klines) Classes ‘data.table’ and 'data.frame': 180 obs. of 12 variables: $ open_time : POSIXct, format: "2020-03-08 20:09:00" "2020-03-08 20:10:00" "2020-03-08 20:11:00" "2020-03-08 20:12:00" ... $ open : num 8292 8298 8298 8299 8298 ... $ high : num 8299 8299 8299 8299 8299 ... $ low : num 8292 8297 8297 8298 8296 ... $ close : num 8298 8298 8299 8298 8299 ... $ volume : num 25.65 9.57 20.21 9.65 24.69 ... $ close_time : POSIXct, format: "2020-03-08 20:09:59" "2020-03-08 20:10:59" "2020-03-08 20:11:59" "2020-03-08 20:12:59" ... $ quote_asset_volume : num 212759 79431 167677 80099 204883 ... $ trades : int 371 202 274 186 352 271 374 202 143 306 ... $ taker_buy_base_asset_volume : num 13.43 5.84 11.74 7.12 15.24 ... $ taker_buy_quote_asset_volume: num 111430 48448 97416 59071 126493 ... $ symbol : chr "BTCUSDT" "BTCUSDT" "BTCUSDT" "BTCUSDT" ... - attr(*, ".internal.selfref")=<externalptr>

Click here for the code generating the above ...

library(binancer) klines <- binance_klines('BTCUSDT', interval = '1m', limit = 60*3) str(klines) summary(klines$close)

-

Visualize the data, eg on a simple line chart:

Click here for the code generating the above ...

library(ggplot2) ggplot(klines, aes(close_time, close)) + geom_line()

-

Now create a candle chart, something like:

Click here for the code generating the above ...

library(scales) ggplot(klines, aes(open_time)) + geom_linerange(aes(ymin = open, ymax = close, color = close < open), size = 2) + geom_errorbar(aes(ymin = low, ymax = high), size = 0.25) + theme_bw() + theme('legend.position' = 'none') + xlab('') + ggtitle(paste('Last Updated:', Sys.time())) + scale_y_continuous(labels = dollar) + scale_color_manual(values = c('#1a9850', '#d73027')) # RdYlGn

-

Compare prices of 4 currencies (eg ETH, ARK, NEO and IOTA) in the past 24 hours on 15 mins intervals:

Click here for the code generating the above ...

library(data.table) klines <- rbindlist(lapply( c('ETHBTC', 'ARKBTC', 'NEOBTC', 'IOTABTC'), binance_klines, interval = '15m', limit = 4*24)) ggplot(klines, aes(open_time)) + geom_linerange(aes(ymin = open, ymax = close, color = close < open), size = 2) + geom_errorbar(aes(ymin = low, ymax = high), size = 0.25) + theme_bw() + theme('legend.position' = 'none') + xlab('') + ggtitle(paste('Last Updated:', Sys.time())) + scale_color_manual(values = c('#1a9850', '#d73027')) + facet_wrap(~symbol, scales = 'free', nrow = 2)

-

Some further useful functions:

binance_ticker_all_prices()binance_coins_prices()binance_credentialsandbinance_balances

-

Create an R script that reports and/or plots on some cryptocurrencies, ideas:

- compute the (relative) change in prices of cryptocurrencies in the past 24 / 168 hours

- go back in time 1 / 12 / 24 months and "invest" $1K in BTC and see the value today

- write a bot buying and selling crypto on a virtual exchange

-

-

Install Jenkins from the RStudio/Terminal: https://pkg.jenkins.io/debian-stable/

wget -q -O - https://pkg.jenkins.io/debian-stable/jenkins.io.key | sudo apt-key add - echo "deb https://pkg.jenkins.io/debian-stable binary/" | sudo tee -a /etc/apt/sources.list sudo apt update sudo apt install openjdk-8-jdk-headless jenkins ## installing Java as well sudo netstat -tapen | grep java

-

Open up port 8080 in the related security group

-

Access Jenkins from your browser and finish installation

-

Read the initial admin password from RStudio/Terminal via

sudo cat /var/lib/jenkins/secrets/initialAdminPassword

-

Proceed with installing the suggested plugins

-

Create your first user (eg

ceu)

-

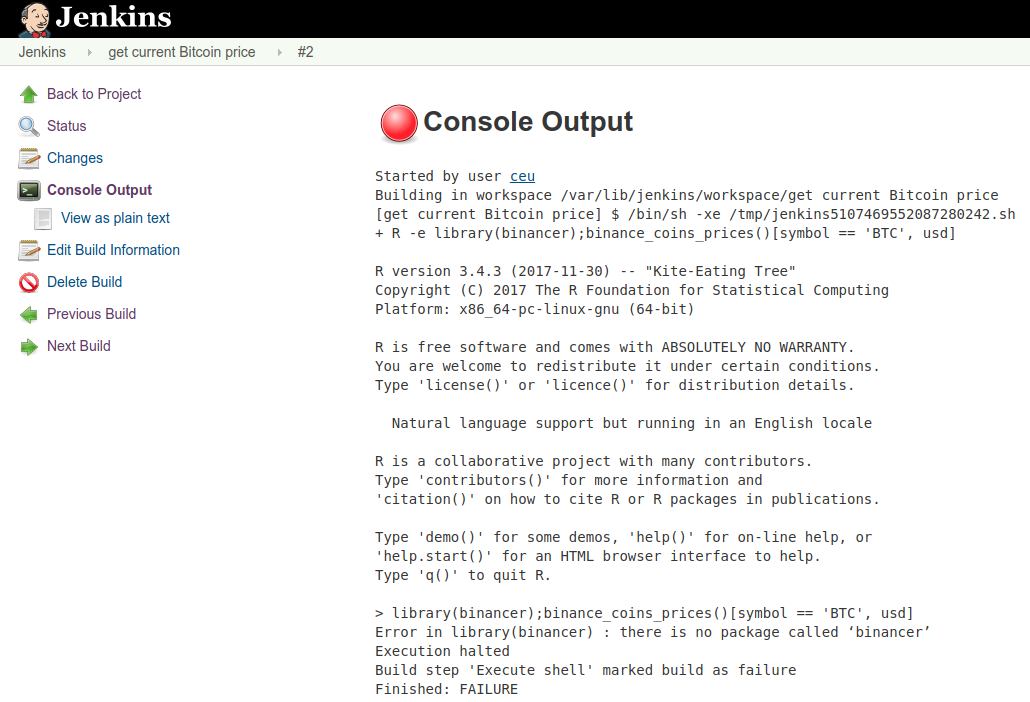

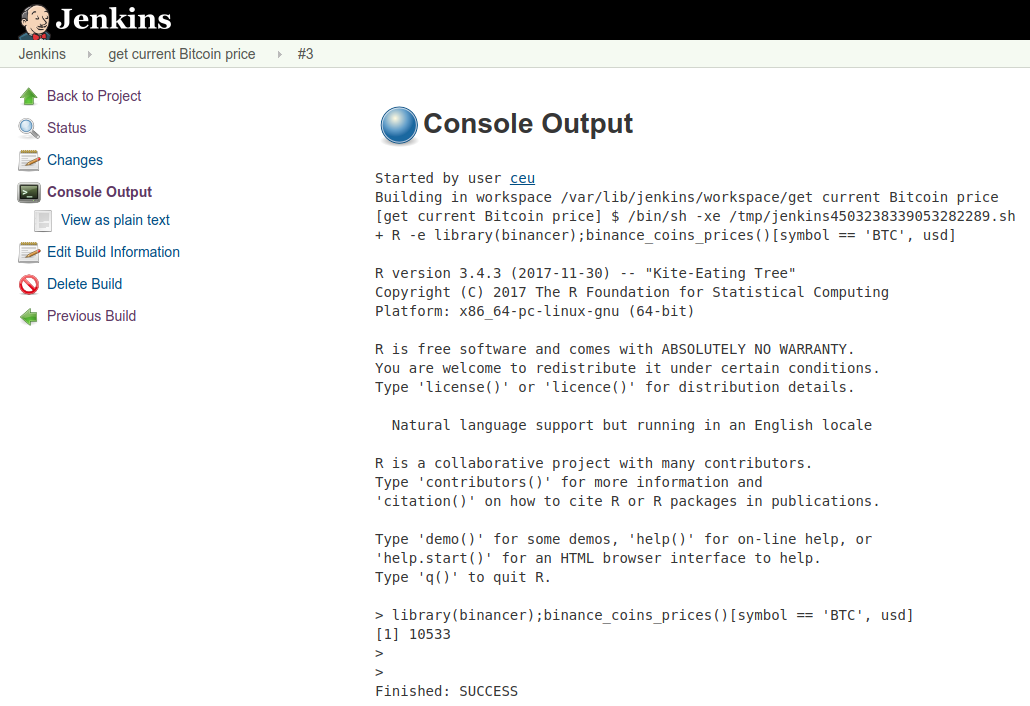

Let's schedule a Jenkins job to check on the Bitcoin prices every hour!

-

Log in to Jenkins using your instance's public IP address and port 8080

-

Use the

ceuusername andceudatapassword -

Create a "New Item" (job):

-

Enter the name of the job:

get current Bitcoin price -

Pick "Freestyle project"

-

Click "OK"

-

Add a new "Execute shell" build step

-

Enter the below command to look up the most recent BTC price

R -e "library(binancer);binance_coins_prices()[symbol == 'BTC', usd]" -

Run the job

-

-

Debug & figure out what's the problem ...

-

Install R packages system wide from RStudio/Terminal (more on this later):

sudo Rscript -e "library(devtools);with_libpaths(new = '/usr/local/lib/R/site-library', install_github('daroczig/binancer', upgrade_dependencies = FALSE))" -

Rerun the job

Read the rOpenSci Docker tutorial -- quiz next week! Think about why we might want to use Docker.

Hello folks, we'll start at 15:30.

- I highly recommended to get a second screen for your laptop: it can be a monitor attached to your laptop, or the TV in your living room connected via HDMI cable to your laptop, or if you have a spare tablet or laptop that you can use -- all should work fine. The goal is to have a dedicated screen for the video-conferencing, so that you can follow the stream (and ask questions), and have your usual laptop screen to do the exercises etc

- It's much better to use a headphone (compared to using the laptop speakers and microphone) so that you hear better all the others and also the others can better understand you

- Please click on the "mute" button on your end when not asking questions to reduce background noise

- Please DO feel free to ask questions and interact with the course at any time -- doing the class remotely doesn't mean you are just watching a video! Please raise your voice if something is not clear, and follow the exercises to make the most out of this unfortunate situation.

Quick intro to Google Hangouts / Meet:

- mute/unmute

- enable/disable camera (save bandwith and CPU cycles)

- share your screen or a window

- chat sidebar

- alternative way of reaching me: email, slack, moodle

What we convered last week:

- 2FA/MFA in AWS

- Creating EC2 nodes

- Connecting to EC2 nodes via SSH/Putty (note the difference between the PPK and PEM key formats)

- Updating security groups

- Installing RStudio Server

- The difference between R Console and Shell

- Adding new Linux users

- Granting

sudoaccess to Linux users - Installing R packages system-wide VS in the user's home folder

- Installing and setting up Jenkins

- Debugging issues, eg small instance with limited memory for compiling packages from source and user permissions

Note the above detailed steps for the above!

Also, in the below steps, you can skip running all the instructions prefixed with 💪 -- as the Amazon AMI has already configured so for your convenience, and these steps are just here as an FYI if you want to reproduce the same environment later (eg for a hobby project or work).

Please fill in the form at https://goo.gl/forms/oKQ0zILuQljgBxyH3

- Log in to the central CEU AWS account: https://ceu.signin.aws.amazon.com/console

- Did you use 2FA / MFA?!

- Use the Ireland regiuon

- Go to the EC2 console at https://eu-west-1.console.aws.amazon.com/ec2/v2/home?region=eu-west-1#Instances:sort=instanceId

- Realize the mess we left there from last week! 😱 Fix it. Kill your old security groups as well. Remove unneded keys.

- 💪 Check on the wasted money in the AWS Cost Explorer

- Create a new

t3.microinstance using thede4-week3AMI andt3.smallinstance size using a new security group with a unique name and opening up the 22 (ssh), 8000 (alternate ssh), 8787 (rstudio) and 8080 (jenkins) ports - Log in to RStudio using the new instance's public IP address and 8787 port, then the

ceuusername andceudatapassword - Check if the price of a Bitcoin is more than $4,000 (feel free to scroll up to get some hints from last week's R scripts)

Optionally you can associate a fixed IP address to your box:

- Allocate a new Elastic IP address at https://eu-west-1.console.aws.amazon.com/ec2/v2/home?region=eu-west-1#Addresses:

- Name this resource by assigning a "Name" tag

- Associate this Elastic IP with your stopped box, then start it

Optionally you can associate a subdomain with your node, using the above created Elastic IP address:

-

Go to Route 53: https://console.aws.amazon.com/route53/home

-

Go to Hosted Zones and click on

ceudata.net -

Create a new Record, where

- fill in the desired

Name(subdomain) - paste the public IP address or hostname of your server in the

Valuefield - click

Create

- fill in the desired

-

Now you will be able to access your box using this custon (sub)domain, no need to remember IP addresses.

-

Learn about little R: https://github.com/eddelbuettel/littler

-

Set up e-mail notifications via eg mailjet.com

-

Sign up, confirm your e-mail address and domain

-

Take a note on the SMTP settings, eg

- SMTP server: in-v3.mailjet.com

- Port: 465

- SSL: Yes

- Username: ***

- Password: ***

-

Configure Jenkins at http://SERVERNAME.ceudata.net:8080/configure

-

Set up the default FROM e-mail address: [email protected]

-

Search for "Extended E-mail Notification" and configure

- SMTP Server

- Click "Advanced"

- Check "Use SMTP Authentication"

- Enter User Name from the above steps from SNS

- Enter Password from the above steps from SNS

- Check "Use SSL"

- SMTP port: 465

-

-

Set up "Post-build Actions" in Jenkins: Editable Email Notification - read the manual and info popups, configure to get an e-mail on job failures and fixes

-

Configure the job to send the whole e-mail body as the deault body template for all outgoing emails

${BUILD_LOG, maxLines=1000} -

-

Look at other Jenkins plugins, eg the Slack Notifier: https://plugins.jenkins.io/slack

-

Create an R script with the below content and save on the server, eg as

/home/ceu/bitcoin-price.R:library(binancer) prices <- binance_coins_prices() sprintf('The current Bitcoin price is: %s', prices[symbol == 'BTC', usd])

-

Follow the steps from the Schedule R commands section to create a new Jenkins job, but instead of calling

R -e "..."in shell step, reference the above R script usingRscriptinstead

Rscript /home/ceu/de4.RWe need a persistent storage for our Jenkins jobs ... let's give a try to a key-value database:

-

💪 Install server

sudo apt install redis-server netstat -tapen | grep LIST -

💪 Install client

sudo Rscript -e "withr::with_libpaths(new = '/usr/local/lib/R/site-library', install.packages('rredis', repos='https://cran.rstudio.com/'))" -

Interact from R

## set up and initialize the connection to the local redis server library(rredis) redisConnect() ## set/get values redisSet('foo', 'bar') redisGet('foo') ## increment and decrease counters redisIncr('counter') redisIncr('counter') redisIncr('counter') redisGet('counter') redisDecr('counter') redisDecr('counter2') ## get multiple values at once redisMGet(c('counter', 'counter2'))

For more examples and ideas, see the rredis package vignette or try the interactive, genaral (not R-specific) redis tutorial.

-

Exercises

- Create a Jenkins job running every minute to cache the most recent Bitcoin and Ethereum prices in Redis

- Write an R script in RStudio that can read the Bitcoin and Ethereum prices from the Redis cache

Example solution ...

library(rredis)

redisConnect()

redisSet('price:BTC', binance_klines('BTCUSDT', interval = '1m', limit = 1)$close)

redisSet('price:ETH', binance_klines('ETHUSDT', interval = '1m', limit = 1)$close)

redisGet('price:BTC')

redisGet('price:ETH')

redisMGet(c('price:BTC', 'price:ETH'))Example solution using a helper function doing some logging ...

store <- function(symbol) {

print(paste('Looking up and storing', symbol))

redisSet(paste('price', symbol, sep = ':'),

binance_klines(paste0(symbol, 'USDT'), interval = '1m', limit = 1)$close)

}

store('BTC')

store('ETH')

## list all keys with the "price" prefix and lookup the actual values

redisMGet(redisKeys('price:*'))More on databases at the "Mastering R" class in the Spring semester ;)

- Join the #ba-de4-2019-bots channel in the

ceu-bizanalyticsSlack - 💪 A custom Slack app is already created at https://api.slack.com/apps/A9FBHCLPR, but feel free to create a new one and use the related app in the following steps

- Look up the app's bots in the sidebar

- Look up the Access Token

- Get familiar with the Slack Notifier Jenkins plugin: https://plugins.jenkins.io/slack

- Configure global Slack options (what about storing the token?)

- Set up post-hook actions to alert in Slack if a Jenkins job is failing

Slides at http://bit.ly/satrday-la-2018-daroczig

-

Do not store the token in plain-text!

-

Let's use Amazon's Key Management Service: https://github.com/daroczig/CEU-R-prod/raw/2017-2018/AWR.Kinesis/AWR.Kinesis-talk.pdf (slides 73-75)

-

💪 Instead of using the Java SDK, let's install

boto3Python module and use viareticulate:sudo apt install python4-pip sudo pip3 install boto3 sudo Rscript -e "withr::with_libpaths(new = '/usr/local/lib/R/site-library', install.packages('reticulate', repos='https://cran.rstudio.com/'))" sudo Rscript -e "library(devtools);withr::with_libpaths(new = '/usr/local/lib/R/site-library', install_github('daroczig/botor', upgrade_dependencies = FALSE))"

-

💪 Create a KMS key in IAM:

alias/de4 -

Grant access to that KMS key by creating an EC2 IAM role at https://console.aws.amazon.com/iam/home?region=eu-west-1#/roles with the

AWSKeyManagementServicePowerUserpolicy and explicit grant access to the key in the KMS console -

Attach the newly created IAM role

-

Use this KMS key to encrypt the Slack token:

library(botor) botor(region = 'eu-west-1') kms_encrypt('token', key = 'alias/de4')

-

Store the ciphertext and use

kms_decryptto decrypt later, see egkms_decrypt("AQICAHhh7Ku/BWdSbCqos9k49Vnk1+WytvoesgX+1bOvLAlyegHa210D93pgytNnThR9qVVxAAAAmjCBlwYJKoZIhvcNAQcGoIGJMIGGAgEAMIGABgkqhkiG9w0BBwEwHgYJYIZIAWUDBAEuMBEEDKkH9le72xKmMsgjTQIBEIBT/7MbIV2sG6Hh+fb8BJQ9a6VNOZ1rhPAgvSET6IUdiki92fMZ6dDBOpmSSuaa3t8KIF9KtrlbQAYQNtPVHUvFl1GpyM0k8bD7jLSsUPeRjFNoI+Q=")

-

💪 Install the Slack R client

sudo apt install r-cran-rlang r-cran-purrr r-cran-tibble r-cran-dplyr sudo R -e "withr::with_libpaths(new = '/usr/local/lib/R/site-library', install.packages('slackr', repos='https://cran.rstudio.com/'))" -

Init and send our first messages with

slackrlibrary(botor) token <- kms_decrypt("AQICAHhh7Ku/BWdSbCqos9k49Vnk1+WytvoesgX+1bOvLAlyegHa210D93pgytNnThR9qVVxAAAAmjCBlwYJKoZIhvcNAQcGoIGJMIGGAgEAMIGABgkqhkiG9w0BBwEwHgYJYIZIAWUDBAEuMBEEDKkH9le72xKmMsgjTQIBEIBT/7MbIV2sG6Hh+fb8BJQ9a6VNOZ1rhPAgvSET6IUdiki92fMZ6dDBOpmSSuaa3t8KIF9KtrlbQAYQNtPVHUvFl1GpyM0k8bD7jLSsUPeRjFNoI+Q=") library(slackr) slackr_setup(username = 'jenkins', api_token = token, icon_emoji = ':jenkins-rage:') text_slackr(text = 'Hi there!', channel = '#ba-de4-2019-bots')

-

A more complex message

library(binancer) prices <- binance_coins_prices() msg <- sprintf(':money_with_wings: The current Bitcoin price is: $%s', prices[symbol == 'BTC', usd]) text_slackr(text = msg, preformatted = FALSE, channel = '#ba-de4-2019-bots')

-

Or plot

library(ggplot2) klines <- binance_klines('BTCUSDT', interval = '1m', limit = 60*3) p <- ggplot(klines, aes(close_time, close)) + geom_line() ggslackr(plot = p, channels = '#ba-de4-2019-bots', width = 12)

Besides KMS, there are a few other great options as well, see eg the System Manager's Parameter Store at https://eu-west-1.console.aws.amazon.com/systems-manager/parameters/?region=eu-west-1 (which will need you to grant access to SSM for the related IAM role, eg via attaching the AmazonSSMReadOnlyAccess policy):

ssm_get_parameter('slack')We will also cover this in more details in the Mastering R class in the Spring semester.

- Create a Jenkins job to alert if Bitcoin price is below $3.8K or higher than $4K

- Create a Jenkins job to alert if Bitcoin price changed more than $200 in the past hour

- Create a Jenkins job to alert if Bitcoin price changed more than 5% in the past day

- Create a Jenkins job running hourly to generate a candlestick chart on the price of BTC and ETH

Example solution for the first exercise ...

## get data right from the Binance API

library(binancer)

btc <- binance_klines('BTCUSDT', interval = '1m', limit = 1)$close

## or from the local cache (updated every minute from Jenkins as per above)

library(rredis)

btc <- redisGet('price:BTC')

## log whatever was retreived

library(logger)

log_info('The current price of a Bitcoin is ${btc}')

## send alert

if (btc < 3800 | btc > 4000) {

library(botor)

token <- ssm_get_parameter('slack')

library(slackr)

slackr_setup(username = 'jenkins', api_token = token, icon_emoji = ':jenkins-rage:')

text_slackr(

text = paste('uh ... oh... BTC price:', btc),

channel = '#ba-de4-2019-bots')

}What we convered last week:

- AWS resurce cleanup

- New EC2 node using a custom AMI

- Concept of Elastic IP

- Using domain names

- Scheduling Jenkins jobs

- Introduction to Redis

- Interacting with Slack from R and Jenkins jobs

- Securely handle credentials in R

You can skip running all the below instructions prefixed with 💪 -- as the Amazon AMI has already configured so for your convenience, and these steps are just here as an FYI if you want to reproduce the same environment later (eg for a hobby project or work).

Instead of doing a quiz this week ... you get all the 10% for rather providing some (anonymous) feedback on this course at the end of the class today.

https://github.com/daroczig/CEU-R-prod/raw/2017-2018/AWR.Kinesis/AWR.Kinesis-talk.pdf (presented at the Big Data Day Los Angeles 2016, EARL 2016 London and useR! 2017 Brussels)

- Log in to the central CEU AWS account: https://ceu.signin.aws.amazon.com/console

- Did you use 2FA / MFA?!

- Use the Ireland regiuon

- Go to the EC2 console

- Realize the mess we left there from last week! 😱 Fix it. Kill your old security groups as well. Remove unneded keys. Left over AWS resources (created before March 24) will contribute negative points to the final grade of the student starting the AWS instance etc!

- Create a new

t3.smallinstance using thede4-week3AMI, thegergely-week2IAM role, and a new security group with a unique name and opening up the 22 (ssh), 8000 (alternate ssh), 8787 (rstudio) and 8080 (jenkins) ports - Log in to RStudio using the new instance's public IP address and 8787 port (or skip using the port and go with the

/rstudiopath as per below), then theceuusername andceudatapassword - Create and run once an R script in RStudio Server that reports in the #ba-de4-2019-bots channel that you are ready

To avoid using ports like 8787 and 8080, let's configure our services to listen on the standard 80 (HTTP) and potentially on the 443 (HTTPS) port as well, and serve RStudio on the /rstudio, and Jenkins on the /jenkins path.

For this end, we will use Nginx as a reverse-proxy, so let's install it first:

sudo apt install -y nginxThen we need to edit the main site's configuration at /etc/nginx/sites-enabled/default to act as a proxy, which also do some transformations, eg rewriting the URL (removing the /rstudio path) before hitting RStudio Server:

server {

listen 80;

rewrite ^/rstudio$ $scheme://$http_host/rstudio/ permanent;

location ^~ /rstudio {

rewrite ^/rstudio/(.*)$ /$1 break;

proxy_pass http://127.0.0.1:8787/$1;

proxy_redirect http://127.0.0.1:8787/ $scheme://$http_host/rstudio/;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $remote_addr;

proxy_set_header Host $host;

}

}

And restart Nginx:

sudo systemctl restart nginxLet's see if the port is open on the machine:

sudo netstat -tapen|grep LISTLet's see if we can access RStudio Server on the new path:

curl localhost/rstudioNow let's see from the outside world ... and realize that we need to open up port 80!

Now we need to tweak the config to support Jenkins as well, but the above Nginx rewrite hack will not work, so we will just make it a standard reverse-proxy, eg:

server {

listen 80;

rewrite ^/rstudio$ $scheme://$http_host/rstudio/ permanent;

location ^~ /rstudio {

rewrite ^/rstudio/(.*)$ /$1 break;

proxy_pass http://127.0.0.1:8787/$1;

proxy_redirect http://127.0.0.1:8787/ $scheme://$http_host/rstudio/;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $remote_addr;

proxy_set_header Host $host;

}

location ^~ /jenkins/ {

proxy_pass http://127.0.0.1:8080/jenkins/;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $remote_addr;

proxy_set_header Host $host;

}

}

And we also need to let Jenkins also know about the custom path, so edit the JENKINS_ARGS config in /etc/default/jenkins by adding:

--prefix=/jenkinsThen restart Jenkins, and good to go!

This way you can access the above services via the below URLs:

RStudio Server:

Jenkins:

If you cannot access RStudio Server on port 80, you might need to restart nginx as per above.

This section describes how to set up a Kinesis stream with 5 shards on the live Binance transactions read from its websocket -- running in a Docker container, then feeding the JSON lines to Kinesis via the Amazon Kinesis Agent.

-

Start a

t2.microinstance running "Amazon Linux AMI 2018.03.0" (where it's easier to install the Kinesis Agent compared to using eg Ubuntu) with a known key. Make sure to set a name and enable termination protection (in the instance details)! Use SSH, Putty or eg the browser-based SSH connection. -

Install Docker (note that we are not on Ubuntu today, but using Red Hat's

yumpackage manager):sudo yum install docker sudo service docker start sudo service docker status -

Let's use a small Python app relying on the Binance API to fetch live transactions and store in a local file:

- sources: https://github.com/daroczig/ceu-de3-docker-binance-streamer

- docker: https://cloud.docker.com/repository/registry-1.docker.io/daroczig/ceu-de3-docker-binance-streamer

Usage:

screen -RRd streamer sudo docker run -ti --rm daroczig/ceu-de3-docker-binance-streamer > /tmp/transactions.json ## "C-a c" to create a new screen, then you can switch with C-a " ls -latr /tmp tail -f /tmp/transactions.json -

Install the Kinesis Agent:

As per https://docs.aws.amazon.com/firehose/latest/dev/writing-with-agents.html#download-install:

sudo yum install -y aws-kinesis-agent -

Create a new Kinesis Stream (called

crypto) at https://eu-west-1.console.aws.amazon.com/kinesis -

Configure the Kinesis Agent:

sudo yum install mc sudo mcedit /etc/aws-kinesis/agent.jsonRunning the above commands, edit the config file to update the Kinesis endpoint, the name of the stream on the local file path:

{ "cloudwatch.emitMetrics": true, "kinesis.endpoint": "https://kinesis.eu-west-1.amazonaws.com", "firehose.endpoint": "", "flows": [ { "filePattern": "/tmp/transactions.json", "kinesisStream": "crypto", "partitionKeyOption": "RANDOM" } ] } -

Restart the Agent:

sudo service aws-kinesis-agent start -

Check the status and logs:

sudo service aws-kinesis-agent status sudo journalctl -xe ls -latr /var/log/aws-kinesis-agent/aws-kinesis-agent.log tail -f /var/log/aws-kinesis-agent/aws-kinesis-agent.log -

Make sure that the IAM role can write to Kinesis and Cloudwatch, eg by attaching the

AmazonKinesisFullAccesspolicy, then restart the agentsudo service aws-kinesis-agent restart -

Check the AWS console's monitor if all looks good there as well

-

Note for the need of permissions to

cloudwatch:PutMetricData

As the botor package was already installed, we can rely on the power of boto3 to interact with the Kinesis stream. The IAM role attached to the node already has the AmazonKinesisFullAccess policy attached, so we have permissions to read from the stream.

First we need to create a shard iterator, then using that, we can read the actual records from the shard:

library(botor)

botor(region = 'eu-west-1')

shard_iterator <- kinesis_get_shard_iterator('crypto', '0')

records <- kinesis_get_records(shard_iterator$ShardIterator)

str(records)Let's parse these records:

records$Records[[1]]

records$Records[[1]]$Data

library(jsonlite)

fromJSON(as.character(records$Records[[1]]$Data))Exercises:

- parse the loaded 25 records into a

data.tableobject with proper column types. Get some help on the data format from the Binance API docs! - count the overall number of coins exchanged

- count the overall value of transactions in USD (hint:

binance_ticker_all_prices()andbinance_coins_prices()) - visualize the distribution of symbol pairs

A potential solution that you should not look at before thinking ...

library(data.table)

dt <- rbindlist(lapply(records$Records, function(record) {

fromJSON(as.character(record$Data))

}))

str(dt)

setnames(dt, 'a', 'seller_id')

setnames(dt, 'b', 'buyer_id')

setnames(dt, 'E', 'event_timestamp')

## Unix timestamp / Epoch (number of seconds since Jan 1, 1970): https://www.epochconverter.com

dt[, event_timestamp := as.POSIXct(event_timestamp / 1000, origin = '1970-01-01')]

setnames(dt, 'q', 'quantity')

setnames(dt, 'p', 'price')

setnames(dt, 's', 'symbol')

setnames(dt, 't', 'trade_id')

setnames(dt, 'T', 'trade_timestamp')

dt[, trade_timestamp := as.POSIXct(trade_timestamp / 1000, origin = '1970-01-01')]

str(dt)

for (id in grep('_id', names(dt), value = TRUE)) {

dt[, (id) := as.character(get(id))]

}

str(dt)

library(binancer)

binance_coins_prices()

dt[, .N, by = symbol]

dt[symbol=='ETHUSDT']

dt[, from := substr(symbol, 1, 3)]

dt <- merge(dt, binance_coins_prices(), by.x = 'from', by.y = 'symbol', all.x = TRUE, all.y = FALSE)

dt[, value := as.numeric(quantity) * usd]

dt[, sum(value)]Let's write an R function to increment counters on the number of transactions per symbols:

-

Get sample raw data as per above (you might need to get a new shard iterator if expired):

records <- kinesis_get_records(shard_iterator$ShardIterator)$Record

-

Function to parse and process it

txprocessor <- function(record) { symbol <- fromJSON(as.character(record$Data))$s log_info(paste('Found 1 transaction on', symbol)) redisIncr(paste('symbol', symbol, sep = ':')) }

-

Iterate on all records

library(logger) library(rredis) redisConnect() for (record in records) { txprocessor(record) }

-

Check counters

symbols <- redisMGet(redisKeys('symbol:*')) symbols symbols <- data.frame( symbol = sub('^symbol:', '', names(symbols)), N = as.numeric(symbols)) symbols

-

Visualize

library(ggplot2) ggplot(symbols, aes(symbol, N)) + geom_bar(stat = 'identity')

-

Rerun step (1) and (3) to do the data processing, then (4) and (5) for the updated data visualization.

-

🤦♂️

-

Let's make use of the next shard iterator:

## reset counters redisDelete(redisKeys('symbol:*')) ## get the first shard iterator shard_iterator <- kinesis_get_shard_iterator('crypt', '0')$ShardIterator while (TRUE) { response <- kinesis_get_records(shard_iterator) ## get the next iterator shard_iterator <- response$NextShardIterator ## extract records records <- response$Record for (record in records) { txprocessor(record) } ## summarize symbols <- redisMGet(redisKeys('symbol:*')) symbols <- data.frame( symbol = sub('^symbol:', '', names(symbols)), N = as.numeric(symbols)) ## visualize print(ggplot(symbols, aes(symbol, N)) + geom_bar(stat = 'identity') + ggtitle(sum(symbols$N))) }

-

So far, we used the

boto3Python module from R viabotorto interact with AWS, but this time we will integrate Java -- by calling the AWS Java SDK to interact with our Kinesis stream, then later on to run a Java daemon to manage our stream processing application.- 💪 First, let's install Java and the

rJavaR package:

sudo apt install r-cran-rjava

- 💪 Then the R package wrapping the AWS Java SDK and the Kinesis client, then update to the most recent dev version right away:

sudo R -e "withr::with_libpaths(new = '/usr/local/lib/R/site-library', install.packages('AWR.Kinesis', repos='https://cran.rstudio.com/'))" sudo R -e "library(devtools);with_libpaths(new = '/usr/local/lib/R/site-library', install_github('daroczig/AWR.Kinesis'))"

- 💪 Note, after installing Java, you might need to run

sudo R CMD javareconfand/or restart R or the RStudio Server viasudo rstudio-server restart:/

Error : .onLoad failed in loadNamespace() for 'rJava', details: call: dyn.load(file, DLLpath = DLLpath, ...) error: unable to load shared object '/usr/lib/R/site-library/rJava/libs/rJava.so': libjvm.so: cannot open shared object file: No such file or directory

- And after all, a couple lines of R code to get some data from the stream via the Java SDK (just like we did above with the Python backend):

library(rJava) library(AWR.Kinesis) records <- kinesis_get_records('crypto', 'eu-west-1') str(records) records[1] library(jsonlite) fromJSON(records[1])

- 💪 First, let's install Java and the

-

Create a new folder for the Kinesis consumer files:

streamer -

Create an

app.propertiesfile within that subfolder

executableName = ./app.R

regionName = eu-west-1

streamName = crypto

applicationName = my_demo_app_sadsadsa

AWSCredentialsProvider = DefaultAWSCredentialsProviderChain

- Create the

app.Rfile:

#!/usr/bin/Rscript

library(logger)

log_appender(appender_file('app.log'))

library(AWR.Kinesis)

library(methods)

library(jsonlite)

kinesis_consumer(

initialize = function() {

log_info('Hello')

library(rredis)

redisConnect(nodelay = FALSE)

log_info('Connected to Redis')

},

processRecords = function(records) {

log_info(paste('Received', nrow(records), 'records from Kinesis'))

for (record in records$data) {

symbol <- fromJSON(record)$s

log_info(paste('Found 1 transaction on', symbol))

redisIncr(paste('symbol', symbol, sep = ':'))

}

},

updater = list(

list(1/6, function() {

log_info('Checking overall counters')

symbols <- redisMGet(redisKeys('symbol:*'))

log_info(paste(sum(as.numeric(symbols)), 'records processed so far'))

})),

shutdown = function()

log_info('Bye'),

checkpointing = 1,

logfile = 'app.log')-

💪 Allow writing checkpointing data to DynamoDB and CloudWatch in IAM

-

Convert the above R script into an executable using the Terminal:

cd streamer

chmod +x app.R- Run the app in the Terminal:

/usr/bin/java -cp /usr/local/lib/R/site-library/AWR/java/*:/usr/local/lib/R/site-library/AWR.Kinesis/java/*:./ \

com.amazonaws.services.kinesis.multilang.MultiLangDaemon \

./app.properties

- Check on

app.log

-

Reset counters

library(rredis) redisConnect() keys <- redisKeys('symbol*') redisDelete(keys)

-

💪 Install the

treemappackagesudo apt install r-cran-httpuv r-cran-shiny r-cran-xtable r-cran-htmltools r-cran-igraph r-cran-lubridate r-cran-tidyr r-cran-quantmod r-cran-broom r-cran-zoo r-cran-htmlwidgets r-cran-tidyselect r-cran-rlist r-cran-rlang r-cran-xml sudo R -e "devtools::with_libpaths(new = '/usr/local/lib/R/site-library', install.packages(c('treemap', 'highcharter'), repos='https://cran.rstudio.com/'))" -

Run the below Shiny app

## packages for plotting library(treemap) library(highcharter) ## connect to Redis library(rredis) redisConnect() library(shiny) library(data.table) ui <- shinyUI(highchartOutput('treemap', height = '800px')) server <- shinyServer(function(input, output, session) { symbols <- reactive({ ## auto-update every 2 seconds reactiveTimer(2000)() ## get frequencies symbols <- redisMGet(redisKeys('symbol:*')) symbols <- data.table( symbol = sub('^symbol:', '', names(symbols)), N = as.numeric(symbols)) ## color top 3 symbols[, color := 1] symbols[symbol %in% symbols[order(-N)][1:3, symbol], color := 2] ## return symbols }) output$treemap <- renderHighchart({ tm <- treemap(symbols(), index = c('symbol'), vSize = 'N', vColor = 'color', type = 'value', draw = FALSE) N <- sum(symbols()$N) hc_title(hctreemap(tm, animation = FALSE), text = sprintf('Transactions (N=%s)', N)) }) }) shinyApp(ui = ui, server = server, options = list(port = 3838))

Exercise: create a new GitHub repository with a Dockerfile installing botor (and its dependencies), binancer and slackr to be able to run the above jobs in a Docker container. Set up a DockerHub registry for the Docker image and start using in the Jenkins jobs.

Hints:

-

create a new GitHub repo

-

create a new RStudio project using the git repo

-

set the default git user on the EC2 box

git config --global user.email "[email protected]" git config --global user.name "Your Name"

-

create a Personal Access Token set up on GitHub for HTTPS auth on your EC2 box

-

example GitHub repo: https://github.com/daroczig/ceu-de3-docker-prep

-

example DockerHub repo: https://cloud.docker.com/repository/registry-1.docker.io/daroczig/ceu-de3-week5-prep

-

install Docker on EC2:

sudo apt update sudo apt install -y apt-transport-https ca-certificates curl software-properties-common curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add - sudo add-apt-repository \ "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" sudo apt-get update sudo apt-get install docker-ce

-

example run:

docker run --rm -ti daroczig/ceu-de3-week5-prep R -e "binancer::binance_klines('BTCUSDT', interval = '1m', limit = 1)[1, close]"

We are continuously trying to improve the content of this class and looking forward to any feedback and suggestions: https://forms.gle/C5YDtJNxj7kTHjxU9

File a GitHub ticket.