Comments (16)

@anirusharma A project ID is mentioned in the error log. Does it match the project ID you see in the console?

from dlp-dataflow-deidentification.

@santhh: No, it doesn't match. Somehow it is picking up some other, incorrect project ID.

@anirusharma A default project ID is picked up if the SA does not have the proper scopes and access. Please make sure the SA you are using has the following roles:

Cloud KMS CryptoKey Encrypter/Decrypter

Editor

Dataflow Admin

@santhh: Thanks. A screenshot is attached; the default Compute service account has access.

But instead of picking project number 725596089534, it still picks project number 770406736630 and fails.

Also, when I go to the API console for my correct project

https://console.developers.google.com/apis/api/cloudkms.googleapis.com/overview?project=725596089534

I can see all the KMS failures on the dashboard, but there is no detailed log showing why it picked project number 770406736630 instead of 725596089534.

I also tried modifying the command to add the parameter dlpProject=725596089534. This time the logs suggest it picks the correct project, but it still fails with the same error:

java.lang.RuntimeException: org.apache.beam.sdk.util.UserCodeException: com.google.api.client.googleapis.json.GoogleJsonResponseException: 404 Not Found

{
  "code" : 404,
  "errors" : [ {
    "domain" : "global",
    "message" : "Project '725596089534' not found.",
    "reason" : "notFound"
  } ],
  "message" : "Project '725596089534' not found.",
  "status" : "NOT_FOUND"
}

@anirusharma Can you please share the gradle run args and the use case you are trying to execute?

@santhh

I am running Example 1 from the README, which works fine with DirectRunner.

Below is the command:

gcloud dataflow jobs run test-run-2 --gcs-location gs://templates_test_as/dlp-tokenization --parameters inputFile=gs://input_as/test.csv,project=testbatch-211413,batchSize=4700,deidentifyTemplateName=projects/testbatch-211413/deidentifyTemplates/1771891382411767128,outputFile=gs://output_as/template_def_run,inspectTemplateName=projects/testbatch-211413/inspectTemplates/1771891382411767128,csek=CiQAaKcXyTkkel9lOqigD+YFIgawKgwix/gd3T1/EMi/4sr8X2ISSQAiB54ZlJA9vmJkrjxJH3n0RrABd/GhyRPmEFfCvDpkI5+01YBRNYT5id8dowi6SOjO+ZQ8YhRadfNCZZ6apTRcBuDkjlJnOf8=,csekhash=AXGVgPjfaO0JCI5QQyfyz08E1WVoKBxP7wKP9Vouthw=,fileDecryptKeyName=test,fileDecryptKey=quickstart

@anirusharma OK, assuming you are using a customer-supplied key for the GCS bucket, you don't need the inspect template name. Please take that argument out and try again.

Also, can you confirm that you created the template successfully and that the SA has access to the bucket?

Can you also try a gradle run without using the template? For example:

gradle run -DmainClass=com.google.swarm.tokenization.CSVBatchPipeline -Pargs="--streaming --project=--runner=DataflowRunner --inputFile=.csv --batchSize=4700 --dlpProject= --deidentifyTemplateName=projects//deidentifyTemplates/8658110966372436613 --outputFile=gs://output-tokenization-data/output-structured-data --csek=CiQAbkxly/0bahEV7baFtLUmYF5pSx0+qdeleHOZmIPBVc7cnRISSQD7JBqXna11NmNa9NzAQuYBnUNnYZ81xAoUYtBFWqzHGklPMRlDgSxGxgzhqQB4zesAboXaHuTBEZM/4VD/C8HsicP6Boh6XXk= --csekhash=lzjD1iV85ZqaF/C+uGrVWsLq2bdN7nGIruTjT/mgNIE= --fileDecryptKeyName=gcs-bucket-encryption --fileDecryptKey=data-file-key --workerHarnessContainerImage=dataflow.gcr.io/v1beta3/beam-java-streaming:beam-master-20180710"

@santhh

gcloud dataflow jobs run test-run-0 --gcs-location gs://templates_test_as/dlp-tokenization --parameters inputFile=gs://input_as/test.csv,project=testbatch-211413,batchSize=4700,deidentifyTemplateName=projects/testbatch-211413/deidentifyTemplates/1771891382411767128,outputFile=gs://output_as/template_def_run,inspectTemplateName=projects/testbatch-211413/inspectTemplates/1771891382411767128,csek=CiQAaKcXyTkkel9lOqigD+YFIgawKgwix/gd3T1/EMi/4sr8X2ISSQAiB54ZlJA9vmJkrjxJH3n0RrABd/GhyRPmEFfCvDpkI5+01YBRNYT5id8dowi6SOjO+ZQ8YhRadfNCZZ6apTRcBuDkjlJnOf8=,csekhash=AXGVgPjfaO0JCI5QQyfyz08E1WVoKBxP7wKP9Vouthw=,fileDecryptKeyName=test,fileDecryptKey=quickstart,dlpProject=testbatch-211413

Running the command above, I am able to get past this error by explicitly passing dlpProject set to my project name (not the project number, which I tried passing previously).

Now I am getting a new error:

java.lang.RuntimeException: org.apache.beam.sdk.util.UserCodeException: com.google.api.gax.rpc.PermissionDeniedException: io.grpc.StatusRuntimeException: PERMISSION_DENIED: Not authorized to access requested deidentify template.

com.google.cloud.dataflow.worker.GroupAlsoByWindowsParDoFn$1.output(GroupAlsoByWindowsParDoFn.java:183)

com.google.cloud.dataflow.worker.GroupAlsoByWindowFnRunner$1.outputWindowedValue(GroupAlsoByWindowFnRunner.java:102)

com.google.cloud.dataflow.worker.StreamingGroupAlsoByWindowReshuffleFn.processElement(StreamingGroupAlsoByWindowReshuffleFn.java:55)

com.google.cloud.dataflow.worker.StreamingGroupAlsoByWindowReshuffleFn.processElement(StreamingGroupAlsoByWindowReshuffleFn.java:37)

com.google.cloud.dataflow.worker.GroupAlsoByWindowFnRunner.invokeProcessElement(GroupAlsoByWindowFnRunner.java:115)

com.google.cloud.dataflow.worker.GroupAlsoByWindowFnRunner.processElement(GroupAlsoByWindowFnRunner.java:73)

com.google.cloud.dataflow.worker.GroupAlsoByWindowsParDoFn.processElement(GroupAlsoByWindowsParDoFn.java:133)

com.google.cloud.dataflow.worker.util.common.worker.ParDoOperation.process(ParDoOperation.java:43)

com.google.cloud.dataflow.worker.util.common.worker.OutputReceiver.process(OutputReceiver.java:48)

com.google.cloud.dataflow.worker.util.common.worker.ReadOperation.runReadLoop(ReadOperation.java:200)

com.google.cloud.dataflow.worker.util.common.worker.ReadOperation.start(ReadOperation.java:158)

com.google.cloud.dataflow.worker.util.common.worker.MapTaskExecutor.execute(MapTaskExecutor.java:75)

com.google.cloud.dataflow.worker.StreamingDataflowWorker.process(StreamingDataflowWorker.java:1227)

com.google.cloud.dataflow.worker.StreamingDataflowWorker.access$1000(StreamingDataflowWorker.java:136)

com.google.cloud.dataflow.worker.StreamingDataflowWorker$6.run(StreamingDataflowWorker.java:966)

java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

java.lang.Thread.run(Thread.java:745)

Caused by: org.apache.beam.sdk.util.UserCodeException: com.google.api.gax.rpc.PermissionDeniedException: io.grpc.StatusRuntimeException: PERMISSION_DENIED: Not authorized to access requested deidentify template.

What role do I need to add, and to which service account, to fix it?

@anirusharma Just to confirm: the project ID is a string (often the same as the project name), while the project number is numeric. The logs always reference the project number, but almost everywhere else in GCP you use the project ID. For example: project name my-dlp-project, project ID my-dlp-project, project number 5098765432. It's a little confusing if you are using GCP for the first time. You can see all three under Dashboard -> Project settings.
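
To make the ID/number distinction concrete, here is a minimal sketch of a shell check. The function name classify_project_ref is hypothetical (it is not part of this repo or of gcloud); it only applies the documented naming rules: a project number is all digits, and a project ID is 6 to 30 characters of lowercase letters, digits, and hyphens, starting with a letter and not ending with a hyphen.

```shell
# Hypothetical helper: guess whether a value looks like a GCP project
# number (all digits) or a project ID (6-30 chars, lowercase letters,
# digits and hyphens, starts with a letter, no trailing hyphen).
classify_project_ref() {
  if printf '%s\n' "$1" | grep -Eq '^[0-9]+$'; then
    echo "number"
  elif printf '%s\n' "$1" | grep -Eq '^[a-z][a-z0-9-]{4,28}[a-z0-9]$'; then
    echo "id"
  else
    echo "unknown"
  fi
}

classify_project_ref 725596089534      # prints "number"
classify_project_ref testbatch-211413  # prints "id"
```

If you have the ID and need the number, `gcloud projects describe PROJECT_ID --format='value(projectNumber)'` should print it.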

You can use the DLP Administrator role to fix the PERMISSION_DENIED error.
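
A rough sketch of granting that role from the command line, assuming the Dataflow workers run as the Compute Engine default service account (as described earlier in this thread). The two function names are made up for illustration, and the script only prints the gcloud command so you can review it before running it yourself. The project ID and number are the ones quoted in this thread.

```shell
# The Compute Engine default service account email is derived from the
# project NUMBER, not the project ID.
default_compute_sa() {
  printf '%s-compute@developer.gserviceaccount.com\n' "$1"
}

# Hypothetical helper: print (do not run) the IAM binding command that
# grants DLP Administrator (roles/dlp.admin) to that service account.
print_dlp_grant_cmd() {
  project_id="$1"
  project_number="$2"
  printf 'gcloud projects add-iam-policy-binding %s --member=serviceAccount:%s --role=roles/dlp.admin\n' \
    "$project_id" "$(default_compute_sa "$project_number")"
}

print_dlp_grant_cmd testbatch-211413 725596089534
```

If your job runs under a different service account, substitute its email in the --member value instead.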

@santhh: Thanks, I now understand the difference between project ID and project number.

Now I am getting this error:

java.lang.RuntimeException: org.apache.beam.sdk.util.UserCodeException: com.google.api.gax.rpc.PermissionDeniedException: io.grpc.StatusRuntimeException: PERMISSION_DENIED: Not authorized to access requested deidentify template.

The Compute Engine default service account has access to DLP. What could be causing this?

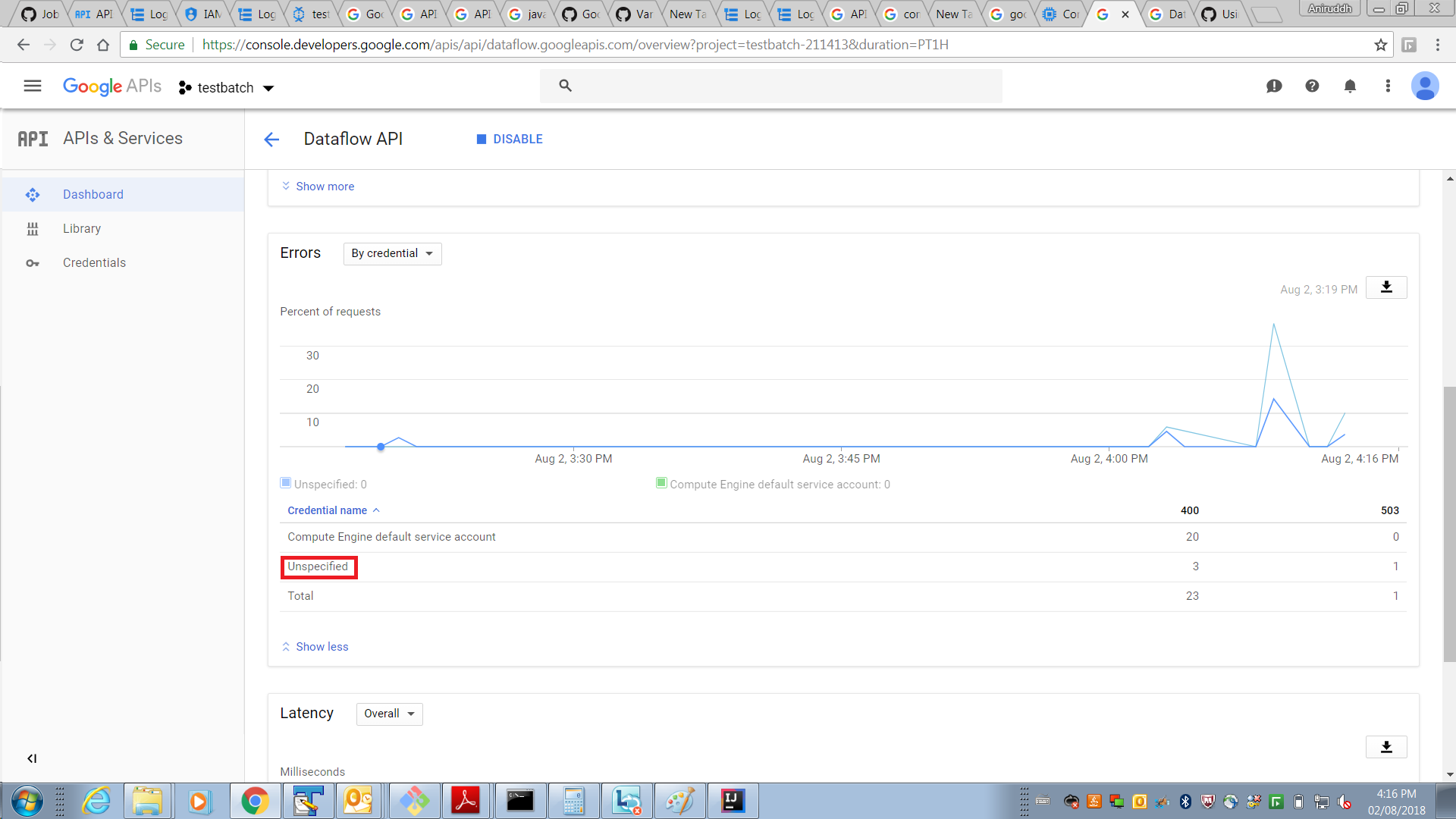

Interestingly, in the API dashboard I see the error attributed to an 'Unspecified' account. Please see the screenshot.

This dashboard shows errors in API calls, but I don't know where to find detailed logs that explain why the error happens. One API says the error occurs with the 'Unspecified' credential, while another says it occurs with the 'Compute Engine Default Service Account'. I am not sure which is the right place to check for the error.

By the way, the 'Compute Engine Default Service Account' has the DLP Administrator and DLP De-identify Templates Editor roles assigned to it.

@anirusharma Can you please check whether the VM instance created for the Dataflow job has its scope set to "full access to all Cloud APIs"?

In the UI it looks something like this:

Cloud API access scopes

Allow full access to all Cloud APIs

If not, you can set it with this gcloud command:

gcloud compute instances set-scopes [INSTANCE_NAME] --service-account [SERVICE_ACCOUNT_EMAIL] --scopes=https://www.googleapis.com/auth/cloud-platform

@santhh: Thanks. Full access to Cloud APIs was already enabled, and it still fails with the same error. As before, it may be failing because of some other problem while printing a misleading error message. I'm not sure.

@anirusharma OK. I would first check whether the issue relates to the SA. Can you try to get the template from the API Explorer using your own account?

If that works, can you use the Compute service account (create a JSON key file and point the GOOGLE_APPLICATION_CREDENTIALS environment variable at it) and do a curl on the REST API for re-identify?

That would at least help us understand whether the permission denied error relates to the service account.
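
As a sketch of that check: curl itself does not read GOOGLE_APPLICATION_CREDENTIALS, so one option (an assumption on my part, not something from the repo) is to let gcloud mint an access token from the key file and pass it as a bearer token. The function names and the key file path are hypothetical; the URL shape is the standard DLP v2 path for a de-identify template.

```shell
# Build the DLP v2 REST URL for a de-identify template.
dlp_template_url() {
  printf 'https://dlp.googleapis.com/v2/projects/%s/deidentifyTemplates/%s\n' "$1" "$2"
}

# Hypothetical helper: fetch the template using the service account whose
# JSON key file is given. Requires gcloud and curl; not called here.
fetch_template_as_sa() {
  key_file="$1"; project_id="$2"; template_id="$3"
  # Application Default Credentials honor GOOGLE_APPLICATION_CREDENTIALS.
  token="$(GOOGLE_APPLICATION_CREDENTIALS="$key_file" \
    gcloud auth application-default print-access-token)" || return 1
  curl -s -H "Authorization: Bearer $token" \
    "$(dlp_template_url "$project_id" "$template_id")"
}

# Print only the URL that would be requested.
dlp_template_url testbatch-211413 1771891382411767128
```

Usage would be something like `fetch_template_as_sa ./compute-sa-key.json testbatch-211413 1771891382411767128`. A JSON template in the response means the service account can read it; a PERMISSION_DENIED body points at IAM.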

@santhh: Yes, I ran the following command and could access the template with the service account, so the service account does have access.

curl -s -H 'Content-Type: application/json' -H 'Authorization: Bearer ya29.c.ElryBTIUyePsf4dD18KqOqFDTaRl6ikfOVhhhqJ68ntdDF1Jt1kXnGi1SySMBsqkWuDgC1VAzvT6nTvrBESq1IazvPKhJkb7Jdrqa1OsB0wd7rptHWHlxmvmU' 'https://dlp.googleapis.com/v2/projects/testbatch-211413/deidentifyTemplates/1771891382411767128'

It seems like it's some other error, but the logs are masking the real problem.

@santhh: Apologies, I made a mistake and have figured out the issue. The inspect template was not created correctly.

from dlp-dataflow-deidentification.

I am getting the same error when running the following:

curl -s -H 'Content-Type: application/json' -H 'Authorization: Bearer ya29.c.El8-BtTX7M7O0RNViHn-yc_jHdMo8Df-NzXhJQ8uJacZ4CiVsVIHDu9YoA6jbdpmfGq82FYWx70W1rtFDplYpUoGl2tj-iN4Bz2yi-IncbTVWWC8XSqWzx2M3JArCYOYKw' 'https://dlp.googleapis.com/v2/projects/alexa-practice-project/content:inspect' -d @table-inspect-request.json

@anirusharma How did you create a correct inspect template? Thanks in advance!
