Comments (7)

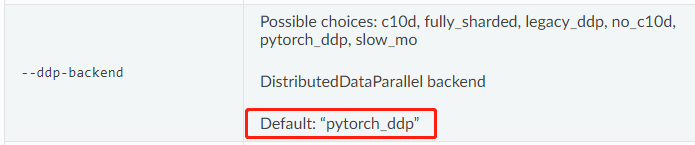

As I read the [fairseq documentation](https://fairseq.readthedocs.io/en/latest/command_line_tools.html#fairseq-train), fairseq uses distributed data parallel training by default. You used 32 GPUs, and the batch size is 8 with `--required-batch-size-multiple 1`, so each gradient-descent step computes the gradient over 32 × 8 = 256 examples. When I use 1 GPU, the effective batch size is only 1 × 8 = 8, so this makes a difference.
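The batch-size arithmetic above can be sketched in a few lines of Python. Two pieces are assumptions not stated in the thread: `update_freq` models fairseq's `--update-freq` option (accumulating gradients over several batches before each update), and `scaled_lr` applies the linear learning-rate scaling heuristic, which is a rule of thumb rather than anything confirmed for GENRE. The 3e-05 baseline is likewise hypothetical, taken from the largest learning rate in the table below.

```python
def effective_batch_size(num_gpus: int, batch_per_gpu: int, update_freq: int = 1) -> int:
    """Number of examples behind one optimizer step.

    With distributed data parallel, every GPU processes `batch_per_gpu`
    examples and the gradients are averaged across GPUs; `update_freq`
    (assumed to mirror fairseq's --update-freq) accumulates that many
    batches before stepping.
    """
    return num_gpus * batch_per_gpu * update_freq


def scaled_lr(base_lr: float, base_batch: int, new_batch: int) -> float:
    """Linear scaling heuristic: learning rate proportional to batch size."""
    return base_lr * new_batch / base_batch


if __name__ == "__main__":
    multi_gpu = effective_batch_size(num_gpus=32, batch_per_gpu=8)
    single_gpu = effective_batch_size(num_gpus=1, batch_per_gpu=8)
    accumulated = effective_batch_size(num_gpus=1, batch_per_gpu=8, update_freq=32)
    print(multi_gpu, single_gpu, accumulated)  # 256 8 256
    # Hypothetical baseline: pretend 3e-05 was tuned for the 256-example batch.
    print(scaled_lr(3e-05, base_batch=multi_gpu, new_batch=single_gpu))
```

Under that assumption the linear rule gives 3e-05 / 32 ≈ 9.4e-07, which is in the same range as the 1e-06 and 9e-07 rows below; the alternative is to keep the original learning rate and recover the 256-example step on one GPU with gradient accumulation (`update_freq=32`).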

I varied the learning rate: as it goes lower, performance gets better. From the table below, 1e-06 seems to be a suitable learning rate for 1 GPU.

| Model / learning rate | wiki-test-kilt | msnbc-test-kilt | aida-test-kilt | clueweb-test-kilt | aquaint-test-kilt | ace2004-test-kilt |
|---|---|---|---|---|---|---|
| author_aida_model | 83.02 | 83.54 | 87.92 | 68.75 | 84.32 | 84.82 |
| 3e-05 | 76.53 | 78.66 | 83.86 | 66.59 | 81.84 | 81.71 |
| 2e-05 | 79.70 | 81.25 | 85.11 | 67.86 | 81.84 | 84.05 |
| 1e-05 | 82.01 | 81.40 | 85.77 | 68.74 | 84.32 | 84.44 |
| 7e-06 | 82.23 | 82.62 | 86.27 | 68.74 | 84.46 | 84.44 |
| 5e-06 | 82.44 | 82.62 | 86.24 | 68.92 | 84.32 | 84.44 |
| 3e-06 | 82.85 | 83.08 | 86.85 | 68.97 | 84.32 | 85.21 |
| 1e-06 | 82.82 | 83.69 | 87.54 | 69.06 | 85.14 | 85.99 |
| 9e-07 | 82.94 | 83.99 | 87.38 | 69.11 | 85.01 | 85.60 |
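To check which learning rate is best overall rather than per dataset, one option is to average each row over the six test sets. The sketch below does that with the numbers copied from the table; the unweighted mean is an assumption (the test sets differ in size), so treat it only as a rough summary.

```python
from statistics import mean

# Scores from the table above, in column order:
# wiki, msnbc, aida, clueweb, aquaint, ace2004 (all *-test-kilt).
RESULTS = {
    "3e-05": [76.53, 78.66, 83.86, 66.59, 81.84, 81.71],
    "2e-05": [79.70, 81.25, 85.11, 67.86, 81.84, 84.05],
    "1e-05": [82.01, 81.40, 85.77, 68.74, 84.32, 84.44],
    "7e-06": [82.23, 82.62, 86.27, 68.74, 84.46, 84.44],
    "5e-06": [82.44, 82.62, 86.24, 68.92, 84.32, 84.44],
    "3e-06": [82.85, 83.08, 86.85, 68.97, 84.32, 85.21],
    "1e-06": [82.82, 83.69, 87.54, 69.06, 85.14, 85.99],
    "9e-07": [82.94, 83.99, 87.38, 69.11, 85.01, 85.60],
}


def rank_by_mean(results):
    """Return (learning_rate, mean_score) pairs, best first."""
    averaged = [(lr, mean(scores)) for lr, scores in results.items()]
    return sorted(averaged, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for lr, avg in rank_by_mean(RESULTS):
        print(f"{lr:>6}  {avg:.2f}")
```

With these numbers 1e-06 has the highest mean (about 82.37), just ahead of 9e-07 (about 82.34), which is consistent with the reading above.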

By the way, thanks for your consistently quick answers on this issue and on issue #56. I got the same trie as `kilt_titles_trie_dict.pkl` and got results similar to those of the model you shared.

Thanks again! Your guidance helps a lot.

from genre.

It looks like in the first table you are using a "trie tree build specially for aida", whereas in the second you are using the "WIKI trie tree 'kilt_titles_trie_dict.pkl'". So these two tables do not look comparable.


No, the first table also uses the Wikipedia trie, not a trie built specially for AIDA. I have now added the word "without" before it.


Try lowering the learning rate. I used many GPUs, so the gradient may have been more precise.
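The intuition behind "more GPUs, more precise gradient" is that averaging over more examples lowers the variance of the gradient estimate, roughly as σ²/B for B independent examples. The toy simulation below illustrates that scaling with scalar Gaussian "gradients"; it is a simplifying assumption, not a model of real BART/GENRE gradients.

```python
import math
import random
from statistics import pvariance


def minibatch_gradient_variance(batch_size: int, trials: int = 1000, seed: int = 0) -> float:
    """Empirical variance of the mini-batch mean of per-example gradients.

    Toy assumption: each per-example gradient is an independent scalar drawn
    from a Gaussian with mean 1.0 and standard deviation 1.0, so the
    theoretical variance of the mean is 1.0 / batch_size.
    """
    rng = random.Random(seed)
    estimates = [
        math.fsum(rng.gauss(1.0, 1.0) for _ in range(batch_size)) / batch_size
        for _ in range(trials)
    ]
    return pvariance(estimates)


if __name__ == "__main__":
    for batch in (8, 256):
        # Empirical variance next to the theoretical 1 / batch_size.
        print(batch, round(minibatch_gradient_variance(batch), 5), 1.0 / batch)
```

The 256-example average comes out roughly 32 times less noisy than the 8-example one, which is one common argument for pairing the smaller batch with a smaller learning rate.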


Hi, I guess this may be because you used a smaller trie (built for AIDA) for pretraining and fine-tuning, so the model's search space is smaller. That would bring some performance improvement.

Do you think this assumption is reasonable?
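The search-space argument can be made concrete: under constrained decoding, each step only permits tokens that continue some candidate title, so a smaller candidate set leaves fewer options to choose from. A minimal sketch, assuming whitespace tokens instead of BPE and a handful of made-up titles in place of the real KILT or AIDA candidate lists:

```python
from typing import List, Sequence, Set

EOS = "<eos>"


def tokenize(title: str) -> List[str]:
    """Toy whitespace tokenizer (an assumption; GENRE works on BPE tokens)."""
    return title.split() + [EOS]


def allowed_next_tokens(candidates: Sequence[Sequence[str]], prefix: Sequence[str]) -> Set[str]:
    """Tokens that extend `prefix` towards at least one candidate title."""
    n = len(prefix)
    return {
        seq[n]
        for seq in candidates
        if len(seq) > n and list(seq[:n]) == list(prefix)
    }


# Made-up title lists: a larger "Wikipedia-like" set and a small AIDA-like subset.
WIKI_LIKE = [tokenize(t) for t in [
    "Paris", "Paris Hilton", "Paris Saint-Germain F.C.", "London", "Germany",
    "Germany national football team",
]]
AIDA_LIKE = [tokenize(t) for t in ["Paris Saint-Germain F.C.", "Germany national football team"]]

if __name__ == "__main__":
    for name, cands in [("wiki-like", WIKI_LIKE), ("aida-like", AIDA_LIKE)]:
        print(name, sorted(allowed_next_tokens(cands, [])),
              sorted(allowed_next_tokens(cands, ["Paris"])))
```

A linear scan over the candidates is enough to show the effect; a prefix trie returns the same sets without scanning every title.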


I did not train with a trie, neither during pretraining nor during fine-tuning.


Yes, you didn't. I re-read Section 4.1: you pretrain and fine-tune just like a normal generation task, and only at test time do you use a trie to constrain the output.
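Using the trie only at test time means decoding is restricted to token sequences that spell a valid title. A minimal sketch of that idea, assuming word-level tokens, greedy search and a made-up scoring function; GENRE itself runs beam search over BPE tokens, and Hugging Face's `generate` exposes this kind of hook through its `prefix_allowed_tokens_fn` argument.

```python
from typing import Callable, Dict, List, Sequence

EOS = "<eos>"
Trie = Dict[str, "Trie"]


def build_trie(sequences: Sequence[Sequence[str]]) -> Trie:
    """Nested-dict prefix trie: every root-to-leaf path is an allowed output."""
    root: Trie = {}
    for seq in sequences:
        node = root
        for tok in seq:
            node = node.setdefault(tok, {})
    return root


def allowed(trie: Trie, prefix: Sequence[str]) -> List[str]:
    """Tokens that may follow `prefix`; empty if `prefix` has left the trie."""
    node = trie
    for tok in prefix:
        if tok not in node:
            return []
        node = node[tok]
    return list(node)


def constrained_greedy(trie: Trie, score: Callable[[List[str], str], float],
                       max_len: int = 16) -> List[str]:
    """Greedy decoding that only ever picks tokens the trie allows."""
    out: List[str] = []
    while len(out) < max_len:
        options = allowed(trie, out)
        if not options:
            break
        best = max(options, key=lambda tok: score(out, tok))
        out.append(best)
        if best == EOS:
            break
    return out


if __name__ == "__main__":
    titles = [["Paris", EOS], ["Paris", "Hilton", EOS], ["London", EOS]]
    # Hypothetical model scores: "Texas" scores highest, but no title contains it.
    prefs = {"Texas": 5.0, "Paris": 2.0, "Hilton": 1.0, EOS: 0.1}
    result = constrained_greedy(build_trie(titles), lambda prefix, tok: prefs.get(tok, 0.0))
    print(" ".join(result))  # Paris Hilton <eos>
```

Because the model is trained without the trie, the constraint only changes which of its scored continuations are eligible at inference time.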

But in Appendix A.1, the paper says: "we fine-tune on AIDA without resetting the learning rate nor the optimizer statistics for 10k steps."

I only use 1 GPU, while you used 32 GPUs. Could this explain the difference in results? I will try lowering the learning rate and see.
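Besides lowering the learning rate, one option for the 1-GPU versus 32-GPU gap is gradient accumulation: fairseq's `--update-freq N` accumulates gradients over N batches before each update, so one GPU with `--update-freq 32` sees the same 256 examples per step. The sketch below only assembles the relevant flags; the checkpoint path is a placeholder, 3e-05 is an assumed learning rate, and task, architecture and data flags are deliberately left out. It also assumes that restoring a checkpoint without `--reset-optimizer` or `--reset-lr-scheduler` keeps their saved state, which matches "without resetting the learning rate nor the optimizer statistics" only if the checkpoint actually contains that state.

```python
import math
import shlex
from typing import List


def update_freq_for(target_batch: int, num_gpus: int, batch_per_gpu: int) -> int:
    """Smallest --update-freq whose effective batch reaches `target_batch`."""
    return math.ceil(target_batch / (num_gpus * batch_per_gpu))


def finetune_flags(restore_file: str, lr: float, batch_per_gpu: int,
                   update_freq: int) -> List[str]:
    """Partial fairseq-train flags (task, arch and data flags omitted).

    No --reset-optimizer / --reset-lr-scheduler is passed, so fairseq should
    keep the optimizer and LR-scheduler state saved in `restore_file`.
    """
    return [
        "--restore-file", restore_file,
        "--lr", str(lr),
        "--batch-size", str(batch_per_gpu),  # older fairseq releases: --max-sentences
        "--required-batch-size-multiple", "1",
        "--update-freq", str(update_freq),
    ]


if __name__ == "__main__":
    freq = update_freq_for(target_batch=32 * 8, num_gpus=1, batch_per_gpu=8)
    # Hypothetical checkpoint path and learning rate.
    flags = finetune_flags("checkpoint_pretrained.pt", 3e-05, 8, freq)
    print(shlex.join(flags))
```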

