shaker's People

Forkers

p1llule openfnord alexwaibel tlaurion hpoa909 leveche tomhoover ldeso mylostone sienks-qubes ivan-pinatti alzer89

shaker's Issues

Reader template installation script fails

Qubes-OS R4.1.1

Here is what I get when I install the reader template. The package installs correctly, but the scriptlet fails to apply the salt state.

$ sudo qubes-dom0-update 3isec-qubes-reader

Using sys-whonix as UpdateVM to download updates for Dom0; this may take some time...

Qubes OS Repository for Dom0 2.9 MB/s | 3.0 kB 00:00

Qubes OS Repository for Dom0 21 kB/s | 1.1 kB 00:00

Dependencies resolved.

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

3isec-qubes-reader x86_64 0.1-1.fc32 qubes-dom0-cached 4.8 k

Transaction Summary

================================================================================

Install 1 Package

Total size: 4.8 k

Installed size: 1.6 k

Is this ok [y/N]: y

Downloading Packages:

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Installing : 3isec-qubes-reader-0.1-1.fc32.x86_64 1/1

Running scriptlet: 3isec-qubes-reader-0.1-1.fc32.x86_64 1/1

local:

Data failed to compile:

----------

State 'change_default' in SLS 'reader.clone' is not formed as a list

----------

State 'change_template' in SLS 'reader.clone' is not formed as a list

DOM0 configuration failed, not continuing

Verifying : 3isec-qubes-reader-0.1-1.fc32.x86_64 1/1

Installed:

3isec-qubes-reader-0.1-1.fc32.x86_64

Complete!

Mullvad - script references wireshark instead of wireguard

Deploy inotifywait script to modify repo definitions when they change

A limitation of cacher today is that it prevents Qubes OS from using AppVMs to report update status to dom0, so users have to check manually for package updates in all templates as part of their routine.

Another limitation is that many people install apps directly in qubes for a one-off need, without installing them in the template. With cacher this fails badly, for the same reason as the first limitation, and it is a blocker for mass adoption and upstreaming.

A solution to this would be to deploy an inotify script that runs as a service in the templates, and therefore also in the qubes based on them.

That service could detect (via qubesdb /type) whether it is running in a template and, if so, make sure all repos are apt-cacher-ng compliant; if not, it would transform the URLs back to the standard format.

Using inotifywait here would ensure that anyone can install whatever they want without even having to consider adapting to apt-cacher-ng. Just drop in the standard repo URL, bypass network restrictions to download the public key (still a problem, but I give up on that one: I guess extrepo is the expected mitigation, or the user is expected to know how to export the proxy address for wget/curl; that is outside this proposal), and you're good to go.

Updating/reinstalling the sys-cacher rpm, or re-applying the salt recipe on a restored or newly installed template, would be the only thing sys-cacher users would need to remember to do in dom0 (where a helper script could run the template salt calls too), making sys-cacher a nearly seamless solution.

Idea coming from:

What would still be needed on top of cacher is a wrapper of some sort that changes repo definition URLs as soon as they are added (probably per cacher deployment), making them apt-cacher-ng compliant before users try to use them; untransformed definitions simply fail. More thinking is needed to fix that. It could be a directory-watching daemon (inotifywait), triggered when files in the repo directory are modified, that applies a sed to transform all current https URLs to use apt-cacher-ng as part of cacher (or sys-whonix, or both). @unman, please check inotifywait. If that wrapper was deployed in templates per cacher, the whole problem of users adding repos after cacher deployment would be dealt with and become a non-issue, and it would not require the qubes updater to be modified either.
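The watcher idea above could be sketched roughly as follows. This is only an illustration under stated assumptions, not part of cacher: the function names (`to_cacher_url`, `rewrite_repo_file`, `watch_repos`), the default watched directory and the `--watch` switch are hypothetical choices. `inotifywait` comes from inotify-tools, and the `qubesdb-read /type` test follows the check suggested in the proposal.

```shell
#!/bin/sh
# Sketch only: rewrite https:// repo URLs into the http://HTTPS/// form
# used with apt-cacher-ng. All function names here are hypothetical.

# Filter: reads repo definition lines on stdin, writes rewritten lines.
to_cacher_url() {
    sed -e 's#https://#http://HTTPS///#g'
}

# Rewrite one file in place, but only when it still contains https://,
# so the write we trigger ourselves does not cause an endless loop.
rewrite_repo_file() {
    file=$1
    if grep -q 'https://' "$file"; then
        tmp=$(mktemp) || return 1
        to_cacher_url < "$file" > "$tmp" && cat "$tmp" > "$file"
        rm -f "$tmp"
    fi
}

# Watch a repo directory (assumed default: /etc/apt/sources.list.d) and
# rewrite any definition that is written or moved in. Templates only.
watch_repos() {
    dir=${1:-/etc/apt/sources.list.d}
    [ "$(qubesdb-read /type)" = "TemplateVM" ] || return 0
    inotifywait -m -q -e close_write -e moved_to --format '%w%f' "$dir" |
        while IFS= read -r changed; do
            rewrite_repo_file "$changed"
        done
}

# Start the watcher only when explicitly asked (e.g. from a service unit).
if [ "${1:-}" = "--watch" ]; then
    watch_repos "${2:-}"
fi
```

The rewrite is idempotent: a file that has already been converted no longer contains `https://`, so the event caused by the script's own write is ignored. Transforming URLs back to the standard format outside templates, as proposed, is left out of this sketch.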

Some comments on cacher rpm and doc discrepancies and invalid application

Bear with me: I am still learning my way around, and I am using this long-needed proxy to practice my understanding.

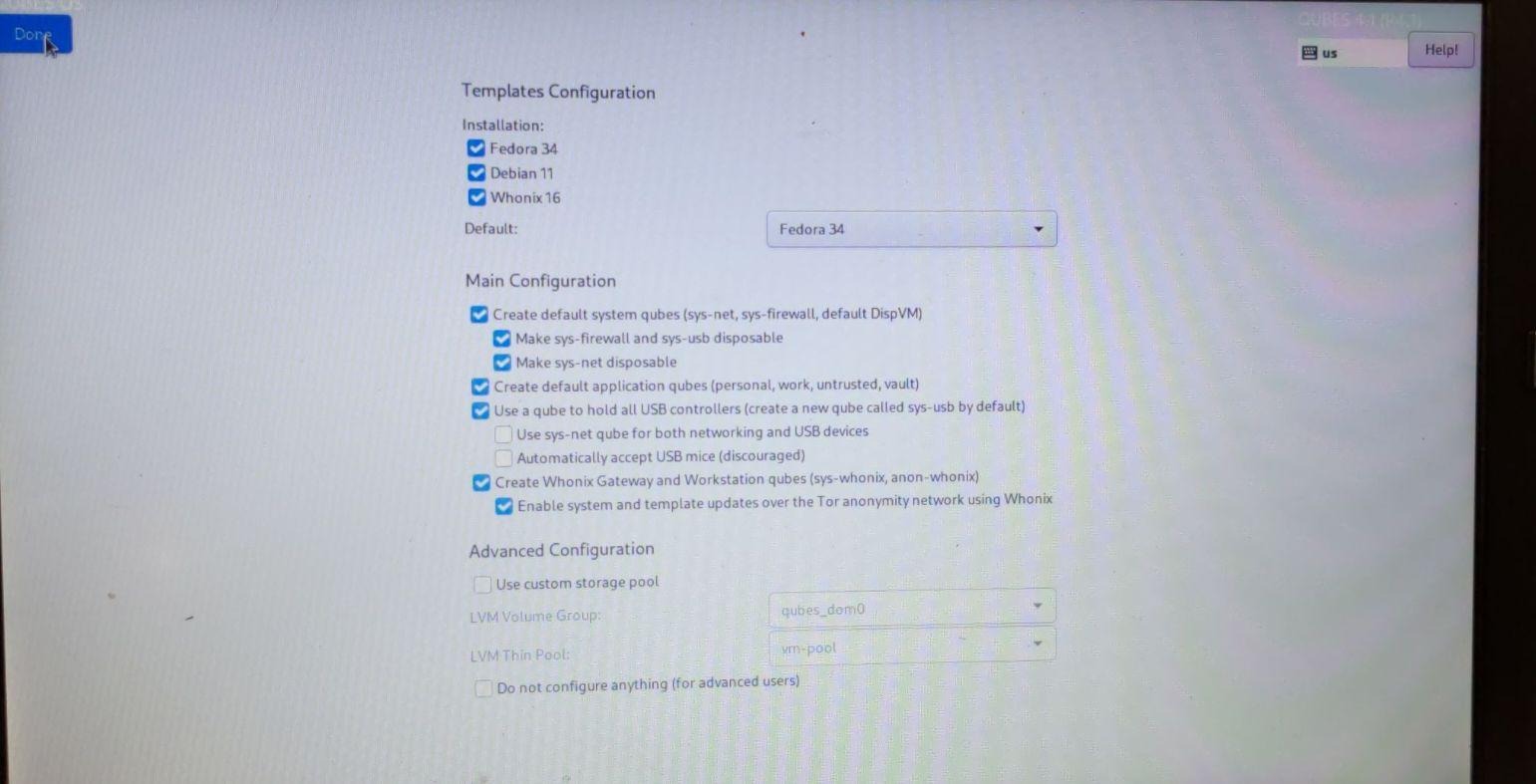

We are on Q4.1.1 here, with the latest whonix-ws and whonix-gw as well, installed with a default Qubes installation (the expected large audience).

I installed cacher from your repository. I will raise a separate issue about this later, but nothing got copied from the sys-whonix UpdateVM to dom0, so I had to qvm-run the rpm into dom0 with the redirection trick and then install it locally with rpm -i. That is not the point of this issue, which is aimed at making things work by default for anyone installing the long-awaited cacher:

1- The README is wrong, where cacher.spec is right, on how to apply change_templates.sls

Line 17 in 147c07e

Line 59 in 147c07e

If you intended by:

Line 16 in 147c07e

to mean --targets=Name_Of_Template, you should probably be more explicit about it (this is not so clear for newbies learning salt like myself). Still, it's cacher.change_templates, not cacher.change_templates.sls

2- change_templates.sls does not apply changes properly to Q4.1's own repos, since their definitions have spaces around the equals sign (baseurl = https://...), unlike other Fedora definitions.

shaker/cacher/change_templates.sls

Line 37 in 147c07e

What worked for me was to modify change_templates.sls to the following:

EDITED:

# vim: set syntax=yaml ts=2 sw=2 sts=2 et :
#
#
#

{% if grains['os_family']|lower == 'debian' %}
{% for repo in salt['file.find']('/etc/apt/sources.list.d/', name='*list') %}
{{ repo }}_baseurl:
  file.replace:
    - name: {{ repo }}
    - pattern: 'https://'
    - repl: 'http://HTTPS///'
    - flags: [ 'IGNORECASE', 'MULTILINE' ]

{% endfor %}

/etc/apt/sources.list:
  file.replace:
    - name: /etc/apt/sources.list
    - pattern: 'https:'
    - repl: 'http://HTTPS/'
    - flags: [ 'IGNORECASE', 'MULTILINE' ]

{% elif grains['os_family']|lower == 'arch' %}
pacman:
  file.replace:
    - names:
      - /etc/pacman.d/mirrorlist
      - /etc/pacman.d/99-qubes-repository-4.1.conf.disabled
    - pattern: 'https:'
    - repl: 'http://HTTPS/'
    - flags: [ 'IGNORECASE', 'MULTILINE' ]

{% elif grains['os_family']|lower == 'redhat' %}
{% for repo in salt['file.find']('/etc/yum.repos.d/', name='*repo*') %}
{{ repo }}_baseurl:
  file.replace:
    - name: {{ repo }}
    - pattern: 'baseurl=https://'
    - repl: 'baseurl=http://HTTPS///'
    - flags: [ 'IGNORECASE', 'MULTILINE' ]

{{ repo }}_baseurl_:
  file.replace:
    - name: {{ repo }}
    - pattern: 'baseurl = https://'
    - repl: 'baseurl = http://HTTPS///'
    - flags: [ 'IGNORECASE', 'MULTILINE' ]

{{ repo }}_metalink:
  file.replace:
    - name: {{ repo }}
    - pattern: 'metalink=https://(.*)basearch'
    - repl: 'metalink=http://HTTPS///\1basearch&protocol=http'
    - flags: [ 'IGNORECASE', 'MULTILINE' ]

{% endfor %}
{% endif %}
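As an aside, the two separate baseurl states above differ only in whitespace, so a single whitespace-tolerant pattern might cover both. The shell sketch below illustrates the idea with sed; `rewrite_baseurl` is a hypothetical name, and this is not what the state file actually does. Unlike the states above, it is case-sensitive and only matches lines that begin with `baseurl`, so commented-out definitions are left alone.

```shell
#!/bin/sh
# Sketch only: one expression that tolerates optional whitespace around
# "=" in yum/dnf repo definitions. The function name is hypothetical.

# Filter: reads a .repo file on stdin and writes the rewritten text.
rewrite_baseurl() {
    sed -E 's#^([[:space:]]*baseurl[[:space:]]*=[[:space:]]*)https://#\1http://HTTPS///#'
}
```

Both `baseurl=https://...` and `baseurl = https://...` come out with the original spacing preserved. In a salt state, the analogous change would presumably be a pattern with optional whitespace and a back-reference in `repl`, but that is untested here.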

3- I am not sure how to resolve Whonix complaining that no Tor-enabled UpdateVM is found, and I haven't found a solution for it yet:

WARNING: Execution of /usr/bin/apt prevented by /etc/uwt.d/40_qubes.conf because no torified Qubes updates proxy found.

Please make sure Whonix-Gateway (commonly called sys-whonix) is running.

- If you are using Qubes R3.2: The NetVM of this TemplateVM should be set to Whonix-Gateway (commonly called sys-whonix).

- If you are using Qubes R4 or higher: Check your _dom0_ /etc/qubes-rpc/policy/qubes.UpdatesProxy settings.

_At the very top_ of that file you should have the following:

$tag:whonix-updatevm $default allow,target=sys-whonix

To see if it is fixed, try running in Whonix TemplateVM:

sudo systemctl restart qubes-whonix-torified-updates-proxy-check

Then try to update / use apt-get again.

For more help on this subject see:

https://www.whonix.org/wiki/Qubes/UpdatesProxy

If this warning message is transient, it can be safely ignored.

https://qubes.3isec.org/tasks.html missing repo filename

https://qubes.3isec.org/3isec-qubes-task-manager-0.1-1.x86_64.rpm

expects the repo definition under /etc/yum.repos.d/3isec-dom0.repo,

while the instructions at https://qubes.3isec.org/tasks.html don't specify that a specific filename is required.

Cacher - allow cacher to be used for dom0 updates

Straightforward to do.

Worth caching those packages? Probably not at the moment.

Cacher package opens all templates/standalones on install

On installing the cacher package, all templates and standalones are opened.

Rewriting of repository definitions is only defined for Debian, Red Hat, and Arch derivatives.

Reported on the forum with respect to Windows templates.

It is possible to use salt on windows templates, but how many users will do that?

More importantly, will those other templates/qubes gracefully shut down?

Intended license for this project?

Shaker/tasks is being talked about on matrix for next QubesOS summit.

Questions that were asked are

- intended license for shaker/tasks

- possible integration paths under the QubesOS community repo

That happened here and on https://matrix.to/#/!aYyyGgWwbaJfJexJtF:matrix.org/$rmhrXBRY0Sq5IAPCLX5pWkLDRa2ONu2OGbC0xdvh9iA?via=matrix.org&via=invisiblethingslab.com&via=envs.net

Pihole relies on cacher

pihole/install.sls rewrites repos regardless of whether cacher exists.

This is just wrong.

Lacks some minimal doc

It requires quite some dedication today to make sense of this material. The following would IMHO tremendously improve its usefulness:

- a README for each directory: the one in cacher/ does help, and the format would also benefit from some enhancements (e.g. explain what each qubesctl invocation achieves; use markdown formatting)

- a toplevel README, which would possibly list projects in increasing complexity, and briefly explain what they demonstrate

Syncthing - package removal does not remove duplicate nft rules

On package removal inbound nft rules should be removed.

If there are duplicate rules, only the first is removed, leaving the other(s).

The in.sh script should remove all instances of the same rule.
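One way to remove every copy could be to collect all handles for the rule and delete them one by one. The sketch below is not taken from in.sh: the function names are hypothetical, and the family, table and chain are left as parameters because the values in.sh uses are not shown here. It relies on `nft -a list chain`, which prints a `# handle N` suffix for each rule, and on `nft delete rule ... handle N`.

```shell
#!/bin/sh
# Sketch only: find every handle of a given rule in "nft -a list chain"
# output, so that duplicates can all be deleted. Names are hypothetical.

# Reads an nft listing (with handles) on stdin and prints the handle of
# each line that contains the exact rule text given as $1.
handles_for_rule() {
    awk -v rule="$1" '
        index($0, rule) {
            for (i = 1; i < NF; i++)
                if ($i == "handle") print $(i + 1)
        }'
}

# Delete all copies of a rule from one chain.
# Arguments: family table chain "rule text"
delete_all_copies() {
    family=$1 table=$2 chain=$3 rule=$4
    nft -a list chain "$family" "$table" "$chain" |
        handles_for_rule "$rule" |
        while IFS= read -r handle; do
            nft delete rule "$family" "$table" "$chain" handle "$handle"
        done
}
```

Because the handles are gathered before any deletion starts, removing the first copy does not affect finding the others.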

cacher issues with spotify : "500 SSL error: certificate verify failed [IP: 127.0.0.1 8082]"

Upstream instructions at https://www.spotify.com/ca-en/download/linux/

Transformed to fit cacher requirements

curl -sS https://download.spotify.com/debian/pubkey_7A3A762FAFD4A51F.gpg | sudo gpg --dearmor --yes -o /etc/apt/trusted.gpg.d/spotify.gpg

echo "deb http://HTTPS///repository.spotify.com stable non-free" | sudo tee /etc/apt/sources.list.d/spotify.list

sudo apt-get update && sudo apt-get install spotify-client

Results in

E: Failed to fetch http://HTTPS///repository.spotify.com/dists/stable/InRelease 500 SSL error: certificate verify failed [IP: 127.0.0.1 8082]

E: Some index files failed to download. They have been ignored, or old ones used instead.

Any suggestions @unman ?

Why is CUPS a dependency for apt-cacher-ng?

Line 23 in d669a3d

As I try to keep CUPS out of most of my computery life, I can't help but wonder: Is CUPS really a necessary dependency, or just leftover from some other project/code?

Any way in salt to apply to all files?

shaker/cacher/change_templates.sls

Line 9 in 449db5c

I'm asking because, if it can, it should probably be applied to all repos deployed for Arch, Debian and Fedora in a separate change_all_templates.sls, and maybe even applied before Qubes updates when cacher is deployed?

(Don't hesitate to point me to your notes directly. That's it: you made me curious about salt again and motivated me to give it a real shot this time.)

Thank you @unman

Make whonix templates happy to use cacher

1- The following userinfo.html would need to be put at /usr/lib/apt-cacher-ng/userinfo.html in the cloned template-cacher so that the Whonix templates' proxy check detects a Tor-enabled local proxy (otherwise it fails):

<!DOCTYPE html>

<html lang="en">

<head>

<title>403 Filtered</title>

<meta http-equiv="Content-Type" content="text/html; charset=UTF-8" />

<meta name="application-name" content="tor proxy"/>

</head>

</html>

(As stated under #10 (comment) point 1)
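To illustrate what such a detection could look like, here is a minimal sketch that tests a fetched page for the marker tag shown above. It assumes the check keys on the `application-name` meta tag, which should be confirmed against the Whonix script itself; the function names are hypothetical, and 127.0.0.1:8082 is the proxy address that appears in the error messages elsewhere on this page.

```shell
#!/bin/sh
# Sketch only: test whether a proxy's HTML page carries the marker tag
# from userinfo.html. Function names are hypothetical.

# Succeeds when stdin contains the "tor proxy" application-name tag.
advertises_tor_proxy() {
    grep -q -F '<meta name="application-name" content="tor proxy"/>'
}

# Fetch the page from the local updates proxy and test it.
check_local_proxy() {
    curl --silent --max-time 10 http://127.0.0.1:8082/ | advertises_tor_proxy
}
```

Note that a page carrying this tag only claims to be a Tor proxy; it proves nothing about where the traffic actually goes.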

Syncthing - nft rules lost on reboot

nft rules configured for inbound connections are lost on reboot, even though configured with ./in.sh -p

This is because sys-* are disposable by default on 4.1

Cacher update proxy throws error for some repos

With some repositories correctly configured to use cacher, updates fail.

The reported error is:

503 Server reports unexpected range [IP: 127.0.0.1:8082]

Updates fail for that repository because InRelease cannot be downloaded.

Other repositories update well.

Can be seen with (e.g.):

http://HTTPS///apt.syncthing.net

License and authorship information?

Hi @unman!

Thanks for sharing these states! I am keen on using some of them (starting by the cacher state) that I'd like to adapt to my own needs. I usually package my own states and make them public mostly for convenience (example), I'd be keen on doing the same with any work derived from yours.

I haven't found any copyright statement or license information in the repo, though:

- under which license(s) are you publishing this repository?

- what does the corresponding copyright statement look like?

If that helps, I'm happy to open a PR with that information if you tell me your preferences. 🙂

@tlaurion as the only other contributor listed, I assume you'd be fine with @unman's choice of license, but I'd ask you what's the copyright statement you prefer for your own contributions 🙂

Share relies on cacher

share/install.sls rewrites repos regardless of whether cacher exists.

This is just wrong.

Cacher package postun defuncs split-gpg

3- Something isn't right here at cacher package uninstall:

Lines 67 to 70 in 3f59aac

It doesn't look like you are removing the cacher lines for the update proxy here; it looks more like a copy-paste of split-gpg:

Lines 38 to 41 in 3f59aac

Probably a bad copy-paste, but I think you would like to know before someone complains that uninstalling cacher makes split-gpg defunct.

(as quoted from #10 (comment) point 3)

Cacher incompatible with sys-whonix (updates fail for whonix-ws and whonix-gw)

EDIT:

- A working but undesirable implementation, in which the cacher proxy advertises itself as a guaranteed tor proxy even when that is false, is at: #10 (comment)

- The only way Whonix template updates could be cached like all other templates, with repo definitions modified to comply with apt-cacher-ng requirements, is if a cacher-whonix version was created that deactivates whonix-gw's tinyproxy and replaces it with apt-cacher-ng, so that sys-whonix would be the update+cache proxy.

When cacher is activated, whonix-gw and whonix-ws templates cannot be updated anymore, since both whonix-gw and whonix-ws templates implement a check through systemd qubes-whonix-torified-updates-proxy-check at boot.

Also, cacher overrides the Whonix recipes applied by salt at Qubes installation, which are deployed when the user specifies that all updates should be downloaded through sys-whonix.

The standard place where Qubes defines and applies policies for which update proxy to use is /etc/qubes-rpc/policy/qubes.UpdatesProxy, which contains on a standard install:

Whonix still has its policies deployed at the 4.0 standard place:

$type:TemplateVM $default allow,target=sys-whonix

$tag:whonix-updatevm $anyvm deny

whereas cacher writes its policies at the standard Q4.1 place:

Lines 7 to 9 in 3f59aac

First things first: I think both cacher and Whonix should agree on where UpdatesProxy settings are prepended/modified. Historically (and per the Qubes documentation as well), I think this should be /etc/qubes-rpc/policy/qubes.UpdatesProxy, for clarity and to avoid adding confusion.

Whonix policies need to be applied per the Q4.1 standard under Qubes, but that is not the subject of this issue.
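For illustration, the difference between the two policy locations is also a difference in line format, and a 4.0-style line can be mapped to the 4.1 style mechanically. The sketch below is an assumption-laden helper, not an existing Qubes tool: `convert_policy_line` is a hypothetical name, and the target format is inferred from the 30-user.policy line quoted further down in this issue.

```shell
#!/bin/sh
# Sketch only: turn a Qubes 4.0-style policy line into the 4.1 form.
# The function name is hypothetical.
#
# 4.0 (one file per service):  source dest action,param=value
# 4.1 (policy.d/*.policy):     service argument source dest action param=value
#
# Usage: convert_policy_line SERVICE < old-policy-file
convert_policy_line() {
    service=$1
    sed -e '/^[[:space:]]*$/b' \
        -e '/^[[:space:]]*#/b' \
        -e 's/\$/@/g' \
        -e 's/,/ /g' \
        -e "s/^/$service * /"
}
```

Blank lines and comments are passed through unchanged; every other line gets the service name and a `*` argument prepended, `$` tokens become `@` tokens, and comma-separated parameters become space-separated.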

The following applies proper tor+cacher settings:

shaker/cacher/change_templates.sls

Lines 5 to 13 in 3f59aac

Unfortunately, Whonix templates implement a sys-whonix usage check which prevents templates from using cacher.

This is documented over https://www.whonix.org/wiki/Qubes/UpdatesProxy, and is the result of qubes-whonix-torified-updates-proxy-check systemd service started at boot.

Source code of the script can be found at https://github.com/Whonix/qubes-whonix/blob/98d80c75b02c877b556a864f253437a5d57c422c/usr/lib/qubes-whonix/init/torified-updates-proxy-check

Hacking around the current internals of both projects, one can temporarily disable cacher so that the torified-updates-proxy-check succeeds and writes its success flag, which persists for the life of that booted TemplateVM. We can then reactivate cacher's added UpdatesProxy bypass, restart qubesd, and validate that cacher is able to deal with tor+http->cacher->tor+https on Whonix TemplateVMs:

1- deactivate cacher's override of the qubes.UpdatesProxy policy:

[user@dom0 ~]$ cat /etc/qubes/policy.d/30-user.policy

#qubes.UpdatesProxy * @type:TemplateVM @default allow target=cacher

2- restart qubesd

[user@dom0 ~]$ sudo systemctl restart qubesd

[user@dom0 ~]$

3- Manually restart whonix template's torified-updates-proxy-check (here whonix-gw-16)

user@host:~$ sudo systemctl restart qubes-whonix-torified-updates-proxy-check

We see that Whonix applied its state at:

https://github.com/Whonix/qubes-whonix/blob/98d80c75b02c877b556a864f253437a5d57c422c/usr/lib/qubes-whonix/init/torified-updates-proxy-check#L46

user@host:~$ ls /run/updatesproxycheck/whonix-secure-proxy-check-done

/run/updatesproxycheck/whonix-secure-proxy-check-done

4- Manually change cacher override and restart qubesd

[user@dom0 ~]$ cat /etc/qubes/policy.d/30-user.policy

qubes.UpdatesProxy * @type:TemplateVM @default allow target=cacher

[user@dom0 ~]$ sudo systemctl restart qubesd

5- check functionality of downloading tor+https over cacher from whonix template:

user@host:~$ sudo apt update

Hit:1 http://HTTPS///deb.qubes-os.org/r4.1/vm bullseye InRelease

Hit:2 tor+http://HTTPS///deb.debian.org/debian bullseye InRelease

Hit:3 tor+http://HTTPS///deb.debian.org/debian bullseye-updates InRelease

Hit:4 tor+http://HTTPS///deb.debian.org/debian-security bullseye-security InRelease

Hit:5 tor+http://HTTPS///deb.debian.org/debian bullseye-backports InRelease

Get:6 tor+http://HTTPS///fasttrack.debian.net/debian bullseye-fasttrack InRelease [12.9 kB]

Hit:7 tor+http://HTTPS///deb.whonix.org bullseye InRelease

Fetched 12.9 kB in 7s (1,938 B/s)

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

The problem with this is that Qubes update processes will start templates and try to apply updates unattended, and this manual workaround obviously won't work unattended.

The question is then how to have Whonix templates run a functional test to see that torified updates are possible, instead of Whonix assuming it is the only one providing the service. The code seems to implement a curl check, but it doesn't work even if cacher is exposed as a tinyproxy replacement listening on 127.0.0.1:8082. I am still digging, but in the end we need either a mitigation (disabling the Whonix check) or proper functional testing from Whonix, which should see that the torified repositories are actually accessible.

How to fix this?

Some hints:

1- cacher and whonix should modify the policy at the same place to ease troubleshooting and understanding of what is modified on the host system, even more when dom0 is concerned. I think cacher should prepend /etc/qubes-rpc/policy/qubes.UpdatesProxy

2- Whonix seems to have thought of a proxy check override:

https://github.com/Whonix/qubes-whonix/blob/685898472356930308268c1be59782fbbb7efbc3/etc/uwt.d/40_qubes.conf#L15-L21

@adrenalos: not sure this is the best option, and I haven't found where to trigger that override so that the check is bypassed?

3- At Qubes OS install, torified updates and torifying all network traffic (setting sys-whonix as the default gateway) are two different things, the latter not being enforced by default. Salt recipes are available to force updates through sys-whonix when selected at install, which dom0 still uses after cacher deployment:

So my setup picked sys-whonix as the default gateway for cacher, since I configured it to use the sys-whonix proxy as default, which permits tor+http/HTTPS to go through after applying the manual mitigations. That would not necessarily be the case for default deployments (this would need to be verified), sys-firewall being the default unless changed.

@unman: on that, I think the configure script should handle that corner case and make sure sys-whonix is the default gateway for cacher if Whonix is deployed. Alternatively, your solution may be meant to work independently of Whonix altogether (#6), but then there would be a discrepancy between Qubes installation options, what most users use, and what is available out of the box after installing cacher from the rpm:

Lines 50 to 60 in 3f59aac

4- That is, cacher cannot be used as of now for dom0 updates either. Assigning dom0 updates to cacher gives the following error from cacher:

sh: /usr/lib/qubes/qubes-download-dom0-updates.sh: not found

So when using cacher + sys-whonix, sys-whonix would still be used by dom0 (where caching would not necessarily make sense, since dom0 doesn't share the same Fedora version as the templates, although I understand this is intended to change in the near future).

The point here: sys-whonix would still offer its tinyproxy service, sys-whonix would still be needed to torify Whonix template updates, and dom0 would still depend on sys-firewall, not cacher, on a default install (without Whonix installed). Maybe a little more thought needs to be given to the long-term approach of pushing this amazing caching proxy forward without breaking things for willing testers :)

@fepitre: adding you to see if you have additional recommendations; feel free to tag Marek later on if you feel like it. This caching proxy is a really long-awaited feature (QubesOS/qubes-issues#1957) and would be a life changer, with salt recipes, cloning from debian-11-minimal, specializing templates for different use cases, and eventually a salt store being the desired outcome of all this.

Thank you both. Looking forward to a cacher that can be deployed as "rpm as a service" on default installations.

We are close to that, but as of now it still doesn't work out of the box and seems to need a bit of collaboration from you both.

Thanks guys!

Tor qube recipe missing

In a recent post on the forum, you said you do not use Whonix.

This explains why cacher is not taking it into consideration.

But if all recipes take a tor netvm into consideration, shouldn't the creation of such a netvm also be salted?

Add dark mode tweaks to dom0 and templates

Openvpn - Menu item not created

Although the menu item for vpn_setup is set as part of the config, no Menu item is actually created.

Instead the item is in the "selected" list with the "item is not installed" note.

Users have to manually refresh the application list.

Cacher does not allow access from connected qubes

Configuration still retains iptables commands, which are not recognised in debian-12.

How to manage dom0 qvm-template requirements?

Rationale behind installing custom /etc/apt-cacher-ng/fedora_mirrors (in AppVM state)

- This might be a small bug in the configuration, but afaiu the extended fedora_mirrors will currently not be persisted, since 50_user.conf is missing binds+=( '/etc/apt-cacher-ng' ):

binds+=( '/var/cache/apt-cacher-ng' )

binds+=( '/var/log/apt-cacher-ng' )

- May I ask why you added packages.oit.ncsu.edu to fedora_mirrors and chose to install it in the AppVM instead of the TemplateVM? I'm adapting your configurations and would like to understand the reasoning, in case it's important.

Thank you for your extensive notes on Qubes and Salt btw, they are beyond helpful.

Remove monero support

Monero is no longer packaged under Whonix, and the formula depends on Whonix 16.

media qube calls Disposable allowing for editing

As title - should be called with view option.

Users can still opt to use the multimedia or other qubes for editing, but this is not the main aim here.

shaker, package manager and cacher not compatible with Q4.2

Raw notes since I did a quick attempt overnight weeks ago.

- packages not avail for q4.2

- When installing 4.1 packages over q4.2, the installer is complaining about dependencies not being available

- I attempted to install cacher (a lifesaver), but the policy file changed, and Q4.2 has a policy manager which parses the policies for the update proxy and doesn't find it.

Basically, Q4.1 and Q4.2 need to be dealt with separately.

I would also love some guidance on having qubes-update-check + updates-proxy-setup enabled by default in the services of newly created qubes, so that qubes can install applications through cacher. The packages could then be reused in the template if the user decides to install them permanently, being downloaded only once.

I understand that Whonix won't use cacher. Not much of a problem.

I'm asking this now because wyng-qubes-util will support TLVM<->Btrfs restoration soon, and I would love to be able to test a Qubes install on Btrfs and have a basic setup working with my qubes and templates restored, then just install new templates and replicate package installation on the newly deployed templates. Since data usage is always a struggle for me, cacher is a dependency.

Btrfs + bees holds some really promising potential and is one of my priorities to test. I would love to prove that deduplication at pool level on restoration, plus the Btrfs speed increase vs TLVM, is a real thing, and that wyng-qubes-util is a lifesaver for backing up and restoring on Btrfs when deduplication is in effect at pool level. The test would be pretty simple: back up everything, then restore on TLVM on another test laptop and compare consumed space.

All of this would be possible if cacher were available on Q4.2, which is progressing fast and seems to be becoming pretty stable!
