SA360 WebQuery to BigQuery Exporter

Background

Large SA360 customers want to build custom reports by combining their 1st party data with paid-search data.

The Easiest way to achieve that is by combining the data in BigQuery. There are two ways to programmatically import SA360 data into BigQuery

WebQuery makes reporting easier compared to the API (with less steps), as it allows adding additional entity data (e.g. Business data) in the report, which makes the report simple as compared to an API where this stitching has to be done in a user's program.

How does it work?

The tool uses Service account credentials to extract Reports from SA360 and also send data into BigQuery.

First the system extracts the Report (in XML format) from SA360 and converts it into CSV on the fly (using SAX parsing), this file is then staged (copied) to GCS, followed by calling BQ API to load the data into a separate table.

Support for User account credentials has been dropped due to security issues arising from storing user credentials locally.

Video Tutorial

Usage

Create Google Cloud Project

- Create a Google Cloud Project and enable billing.

- Enable APIs.

- Create a Service Account to be used for running this project.

- Grant the service account permissions

- SA360 permission to retrieve webquery report

- BigQuery and Storage permission

- Set Variables

export PROJECT_ID="<google-cloud-project-id>" export GCS_BUCKET_NAME="<name-of-cloud-storage-bucket>" export BIGQUERY_DATASET_NAME="<name-of-dataset>" export SERVICE_ACCOUNT_NAME="<service-account-name>" export COMPUTE_ENGINE_INSTANCE_NAME="<compute-instance-name>"

Create Resources

-

Create Cloud Storage bucket to stage the reports.

gsutil mb -p ${PROJECT_ID} "gs://${GCS_BUCKET_NAME}"

-

Crate BigQuery dataset to store the reports

bq mk --project_id="${PROJECT_ID}" ${BIGQUERY_DATASET_NAME}

-

Create Service account

gcloud iam service-accounts create ${SERVICE_ACCOUNT_NAME} \ --description="Service account to run SA360 webquery to BigQuery"

-

Create Compute Engine instance, Set default zone and region

gcloud compute instances create ${COMPUTE_ENGINE_INSTANCE_NAME} \ --service-account="${SERVICE_ACCOUNT_NAME}@${PROJECT_ID}.iam.gserviceaccount.com" \ --scopes=https://www.googleapis.com/auth/cloud-platform,https://www.googleapis.com/auth/doubleclicksearch \ --image-project debian-cloud \ --image-family debian-10

Ensure that the user/serviceAccount has at least READ permissions for SA360 and EDITOR Permissions for BigQuery.

Compile and run

-

Create a Configuration file (csv) with specified headers. (consider

sample-config.csvas a reference) -

Compile and package source code into an executable JAR.

./gradlew clean shadowJar

-

Copy the fatJar to the Compute Engine instance.

gcloud compute scp build/libs/dswqtobq-1.1-all.jar ${COMPUTE_ENGINE_INSTANCE_NAME}:~/ gcloud compute scp <configuration-csv-file> ${COMPUTE_ENGINE_INSTANCE_NAME}:~/

-

SSH into the Compute Engine instance

gcloud compute ssh ${COMPUTE_ENGINE_INSTANCE_NAME}Install Java 11 on the VM if required:

sudo apt install -y openjdk-11-jdk -

Run the jar file

# run the JAR file by specifying the configuraiton file as first parameter java -jar dswqtobq-1.1-all.jar <location of configuration CSV file> <local-report-temp-folder>

You can schedule to run it automatically using cron, after this step.

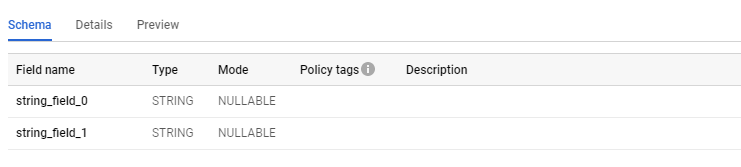

CSV File Format

The CSV configuration file must contain following headers, The order does not matter.

gcsBucketName- The GCS Bucket to be used for staging CSV file for BQ upload.projectId- GCP Project Id to use for billing as well as for BQ Table location.datasetId- BQ Dataset id/name belonging to given projectId.tableId- Prefix to be used for the BigQuery TablewebQueryUrl- SearchAds 360 WebQuery link